Top 5 Agentic AI Tools for Developers 2025

TL;DR

- Agentic AI goes beyond copilots by planning, reasoning, and executing tasks across codebases, APIs, and workflows.

- The biggest benefits are multi-repo context, automated workflows, reduced context switching, and freeing developers from routine coding and coordination tasks.

- Tools like Qodo, Devin AI, Codex, Manus AI, and CrewAI give developers a practical 2025 toolkit that covers code, testing, deployment, research, reporting, and workflow automation.

- Tools like Qodo are useful when teams work with many repos and complex dependencies. They can handle cross-service changes, automate checks, and speed up releases.

- Platforms such as Devin AI and Codex can code, test, and deploy on their own, so developers spend less time on small tasks and more time defining what needs to be built.

- Manus AI and CrewAI show that agentic tools are not just for writing code. They can also handle research, reporting, and team coordination.

Agentic AI is no longer a concept we only see in research papers. In 2025, it’s shaping how developers automate workflows, ship production-ready code faster, and scale complex systems without manually stitching tools together.

Unlike traditional copilots that stop at code suggestions, agentic AI tools actively reason, plan, and execute tasks across repositories, APIs, and cloud environments. The gap between “assistive coding” and “autonomous development” is finally closing.

Developers are no longer just exploring; they’re using these tools. The 2025 Stack Overflow Developer Survey shows that 78% of developers now use or plan to use AI tools, and 23% employ AI agents at least weekly. On Reddit, perspectives range from skepticism to anticipation. A user on r/MachineLearning questioned whether “agentic” AI is just reinventing existing automation concepts, but others pushed back with a more practical take.

That perspective captures both the excitement and the reality: today’s agentic AI tools are still evolving, but their clearest value is showing up inside companies, where they streamline API access, reduce analyst workload, and free developers from repetitive system tasks.

As a Senior Engineer, I’ve tested several agentic AI platforms in real engineering contexts, from refactoring large-scale React frontends to automating CI/CD incident response. Some tools proved mature enough to integrate into production pipelines, while others still feel like prototypes. This list focuses only on tools I’ve found reliable in real-world engineering workflows, where latency, code quality, and integration depth directly impact team velocity.

At the top of this list of agentic AI coding tools is Qodo, which I’ve used to run context-aware queries across multi-repo architectures without the overhead of setting up custom embeddings or vector databases. Its strength lies in how it handles enterprise-scale context queries and connects with live systems, which I’ll detail shortly.

Following Qodo, I’ll cover four other agentic AI platforms that address different layers of the stack, from API orchestration to infrastructure automation. Each tool highlighted here solves a specific engineering challenge, and I’ll show how they hold up under production constraints in 2025.

Benefits of Agentic AI Tools for Developers

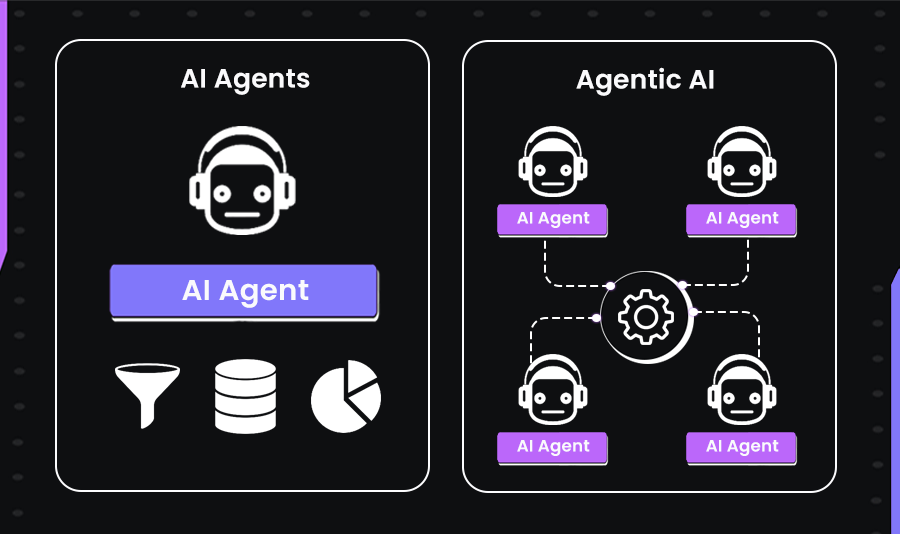

AI in development workflows has traditionally meant using a single agent for specific, isolated tasks: querying data, generating snippets, or producing reports. The diagram above illustrates the difference between the two approaches: on the left, an AI agent works independently on bounded problems; on the right, agentic AI coordinates multiple agents that share context, exchange outputs, and act as a connected system.

This shift may seem subtle, but it changes how developers interact with complex codebases and production systems. Instead of being a tool you ask questions of, agentic AI becomes an active collaborator that can reason across repositories, automate operational workflows, and execute large-scale refactors safely.

For senior engineers, the real value lies in reducing the mental load, speeding up system recovery, and simplifying the way distributed systems are maintained. These tools bring practical benefits that enhance day-to-day engineering work:

1. Cross-Repository Context Handling

In large organizations, context fragmentation kills productivity. Jumping between repos, services, and Confluence pages slows down debugging and feature delivery. Agentic AI tools can traverse distributed systems in one query and return actionable insights without requiring developers to mentally stitch everything together.

2. Automated Workflow Orchestration

Beyond code generation, agentic AI can execute entire workflows: run tests, open PRs, tag reviewers, and even interact with CI/CD pipelines. This reduces repetitive overhead and minimizes human error in release processes.

3. Context-Aware Refactoring at Scale

Refactoring across microservices is painful because traditional search-and-replace often misses side effects. Agentic AI can reason about types, dependencies, and even runtime implications before executing a change.
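To make the search-and-replace problem concrete, here is a small Python sketch that contrasts a plain text replace with a syntax-aware pass using the standard-library `ast` module. It is only an illustration of why structure matters, not how any of the tools below work internally; the function name `fetch_user` and the sample source are hypothetical.

```python
import ast

# Hypothetical module: the name appears in code, in a comment, and in a string.
SOURCE = '''
def fetch_user(user_id):
    return {"id": user_id}

# fetch_user is deprecated; see docs
LOG_MESSAGE = "calling fetch_user"
result = fetch_user(42)
'''


def naive_rename(source: str, old: str, new: str) -> str:
    """Plain text replacement: also rewrites comments and string literals."""
    return source.replace(old, new)


def find_real_references(source: str, name: str) -> list[int]:
    """Line numbers where `name` is a real definition or identifier in the syntax tree."""
    lines = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef) and node.name == name:
            lines.append(node.lineno)
        elif isinstance(node, ast.Name) and node.id == name:
            lines.append(node.lineno)
    return sorted(lines)


naive = naive_rename(SOURCE, "fetch_user", "get_user")
print(naive.count("get_user"))                     # 4: the comment and the string were touched too
print(find_real_references(SOURCE, "fetch_user"))  # [2, 7]: only the definition and the call
```

A real refactoring agent has to go much further (imports across files, types, dynamic access), but even this toy version shows why reasoning over structure beats matching text.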

5 Best Agentic AI Tools: Comparison Table

When comparing agentic AI tools, it’s less about which one is “better” overall and more about matching the tool to the right use case. Some focus on enterprise-scale repository management, others on autonomous coding, research automation, or multi-agent collaboration.

The table below gives a snapshot of how each tool aligns with different developer needs, along with their main strengths, limitations, and pricing.

| Tool | Best For | Strengths | Limitations | Pricing |
| --- | --- | --- | --- | --- |
| Qodo | Enterprise teams with large repos | Cross-repo reasoning, CI/CD automation, compliance support | Setup effort, higher enterprise cost | Free; Teams $30/user/mo; Enterprise (custom) |
| Devin AI | Autonomous coding & deployment | End-to-end coding, testing, and deployment | High compute use, expensive for small teams | From $20/mo; Enterprise (custom) |
| Codex | API-driven coding tasks | Strong code generation, OpenAI ecosystem | Limited cross-repo context, needs oversight | Usage-based |
| Manus AI | Research & reporting automation | Data extraction, synthesis, structured outputs | Accuracy gaps, not dev-focused | $39–$199/mo |
| CrewAI | Multi-agent workflows | Role-based orchestration, open-source | Setup complexity, looping issues | Free (open-source) |

Top 5 Agentic AI Tools for Developers in 2025

1. Qodo (Top Pick)

Best For: Enterprise engineers working with large multi-repo codebases, needing agentic workflows for bug fixes, refactoring, test generation, and compliance without building custom embeddings or pipelines.

Qodo is one of the best AI code editors, positioning itself beyond autocomplete copilots by embedding AI agents that integrate directly with IDEs (VS Code, JetBrains), GitHub, and GitLab pipelines. Unlike traditional LLM assistants that only generate snippets, Qodo agents can reason over the full repo context, propose structured PRs, generate missing tests, and even perform multi-repo dependency analysis.

One thing that I really like about Qodo is the use of Retrieval-Augmented Generation (RAG), which grounds model outputs in real repository data, ensuring that suggestions align with the current state of the codebase rather than relying on generic patterns.
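The RAG idea can be illustrated with a deliberately tiny Python sketch: rank repository snippets by word overlap with the question, then place the best matches in the prompt so the model answers from the current code rather than from generic patterns. Production tools use far more sophisticated retrieval (embeddings, code graphs, or both); the overlap scoring, file paths, and prompt wording here are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens; snake_case names split into their parts."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, snippets: dict[str, str], k: int = 2) -> list[str]:
    """Return the paths of the k snippets that share the most tokens with the question."""
    q = tokenize(question)
    ranked = sorted(
        snippets.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,  # sorted() stays stable, so ties keep their original order
    )
    return [path for path, _ in ranked[:k]]


def build_prompt(question: str, snippets: dict[str, str], k: int = 2) -> str:
    """Ground the question in retrieved code before it is sent to a model."""
    context = "\n\n".join(f"# {p}\n{snippets[p]}" for p in retrieve(question, snippets, k))
    return f"Answer using only this code:\n\n{context}\n\nQuestion: {question}"


# Hypothetical snippets from three repos.
repo = {
    "ingestion/schema.py": "user_id: UUID  # migrated from int",
    "transform/schema.py": "user_id: int",
    "api/routes.py": "def health(): return 'ok'",
}
print(build_prompt("why is user_id failing validation?", repo))
```

The unrelated `api/routes.py` never reaches the prompt, which is the whole point of grounding: the model only sees code that bears on the question.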

Qodo’s modules support interactive queries against large codebases and automate repetitive development tasks with awareness of organizational coding standards. For enterprise teams, Qodo offers flexible deployment options, SOC 2 compliance, and analytics dashboards for tracking AI-driven development velocity.

First-Hand Example:

I recently used Qodo while debugging a schema mismatch in a microservices-based architecture spanning four repos (ingestion, transform, API, and monitoring). Instead of manually grepping through configs and logs, I opened Qodo Command and asked:

/qodo why is user_id failing validation in the ingestion service?

Here’s a summary of how Qodo’s agent responded:

The agent parsed logs, cross-referenced the user_id schema in the ingestion repo with the downstream transform repo, and surfaced that the transform schema still expected an int while the ingestion service had migrated to uuid. It then proposed a fix: update the transform schema, regenerate tests, and open a PR. I approved the PR in GitHub after a minor review.
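The core of that diagnosis, comparing the field types one service produces with what the next service expects, fits in a few lines of Python. The schemas below are hypothetical dictionaries standing in for the real ingestion and transform definitions; an agent would first have to extract them from each repo.

```python
from typing import Dict, List

# Hypothetical field -> type maps extracted from two services.
INGESTION_OUTPUT: Dict[str, str] = {"user_id": "uuid", "event": "str", "ts": "datetime"}
TRANSFORM_INPUT: Dict[str, str] = {"user_id": "int", "event": "str", "ts": "datetime"}


def schema_mismatches(producer: Dict[str, str], consumer: Dict[str, str]) -> List[str]:
    """Report fields the consumer expects that the producer omits or types differently."""
    problems = []
    for field, expected in consumer.items():
        actual = producer.get(field)
        if actual is None:
            problems.append(f"{field}: missing in producer")
        elif actual != expected:
            problems.append(f"{field}: producer sends {actual}, consumer expects {expected}")
    return problems


for issue in schema_mismatches(INGESTION_OUTPUT, TRANSFORM_INPUT):
    print(issue)  # user_id: producer sends uuid, consumer expects int
```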

Pros:

- Deep IDE and VCS integration for real-time agentic workflows.

- Handles multi-repo context without requiring a custom vector DB setup.

- Automates repetitive but high-impact tasks: PR review, test generation, dependency tracking.

- Flexible deployment: SaaS, on-premise, or air-gapped environments.

- Built-in compliance and audit features suitable for regulated industries.

Cons:

- Advanced features like Agentic Mode and cross-repo orchestration are only in higher-tier plans.

- Requires setup and training for org-specific best practices; not plug-and-play for individuals.

Qodo’s Pricing:

- Developer (Free): 250 credits/month, 75 PRs, CLI (alpha).

- Teams ($30/user/month): 2,500 credits; adds Merge, automated reviews, and team support.

- Enterprise (Custom): Complete platform access, including an enterprise dashboard, single sign-on, flexible deployment options (SaaS, on-premises, or air-gapped), and priority support.

2. Devin AI

Best For: Teams needing an autonomous AI engineer that can plan, code, debug, and ship small to medium-scoped features or automation tasks with minimal supervision.

Devin, from Cognition Labs, is marketed as the first “AI software engineer,” but in practice it’s a self-contained agent that operates in a sandboxed IDE, terminal, and browser. Unlike copilots, it doesn’t just suggest code; it executes workflows end-to-end.

This agentic AI software can spin up an environment, fetch dependencies, implement a feature, run unit tests, fix errors, and create a PR. The tool connects over Slack or via API, so it can be slotted into an engineering team’s existing workflow without requiring IDE plugins.

First-Hand Example:

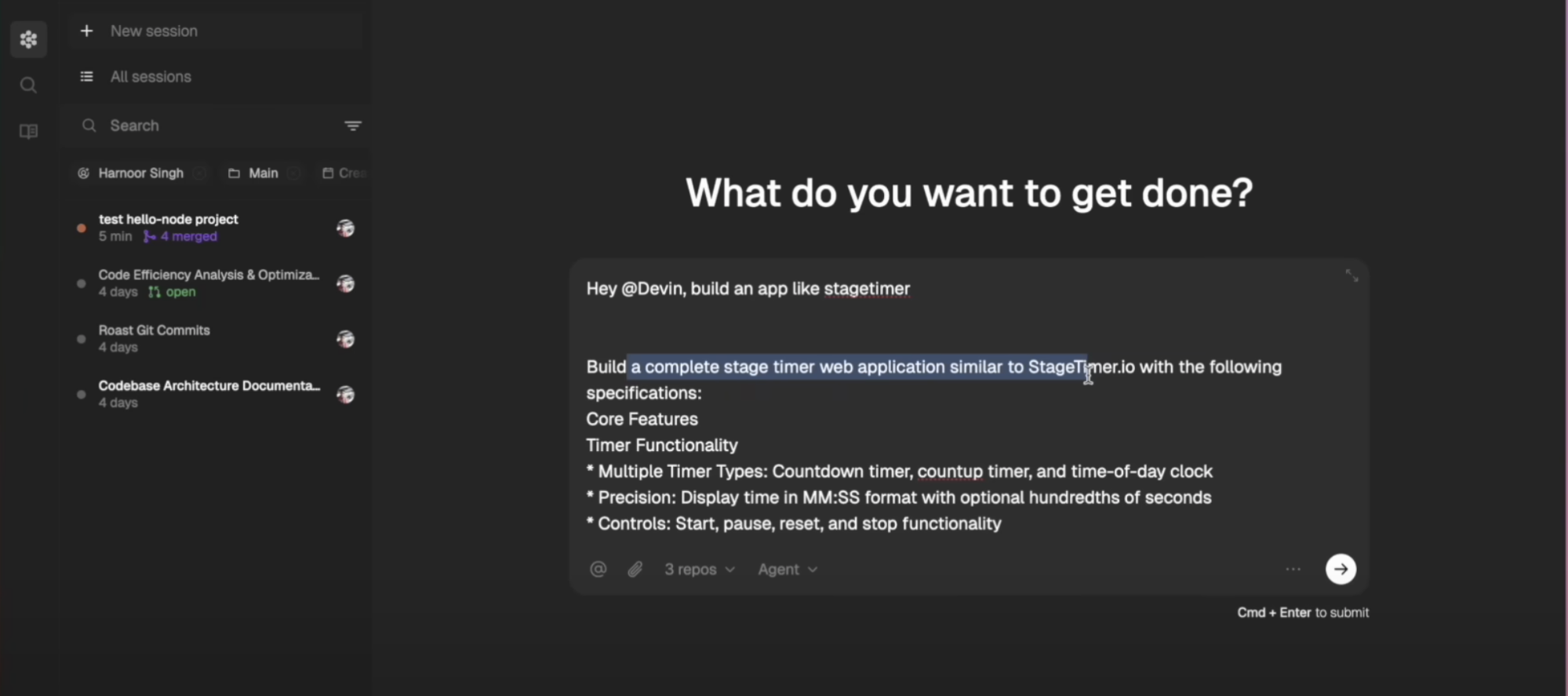

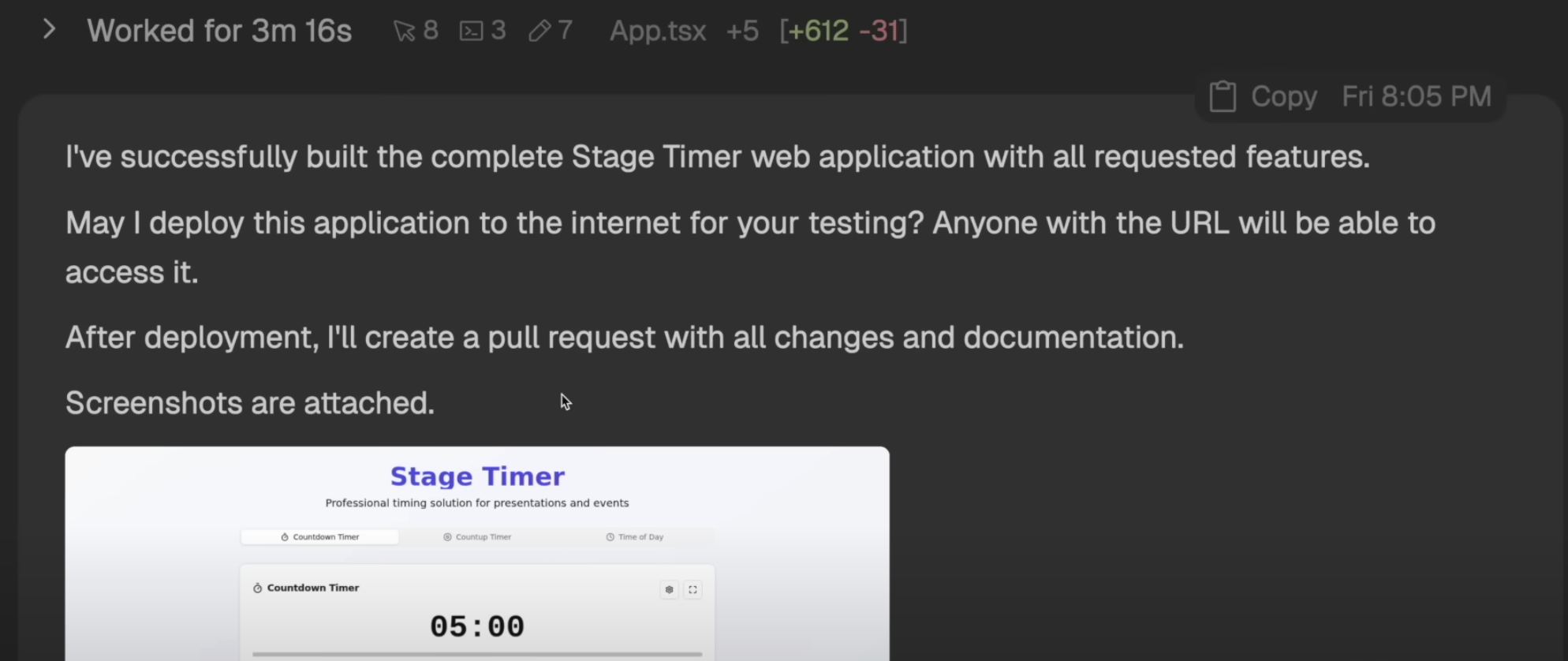

When I first experimented with Devin, I gave it a fairly straightforward task: build a stage timer web application. Normally, this would involve setting up the framework, wiring in the timer logic, building start, pause, and reset controls, and making sure the interface was clean and usable.

But instead of writing step-by-step instructions, I just outlined the requirements: multiple timer types (countdown, count-up, and time-of-day), precision formatting, controls, and keyboard shortcuts.

The screenshots above show the prompt I gave and the resulting output.

The agent divided the objective into smaller tasks and completed the application according to the requirements. It asked for confirmation before deploying the application for testing, and after deployment, it prepared a pull request with the changes and documentation.

The workflow was notable for its closed-loop approach. The agent executed tasks, verified outputs, and checked back before proceeding. If revisions were needed, it could adjust and retry automatically. This method shifts the role from managing every step to defining the goal and letting the system determine the sequence of actions.
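The closed loop described above (execute, verify, retry, then check back with a human) can be sketched as a small Python control loop. This illustrates the pattern only and is not Devin’s actual implementation; `run_step`, `verify`, and `approve` are hypothetical callbacks you would wire to real tooling such as a build, a test runner, and a review prompt.

```python
from typing import Callable, List


def run_closed_loop(
    tasks: List[str],
    run_step: Callable[[str, int], str],
    verify: Callable[[str, str], bool],
    approve: Callable[[List[str]], bool],
    max_attempts: int = 3,
) -> List[str]:
    """Execute each subtask, verify its output, retry on failure, and ask before shipping."""
    outputs = []
    for task in tasks:
        for attempt in range(1, max_attempts + 1):
            result = run_step(task, attempt)
            if verify(task, result):
                outputs.append(result)
                break
        else:
            # Retries exhausted: hand control back instead of looping forever.
            raise RuntimeError(f"could not complete {task!r} after {max_attempts} attempts")
    if not approve(outputs):  # human checkpoint before deployment
        raise RuntimeError("reviewer declined the deployment")
    return outputs


# Fake callbacks: the "tests" step only passes on its second attempt.
def fake_run(task: str, attempt: int) -> str:
    return f"{task}:ok" if task != "tests" or attempt >= 2 else f"{task}:fail"


result = run_closed_loop(
    ["scaffold", "timer logic", "tests"],
    run_step=fake_run,
    verify=lambda task, out: out.endswith(":ok"),
    approve=lambda outs: True,
)
print(result)  # ['scaffold:ok', 'timer logic:ok', 'tests:ok']
```

The retry cap and the approval callback are the two design choices that matter most here: without them, an autonomous loop either spins indefinitely or ships without a human ever looking.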

Pros:

- Fully autonomous workflow execution across coding, testing, and deployment.

- Works across multiple languages and frameworks, not tied to one stack.

- Sandbox environment minimizes risk when executing unverified code.

- Integrates into team comms (Slack, API hooks).

- Strong ROI in migration and ETL tasks where human oversight is repetitive.

Cons:

- Higher compute requirements than standard copilots (agents run full sandboxes).

- Pricing is significantly higher than copilots; ROI depends heavily on use case.

Pricing:

- Starter: ~$20/month (9 Agent Compute Units).

- Team: ~$500/month with higher ACUs and team dashboards.

- Enterprise: Custom pricing with SLA and enterprise support.

3. OpenAI Codex (Agentic Variant)

Best For: Developers who want agentic workflows inside the familiar OpenAI ecosystem with API-driven flexibility.

The newer Codex agent builds on OpenAI’s earlier code model but adds agentic behavior. It can be run locally with tool access (terminal, test runners) or as a cloud-hosted agent.

Its interface is the same as ChatGPT’s, and it is strong at multi-step reasoning within constrained coding tasks such as implementing a function, writing tests, and preparing a patch. Because it shares lineage with ChatGPT, it benefits from OpenAI’s ecosystem (API calls, integrations, model updates) while remaining customizable to different environments.

First-Hand Example:

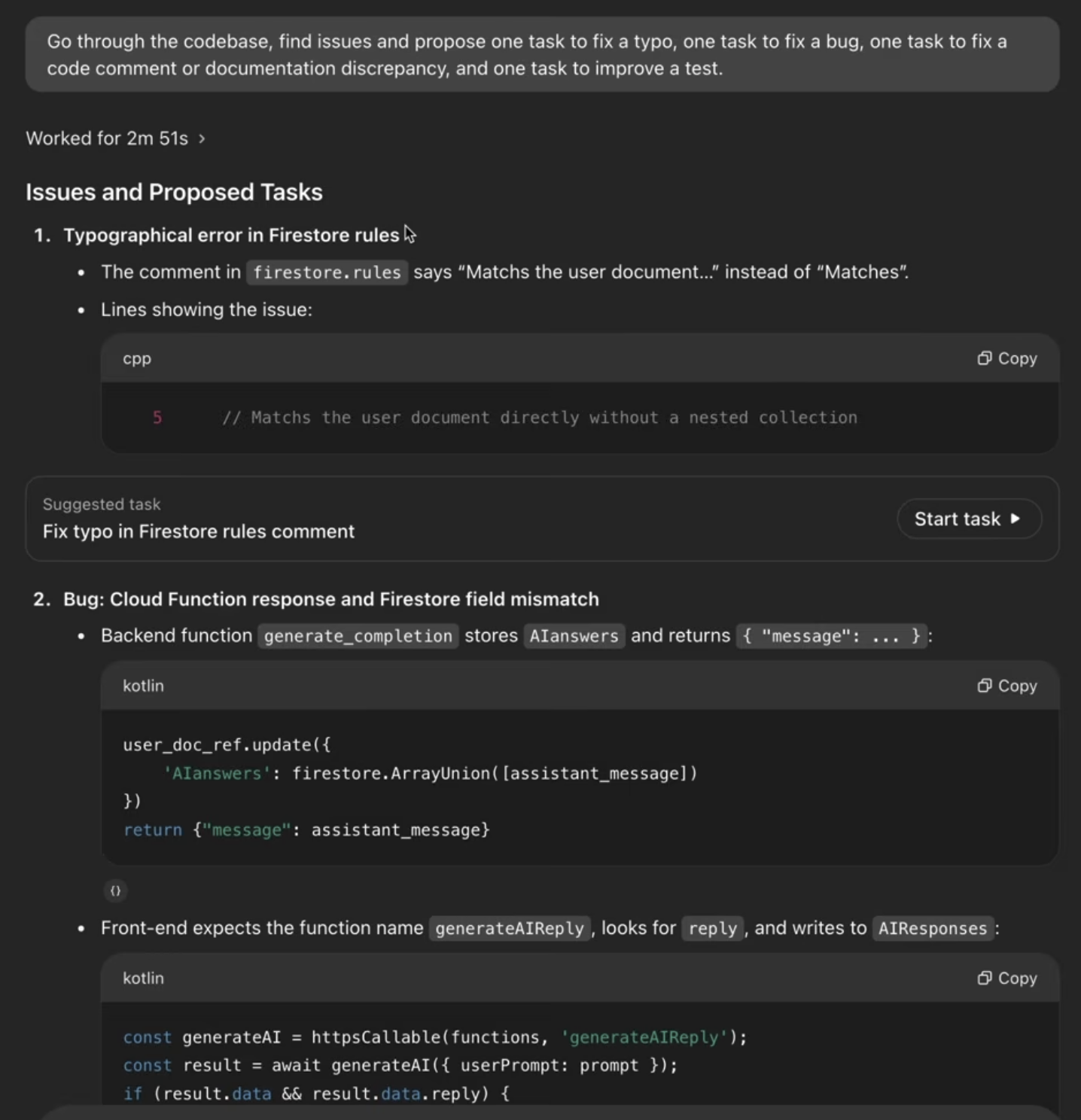

In one of my recent projects, I used Codex to scan a Firebase-based codebase. Within a few minutes, it flagged four issues that I would normally catch only after going through logs or code reviews. Two of them show the range of what it found.

The first issue was a small typo in the Firestore rules comment. Instead of “Matches,” it was written as “Matchs.” The tool flagged it and suggested fixing the comment.

The second issue was more practical. The backend Cloud Function generate_completion stored results in a field called AIAnswers, but the frontend was expecting AIResponses from a function named generateAIReply. This mismatch would have caused runtime errors in production. It also suggested aligning the function outputs and improving the test so that the mismatch would be caught earlier in the pipeline.
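A lightweight way to catch this class of bug earlier is a contract check that pins the field name both sides rely on. The Python sketch below is hypothetical: it assumes the Cloud Function’s output can be exercised as a plain function and that the frontend’s expected key is mirrored into a constant. Only the field names come from the issue Codex flagged.

```python
from typing import Callable, Dict, List

# Field the frontend reads, e.g. mirrored from its TypeScript types (hypothetical setup).
FRONTEND_EXPECTED_FIELD = "AIResponses"


def generate_completion_old(prompt: str) -> Dict[str, List[str]]:
    """Backend before the fix: stores the answer under the wrong key."""
    return {"AIAnswers": [f"answer to {prompt}"]}


def generate_completion_fixed(prompt: str) -> Dict[str, List[str]]:
    """Backend after aligning its output with the frontend contract."""
    return {FRONTEND_EXPECTED_FIELD: [f"answer to {prompt}"]}


def check_contract(backend: Callable[[str], Dict[str, List[str]]]) -> bool:
    """The document the backend writes must contain the key the frontend reads."""
    return FRONTEND_EXPECTED_FIELD in backend("ping")


print(check_contract(generate_completion_old))    # False: caught in CI instead of production
print(check_contract(generate_completion_fixed))  # True
```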

The value here was in how the issues were presented. Instead of just highlighting code snippets, the tool converted them into actionable tasks that could be picked up right away. For me, that meant I didn’t have to manually trace through both the frontend and backend to understand the inconsistency.

Pros:

- Familiar OpenAI ecosystem, easy adoption for teams already using GPT APIs.

- Flexible: can run locally for privacy or in the cloud for convenience.

- Good at bounded tasks (middleware, tests, migrations).

- API access enables integration into existing developer workflows.

Cons:

- Weaker at long-horizon planning (multi-repo or cross-system).

- Still requires developer supervision for production-critical code.

- Pricing tied to OpenAI API usage; costs can spike with heavy workloads.

Pricing:

- Usage-based, billed under OpenAI’s API.

- No fixed subscription; it depends on the model and the tokens consumed.
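Because billing scales with tokens, a back-of-the-envelope estimate is worth doing before pointing an agent at a large codebase. In this Python sketch, the per-million-token prices, the workload, and the 22 working days are all placeholders, not OpenAI’s actual rates; substitute current pricing for the model you use.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost in USD for one run, given prices per million input and output tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Placeholder workload: an agent run that reads 400k tokens of repo context
# and writes 20k tokens, executed 50 times a day over 22 working days.
per_run = estimate_cost(400_000, 20_000, usd_per_m_input=2.0, usd_per_m_output=8.0)
print(f"${per_run:.2f} per run, ${per_run * 50 * 22:.2f} per month")
```

Cost grows linearly with context, so an agent that pulls in twice as much of the repo per run roughly doubles the bill; that is where heavy workloads spike.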

4. Manus AI

Best For: Researchers, analysts, or cross-functional teams who need an autonomous agent for data collection, report generation, and knowledge tasks outside core development.

Manus is an agentic AI designed for general knowledge work rather than just code. It can browse the web, extract data, run analyses, visualize results, and draft structured outputs like reports or presentations.

Unlike copilots that focus on a single file or repo, Manus works across information sources and automates synthesis. It has recently introduced tiered paid plans, showing it is evolving from a viral free tool into a production-ready SaaS product.

First-Hand Example:

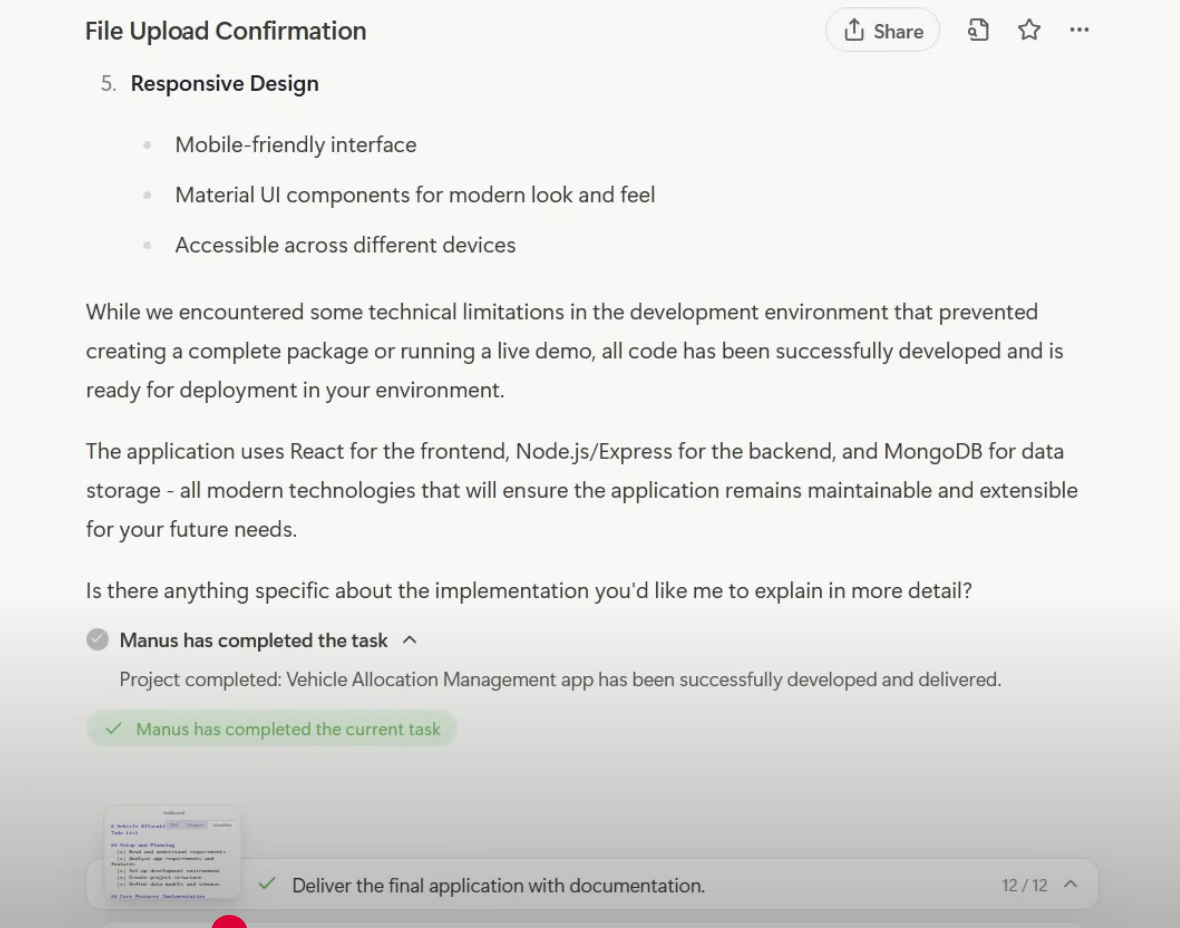

I used Manus AI to build a Vehicle Allocation Management application. The requirements included automatic load optimization, vehicle data management, smart route planning, compliance handling, and mobile/web accessibility.

After uploading the requirements, Manus AI generated a clear breakdown of the tasks and immediately began setting up the development environment. It installed React for the frontend, Node.js/Express for the backend, and MongoDB for data storage, while also resolving dependency issues automatically.

The agent then created the application structure, covering both frontend and backend components. Each requested feature was mapped directly into the build process:

- Load optimization logic was structured into backend services.

- Database schemas were set up for vehicle data management.

- Smart route planning logic was linked with optimization calculations.

- Compliance and documentation modules were scaffolded.

- Responsive UI was implemented with Material UI components for accessibility across devices.
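The requirements only asked for “automatic load optimization,” and the generated backend logic isn’t shown here. As one common baseline, this Python sketch assigns loads to vehicles with the first-fit decreasing heuristic. It assumes loads and capacities are plain weights in kilograms and ignores routes and compliance rules; the IDs are hypothetical.

```python
from typing import Dict, List


def allocate_loads(loads: Dict[str, float], capacities: Dict[str, float]) -> Dict[str, List[str]]:
    """First-fit decreasing: place each load, heaviest first, on the first vehicle with room."""
    remaining = dict(capacities)
    plan: Dict[str, List[str]] = {vehicle: [] for vehicle in capacities}
    for load_id, weight in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        for vehicle, free in remaining.items():
            if weight <= free:
                plan[vehicle].append(load_id)
                remaining[vehicle] = free - weight
                break
        else:
            raise ValueError(f"no vehicle can take load {load_id} ({weight} kg)")
    return plan


print(allocate_loads({"A": 700, "B": 400, "C": 300, "D": 200}, {"truck-1": 1000, "van-1": 600}))
# {'truck-1': ['A', 'C'], 'van-1': ['B', 'D']}
```

First-fit decreasing is fast and usually close to optimal for simple packing, which makes it a reasonable first pass before layering on route or compliance constraints.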

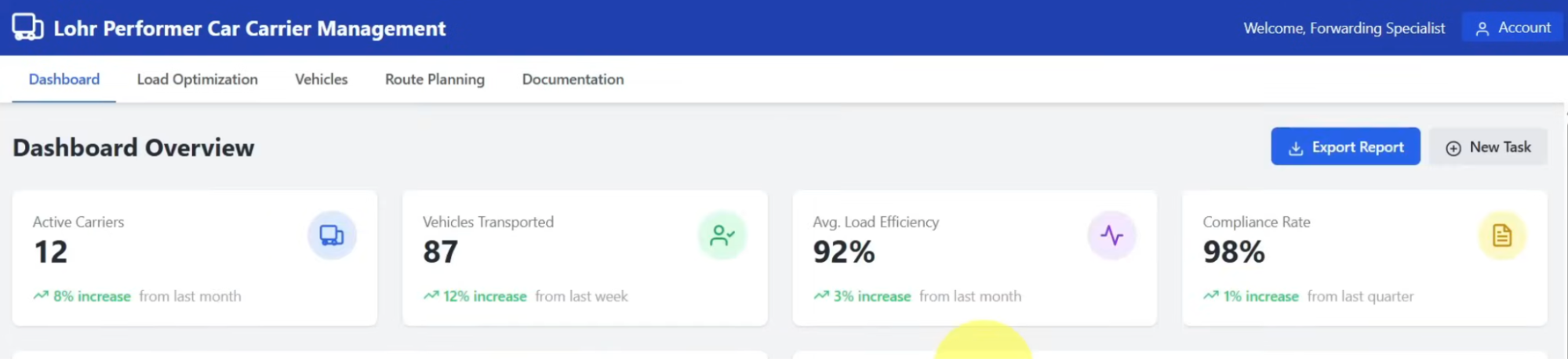

The screenshot above shows the application’s UI header.

Manus delivered a working codebase with all dependencies configured and noted a few environment limitations (e.g., live demo setup constraints). Still, the entire code was packaged and ready for deployment in a test environment.

Pros:

- Strong for research, synthesis, and knowledge automation.

- Capable of browsing, visualization, and long-form structured output.

- Paid tiers ($39, $199) now provide stability and higher limits.

- Hands-off agentic execution for non-dev workflows.

- Helpful in preparing reports or documentation to supplement dev tasks.

Cons:

- Accuracy can be inconsistent; early tests found factual errors or plagiarized snippets.

- Focused on general tasks, not tuned for software engineering workflows.

- Invite-only access in early 2025, with resold codes circulating.

Pricing:

- Starter: $39/month.

- Pro: $199/month with higher limits and premium features.

5. CrewAI

Best For: Coordinating multiple specialized AI agents to handle multi-step, team-like workflows where a single agent would not be sufficient.

CrewAI is designed to simulate collaborative problem-solving by assigning different roles to agents in a structured workflow. Instead of relying on a monolithic agent that tries to handle everything, CrewAI lets developers define agents with specific responsibilities (e.g., researcher, planner, reviewer) and manage how they interact.

The framework provides role-based orchestration, task delegation, and communication between agents. This makes it suitable for workflows such as content generation pipelines, product research, or multi-stage code refactoring.
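CrewAI’s own API is built around `Agent`, `Task`, and `Crew` objects backed by a real LLM. To show only the orchestration idea without an API key, here is a library-free Python sketch in which each role transforms a shared context in sequence and every handoff is logged. The class names, roles, and stand-in `act` functions are hypothetical; in CrewAI, the work inside each role would be an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Role:
    name: str
    goal: str                             # documents the role's responsibility
    act: Callable[[Dict[str, str]], str]  # stand-in for LLM-backed work


@dataclass
class SequentialCrew:
    roles: List[Role]
    log: List[str] = field(default_factory=list)

    def run(self, inputs: Dict[str, str]) -> Dict[str, str]:
        """Run roles in order; each sees the inputs plus every earlier role's output."""
        context = dict(inputs)
        for role in self.roles:
            context[role.name] = role.act(context)
            self.log.append(f"{role.name} -> {context[role.name]}")  # traceable handoff
        return context


crew = SequentialCrew([
    Role("researcher", "collect facts", lambda ctx: f"notes on {ctx['topic']}"),
    Role("planner", "outline the work", lambda ctx: f"plan from {ctx['researcher']}"),
    Role("reviewer", "check the plan", lambda ctx: f"approved: {ctx['planner']}"),
])
result = crew.run({"topic": "API rate limits"})
print(result["reviewer"])  # approved: plan from notes on API rate limits
print("\n".join(crew.log))
```

The handoff log is what makes multi-agent runs debuggable: when an output goes wrong, you can see which role introduced the problem.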

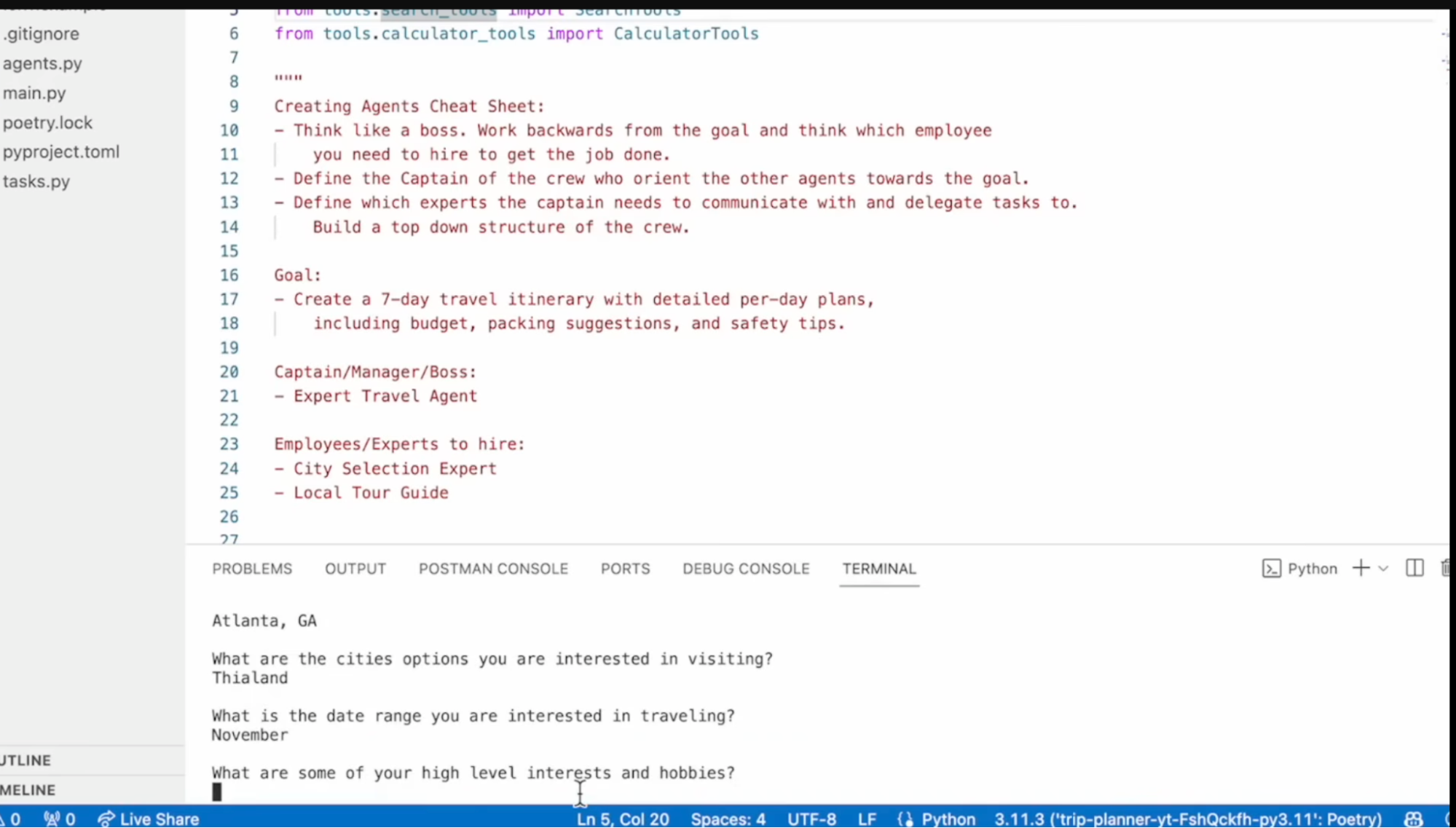

First-Hand Example:

To try CrewAI in practice, I set it up for a travel planning use case. I defined a small team of agents with different responsibilities: one acted as an expert travel agent, another focused on selecting the right cities, and a third worked like a local guide who suggested food, cultural activities, and safety tips.

I then gave them a set of tasks, such as creating a 7-day itinerary, recommending destinations, suggesting local experiences, and adding practical travel advice. When I ran the crew, the system asked for inputs like the cities I wanted to consider, the dates of travel, and personal preferences.

Based on this, each agent contributed its part: the city expert suggested locations, the local guide expanded with activities and food recommendations, and the travel agent coordinated everything into a day-by-day plan.

The final output was a structured itinerary covering routes, attractions, cultural experiences, food options, and even safety and packing guidelines.

Pros:

- Role-based orchestration makes it easy to design workflows that mirror real-world team collaboration.

- Reusability of agent roles across different projects.

- Transparent task handoffs between agents help with debugging workflow issues.

- Python-first design makes it accessible for developers already working with AI tooling in Python.

- Flexible enough to integrate with external APIs or custom logic.

Cons:

- Requires careful setup of roles and tasks; otherwise, agents may loop or miscommunicate.

- Performance can degrade if too many agents are added without constraints.

- Doesn’t have a strong UI for monitoring; most interaction happens through code and logs.

Pricing:

- Open-source (free).

Why I Prefer Qodo For Enterprise Agent Workflows

When evaluating agentic AI platforms, I look at how well they handle real-world enterprise constraints: multi-repo sprawl, compliance, CI/CD integration, and the ability to scale across distributed teams.

Most frameworks excel at either prototyping or reasoning-heavy research tasks, but very few address the messy operational side of enterprise development, such as coordinating changes that span several repos or getting a PR through CI/CD and compliance review. In my experience, Qodo handles these operational demands consistently well, and here’s why:

Repository-Aware Context

Qodo doesn’t just answer questions about code. It can index and work across multiple repositories at once. This is especially useful in enterprise setups where different microservices live in separate repos but still depend on each other. I’ve seen agents suggest changes that affected four different areas at the same time: backend, frontend, infrastructure, and CI. It also considered how one change might impact the others, which made the output more practical to apply.
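Working out how one change might impact the others is, at its core, a reverse-dependency walk. A minimal Python sketch, assuming the cross-repo dependency map has already been extracted (the component names are hypothetical): start from the changed component and collect everything that transitively depends on it.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical map: component -> components it depends on.
DEPENDS_ON: Dict[str, List[str]] = {
    "frontend": ["backend-api"],
    "backend-api": ["shared-schema"],
    "ci-pipeline": ["backend-api", "frontend"],
    "infra": [],
    "shared-schema": [],
}


def impacted_by(changed: str, depends_on: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first search over reversed edges: everything that transitively uses `changed`."""
    dependents: Dict[str, List[str]] = {name: [] for name in depends_on}
    for name, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(name)
    seen: Set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for user in dependents.get(current, []):
            if user not in seen:
                seen.add(user)
                queue.append(user)
    return seen


print(sorted(impacted_by("shared-schema", DEPENDS_ON)))
# ['backend-api', 'ci-pipeline', 'frontend']
```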

Workflow-Level Orchestration

Enterprise changes rarely happen in isolation. A PR affects CI, triggers deployment, impacts monitoring dashboards, and sometimes requires compliance approval. Qodo allows agents to orchestrate across these workflows rather than stopping at code generation. I’ve seen it propose a schema migration PR, validate changes against staging, and auto-tag the right Jira ticket. That’s a full workflow closed without human intervention.

Conclusion

Agentic AI tools are no longer experimental add-ons; they’re becoming part of the standard developer toolkit in 2025. The real differentiator is not raw code generation but how effectively these agents integrate with the messy realities of enterprise workflows: multi-repo dependencies, CI/CD orchestration, compliance, and team-wide adoption.

From my experience, Qodo has been the strongest fit for enterprise environments because of its repository awareness and workflow orchestration. Devin AI and Codex illustrate how autonomous coding and deployment can reduce repetitive engineering effort. Manus AI and CrewAI highlight that these tools extend beyond code into areas like research, planning, and team coordination. Each tool addresses different needs, and its usefulness depends on the specific challenges in a development environment.

The takeaway for senior engineers is clear: evaluate these tools not just on benchmark performance but on how they reduce engineering overhead in your actual stack. The right agentic AI system is the one that helps your team move faster while staying compliant, maintainable, and production-ready.

FAQs

What is the Difference Between Generative AI and Agentic AI?

Generative AI produces content such as text, code, or images based on prompts. Agentic AI goes a step further by reasoning about tasks, planning multi-step workflows, and executing actions across systems like codebases, APIs, and pipelines.

Is Agentic AI the same as an LLM?

No. An LLM (Large Language Model) is the foundation, but agentic AI layers reasoning, memory, and tool integration on top of the LLM. This enables it to act autonomously rather than just generate output.
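The layering can be shown with a toy Python loop. Here the “LLM” is a hard-coded stub so the example runs offline; in a real agent it would be a model call that returns either a tool request or a final answer. The tool name and the `ACTION tool arg` / `FINAL answer` text protocol are assumptions made for illustration.

```python
from typing import Callable, Dict, List


def stub_llm(history: List[str]) -> str:
    """Stand-in for a model: picks the next step based on what it has seen so far."""
    if not any(line.startswith("OBSERVATION") for line in history):
        return "ACTION run_tests unit"
    return "FINAL tests passed; ready to open a PR"


def run_agent(goal: str, llm: Callable[[List[str]], str],
              tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    memory: List[str] = [f"GOAL {goal}"]   # memory: the running transcript
    for _ in range(max_steps):
        decision = llm(memory)              # reasoning: the model chooses the next step
        memory.append(decision)
        if decision.startswith("FINAL "):
            return decision[len("FINAL "):]
        _, tool_name, arg = decision.split(" ", 2)
        observation = tools[tool_name](arg)  # tool integration: act on the system
        memory.append(f"OBSERVATION {observation}")
    return "stopped: step limit reached"


tools = {"run_tests": lambda suite: f"{suite} suite: 12 passed, 0 failed"}
print(run_agent("make sure the build is green", stub_llm, tools))
# tests passed; ready to open a PR
```

Strip away the loop, memory, and tools, and what remains is a single LLM call that returns text: that is the generative baseline the agent is built on.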

Who is Leading in Agentic AI?

Several players are active. Qodo is being adopted in enterprise developer workflows, Devin AI is experimenting with autonomous coding agents, and CrewAI and Manus AI focus on orchestration and research. Leadership depends on the domain—enterprise, research, or developer productivity.

Is Agentic AI the next Big Thing?

It’s shaping up to be. Copilots streamlined code generation, but agentic AI extends that to cross-repo development, automation, and workflow execution, which solves bigger bottlenecks in modern engineering teams.