The Rise of Agentic Workflows in Enterprise AI Development

TL;DR

- Agentic AI workflows move beyond static automation by enabling autonomous, goal-driven AI agents to plan, execute, and self-correct tasks across enterprise systems.

- Traditional automation and prompt-based AI break under complexity; agentic workflows overcome this by dynamically adapting to schema changes, edge cases, and evolving requirements.

- Enterprise-scale productivity gains come from reduced review backlogs, faster test generation, earlier regression detection, and freeing developers for architectural decision-making.

- The architecture of agentic workflows combines orchestrators, specialized agents, shared memory, compliance guardrails, and observability layers, making them resilient and enterprise-ready.

- Platforms like Qodo strengthen this shift with contextual RAG pipelines, adaptive planning, extensible tool integrations, and flexible deployment, solving real developer pain points around trust, context fragmentation, and governance.

What if the repetitive processes that slow your team down could run on autopilot? In most enterprise engineering environments, the tasks that consume the most hours rarely require architectural judgment. Reviewing boilerplate code, updating outdated dependencies, writing unit tests for simple functions, or aligning API signatures across microservices are repetitive but unavoidable. These are also areas where traditional automation and prompt-based AI tools have shown their limits.

As a Senior Engineer, I’ve seen this firsthand while working with large Python and TypeScript codebases. Even with strong CI/CD pipelines, many tasks still require manual intervention because scripted automation breaks down whenever edge cases appear. A minor schema change can invalidate an entire sequence of Jenkins jobs.

Prompt-based AI assistants can handle small tasks but break down when asked to manage longer workflows such as cross-service API updates or refactoring a legacy module, making them too unreliable for production teams.

Building truly agentic workflows goes beyond patching these gaps with scripts or chaining a few prompts together.

The key shift is coordination: agentic systems don’t just execute commands, they manage workflows end-to-end.

Instead of issuing step-by-step instructions, you assign the objective to an autonomous system. The agent breaks the objective into smaller actions, chooses the right tools for each stage, and verifies its progress. If something fails, it loops back, adjusts, and retries until the goal is met. The entire process runs as a closed loop, with reasoning, execution, and validation happening internally.

For enterprise teams, the rise of agentic AI workflows is less about novelty and more about scalability. It means reducing review backlogs, catching regressions earlier, and allocating senior engineering time to architectural design instead of repetitive tasks.

The key is adopting these workflows in a structured way that preserves context, enforces standards, and integrates with existing pipelines. That is where platforms like Qodo are starting to play an important role.

What Are Agentic Workflows

Agentic workflows are structured processes where autonomous AI agents collaborate to achieve a defined goal, often with minimal human intervention. Instead of issuing step-by-step instructions, we set an objective and let the system figure out the path.

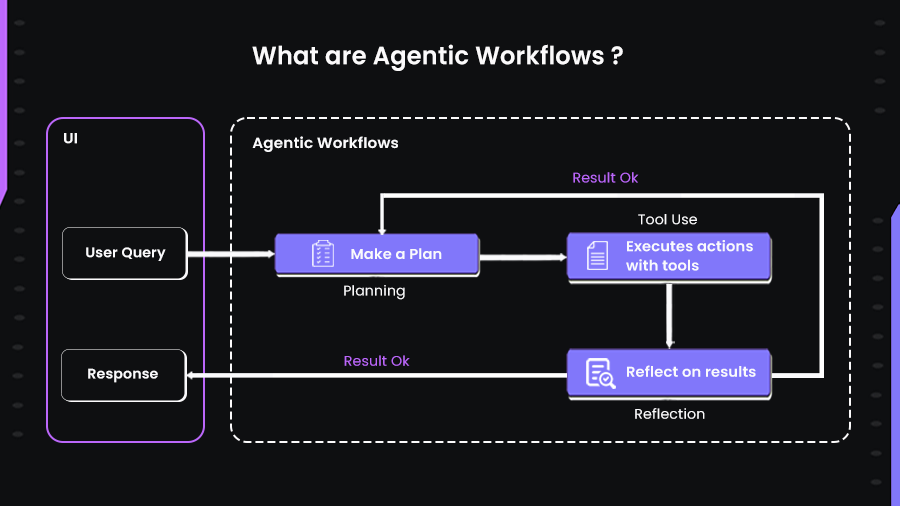

The diagram above shows how agentic workflows operate. The agent breaks the goal into smaller actions (that is, it makes a plan), chooses the tools it needs, and verifies progress. If something fails, it loops back, adjusts, and retries until it gets closer to the intended result.

Next, the system moves into execution, where the agent applies the right tools, APIs, or models to perform the required tasks. Unlike static automation, execution here is flexible and tool-agnostic, allowing the agent to select different approaches depending on context.

After execution, the agent enters a reflection phase, evaluating whether the results align with the intended goal. If the results are unsatisfactory, the system loops back, revises the plan, and retries the process.

This iterative cycle of planning, action, and reflection continues until the agent judges the results acceptable. At that point, the response is delivered back to the user. The loop lets agents handle uncertainty, recover from errors, and dynamically refine their approach, making them far more resilient than traditional deterministic workflows.
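The plan, execute, and reflect cycle described above can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not how any particular platform implements it: `AgentLoop`, the three callables, and the toy functions are hypothetical names, and a real agent would back each callable with a model or tool call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AgentLoop:
    """Plan -> execute -> reflect, repeated until the result is accepted."""

    plan: Callable[[str, list[str]], list[str]]         # (goal, feedback) -> steps
    execute: Callable[[str], str]                       # step -> result
    reflect: Callable[[str, list[str]], Optional[str]]  # None means "good enough"
    max_iterations: int = 3

    def run(self, goal: str) -> list[str]:
        feedback: list[str] = []
        for _ in range(self.max_iterations):
            steps = self.plan(goal, feedback)
            results = [self.execute(step) for step in steps]
            problem = self.reflect(goal, results)
            if problem is None:
                return results
            # Carry the critique into the next planning round.
            feedback.append(problem)
        raise RuntimeError(f"gave up on {goal!r} after {self.max_iterations} attempts")


# Toy stand-ins (hypothetical) so the loop can be run end to end.
def toy_plan(goal: str, feedback: list[str]) -> list[str]:
    steps = [f"draft: {goal}"]
    if feedback:  # the previous attempt was rejected, so add the missing step
        steps.append("run tests")
    return steps


def toy_execute(step: str) -> str:
    return f"done({step})"


def toy_reflect(goal: str, results: list[str]) -> Optional[str]:
    ran_tests = any("run tests" in result for result in results)
    return None if ran_tests else "tests were not run"


loop = AgentLoop(plan=toy_plan, execute=toy_execute, reflect=toy_reflect)
print(loop.run("update API"))
```

The first pass is rejected by the reflection step, the feedback changes the plan, and the second pass is accepted. The iteration cap is a deliberate choice: without it, a goal the agent cannot satisfy would loop forever.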

Agentic Workflows vs Non-Agentic Workflows

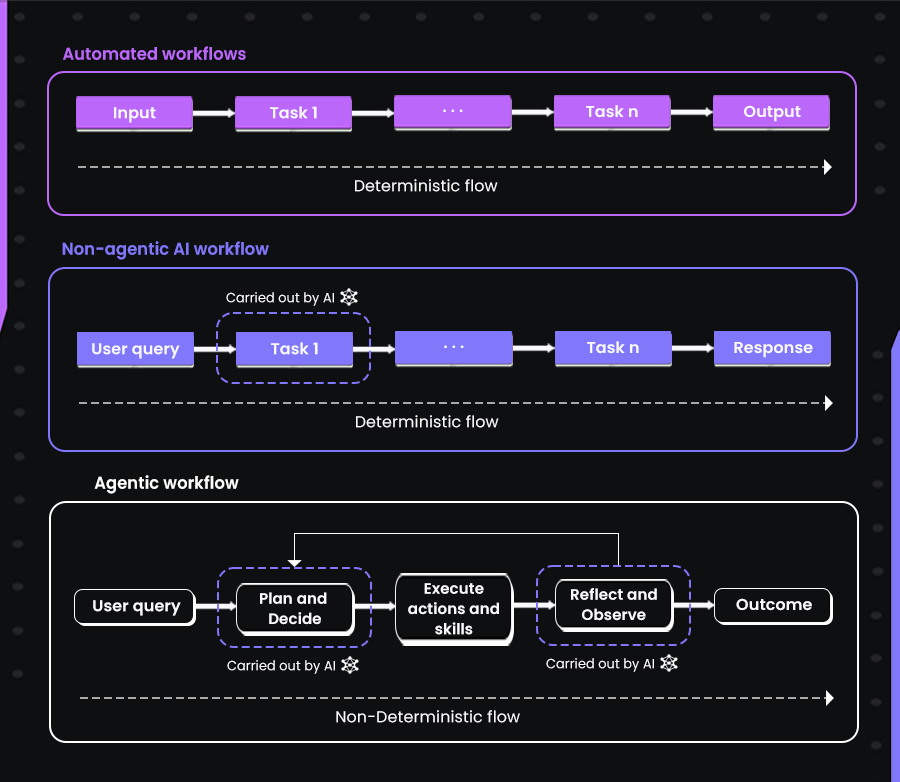

Engineering teams have long relied on automation to streamline repetitive tasks, but the underlying workflows have remained deterministic. In a traditional automated workflow, inputs pass through a fixed set of steps, with each task executed in a rigid sequence until an output is produced. This works well for predictable processes but struggles whenever unexpected variations or edge cases appear.

Non-agentic AI workflows improved on this by inserting AI into specific steps of the deterministic chain. For example, an AI model might generate code for one task, but the flow remains fixed. The AI contributes intelligence to individual steps, yet the system as a whole still lacks adaptability.

When I needed to update API endpoints across three microservices, a non-agentic AI could generate the updated function signatures for each service. However, I still had to manually identify all downstream dependencies, update tests, and orchestrate deployments.

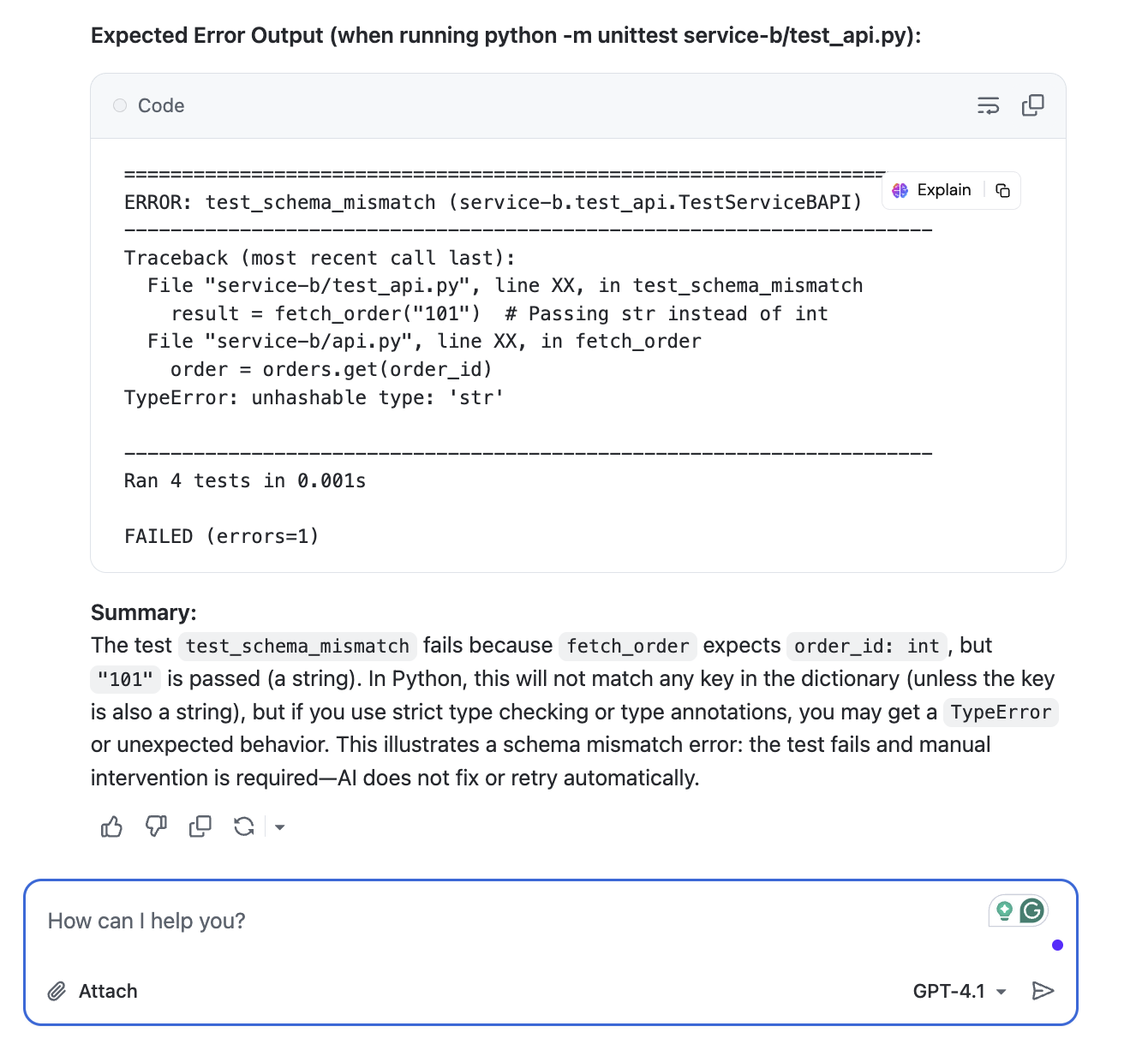

In the image above, one service failed its tests because of a schema mismatch: the expected type of order_id is str, but the non-agentic AI generated it as an integer. The AI couldn’t adapt, so I had to intervene to fix the issue and restart the workflow.
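To make the order_id mismatch concrete, here is a small sketch of the kind of contract check that surfaces it. The schema, the `amount` field, and the function name are assumptions for illustration; only the `order_id` field and its expected `str` type come from my example.

```python
# Hypothetical contract for an order payload; only order_id: str is from the example.
EXPECTED_ORDER_SCHEMA: dict[str, type] = {"order_id": str, "amount": float}


def find_schema_mismatches(payload: dict, schema: dict[str, type]) -> list[str]:
    """Return one human-readable problem per missing or wrongly typed field."""
    problems = []
    for field_name, expected_type in schema.items():
        if field_name not in payload:
            problems.append(f"{field_name}: missing")
        elif not isinstance(payload[field_name], expected_type):
            actual = type(payload[field_name]).__name__
            problems.append(
                f"{field_name}: expected {expected_type.__name__}, got {actual}"
            )
    return problems


# The generated code sent order_id as an integer instead of a string.
print(find_schema_mismatches({"order_id": 1042, "amount": 19.99}, EXPECTED_ORDER_SCHEMA))
```

In a non-agentic flow, a failure like this stops the chain and waits for a person. In an agentic flow, the same list of problems can be fed back to the agent as input for the next attempt.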

Agentic workflows in AI introduce a new layer of autonomy. Instead of simply following a predefined chain, agents plan, decide, act, and reflect in a loop.

I gave the same task (updates in API endpoints across three microservices) to Qodo’s agent. It first analyzed all three services, identified which endpoints and data structures were impacted, updated the code, regenerated tests, and opened pull requests for review.

In this setup, the agent broke down a user query, chose the right tools, executed actions, and evaluated results before moving forward. This non-deterministic flow means the system can adapt in real time, retrying or adjusting its approach until it reaches a satisfactory outcome.

This shift moves AI from being a step in the pipeline to being the orchestrator of the pipeline itself. Rather than automating single tasks, agentic workflows enable end-to-end problem solving where reasoning, execution, and validation are built into the loop.

Enterprise Drivers for Agentic Workflows

Enterprises are moving toward agentic workflows because traditional automation systems are no longer sufficient in dynamic and knowledge-heavy environments. Unlike fixed pipelines, agentic workflows provide the adaptability of AI agents while still operating within enterprise-grade constraints. Several factors are driving this shift:

Governed autonomy, not blind automation

Agentic workflows allow agents to act independently while staying within defined policies. Instead of applying checks only at the end, policy-as-code runs at every step of the process. Features like verifier-executor loops, approval gates, and scoped permissions help prevent unsafe actions while maintaining speed.
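A per-step policy check with scoped permissions and an approval gate might look like the sketch below. Everything in it is an assumption for illustration: the agent names, operations, protected paths, and the `check_policy` function are hypothetical and not taken from any specific product.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


@dataclass(frozen=True)
class Action:
    agent: str      # which agent wants to act
    operation: str  # e.g. "write_file", "open_pr", "deploy"
    target: str     # file path or environment name


# Hypothetical policy data: what each agent may do, and what needs a human.
AGENT_SCOPES = {
    "test-writer": {"write_file", "open_pr"},
    "release-bot": {"open_pr", "deploy"},
}
APPROVAL_REQUIRED = {"deploy"}
PROTECTED_PREFIXES = ("infra/", "secrets/")


def check_policy(action: Action) -> Decision:
    """Evaluate one proposed action before it runs, not after the fact."""
    if action.operation not in AGENT_SCOPES.get(action.agent, set()):
        return Decision.DENY  # outside the agent's scoped permissions
    if action.target.startswith(PROTECTED_PREFIXES):
        return Decision.DENY  # protected paths are off limits to every agent
    if action.operation in APPROVAL_REQUIRED:
        return Decision.NEEDS_APPROVAL  # approval gate: pause for a human
    return Decision.ALLOW


print(check_policy(Action("release-bot", "deploy", "staging")))
```

Because the check runs on every proposed action, an unsafe step is stopped before it executes rather than discovered in a final review.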

To measure effectiveness, teams can track metrics such as precision and recall of policy checks, the number of policy violations per thousand changes, and the ratio of auto-approved actions versus those requiring review. These measures show the controls’ reliability and the balance between safety and throughput.
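As a rough sketch, these metrics can be computed from a handful of counts. The function name, the parameter names, and the sample numbers are made up for illustration, and I am assuming that "violations" means all real violations (caught plus missed) and that the auto-approval figure is reported as a share of all decided actions.

```python
def policy_check_metrics(
    true_pos: int,        # real violations the checks flagged
    false_pos: int,       # clean changes flagged by mistake
    false_neg: int,       # real violations the checks missed
    total_changes: int,
    auto_approved: int,
    sent_to_review: int,
) -> dict[str, float]:
    flagged = true_pos + false_pos
    actual_violations = true_pos + false_neg
    decided = auto_approved + sent_to_review
    return {
        "precision": true_pos / flagged if flagged else 0.0,
        "recall": true_pos / actual_violations if actual_violations else 0.0,
        "violations_per_1k_changes": (
            1000 * actual_violations / total_changes if total_changes else 0.0
        ),
        "auto_approved_share": auto_approved / decided if decided else 0.0,
    }


# Illustrative numbers only.
print(policy_check_metrics(45, 5, 15, total_changes=4000,
                           auto_approved=3600, sent_to_review=400))
```

Low precision means reviewers waste time on false alarms, while low recall means unsafe changes slip through, so the two are worth tracking together.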

Reliability from self-checking loops

As explained earlier, agents plan, act, check, and revise instead of making a single pass. Verifiers test outputs, compare them against specs, and trigger retries with new constraints when needed. This reduces inconsistent and noisy results and lowers the chance of silent failures. Teams can measure reliability through defect escape rate, rollback frequency, and the rate of successful first-pass completions after verifier feedback.

Shorter lead time and higher throughput

Workflows such as PR review, test generation, dependency updates, and changelog preparation can run in parallel across specialized agents. An orchestrator coordinates these steps as a DAG, masking tool latency, and keeping execution queues warm. The impact appears in DORA metrics (key software delivery performance indicators defined by the DevOps Research and Assessment (DORA) group) like lead time for changes, deployment frequency, and change failure rate. You can also track reviewer throughput, such as PRs handled per day, along with average time-to-merge.
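Here is a minimal sketch of DAG-style orchestration using only the Python standard library (`graphlib.TopologicalSorter`, available from Python 3.9, plus a thread pool). The task names and the dependency graph are hypothetical, and the lambdas stand in for real agent calls.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable


def run_dag(
    dependencies: dict[str, set[str]],   # task -> tasks it must wait for
    tasks: dict[str, Callable[[], str]],
    max_workers: int = 4,
) -> list[str]:
    """Run tasks in dependency order, in parallel where the graph allows it."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    finished: list[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}
        while sorter.is_active():
            # Submit everything whose dependencies are already satisfied.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                name = running.pop(future)
                future.result()    # re-raise any exception from the task
                finished.append(name)
                sorter.done(name)  # unblocks tasks that depend on this one
    return finished


# Hypothetical workflow: tests and changelog both wait for the dependency update.
dependencies = {
    "dep_update": set(),
    "tests": {"dep_update"},
    "changelog": {"dep_update"},
    "pr_review": {"tests"},
}
tasks = {name: (lambda n=name: f"{n} finished") for name in dependencies}
print(run_dag(dependencies, tasks))
```

Independent branches such as `tests` and `changelog` run concurrently, which is where the lead-time gain comes from. Their relative completion order is not deterministic.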

Context that compounds over time

Shared memory makes agents context-aware. A retrieval layer merges short-term task state with long-term knowledge from code, tickets, and runbooks. This cuts time spent rediscovering the same facts over and over again. Track retrieval hit rate, median time-to-context for a task, and the fraction of answers backed by citations that link to internal sources.
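The idea of merging short-term task state with long-term knowledge, while tracking hit rate and citations, can be sketched as follows. This is a toy under stated assumptions: `SharedMemory` is a hypothetical class, and the exact-key lookup is a stand-in for real retrieval such as embedding search.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SharedMemory:
    long_term: dict[str, str]  # e.g. facts indexed from code, tickets, runbooks
    short_term: dict[str, str] = field(default_factory=dict)  # current task state
    lookups: int = 0
    hits: int = 0

    def note(self, key: str, value: str) -> None:
        """Record something learned during the current task."""
        self.short_term[key] = value

    def retrieve(self, key: str) -> Optional[tuple[str, str]]:
        """Return (value, source) so every answer carries a citation."""
        self.lookups += 1
        # Task state wins over long-term knowledge when both have the key.
        for source, store in (("task", self.short_term),
                              ("knowledge-base", self.long_term)):
            if key in store:
                self.hits += 1
                return store[key], source
        return None

    @property
    def hit_rate(self) -> float:
        return self.hits / self.lookups if self.lookups else 0.0


memory = SharedMemory(long_term={"orders.owner": "payments team"})
memory.note("current_branch", "fix/order-id-type")
print(memory.retrieve("orders.owner"), memory.retrieve("unknown"), memory.hit_rate)
```

Returning the source alongside the value is what makes the "answers backed by citations" metric measurable in the first place.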

Challenges with Agentic Workflows

From my experience with agentic workflows in enterprise settings, the technology is powerful but not without challenges. Shifting from single-step automation to multi-agent systems brings new layers of complexity. These workflows offer adaptability and intelligence but raise issues around integration, reliability, and developer control. Here are some of the key challenges I’ve observed in real-world agentic workflows:

Context Fragmentation Across Repositories

One of the biggest hurdles is providing agents with the right context across large, distributed codebases. For example, if a developer is refactoring an authentication flow that touches multiple microservices, the agent often lacks awareness of how changes in one repo impact others. This leads to incomplete or even harmful recommendations. Developers spend hours manually curating context, which breaks the promise of autonomy.

Trust in Agent Suggestions

Even when agents generate code or review pull requests, developers hesitate to accept changes unquestioningly. Many tools offer generic suggestions that do not align with enterprise-specific best practices. For example, many agents suggest retry logic without following the team’s circuit breaker policy, which could cause issues in production. This gap in trust often slows down the use of agent-driven workflows.
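To show why generic retry logic can conflict with a circuit breaker policy, here is a minimal circuit breaker sketch. The class, the thresholds, and the helper names are my own illustrative assumptions, not a specific team's policy or a library API.

```python
import time
from typing import Callable


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock              # injectable so tests need no sleeping
        self.failures = 0
        self.opened_at = None

    def call(self, fn: Callable, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # stop calling the dependency
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


def always_down():
    raise ConnectionError("service unavailable")


def attempt(breaker: CircuitBreaker, fn: Callable) -> str:
    """Helper for the demo: summarize what happened to one call."""
    try:
        breaker.call(fn)
        return "ok"
    except CircuitOpenError:
        return "skipped"
    except Exception:
        return "failed"


breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0)
print([attempt(breaker, always_down) for _ in range(3)])
```

A plain retry loop would keep hitting the failing service on every attempt. The breaker stops after the threshold and skips further calls until the cool-down passes, which is the behavior an agent's suggestion would need to respect.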

Limited Ability to Handle Enterprise-Specific Best Practices

Most AI systems are trained on generic coding patterns. In enterprises, however, teams enforce layered best practices around performance, error handling, observability, and compliance. Current agentic tools often ignore these.

Blind Spots in Code Review

AI agents can accelerate code reviews but still miss higher-order issues like architectural alignment or cross-service dependency checks. For example, I’ve seen agents approve PRs that met syntax and linting rules but overlooked that the changes violated data flow policies between services. This gap creates overhead for senior reviewers who still need to step in.

Why I Prefer Qodo’s Approach to Agentic Workflows

When exploring agentic workflows across platforms, I consistently return to Qodo because its architecture is thoughtfully aligned with real developer needs, combining autonomy with governance, depth with usability, and flexibility with integration. Some of its most notable features are:

Dedicated RAG Engine

Agentic workflows falter without accurate context. Qodo addresses this with a secure Retrieval-Augmented Generation (RAG) pipeline that surfaces only relevant code and docs, which reduces hallucinations and maintains precision. This approach lets agents produce reliable, context-aware suggestions without exposing sensitive or irrelevant information.

To make this concrete, let’s use my own codebase as an example. I have a production-ready, modular agentic RAG (Retrieval-Augmented Generation) platform for enterprise knowledge management. It orchestrates multiple specialized agents over a common retrieval layer, exposes a FastAPI gateway, and includes full observability and deployment tooling.

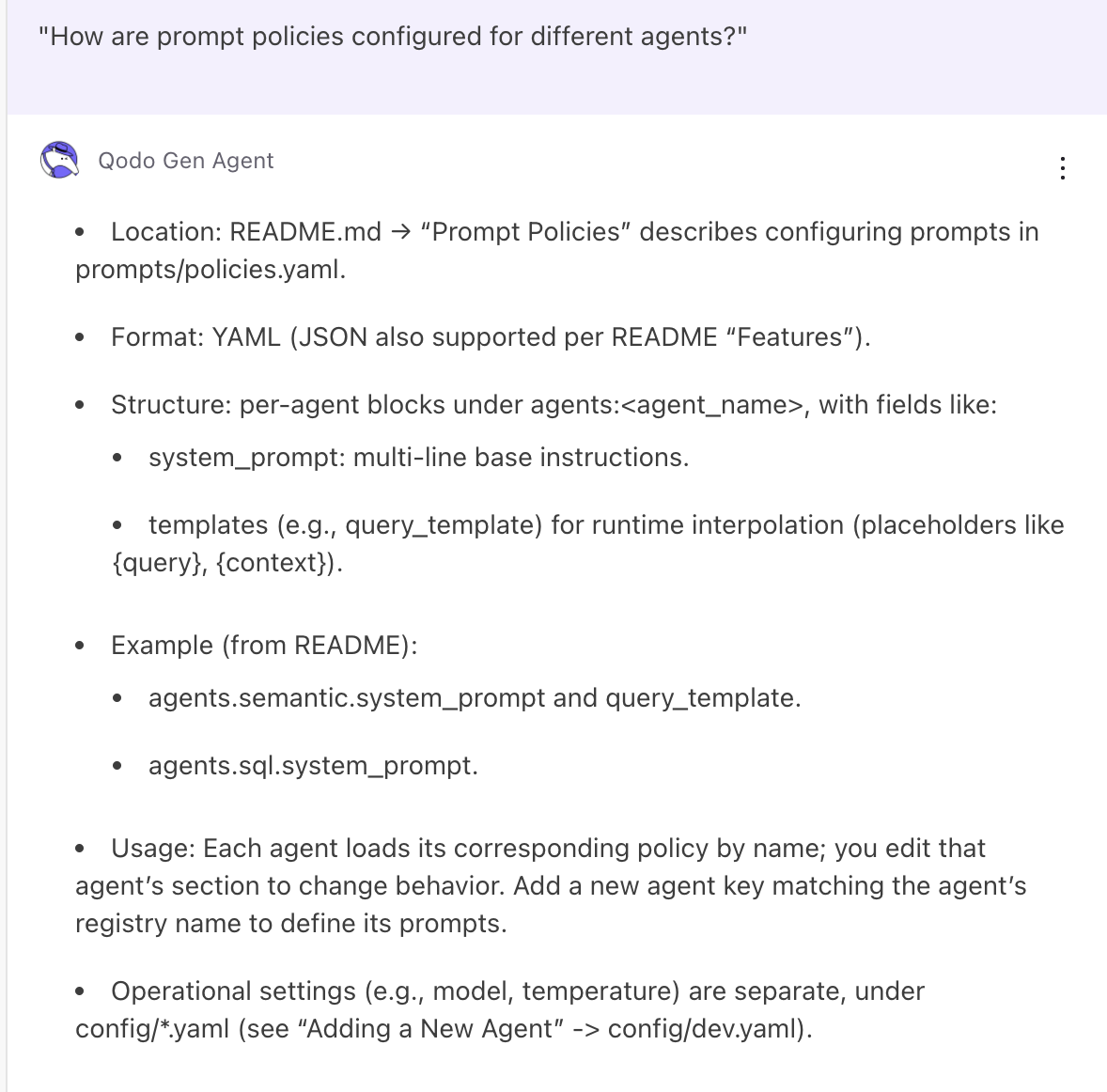

Let’s say a developer wants to know how retrieval policies are managed in my system and, in my current multi-retriever pipeline, submits a query like “How are prompt policies configured for different agents?”

Without RAG pre-filtering, a traditional embedding-based retriever might have mixed in irrelevant YAML files from config/ or deployment templates from charts/. Qodo’s context filtering, however, isolated the right directory and file type before passing the context to the agent.
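The general idea of pre-filtering candidates by directory and file type before retrieval can be sketched like this. It is not Qodo's implementation, which I have not seen: the function is hypothetical, and apart from config/ and charts/ the paths (including `agents/policies`) are invented for the example.

```python
from pathlib import PurePosixPath


def prefilter(paths: list[str], allowed_dirs: list[str],
              allowed_suffixes: set[str]) -> list[str]:
    """Keep only files under an allowed directory with an allowed extension."""
    kept = []
    for raw in paths:
        path = PurePosixPath(raw)
        in_allowed_dir = any(path.is_relative_to(d) for d in allowed_dirs)
        if in_allowed_dir and path.suffix in allowed_suffixes:
            kept.append(raw)
    return kept


# Hypothetical repository listing.
candidates = [
    "config/logging.yaml",
    "charts/deploy/values.yaml",
    "agents/policies/prompt_policy.yaml",
    "agents/policies/README.md",
]
print(prefilter(candidates, allowed_dirs=["agents/policies"], allowed_suffixes={".yaml"}))
```

Only the surviving files would then go to the embedding-based retriever, so look-alike YAML from unrelated directories never competes for the agent's context window.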

Adaptive Multi-Step Planning with Agentic Mode

Unlike tools that only respond to immediate commands, Qodo supports an Agentic Mode that systematically thinks through tasks. It plans, selects appropriate tools, applies them, and refines its output, all autonomously. Whether generating tests, refactoring code, or debugging, this feature brings problem-solving capability to developer workflows.

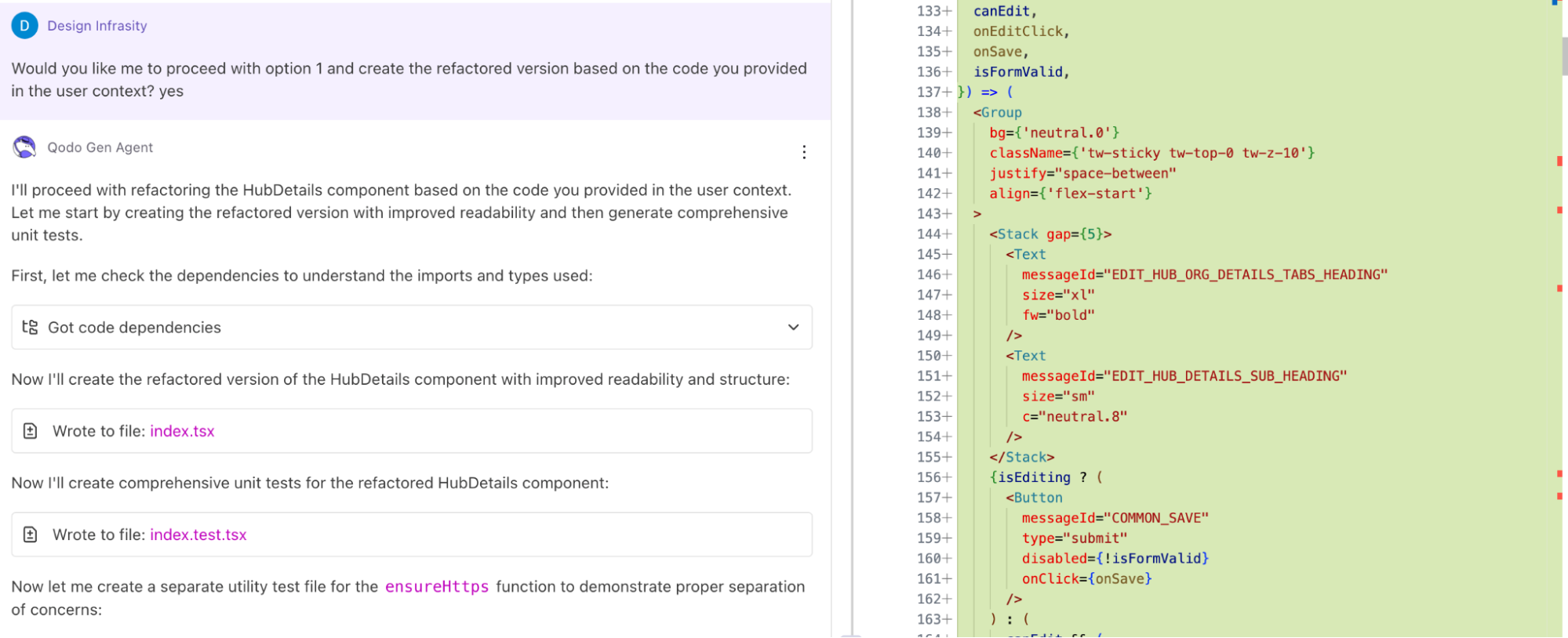

For this demo, I used the same hub_details.py file from my earlier setup. The hub_details.py file is a utility module that retrieves and organizes information about different hubs in the system. It contains logic for mapping hub IDs to name, location, and capacity attributes. Over time, the file had grown with additional conditionals and repetitive blocks, which made it harder to maintain and extend. So, I asked Qodo’s Agentic Mode:

“@HubDetails Refactor this file for readability and generate unit tests”

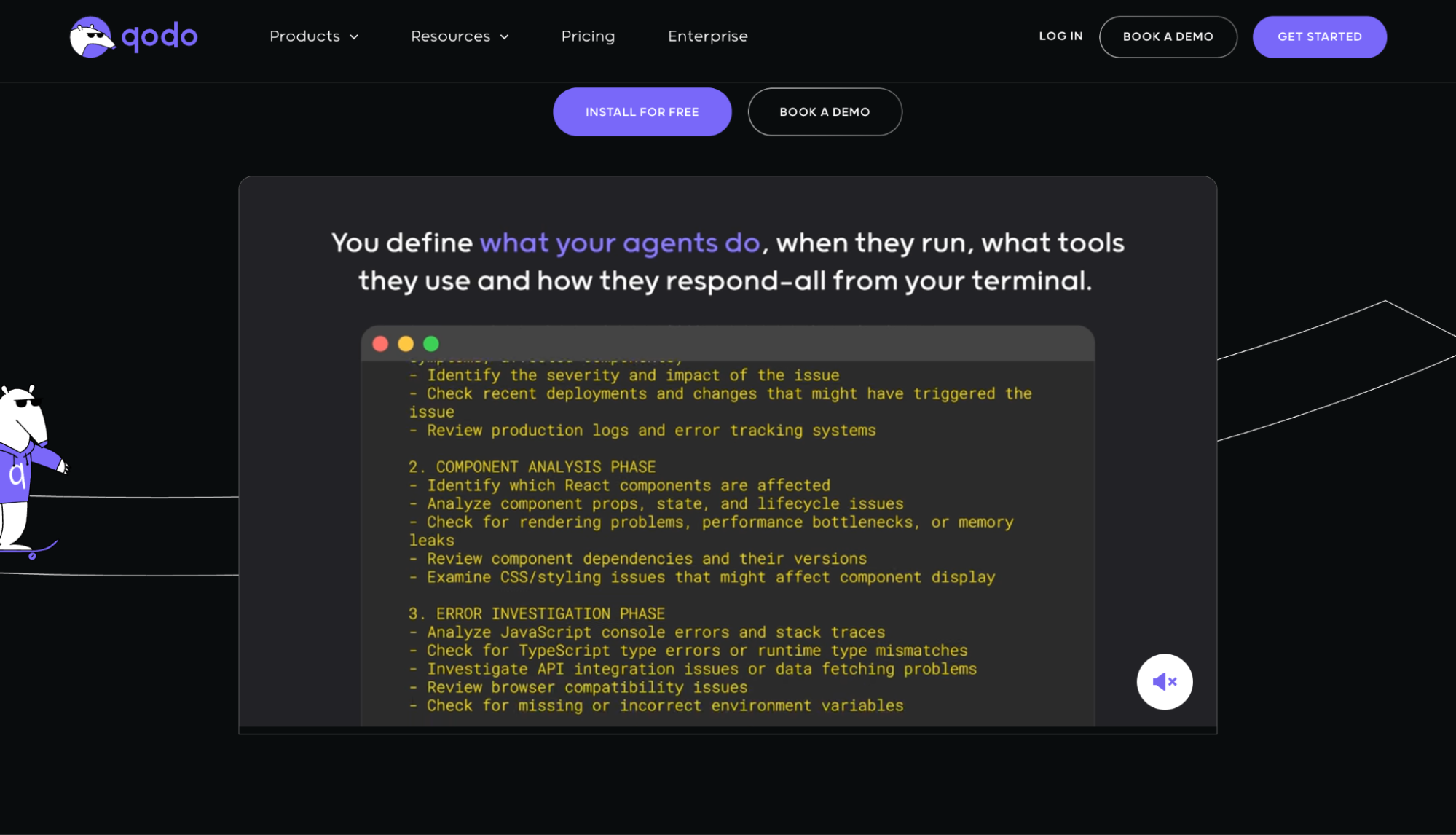

As shown in the image above, Qodo Agentic Mode immediately began a multi-step planning process:

- Analyzed dependencies: It first scanned the code to identify imports, types, and external functions.

- Refactored the core file: It created a cleaner, modular version of hub_details.py, improving readability and reducing duplication.

- Generated comprehensive tests: Alongside the refactor, it produced unit tests in a separate file, ensuring functionality was preserved.

- Separated concerns: Utility functions such as ensureHttps were isolated into their own test files for better maintainability.
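To give a feel for this kind of refactor, here is a hypothetical before-and-after sketch. It is not the actual contents of hub_details.py or Qodo's output: the hub IDs, values, and function names are invented, and only the name, location, and capacity attributes come from the description above.

```python
from dataclasses import asdict, dataclass


# "Before" style: one conditional branch per hub, repeated dictionary literals.
def get_hub_details_before(hub_id: str) -> dict:
    if hub_id == "HUB-1":
        return {"name": "North", "location": "Oslo", "capacity": 120}
    elif hub_id == "HUB-2":
        return {"name": "South", "location": "Rome", "capacity": 80}
    else:
        raise ValueError(f"unknown hub id: {hub_id!r}")


# "After" style: data lives in one table, logic is a single lookup.
@dataclass(frozen=True)
class Hub:
    name: str
    location: str
    capacity: int


_HUBS = {
    "HUB-1": Hub(name="North", location="Oslo", capacity=120),
    "HUB-2": Hub(name="South", location="Rome", capacity=80),
}


def get_hub_details(hub_id: str) -> Hub:
    try:
        return _HUBS[hub_id]
    except KeyError:
        raise ValueError(f"unknown hub id: {hub_id!r}") from None


# A behavior-preservation check, the role the generated unit tests play.
for hub_id in _HUBS:
    assert asdict(get_hub_details(hub_id)) == get_hub_details_before(hub_id)
```

Adding a hub now means adding one table entry rather than another branch, and the equivalence check at the end is the kind of test that confirms the refactor did not change behavior.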

Extensible Tool Integration with MCP (Model Context Protocol)

Qodo is one of the most notable AI code assistants that enable agents to use external tools through an extensible MCP-powered architecture. The agents can tap into Jira tickets, Git histories, APIs, databases, and more, all in service of a task, without hardcoding each integration. This abstraction simplifies multi-tool orchestration and keeps workflows adaptable as tooling evolves.

Let’s take an example of a Tech Lead who oversees multiple services and wants to enforce consistent coding standards while reducing repetitive review work. With Qodo, agents can integrate with Git histories to analyze past merge patterns, pull data from Jira to understand related issues, and even query databases to validate schema changes.

Instead of spending hours verifying boilerplate compliance, the Tech Lead gets a system where agents automatically surface deviations, suggest fixes, and flag only the edge cases for human review. This ensures team consistency while letting the Tech Lead focus on architectural decisions rather than manual validation.
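The "no hardcoded integrations" idea can be illustrated with a small tool registry. To be clear, this is not the MCP SDK or Qodo's API: `ToolRegistry` and both tools are hypothetical, and the tool bodies are stubs that return canned data instead of calling Jira or Git.

```python
from typing import Any, Callable


class ToolRegistry:
    """Agents discover tools by name and description instead of importing them."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[str, Callable[..., Any]]] = {}

    def register(self, name: str, description: str):
        def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = (description, fn)
            return fn
        return decorator

    def describe(self) -> dict[str, str]:
        """What an agent would read when deciding which tool to use."""
        return {name: desc for name, (desc, _) in self._tools.items()}

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"no tool registered under {name!r}")
        _, fn = self._tools[name]
        return fn(**kwargs)


registry = ToolRegistry()


@registry.register("issue_lookup", "Fetch an issue summary by ticket ID")
def issue_lookup(ticket_id: str) -> dict:
    # Stub: a real tool would query the issue tracker here.
    return {"id": ticket_id, "summary": "stubbed summary"}


@registry.register("recent_merges", "List recent merge commits for a repo")
def recent_merges(repo: str, limit: int = 2) -> list[str]:
    # Stub: a real tool would read Git history here.
    return [f"{repo}: merge #{n}" for n in range(1, limit + 1)]


print(registry.describe())
print(registry.call("issue_lookup", ticket_id="PROJ-1"))
```

Adding a new integration is a matter of registering one more function. The orchestration code that picks and calls tools does not change, which is the property that keeps workflows adaptable as tooling evolves.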

Flexible Deployment via Qodo Command

Qodo Command provides a single interface for running agentic workflows across different environments. Instead of being tied to one setup, you can invoke agents from the terminal during local development, integrate them into IDEs for interactive use, or connect them directly to CI/CD pipelines for automated execution. The same command-line utility also supports triggers from webhooks and custom HTTP endpoints, which makes it easier to wire agents into existing systems without additional tooling.

This means you can experiment locally, validate behavior in a controlled environment, and then scale the same workflow to production pipelines. Qodo Command handles the consistency of execution across these contexts, so developers don’t have to maintain separate scripts or adapters for each environment.

Conclusion

In my experience, agentic workflows go beyond traditional AI assistance by changing how software teams operate. Instead of relying on step-by-step prompts, these systems can plan, reason, and execute tasks autonomously. For enterprises, it means repetitive but essential work no longer slows us down, making our pipelines more reliable and easier to scale.

My experience has shown that the real value of agentic workflows emerges when they are tightly integrated with a team’s existing tools, codebases, and best practices. That’s why I lean toward solutions like Qodo, which treat context, automation, and governance as first-class concerns rather than afterthoughts. The result is faster iteration and more reliable collaboration across large-scale systems.

As we look ahead, it’s clear that agent-driven systems will become a core part of enterprise development strategies. The organizations that adopt them early, while maintaining strong review and compliance practices, can move at a pace that traditional methods simply cannot match.

FAQs

What are agentic workflows in AI development?

Agentic workflows are AI-driven processes where autonomous agents can plan, execute, and adapt tasks without constant human intervention. In enterprise development, they integrate with existing pipelines to automate code reviews, testing, debugging, and deployment while preserving oversight and governance.

How do agentic workflows differ from traditional AI automation?

Unlike traditional automation that follows pre-defined rules, agentic workflows are adaptive and context-aware. They can break down complex goals into subtasks, interact with APIs or codebases, and make informed decisions based on real-time feedback.

Why are enterprises adopting agentic workflows?

Enterprises are embracing agentic workflows to reduce developer fatigue, accelerate release cycles, and improve AI code generation and code quality. They also provide a scalable way to handle repetitive engineering tasks while ensuring compliance and security in large systems.

What are the main challenges in implementing agentic workflows?

Challenges include context fragmentation across tools, difficulty integrating with enterprise-grade Git systems, lack of transparency in AI decision-making, and ensuring security in agent-to-agent communication. These challenges often require specialized platforms to overcome.

How does Qodo support agentic workflows for enterprises?

Qodo provides a framework where agents operate directly within enterprise workflows. Through features like contextual code understanding, review automation, and best-practices-driven execution, Qodo helps developers maintain reliability while benefiting from AI-driven automation.