Augment Code vs Cursor: Feature-by-Feature Breakdown for Modern Dev Workflows

TL;DR

- Augment Code is an IDE extension designed for large, complex systems. It supports persistent threads, cross-repo memory, and guided completions through its Next Edit feature.

- Cursor is a standalone IDE based on VS Code, optimized for speed, inline completions, and agent-based edits. It supports tab completions, agent mode, and GitHub-integrated reviews via BugBot.

- While Augment emphasizes continuity and architectural awareness, Cursor focuses on fast, in-editor completions and PR-level feedback during active sessions.

- Both offer free plans. Augment’s paid tiers start at $50/month and scale up to Enterprise. Cursor Pro costs $20/month, with higher tiers offering more agent usage. Verified students can get Cursor Pro free for one year.

- Qodo is an option for long-lived workflows. It offers Git-native commands, dynamic RAG-based context retrieval, and flexible deployment for teams managing monorepos or regulated environments.

As a Technical Lead, I’m always looking for ways to streamline development workflows for speed, long-term code health, and team efficiency. AI coding tools have become a core part of that effort.

According to a field study by MIT and Microsoft researchers, developers with access to AI assistants like GitHub Copilot completed about 26% more tasks. That’s a measurable productivity boost, and it also hints at how AI tools can reduce context switching, reinforce best practices, and gradually chip away at tech debt.

Over the past year, two tools have consistently come up in discussions among developers and leads: Augment Code and Cursor. These aren’t just autocomplete engines. Both claim to understand your codebase, assist in debugging and refactoring, and even support long-term memory and architectural comprehension. I tested them in real project environments: mid-sized monorepos, multi-service backends, and active PR review cycles.

Before stepping in, I wanted to see what the broader developer community thought. Reddit, as always, provided some unfiltered insights. One post summed up the tradeoff well:

That’s a strong claim. I wanted to see if this holds up in practice or is a side effect of inconsistent usage patterns.

In this post, I’m breaking down both tools from a developer’s point of view: how they handle context, how reliable they are across prompts, and how well they support tasks like debugging, code review, and large-scale refactoring. I’ve used both across different repositories and workflows, and this post is the result of putting them to the test.

Overview of the Tools

Before diving into a feature-by-feature comparison, it’s important to establish what each tool is designed to do. Below is a factual overview of how each tool works, its key features, and how its pricing is structured.

Augment Code

Augment Code is an IDE extension built as part of a broader AI development platform for professional engineers working with large, complex codebases. It integrates with editors like VS Code, JetBrains, and Vim, and is designed to support structured agent workflows, deep code understanding, and multi-repo reasoning.

Augment indexes your entire codebase in real time and works with a context window of up to 200,000 tokens, which lets it maintain architectural awareness across files, services, and layers. This enables consistent refactors, context-rich discussions, and precise code edits that go beyond single-file prompting.

Key Features

- Context Engine: Deep indexing of your codebase to enable chat and agent workflows with accurate context.

- Next Edit Capability: Guides multi-step refactors across code, test, and documentation files.

- In-line Completions: Personalized completions informed by project imports, third-party libraries, and external docs.

- Memories & Rules: Customizable rules and architectural memory to align with team best practices.

Pricing

Augment Code offers a free Community plan with 50 user messages per month. Paid plans start at $50/month for 600 messages, with higher tiers like Pro ($100) and Max ($250) offering increased limits. The Enterprise plan includes unlimited usage, SSO/SCIM, Slack integration, and compliance features, with pricing based on team requirements.

Cursor

Cursor is a standalone IDE built as a fork of Visual Studio Code, designed to integrate AI assistance tightly into the core development workflow. It supports models like GPT‑4.1, Claude, and Gemini, enabling prompt-driven completions, natural language edits, and multi-file modifications within the editor.

Cursor is optimized for speed and iteration, with features like inline autocomplete, smart refactor suggestions, and an agent mode that can apply changes across files.

Key Features

- Tab Completion & Multi‑Line Edits: Predicts your next edit and offers multi-line suggestions based on your recent changes.

- Smart Rewrites: Automatically cleans up and corrects code even when your input is imperfect or loosely typed.

- Agent Mode: Open it with Ctrl+I (Cmd+I on macOS) to let the AI make changes across files while you keep oversight.

- Codebase Understanding: Natural language queries search the entire codebase; AI retrieves relevant context automatically.

- Shell Command Support: It can suggest terminal commands and run them after you confirm.

Pricing

Cursor’s paid plans start at $20/month for Pro, which includes unlimited tab completions and light agent usage. The Pro+ ($60) and Ultra ($200) plans significantly increase monthly agent capacity. Students can get the Pro plan free for one year after verification.

Feature-by-Feature Comparison: Augment Code vs Cursor

Installation and Setup

Before running comparisons, it’s essential to understand how each tool integrates into your development workflow. Here’s a direct comparison based on official documentation.

Augment Code

Augment Code starts with installing a dedicated extension into your IDE: Visual Studio Code, JetBrains (IntelliJ, PyCharm, WebStorm), or Vim/Neovim.

After installing it in your IDE, you sign up and are prompted to index your codebase. Indexing reads through your code to build a context model of up to 200K tokens, which powers chat, Next Edit, and inline completions.

Setting up Augment agents or Model Context Protocol (MCP) integrations (e.g., linking Slack, Jira, or local MCP servers) is optional but supported in the same settings panel. Full setup from extension install to active coding typically takes under five minutes for medium-sized repos.

Cursor

Cursor is distributed as a standalone app (forked from VS Code) for macOS, Windows, and Linux. Installation is just a matter of downloading the installer or AppImage from cursor.com, running it, and optionally importing your VS Code settings, extensions, and keybindings.

Upon first launch, Cursor runs an onboarding wizard to configure themes, keyboard mappings, and sign-in. When you open a project, it begins indexing your codebase to support chat, tab completion, and agent mode.

Both installation processes are straightforward. In my experience, Augment Code was slightly easier because it’s an extension, so I didn’t have to download a whole new IDE and set everything up again. Either way, both tools can be up and running in minutes.

Code Context Awareness

When evaluating AI tools for real-world engineering work, one of the first things I look at is how they handle code context. Whether I’m working across a monorepo or jumping between tightly coupled services, how a tool retains and reasons over context directly affects the quality of its responses. So which tool handles context better, Augment Code or Cursor?

Augment Code

Augment Code maintains persistent, project-wide memory and context across sessions. It indexes your entire workspace, including multiple repositories, and can hold up to 200,000 tokens of code, docs, and conversation context. This long-range understanding allows it to follow architectural patterns, module relationships, and previous decision threads across weeks of work.

For example, if you previously started a Next Edit sequence to refactor database model imports across several services, Augment remembers that intent even if you return to the task days later.

The system supports Rules and Memories stored in .augment/rules, which encode architectural preferences, naming conventions, or domain logic. These rules are automatically included in agent conversations to influence future suggestions.

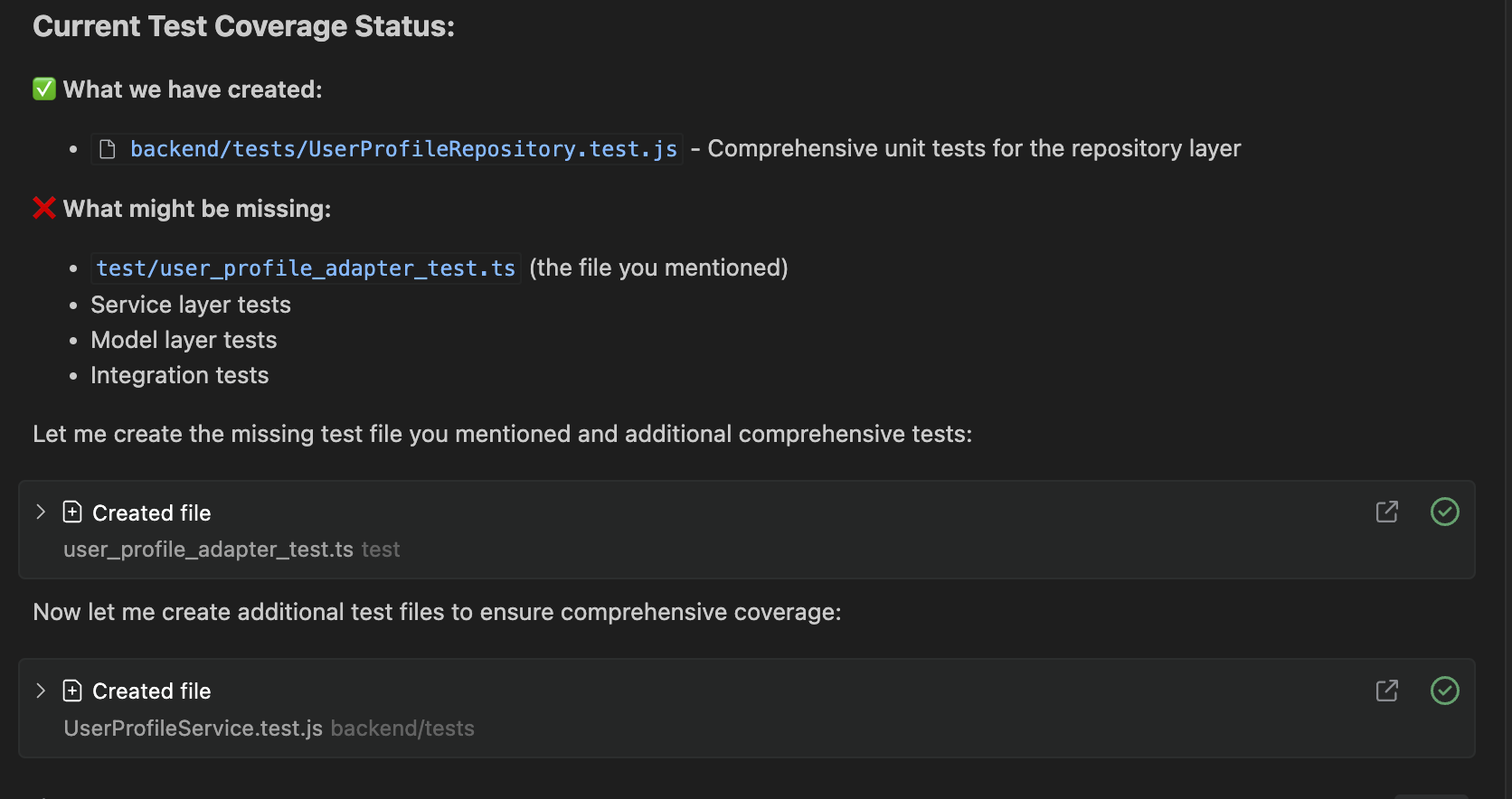

In one of my schema migration sessions, I asked Augment to verify whether all affected layers had proper test coverage.

Without me restating the entire context, it identified what had already been generated (UserProfileRepository.test.js). It highlighted what was missing, including the exact file I had mentioned earlier (user_profile_adapter_test.ts), along with other layers like services, models, and integration tests.

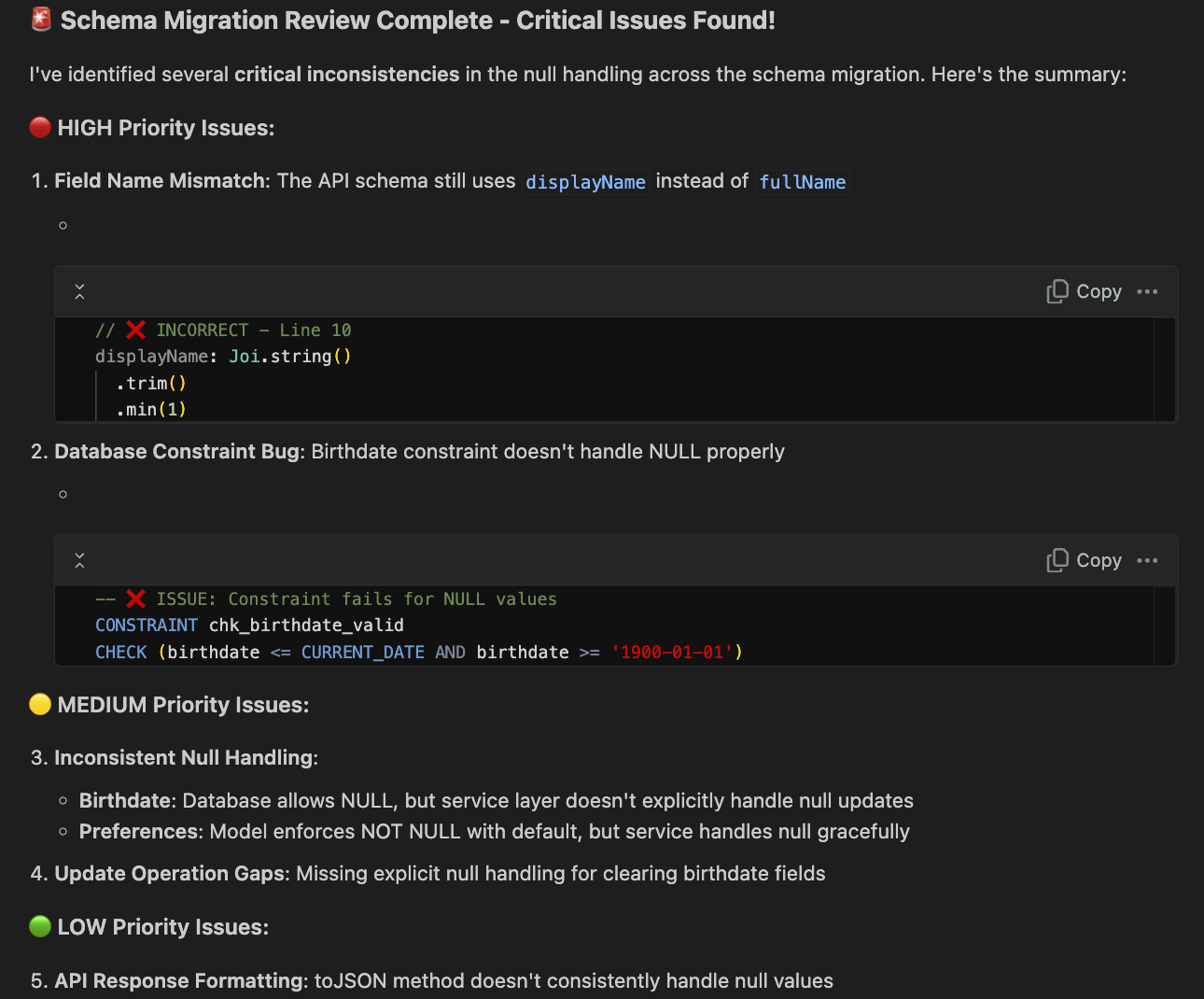

Here’s the snapshot from that interaction:

Augment identifies what’s already created and highlights what’s still missing, based on the earlier schema migration request, without needing any recap.
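You don’t have to take an assistant’s coverage report on faith. A check like the one above can be reproduced deterministically; the sketch below is a hypothetical helper, not Augment’s implementation, and it assumes each layer has one expected test file under a team naming convention. The layer names and paths are illustrative, loosely borrowed from that session.

```typescript
// Hypothetical sketch: list the layers whose expected test file is missing.
// Layer names and paths are illustrative; adapt them to your own conventions.
interface LayerExpectation {
  layer: string;
  testFile: string; // where the team's convention expects this layer's test
}

function missingTestLayers(
  expected: LayerExpectation[],
  existingFiles: string[],
): string[] {
  const present = new Set(existingFiles);
  return expected
    .filter((e) => !present.has(e.testFile)) // keep layers with no test yet
    .map((e) => e.layer);
}

const expected: LayerExpectation[] = [
  { layer: "repository", testFile: "tests/UserProfileRepository.test.js" },
  { layer: "adapter", testFile: "tests/user_profile_adapter_test.ts" },
  { layer: "service", testFile: "tests/UserProfileService.test.js" },
  { layer: "model", testFile: "tests/UserProfile.test.js" },
];

// Files that exist after the migration (illustrative).
const repoFiles = [
  "src/models/UserProfile.js",
  "tests/UserProfileRepository.test.js",
];

console.log(missingTestLayers(expected, repoFiles).join(", "));
// → adapter, service, model
```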

Cursor

Cursor handles context differently: its AI operates on a session-based context model, primarily tied to open files and active chat threads. By default, once you close a session or restart the app, it does not retain prior conversational memory. To resume a multi-step task, you typically need to reintroduce the context.

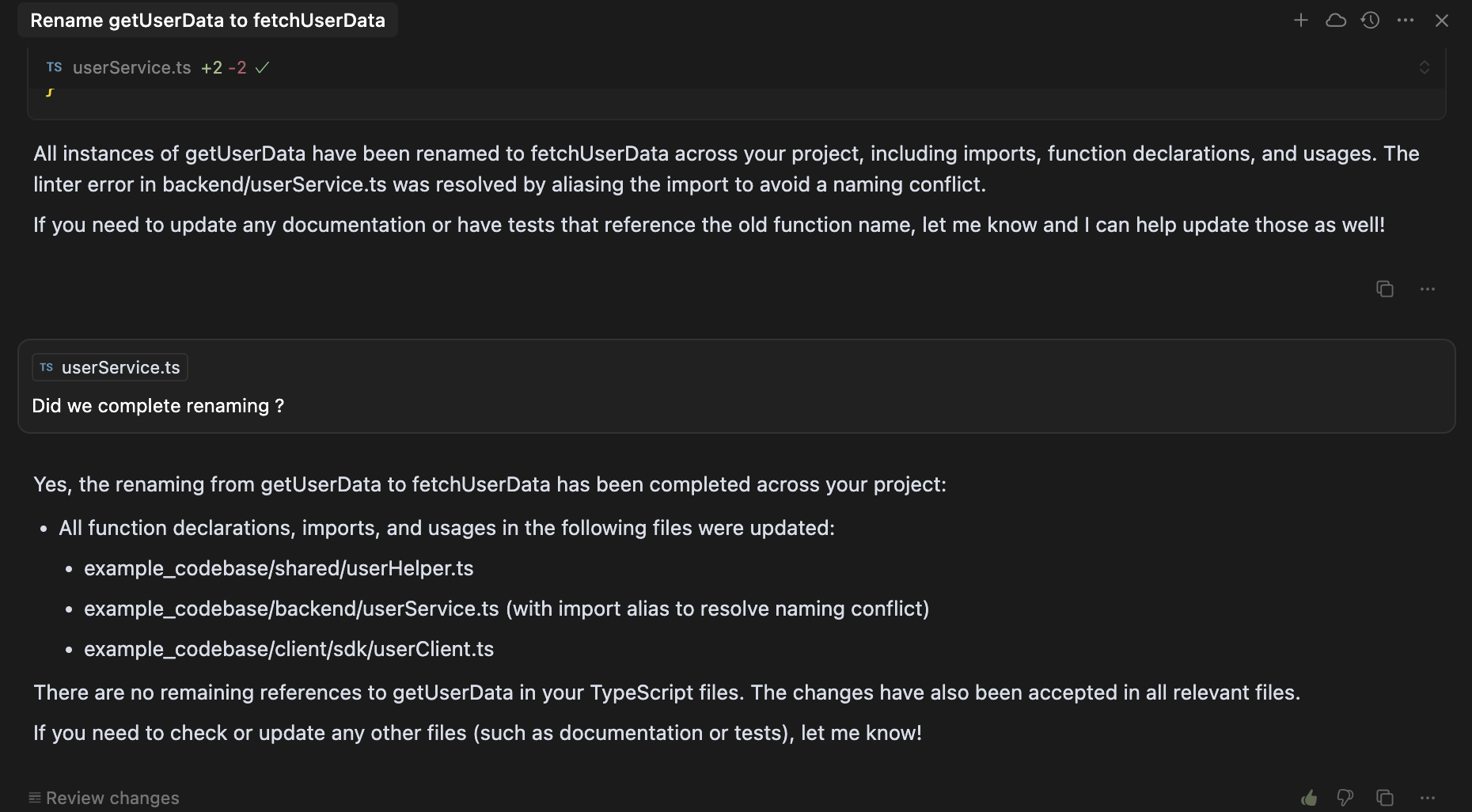

However, Cursor does allow for some continuity in recent sessions, especially if tabs remain open or Max Mode is enabled. In my case, I had renamed getUserData to fetchUserData across files like userService.ts and userClient.ts. After restarting Cursor, I issued a follow-up prompt:

“Did we finish renaming?”

Here’s a snapshot of that exchange:

Surprisingly, it resumed the thread with prior context intact, suggesting it had retained task-specific memory, likely scoped to the open workspace or cached model state.
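Whatever state Cursor cached, the question “Did we finish renaming?” can also be answered deterministically by searching for the old identifier. The sketch below is a hypothetical helper with illustrative file contents, not something Cursor runs; a real check would read files from disk or use `git grep`.

```typescript
// Hypothetical sketch: which files still reference the old identifier?
// Assumes the identifier consists only of word characters [A-Za-z0-9_].
interface SourceFile {
  path: string;
  content: string;
}

function filesStillUsing(files: SourceFile[], identifier: string): string[] {
  // \b...\b matches the whole identifier only, so "getUserDataCache" is not a hit.
  const pattern = new RegExp(`\\b${identifier}\\b`);
  return files.filter((f) => pattern.test(f.content)).map((f) => f.path);
}

const workspace: SourceFile[] = [
  { path: "userService.ts", content: "export async function fetchUserData(id: string) {}" },
  { path: "userClient.ts", content: "const user = await getUserData(id);" },
  { path: "cache.ts", content: "const getUserDataCache = new Map();" },
];

console.log(filesStillUsing(workspace, "getUserData").join(", ")); // → userClient.ts
```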

Cursor’s default context window is roughly 128K tokens, and Max Mode expands it to as much as 1M tokens on models that support it, such as GPT‑4.1 or Gemini 2.5 Pro.
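To put these window sizes in perspective, here is a rough back-of-the-envelope estimate. It assumes about 4 characters per token, a common rule of thumb; real tokenizers vary by model and language, so treat the numbers as approximate.

```typescript
// Rough sketch: will a codebase's raw text fit in a given context window?
// ASSUMPTION: ~4 characters per token, a common rule of thumb; real
// tokenizers vary by model, language, and coding style.
const CHARS_PER_TOKEN = 4;

function estimateTokens(totalChars: number): number {
  return Math.ceil(totalChars / CHARS_PER_TOKEN);
}

function fitsInWindow(totalChars: number, windowTokens: number): boolean {
  return estimateTokens(totalChars) <= windowTokens;
}

// Window sizes quoted in this post.
const contextWindows: Array<[string, number]> = [
  ["Cursor default", 128_000],
  ["Augment", 200_000],
  ["Cursor Max Mode", 1_000_000],
];

// Example: a repo with ~2 million characters of source is ~500,000 tokens.
const repoChars = 2_000_000;
for (const [label, size] of contextWindows) {
  console.log(`${label}: ${fitsInWindow(repoChars, size) ? "fits" : "does not fit"}`);
}
// → Cursor default: does not fit
// → Augment: does not fit
// → Cursor Max Mode: fits
```

Even a mid-sized repository can exceed most of these windows, which is why indexing and retrieving the relevant slices matters more than raw window size.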

It also supports .cursor/rules: static, project-wide or folder-specific instruction files. These rules shape agent behavior during code edits or chat prompts, but they don’t carry conversational state across sessions. Depending on how each rule is configured, it is applied to every request, attached automatically when matching files are in context, or included only when explicitly requested.
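The “attached when matching files are in context” behavior is easiest to see as a selection step. The sketch below is a simplified, hypothetical model loosely based on the `alwaysApply` and `globs` fields Cursor documents for project rules; it leaves out agent-requested and manually referenced rules, supports only `*` and `**` in globs, and is not Cursor’s implementation. The rule names are made up.

```typescript
// Simplified, hypothetical model of rule selection, loosely based on the
// `alwaysApply` and `globs` fields Cursor documents for project rules.
// Agent-requested and manual rules are left out; not Cursor's implementation.
interface ProjectRule {
  name: string;
  alwaysApply: boolean;
  globs: string[];
}

// Minimal glob support: "**/" spans directories, "*" stays within one segment.
function globToRegExp(glob: string): RegExp {
  let re = "";
  for (let i = 0; i < glob.length; i++) {
    const c = glob.charAt(i);
    if (c === "*" && glob.charAt(i + 1) === "*") {
      if (glob.charAt(i + 2) === "/") {
        re += "(?:.*/)?"; // "**/" matches zero or more directories
        i += 2;
      } else {
        re += ".*"; // a bare "**" matches anything
        i += 1;
      }
    } else if (c === "*") {
      re += "[^/]*";
    } else {
      re += c.replace(/[.+?^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
    }
  }
  return new RegExp(`^${re}$`);
}

function rulesForContext(rules: ProjectRule[], filesInContext: string[]): string[] {
  return rules
    .filter(
      (rule) =>
        rule.alwaysApply ||
        rule.globs.some((g) => {
          const pattern = globToRegExp(g);
          return filesInContext.some((f) => pattern.test(f));
        }),
    )
    .map((rule) => rule.name);
}

const projectRules: ProjectRule[] = [
  { name: "naming-conventions", alwaysApply: true, globs: [] },
  { name: "api-handlers", alwaysApply: false, globs: ["src/api/**/*.ts"] },
  { name: "sql-migrations", alwaysApply: false, globs: ["migrations/*.sql"] },
];

console.log(rulesForContext(projectRules, ["src/api/users/route.ts"]).join(", "));
// → naming-conventions, api-handlers
```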

For deeper persistence, developers can integrate Model Context Protocol (MCP) tools like @itseasy21/mcp-knowledge-graph or Graphiti, which augment Cursor with long-term memory across projects and sessions.

Final Win: Augment Code

Augment Code is designed for long-term memory and cross-repo indexing, which makes it more suitable for workflows that span multiple sessions, teams, or architectural layers. It retains conversational threads, applies custom rules, and tracks progress. Conversely, Cursor focuses on in-session context, dynamically pulling in relevant files and code fragments.

While Cursor can restore some continuity when tabs stay open or Max Mode is enabled, persistent memory requires additional setup.

If you’ve hit limitations with session-based or static memory models, it’s worth looking at tools like Qodo that dynamically retrieve historical and architectural context.

Expert Advice: Qodo For Context Awareness

Qodo is an AI coding assistant (with capabilities such as code generation, PR code reviews, and Agent Mode) that uses Retrieval-Augmented Generation (RAG) for code understanding and review workflows. Here’s what I like about Qodo:

- Context is retrieved dynamically, not statically stored: Qodo queries your repo and PR history in real-time to gather relevant snippets before generating suggestions.

- Supports monorepos and distributed architectures: For enterprises, Qodo is ideal for monorepos or distributed service architectures. It can be configured to fetch context across all relevant repositories rather than only the current one.

- Prior decisions and coding patterns are inferred automatically: Qodo identifies your team’s conventions, like module boundaries or error handling, by retrieving examples and patterns rather than relying on memory or manual dictation.
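To make “retrieved dynamically” concrete, here is a toy sketch of the retrieval step in RAG: score stored snippets against the query, keep the top-k, and prepend them to the prompt. It assumes keyword overlap as a stand-in for the embedding-based similarity search RAG systems commonly use; it is not Qodo’s implementation, and the snippets are invented.

```typescript
// Toy sketch of RAG's retrieval step. ASSUMPTION: keyword overlap stands in
// for embedding similarity; this is not Qodo's implementation.
interface Snippet {
  source: string; // file path, PR, or doc the snippet came from (illustrative)
  text: string;
}

function terms(s: string): Set<string> {
  return new Set(s.toLowerCase().split(/[^a-z0-9_]+/).filter((t) => t.length > 0));
}

function retrieve(query: string, corpus: Snippet[], k: number): Snippet[] {
  const q = terms(query);
  return corpus
    .map((snippet) => {
      let score = 0;
      terms(snippet.text).forEach((t) => {
        if (q.has(t)) score += 1; // one point per shared term
      });
      return { snippet, score };
    })
    .filter((r) => r.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((r) => r.snippet);
}

// Augmentation step: retrieved context goes in front of the question.
function buildPrompt(question: string, context: Snippet[]): string {
  const ctx = context.map((s) => `[${s.source}]\n${s.text}`).join("\n\n");
  return `Context:\n${ctx}\n\nQuestion: ${question}`;
}

const corpus: Snippet[] = [
  { source: "src/services/payment.ts", text: "handle payment errors with retry and log the error code" },
  { source: "docs/error-handling.md", text: "services must wrap errors in AppError before returning" },
  { source: "src/utils/date.ts", text: "format date strings for display" },
];

const question = "How do we handle payment errors?";
console.log(buildPrompt(question, retrieve(question, corpus, 2)));
```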

AI Code Completion

For most developers, the feature that matters most in an AI coding tool is code completion. Here’s how Augment Code and Cursor differ:

Augment Code

Beyond its inline completions, Augment Code’s signature completion workflow doesn’t behave like a traditional autocomplete engine. It’s built around structured, agent-driven completions inside threaded prompts: rather than being inserted passively as you type, they’re scoped, context-aware suggestions tied to larger tasks like refactoring, implementing patterns, or applying design conventions.

Augment Code’s Next Edit feature is central to handling structured, context-rich completions. It’s a key differentiator compared to tools like Cursor, especially when you’re refactoring, applying patterns, or working through multi-step code transformations.

It allows you to break down a larger task into sequenced, agent-driven suggestions, each applied intentionally, with full code awareness. Instead of asking for a one-shot fix or waiting for autocomplete, you initiate a task (e.g., refactor, migrate, update), and Augment presents the next logical code edit in the sequence.
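The difference between a one-shot fix and a sequenced, resumable plan can be modeled in a few lines. The sketch below is hypothetical and only illustrates the idea behind Next Edit-style workflows; `EditPlan` and its methods are made-up names, not Augment’s API, and the file names are borrowed from the migration described next.

```typescript
// Hypothetical model of a sequenced, resumable edit plan. It illustrates
// the idea behind Next Edit-style workflows; it is not Augment's API.
interface EditStep {
  file: string;
  description: string;
  done: boolean;
}

class EditPlan {
  private readonly steps: EditStep[];

  constructor(steps: EditStep[]) {
    this.steps = steps;
  }

  // The next pending step, or undefined once every step is done.
  next(): EditStep | undefined {
    return this.steps.find((s) => !s.done);
  }

  markDone(file: string): void {
    const step = this.steps.find((s) => s.file === file);
    if (step) step.done = true;
  }

  // Serialize progress so a later session can pick up where this one stopped.
  save(): string {
    return JSON.stringify(this.steps);
  }

  static restore(json: string): EditPlan {
    return new EditPlan(JSON.parse(json) as EditStep[]);
  }
}

const plan = new EditPlan([
  { file: "models/UserProfile.js", description: "rename the field", done: false },
  { file: "repositories/UserProfileRepository.js", description: "update queries", done: false },
  { file: "services/UserProfileService.js", description: "update service logic", done: false },
]);

plan.markDone("models/UserProfile.js");
const saved = plan.save();               // persisted between sessions
const resumed = EditPlan.restore(saved); // a later session
console.log(resumed.next()?.file);       // → repositories/UserProfileRepository.js
```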

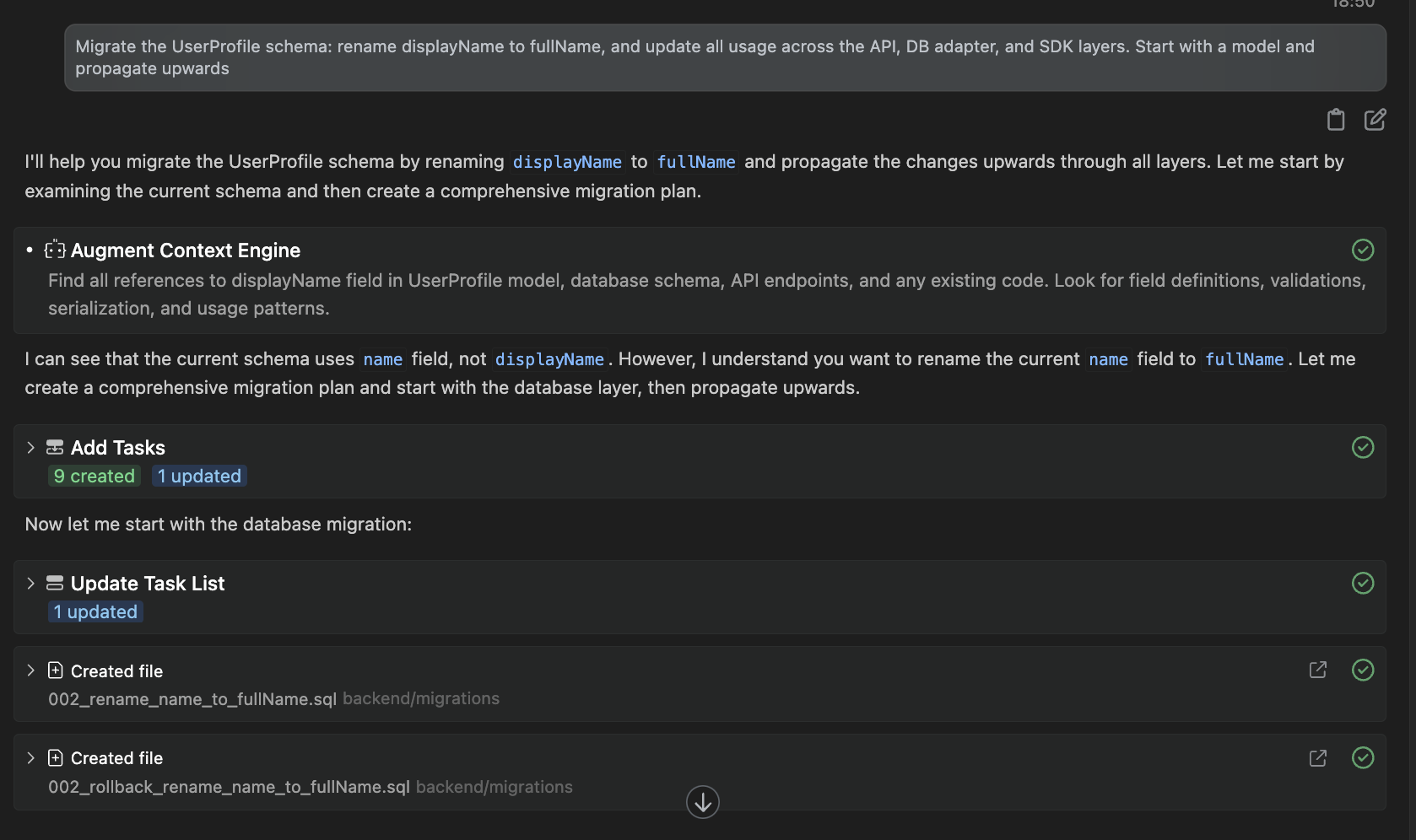

To test this, I used the same codebase for a schema migration across the API layer, DB adapter, and client SDK, and prompted Augment Code with:

“Migrate the UserProfile schema: rename displayName to fullName, and update all usage across the API, DB adapter, and SDK layers. Start with a model and propagate upwards.”

Here’s a snapshot of the output:

Augment parsed the prompt and activated its Context Engine to identify all references to displayName (and later correctly inferred I meant the name field) across models, schema files, services, repositories, and API layers. It didn’t just suggest changes; it created migration files, edited UserProfile.js in the models folder, updated the repository and service layers (UserProfileRepository.js, UserProfileService.js), and began editing API contracts as part of the plan.
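For readers wondering what the data side of such a migration involves, here is a hypothetical sketch of one step: moving each stored record’s `displayName` value into `fullName`. The record shape is illustrative, and this is not the migration file Augment generated.

```typescript
// Hypothetical data-migration step for the rename: move each stored record's
// `displayName` value into `fullName`. Record shape is illustrative.
type ProfileRecord = Record<string, unknown>;

function migrateProfile(record: ProfileRecord): ProfileRecord {
  // Already migrated: return unchanged, so re-running the migration is safe.
  if (!("displayName" in record)) return record;
  const { displayName, ...rest } = record;
  // Keep an existing fullName; otherwise carry the old value over.
  return { ...rest, fullName: rest["fullName"] ?? displayName };
}

const legacy: ProfileRecord = { id: "42", displayName: "Ada Lovelace" };
const migrated = migrateProfile(legacy);
console.log(JSON.stringify(migrated)); // → {"id":"42","fullName":"Ada Lovelace"}
```

Making the step idempotent matters in practice: a partially applied migration can be re-run without corrupting records that were already converted.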

Thanks to Next Edit, this wasn’t done in a single step. Each step was tracked in the thread, and I could watch the changes being built live. If I paused the session, I could return later and resume where I left off, with Augment fully aware of what had been done and what remained.

The structured execution across layers, with file-by-file tracking and continuity, made it feel closer to a code-native assistant embedded into my workflow, not just a chat interface.

Cursor

Cursor delivers a more streamlined, inline code completion experience optimized for speed and editing efficiency. As you type, suggestions reflect local context, imported modules, and your coding style in that file. It works across languages like JavaScript, Python, and Go, and its tab-completion often handles multi-line blocks: function definitions, loops, or conditionals, including docstrings or type hints.

Cursor’s Agent Mode (Ctrl+I) extends the single-file completion experience to cross-file scope. You might request, “Add input validation to all endpoints,” and Cursor generates patches across controllers and test files. These edits appear inline as diffs and are applied once you accept them.

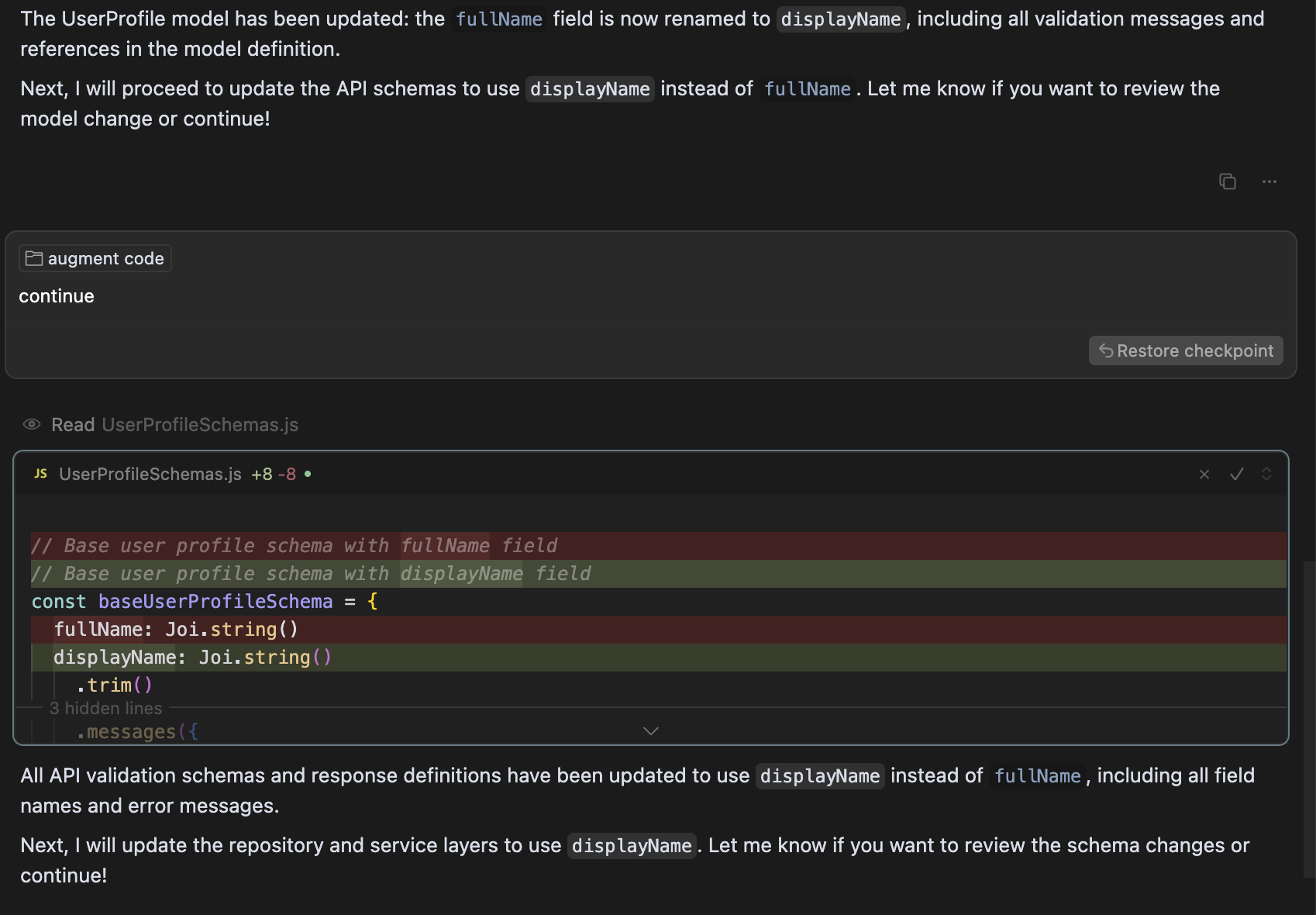

To keep the comparison consistent, I gave Cursor the same schema migration task with the rename reversed this time (fullName back to displayName), applying the update across the model, repository, service, and API layers.

Cursor began by generating a clear, high-level migration plan, listing all the layers it needed to touch, including the model, API validation schemas, repository and service layers, controller logic, SDKs, tests, and the final migration script.

This upfront breakdown was helpful, but unlike Augment Code, Cursor did not proceed on its own: it required manual confirmation before moving on to each phase.

Here’s what Cursor responded:

When it started editing, Cursor updated the model definition by renaming the field in UserProfile.js and rewriting validation messages. The changes were shown as diffs with a clear commit-style format, making it easy to audit. However, after making this change, Cursor paused and explicitly asked whether I wanted to continue to the next layer or review the model changes further. I had to instruct it to proceed manually.

Cursor followed the same process when moving to API schemas. It replaced all occurrences of fullName with displayName in Joi validations inside UserProfileSchemas.js and again paused for review before advancing. This hands-on experience shows that while Cursor performs context-aware edits with solid local reasoning, its step-by-step execution relies on continuous user direction.
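The validation layer is where a half-finished rename tends to surface first, because object schemas usually reject keys they don’t know. The sketch below is a dependency-free stand-in, not Joi itself: it requires the listed string fields and rejects unknown keys, which mirrors Joi’s default behavior for object schemas. The field list is illustrative.

```typescript
// Dependency-free stand-in for an object schema (not Joi): listed fields must
// be strings, required ones must be present, and unknown keys are rejected.
type Schema = Record<string, { required: boolean }>;

function validate(schema: Schema, payload: Record<string, unknown>): string[] {
  const errors: string[] = [];
  for (const [key, spec] of Object.entries(schema)) {
    const value = payload[key];
    if (value === undefined) {
      if (spec.required) errors.push(`"${key}" is required`);
    } else if (typeof value !== "string") {
      errors.push(`"${key}" must be a string`);
    }
  }
  for (const key of Object.keys(payload)) {
    // Own-property check, so inherited names like "toString" don't slip through.
    if (!Object.prototype.hasOwnProperty.call(schema, key)) {
      errors.push(`"${key}" is not allowed`);
    }
  }
  return errors;
}

// Schema after the rename in UserProfileSchemas.js (field list illustrative).
const userProfileSchema: Schema = { displayName: { required: true } };

console.log(validate(userProfileSchema, { displayName: "Ada" }).length); // → 0
console.log(validate(userProfileSchema, { fullName: "Ada" }).join("; "));
// → "displayName" is required; "fullName" is not allowed
```

A client still sending the old field name fails validation outright, which is why the schema edit has to land together with the model edit.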

Compared to Augment’s Next Edit, which continues migrations across layers without being prompted again, Cursor trades autonomy for control. This can be helpful for cautious edits, but it feels slower for multi-layered tasks.

Final Win

Augment Code wins when working on structured, multi-step changes like schema migrations or large refactors. Its Next Edit feature delivers context-aware suggestions tied to threads, tracks progress across sessions, and doesn’t require re-prompting at every step.

Cursor excels at fast, inline completions during active sessions. It’s efficient for quick edits, but longer tasks need manual follow-ups and lack persistent memory.

For deep, guided completions across layers, Augment Code is more reliable. For speed and local edits, Cursor is faster.

AI Code Review Capabilities

One of the most important factors when choosing an AI coding tool is how it handles code reviews. Beyond surface-level checks, it should retain context and support real review workflows. Here’s how Augment Code and Cursor compare.

Augment Code

Augment Code is designed for threaded, collaborative code review workflows. It supports PR-level threads where you can scope prompts to specific files, diffs, or functions. Unlike stateless review bots, Augment retains conversational and architectural memory across sessions. If you begin a review today, you can continue from the same thread days later without manually reloading context.

Augment uses its context engine to review not just the diff but also the surrounding logic, impacted dependencies, and team-defined conventions. Because it applies rules from .augment/rules and links reviews to persistent threads, it recognizes related discussions, applied fixes, and unresolved issues.

Its Next Edit mechanism also works in reviews; if it finds multiple issues, it can walk you through applying one suggestion at a time while preserving the review thread state. This makes it especially useful for multi-developer environments where code reviews aren’t completed in one go and require continuity.
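The practical difference between a persistent review thread and a stateless bot comes down to whether each finding’s status survives between sessions. The sketch below is a hypothetical model of that idea, not how Augment stores threads; the names and findings are illustrative.

```typescript
// Hypothetical model of a persistent review thread: each finding keeps its
// status because the thread is saved, not held in chat memory.
type FindingStatus = "open" | "fixed" | "wontfix";

interface Finding {
  id: number;
  file: string;
  message: string;
  status: FindingStatus;
}

interface ReviewThread {
  topic: string;
  findings: Finding[];
}

// Return a new thread with one finding's status updated.
function setStatus(thread: ReviewThread, id: number, status: FindingStatus): ReviewThread {
  return {
    ...thread,
    findings: thread.findings.map((f) => (f.id === id ? { ...f, status } : f)),
  };
}

function openFindings(thread: ReviewThread): Finding[] {
  return thread.findings.filter((f) => f.status === "open");
}

let reviewThread: ReviewThread = {
  topic: "schema migration PR",
  findings: [
    { id: 1, file: "services/UserProfileService.js", message: "displayName vs fullName mismatch", status: "open" },
    { id: 2, file: "models/UserProfile.js", message: "toJSON handles NULL inconsistently", status: "open" },
  ],
};

reviewThread = setStatus(reviewThread, 1, "fixed");
const stored = JSON.stringify(reviewThread);        // end of day one
const reloaded = JSON.parse(stored) as ReviewThread; // days later
console.log(openFindings(reloaded).map((f) => f.message).join("; "));
// → toJSON handles NULL inconsistently
```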

For example, using the same migration codebase, I asked Augment:

“Review the schema migration PR and flag inconsistencies in null handling across services.”

Here’s a snapshot of the review:

It highlighted a field name mismatch (displayName vs. fullName), a database constraint bug where birthdate checks fail on NULL, and inconsistent null handling between the database, model, and service layers. It also pointed out update operation gaps and noted a low-priority issue where the toJSON method doesn’t consistently handle NULL values.
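The `toJSON` issue is a common one, so a fix pattern is worth sketching. One approach is to normalize missing values to `null` at construction time, so serialized output never silently drops a field (`JSON.stringify` omits properties whose value is `undefined`). The class below is illustrative, with fields taken from this review, not the code Augment reviewed.

```typescript
// Sketch of consistent null handling in toJSON: missing values become null,
// never undefined (which JSON.stringify silently drops). Class is illustrative.
class UserProfile {
  email: string | null;
  birthdate: Date | null;

  constructor(fields: { email?: string | null; birthdate?: Date | null }) {
    // Normalize both undefined and null to null at construction time.
    this.email = fields.email ?? null;
    this.birthdate = fields.birthdate ?? null;
  }

  toJSON(): { email: string | null; birthdate: string | null } {
    return {
      email: this.email,
      // Serialize dates as a UTC YYYY-MM-DD string; a missing date stays null.
      birthdate: this.birthdate ? this.birthdate.toISOString().slice(0, 10) : null,
    };
  }
}

console.log(JSON.stringify(new UserProfile({ email: "ada@example.com" })));
// → {"email":"ada@example.com","birthdate":null}
```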

Cursor

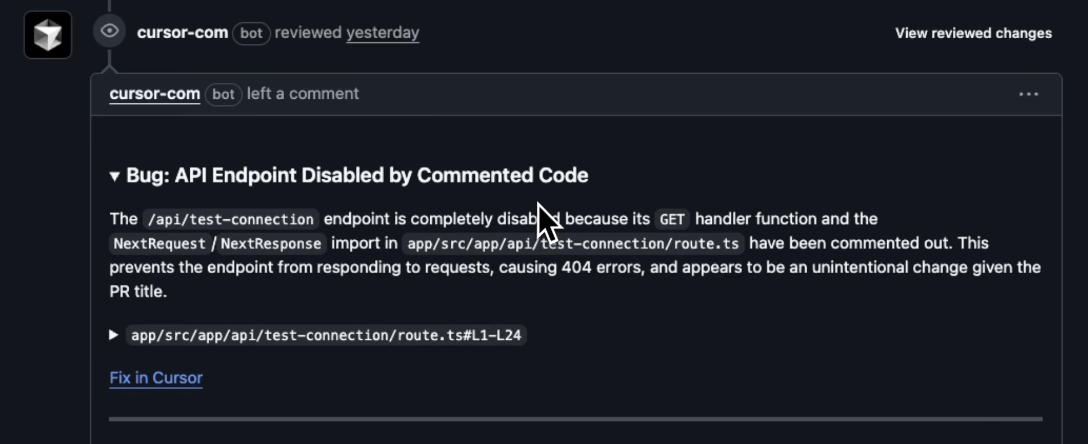

Cursor includes a built-in AI code reviewer called BugBot, introduced in Cursor 1.0 and available in Max Mode for Pro users. BugBot automatically scans pull requests for issues like logic flaws, performance bottlenecks, security problems, or style inconsistencies. Once enabled, it analyzes diffs when a PR is opened or updated and posts line-by-line feedback directly on GitHub, resembling a human code reviewer.

These comments are actionable: each includes a “Fix in Cursor” link that opens the file directly in the Cursor IDE, pre-fills the relevant context, and lets you apply the fix immediately.

For example, while working on a Next.js project, I ran BugBot, Cursor’s automated code reviewer, on a recent pull request.

BugBot automatically detected that an API endpoint (/api/test-connection) was broken because its GET handler and imports (NextRequest / NextResponse) had been accidentally commented out. BugBot flagged this as a likely unintentional change that would cause 404 errors, catching the issue before it reached production.
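This class of bug is easy to miss by eye. In the Next.js App Router convention, each HTTP method in a route file is a named export (GET, POST, and so on), so commenting one out removes that handler entirely. The sketch below is a hypothetical, naive check that flags handlers appearing only inside comments; it is not how BugBot works, and its comment stripping ignores edge cases such as `//` inside strings.

```typescript
// Hypothetical, naive check (not how BugBot works): find HTTP-method handlers
// that appear in a route file only inside comments.
const HTTP_METHODS = ["GET", "POST", "PUT", "PATCH", "DELETE", "HEAD", "OPTIONS"];

function stripComments(source: string): string {
  // Naive: removes /* block */ and // line comments, even inside strings.
  return source.replace(/\/\*[\s\S]*?\*\//g, "").replace(/\/\/.*$/gm, "");
}

function commentedOutHandlers(source: string): string[] {
  const live = stripComments(source);
  return HTTP_METHODS.filter((method) => {
    const decl = new RegExp(`export\\s+(async\\s+)?function\\s+${method}\\b`);
    // Declared somewhere in the file, but not in the uncommented code.
    return decl.test(source) && !decl.test(live);
  });
}

const routeFile = [
  "// import { NextRequest, NextResponse } from 'next/server';",
  "// export async function GET(request: NextRequest) {",
  "//   return NextResponse.json({ ok: true });",
  "// }",
].join("\n");

console.log(commentedOutHandlers(routeFile).join(", ")); // → GET
```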

Beyond pull requests, Cursor also supports manual AI reviews within the editor: you can select a file, commit, or custom diff and prompt Cursor to review it.

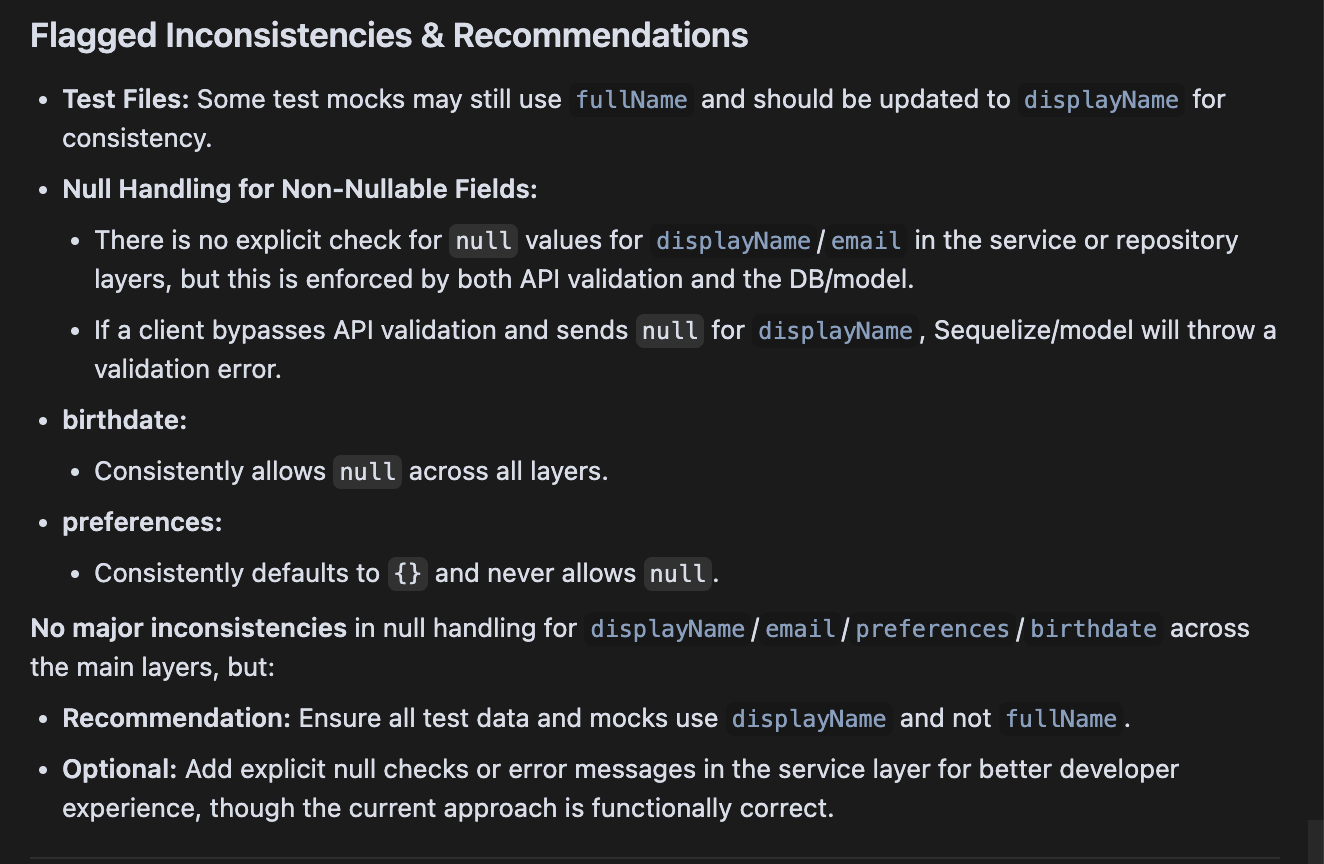

For the manual in-editor review, I gave Cursor the same prompt I had given Augment Code. Here’s what Cursor replied:

Cursor replied with a detailed summary highlighting test file naming mismatches, null handling inconsistencies for fields like displayName, email, and birthdate, and recommendations to add explicit checks or update mocks, similar to the structured findings Augment Code produced.

While BugBot examines diffs and “remembers” the changes within that PR context, Cursor does not retain conversational review state across separate sessions. If you close the session and return later, you must re-trigger the review process manually, as there’s no persistent review thread.

Final Win

Augment Code offers more persistent and structured code reviews. It retains context across sessions, supports threaded PR reviews, and allows follow-up prompts without resetting. Cursor’s BugBot is fast and effective for PR diffs but remains scoped to the current change and lacks long-term conversational memory.

Why Teams Prefer Qodo in Enterprise Workflows

Qodo is built from the ground up for teams operating in large, distributed, and regulated codebases. Unlike tools that rely on static memory or session-based threads, Qodo uses Retrieval-Augmented Generation (RAG) to dynamically pull relevant context from your repositories, documentation, past PRs, and team-defined best practices at the moment of suggestion.

For enterprise environments, Qodo supports SaaS, private cloud, air-gapped deployments, SOC 2 compliance, and advanced governance features like MCP allow-listing. These make it suitable for companies with strict security, auditability, and toolchain integration needs.

Every AI review or suggestion is bound to Git commits, not just sessions. Teams can encode their best practices as YAML-based rules and apply them consistently across PRs using /review or /implement commands directly in GitHub comments or terminals.

Hands-On Example

Let’s say your team has a strict architectural rule: “Do not call third-party APIs directly inside service handlers. Use the internal abstraction layer.”

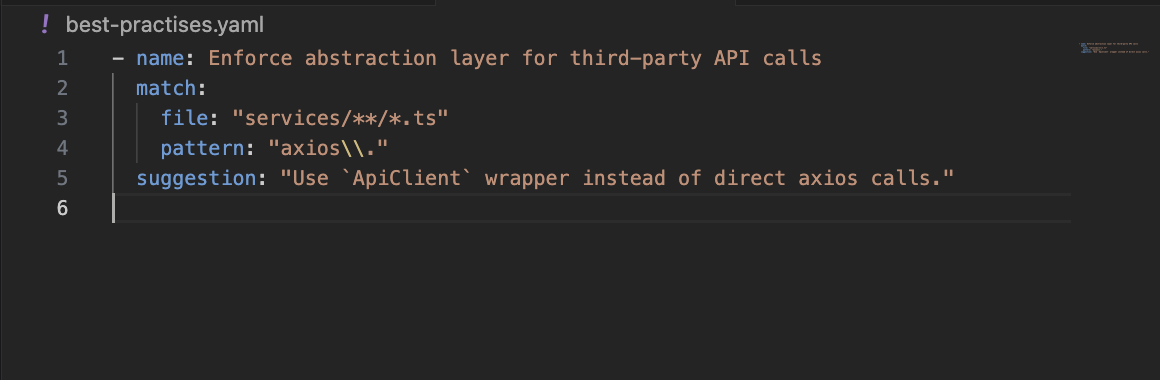

With Qodo, you define this once in a best-practices.yaml file:

Now, whenever a pull request introduces a violation, say a developer adds axios.get(…) in a service, Qodo flags it during the review with /review and suggests the fix inline, backed by the rule metadata. You don’t need to re-prompt or open a chat window.
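To illustrate what a rule like this actually checks, here is a hypothetical sketch that flags direct axios calls in service files. It is not Qodo’s implementation; the `/services/` path convention and the file contents are assumptions, and the internal abstraction layer is exempt simply because it lives outside that folder.

```typescript
// Hypothetical check for the rule above (not Qodo's implementation): flag
// direct axios calls in service files. The "/services/" convention is assumed.
interface RepoFile {
  path: string;
  content: string;
}

interface Violation {
  file: string;
  line: number;
  text: string;
}

const DIRECT_HTTP_CALL = /\baxios\.(get|post|put|patch|delete|request)\s*\(/;

function findViolations(files: RepoFile[]): Violation[] {
  const violations: Violation[] = [];
  for (const f of files) {
    if (!f.path.includes("/services/")) continue; // rule applies to services only
    f.content.split("\n").forEach((text, i) => {
      if (DIRECT_HTTP_CALL.test(text)) {
        violations.push({ file: f.path, line: i + 1, text: text.trim() });
      }
    });
  }
  return violations;
}

const changedFiles: RepoFile[] = [
  {
    path: "src/services/orderService.ts",
    content: "import { http } from '../lib/http';\nconst res = await axios.get(url);",
  },
  { path: "src/lib/http.ts", content: "export const get = (u: string) => axios.get(u);" },
];

console.log(findViolations(changedFiles).map((v) => `${v.file}:${v.line}`).join(", "));
// → src/services/orderService.ts:2
```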

In short, Qodo is purpose-built for long-lived, Git-driven engineering workflows where traceability, shared code standards, and integration with enterprise systems are non-negotiable. It doesn’t just add AI to your workflow; it treats your workflow as the first-class interface.

This model of context retrieval, rather than chat memory, makes Qodo stand out. It scales cleanly for teams managing monorepos or service-oriented architectures without requiring session management. It delivers suggestions that align with your engineering norms, without needing to be told every time.

Conclusion

Augment Code and Cursor push the boundaries of what AI can offer in modern software development, but they do so with different philosophies. Augment focuses on structured, long-lived agent workflows for deeper architectural understanding, persistent memory, and multi-step refactors. Conversely, Cursor excels in responsiveness, local reasoning, and fast inline edits backed by strong model integration.

If your team needs an AI assistant that supports complex migrations, cross-file reasoning, or multi-session continuity, Augment Code is the stronger fit. It acts more like a project-aware collaborator that evolves with your codebase. Cursor is better suited for fast-paced iterations, lightweight tasks, and developers who prefer to stay in tight control of each change.

For teams that need to go beyond both, especially in enterprise settings with multiple repos, long review cycles, and codified engineering rules, Qodo brings a third option. It’s purpose-built to retrieve context dynamically from across your org’s code and workflows, not just your current session. That shift in architecture makes it uniquely suited for scale.

FAQs

What are the limitations of Augment Code?

While Augment Code excels at structured, multi-step workflows and long-term memory, it requires intentional setup around rules and agent workflows. Initial indexing on very large codebases may take some time, and its feature-rich nature can feel heavyweight for simple ad-hoc edits.

What is the best Cursor alternative for enterprise?

For enterprises, Qodo offers a compelling alternative, built for Git-first workflows, cross-repo context retrieval, and built-in enforcement of engineering best practices via commands like /review and /implement.

Can Augment Code or Cursor handle enterprise-scale code securely?

Yes. Augment Code supports enterprise needs with multi-repo indexing, secure integration options, and persistent context. Cursor also supports enterprise use cases securely, with encrypted sessions, Privacy Mode, and GitHub workflow integration designed to preserve code privacy.

Are Augment Code and Cursor SOC 2 compliant?

Both tools report SOC 2 Type II compliance. Augment Code has publicly discussed its audit cycle and compliance measures, and Cursor has likewise undergone an external audit attesting to its security and availability controls.

What is the best Augment Code alternative for an enterprise?

In enterprise environments, Qodo is a robust alternative: its Retrieval-Augmented Generation (RAG) supports cross-repo context, Git-native commands, and rule-based automation. Unlike Augment’s thread-based memory, Qodo ties suggestions directly to your codebase and workflows, ensuring consistency and traceability.