Beyond Vibe Coding: Scaling AI Across the Software Development Lifecycle

Remember when AI in coding meant simple autocomplete? Those days feel like ancient history. We’ve rapidly moved from AI copilots that helped with small tasks to “vibe coding,” where developers generate entire functions, files, and features from natural language prompts. The productivity gains are undeniable, but faster isn’t always better when the output can’t be trusted.

The hidden cost of speed without standards

Picture this scenario: Your team adopts AI code generation. Sprint velocity doubles. Everyone’s celebrating. Six months later, you’re drowning in technical debt, bug-fix tickets have doubled, pull requests are piling up, and that “miraculous” AI-generated codebase has become a maintenance nightmare.

This isn’t hypothetical; it’s happening right now across the industry. Teams are discovering that AI-generated code that “works” isn’t the same as code that’s:

- Trustworthy: Code must be reliable and consistently perform as expected.

- Aligned: Code needs to adhere to architectural and team standards.

- Compliant: Code should meet all security and regulatory requirements.

- Easy to maintain: Code should be testable and understandable for future debugging.

The gap between “it runs” and “it’s production-ready” has become the bottleneck that threatens to undo all of AI’s promised benefits.

From code generation to code quality

AI tools have proven they can accelerate development, but speed without quality creates fragility. The real challenge isn’t how fast AI can produce code, but whether that code is correct, tested, aligned with architecture, and safe to ship.

But code quality at scale isn’t just about tools; it’s also about the principles that shape how AI is applied across the lifecycle.

4 principles to scale AI code quality

Shift quality left (way left)

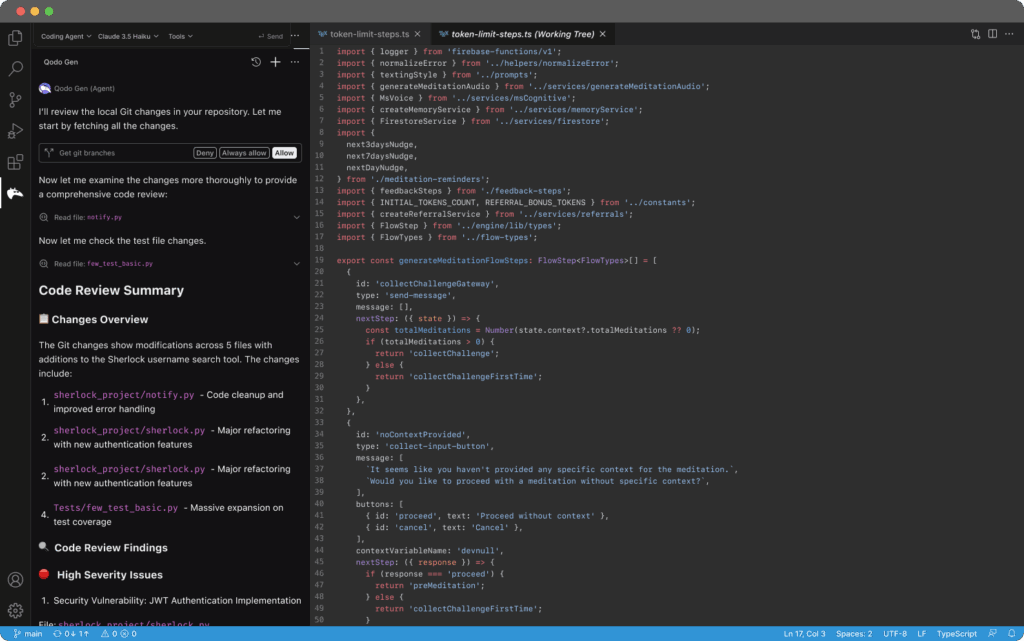

Forget waiting for code review to catch problems. AI should be identifying and fixing issues—missing feature flags, insecure patterns, architectural violations—before code is even committed. When quality becomes proactive rather than reactive, rework drops dramatically.
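As a rough illustration of what a pre-commit quality gate might look like, here is a minimal Python sketch that scans newly added lines for a couple of risky patterns. The rule names, the regular expressions, and the `check_added_lines` helper are all hypothetical; a real setup would encode your team's own standards and would likely hook into your existing tooling rather than stand alone.

```python
import re
from dataclasses import dataclass

# Hypothetical rule set for illustration; real rules would come from
# your own security and architecture standards.
RULES = {
    "hardcoded-secret": re.compile(
        r"(?i)(api_key|password|secret)\s*=\s*['\"][^'\"]+['\"]"
    ),
    "unsafe-eval": re.compile(r"\beval\("),
}


@dataclass(frozen=True)
class Finding:
    rule: str
    line_no: int
    text: str


def check_added_lines(added_lines):
    """Return a Finding for every rule that matches a newly added line.

    `added_lines` is an iterable of (line_number, text) pairs, for
    example the added lines of a staged diff.
    """
    findings = []
    for line_no, text in added_lines:
        for rule, pattern in RULES.items():
            if pattern.search(text):
                findings.append(Finding(rule, line_no, text.strip()))
    return findings


if __name__ == "__main__":
    staged = [(10, 'password = "hunter2"'), (11, "total = eval(expr)")]
    for f in check_added_lines(staged):
        print(f"{f.rule} at line {f.line_no}: {f.text}")
```

Running a check like this before the commit lands is what makes the feedback proactive: the developer sees the finding while the change is still fresh, not days later in review.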

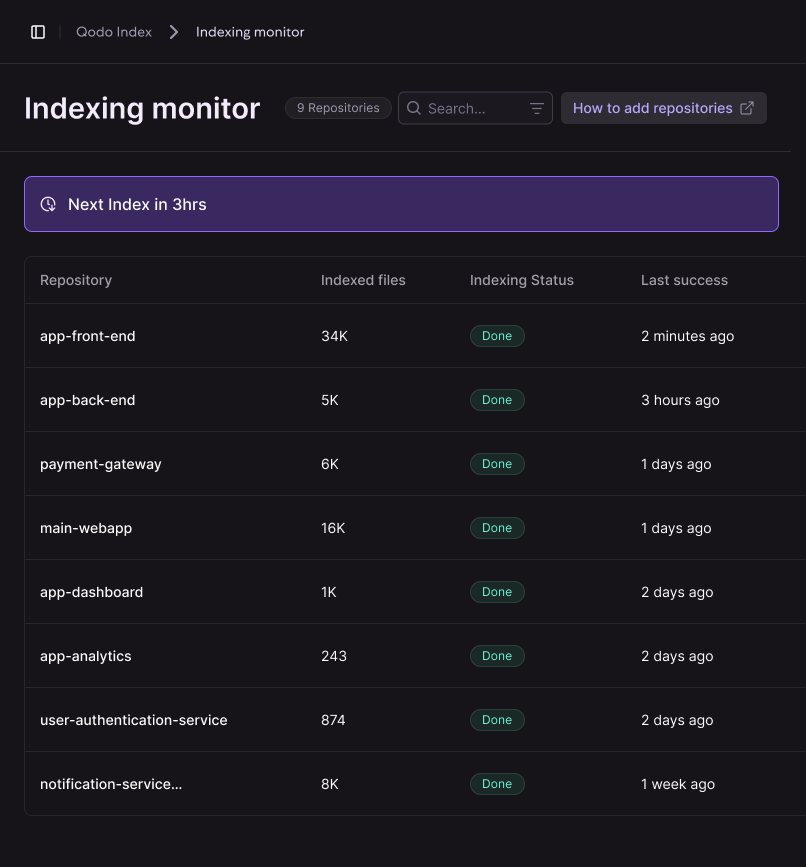

Master context management

AI is only as good as the context it draws from. Without control, polluted or outdated sources can undermine accuracy. That’s why organizations need context governance: explicit control over which repos, documents, and experiments feed into AI reasoning. Managed properly, AI reflects authoritative, up-to-date knowledge instead of noise.
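One way to picture context governance is as a filter that sits in front of the AI. The sketch below assumes each source carries an approval flag and a last-updated date; the `ContextSource` type, the `select_context` function, and the 180-day freshness window are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ContextSource:
    name: str
    kind: str           # e.g. "repo", "document", "experiment"
    last_updated: date
    approved: bool      # set by whoever owns context governance


def select_context(sources, today, max_age_days=180):
    """Keep only approved sources updated within the freshness window."""
    cutoff = today - timedelta(days=max_age_days)
    return [s for s in sources if s.approved and s.last_updated >= cutoff]


if __name__ == "__main__":
    sources = [
        ContextSource("payments-repo", "repo", date(2025, 5, 1), True),
        ContextSource("old-wiki", "document", date(2023, 1, 1), True),
        ContextSource("scratch-experiment", "experiment", date(2025, 5, 20), False),
    ]
    for s in select_context(sources, today=date(2025, 6, 1)):
        print(s.name)
```

In this example only the approved, recently updated repo survives; the stale wiki and the unapproved experiment never reach the model.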

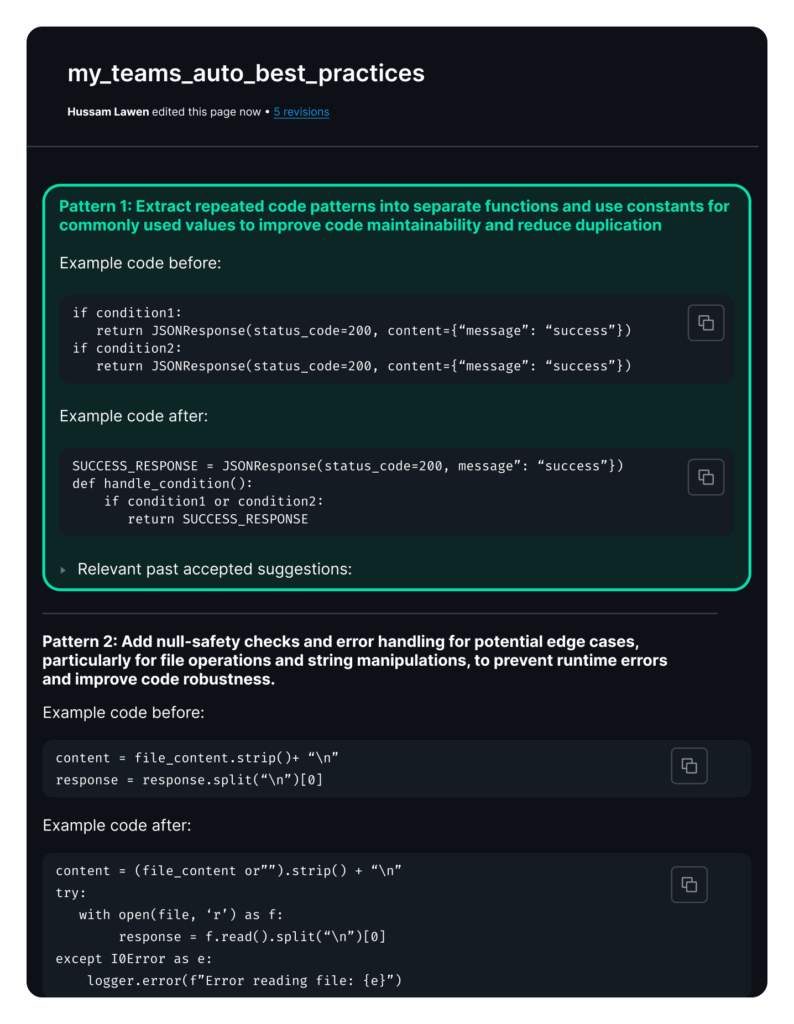

Close the loop

Every time a developer accepts, modifies, or rejects AI-generated code, it’s a valuable signal. The best systems capture these actions and use them to improve future outputs. Over time, this creates AI that doesn’t just generate code, it generates code that matches what your team actually ships.
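To make the feedback loop concrete, here is a minimal sketch of how those signals could be captured and summarized. The `FeedbackLog` class and the suggestion kinds are hypothetical; how the resulting rates would feed back into prompts, rules, or ranking is left open.

```python
from collections import Counter, defaultdict

VALID_ACTIONS = {"accepted", "modified", "rejected"}


class FeedbackLog:
    """Counts developer responses per kind of AI suggestion."""

    def __init__(self):
        self._counts = defaultdict(Counter)

    def record(self, suggestion_kind, action):
        if action not in VALID_ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        self._counts[suggestion_kind][action] += 1

    def acceptance_rate(self, suggestion_kind):
        """Share of suggestions accepted unchanged, or None if no data."""
        counts = self._counts.get(suggestion_kind)
        if not counts:
            return None
        return counts["accepted"] / sum(counts.values())


if __name__ == "__main__":
    log = FeedbackLog()
    for action in ["accepted", "accepted", "accepted", "rejected"]:
        log.record("add-null-check", action)
    print(log.acceptance_rate("add-null-check"))
```

A suggestion kind with a persistently low acceptance rate is a candidate for retuning or retiring, while a high rate suggests the output already matches what the team ships.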

Transform tribal knowledge into codified standards

In small teams, conventions can be enforced informally through habits or peer review. At enterprise scale, that breaks down. Governance provides the consistency layer: it not only suggests rules, but also tracks how often they’re applied, where exceptions occur, and which critical practices are ignored. Without that visibility, standards erode quietly. One missed feature flag becomes ten, logging conventions drift, and test coverage fragments across teams.
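The kind of visibility described here can be sketched as a small report over per-rule counters. The `RuleStats` fields, the `eroding_rules` helper, and the 10% threshold are assumptions chosen for illustration; the point is only that erosion becomes measurable once applications, exceptions, and ignored cases are counted.

```python
from dataclasses import dataclass


@dataclass
class RuleStats:
    rule: str
    critical: bool
    applied: int = 0      # times the rule was followed
    exceptions: int = 0   # times an approved exception was granted
    ignored: int = 0      # times the rule was skipped without approval

    @property
    def ignore_rate(self):
        total = self.applied + self.exceptions + self.ignored
        return self.ignored / total if total else 0.0


def eroding_rules(stats, threshold=0.1):
    """Names of critical rules ignored more often than the threshold."""
    return [s.rule for s in stats if s.critical and s.ignore_rate > threshold]


if __name__ == "__main__":
    stats = [
        RuleStats("feature-flag-required", critical=True, applied=8, ignored=2),
        RuleStats("structured-logging", critical=False, applied=1, ignored=9),
        RuleStats("tests-for-new-endpoints", critical=True, applied=20),
    ]
    print(eroding_rules(stats))
```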

Protocols, Models, and the Next Layer of Governance

As AI becomes infrastructure, governance must extend beyond the code to the AI systems themselves.

Model Context Protocol (MCP): power with responsibility

The Model Context Protocol (MCP) makes AI extensible. MCP servers let developers connect models to external services, APIs, and datasets, unlocking powerful workflows. But with that power comes risk. An unverified MCP server can introduce insecure data flows, leak sensitive information, or bypass enterprise policies. Without oversight, every developer may extend AI differently, creating a patchwork of integrations that is impossible to secure or standardize.

Governance here means treating MCP servers like dependencies:

- Maintaining an allowlist of trusted MCP servers

- Reviewing new MCP servers before adoption

- Controlling when and how custom MCP servers are permitted

Handled well, MCP governance doesn’t slow innovation; it channels it. Teams can extend AI safely, knowing they are building on sanctioned, secure foundations.
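The allowlist approach above can be sketched in a few lines of Python. Everything named here is hypothetical: the server names, the pinned versions, and the `review_servers` function are stand-ins, and the check is deliberately independent of any real MCP client or SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class McpServer:
    name: str
    version: str
    custom: bool = False  # built in-house rather than vetted centrally


# Hypothetical allowlist, pinned by (name, version) like a dependency lockfile.
ALLOWLIST = frozenset({("internal-docs", "1.2.0"), ("issue-tracker", "0.9.1")})


def review_servers(requested, allowlist=ALLOWLIST, allow_custom=False):
    """Split requested servers into (approved, needs_review)."""
    approved, needs_review = [], []
    for server in requested:
        on_allowlist = (server.name, server.version) in allowlist
        custom_ok = server.custom and allow_custom
        if on_allowlist or custom_ok:
            approved.append(server)
        else:
            needs_review.append(server)
    return approved, needs_review


if __name__ == "__main__":
    requested = [
        McpServer("internal-docs", "1.2.0"),
        McpServer("internal-docs", "2.0.0"),          # unpinned version
        McpServer("team-tool", "0.1.0", custom=True),
    ]
    approved, needs_review = review_servers(requested)
    print([s.name for s in approved], [s.name for s in needs_review])
```

Pinning by version as well as name mirrors how dependencies are usually managed: an upgrade to a trusted server still goes through review before it is adopted.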

Model governance

The same discipline applies to LLMs. With multiple models available, the question isn’t “can we use them?” but “which model is right for which task?” Not all LLMs are created equal. Smaller, faster models excel at structured tasks like code completion and syntax suggestions, while larger models better handle complex reasoning, architectural decisions, and nuanced refactoring. Some models might be off-limits entirely due to privacy or licensing concerns.

Much like standardizing a tech stack, organizations must define which models are approved, which are restricted, and how to balance accuracy, cost, and risk. This not only prevents waste and fragmentation, but also aligns AI usage with strategic priorities—ensuring the right tool is applied at the right time.
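A model policy of this kind can be written down as plain data. The sketch below is only an assumption about how such a policy might look: the task names and model identifiers are placeholders, not real products, and the mapping simply mirrors the small-versus-large split described above.

```python
# Hypothetical policy: task type -> approved default model.
APPROVED_MODELS = {
    "code_completion": "small-fast-model",
    "syntax_suggestion": "small-fast-model",
    "complex_reasoning": "large-reasoning-model",
    "architecture_review": "large-reasoning-model",
    "refactoring": "large-reasoning-model",
}

# Hypothetical models ruled out for privacy or licensing reasons.
RESTRICTED_MODELS = {"unvetted-external-model"}


def choose_model(task, requested=None):
    """Return the model to use for a task, enforcing the policy."""
    if task not in APPROVED_MODELS:
        raise ValueError(f"no policy defined for task: {task!r}")
    if requested is None:
        return APPROVED_MODELS[task]
    if requested in RESTRICTED_MODELS:
        raise PermissionError(f"model is restricted: {requested!r}")
    if requested not in APPROVED_MODELS.values():
        raise PermissionError(f"model is not approved: {requested!r}")
    return requested


if __name__ == "__main__":
    print(choose_model("code_completion"))
    print(choose_model("refactoring"))
```

Keeping the policy in one reviewed place means a change in cost, accuracy, or risk tolerance becomes a single edit instead of a hunt through every team's configuration.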

Context Is King: Building the “Second Brain”

If governance defines the rules and reviews enforce them, context is what makes the whole system work. Without it, even the best guardrails collapse. Qodo’s 2025 State of Code Quality Survey highlights the scale of the challenge: 65% of developers say AI misses context during refactoring, and 44% of those who report degraded code quality blame context gaps directly. It’s no surprise that 60% of MCP usage with agents is dedicated to context retrieval.

The most dangerous failures aren’t obvious crashes; they’re subtle misalignments that only surface in production. No understanding of your rate-limiting strategy. No awareness of a critical architectural decision made six months ago. No recognition that this particular service requires special handling for regulatory compliance.

Building an effective “second brain” for your AI—a continuously updated knowledge base of your codebase, architecture, standards, and decisions—isn’t optional. It’s the difference between AI that generates generic solutions and AI that produces code that actually fits your system.

The Path Forward: Quality as a First-Class Citizen

The organizations that will win with AI aren’t those generating the most code, they’re those generating the most trustworthy code. This requires treating code quality not as an afterthought, but as the foundation of AI adoption.

This is precisely the challenge Qodo was built to address. As the first agentic code quality platform, Qodo deploys specialized agents across the entire software development lifecycle to continuously improve code by automating review processes, ensuring compliance, enforcing standards, and embedding quality at every step. By eliminating the speed-versus-quality tradeoff, Qodo ensures that AI delivers results that actually scale.

The Bottom Line

Vibe coding was just the beginning. The real revolution happens when AI-generated code is indistinguishable from your best engineers’ work—not just in functionality, but in quality, maintainability, and alignment with your organization’s standards.

The question isn’t whether AI will transform software development—it already has. The question is whether your organization will harness it effectively or drown in the technical debt it can create.