Building the Verification Layer: How Implementing Code Standards Unlocks AI Code at Scale

The dream was simple: AI would make developers 10x more productive overnight. Ship code faster. Build features in half the time. Scale your team with AI-assisted coding.

The reality? It’s far messier and, actually, far more interesting.

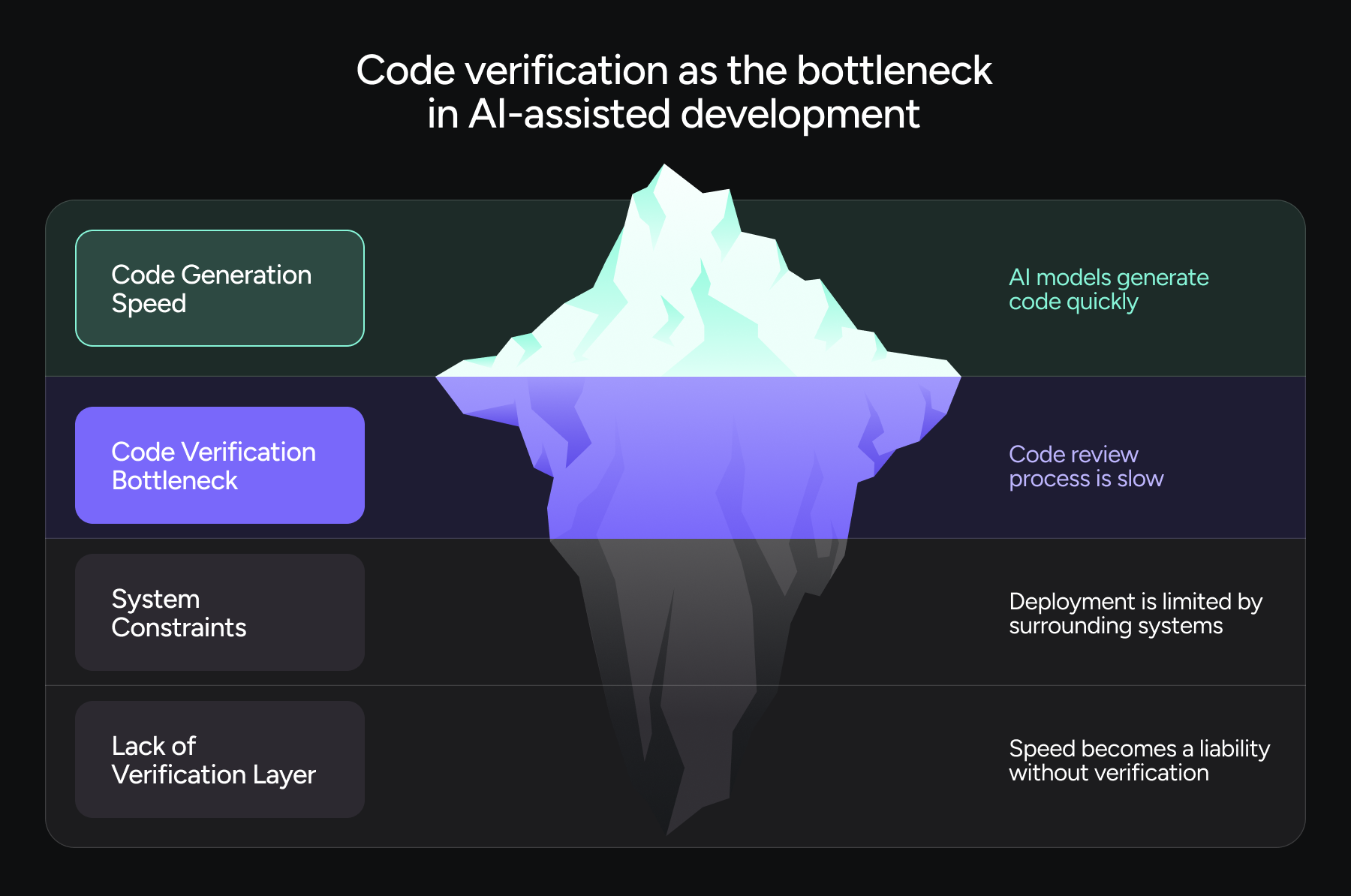

Last year, I watched the industry confront a compelling truth: the bottleneck was never code generation. It is code verification.

When you have AI models that can generate thousands of lines of code in minutes, but your team still reviews code one pull request at a time, you’ve just moved the bottleneck, not eliminated it. You’ve made developers faster at writing code, but you haven’t solved the systems and processes around them that constrain deployment. And more specifically, without a verification layer, speed becomes a liability.

The Vibe Coding Experiment Failed—And That’s Actually Good

By the spring of 2025, “vibe coding” was everywhere. The pitch was irresistible: prompt your AI, let it generate a feature, ship it. Trust the models. Trust the velocity.

Across enterprises, from Salesforce to Qodo to early-stage teams, the experiment played out the same way. Teams tried it and developers loved the speed, but then the cracks began to appear.

Developers would drop an AI agent into a massive codebase without proper context or onboarding, expecting magic. The AI would hallucinate dependencies. It would miss edge cases. It would generate code that was technically correct, but architecturally misaligned with the system it was joining. Code review became a nightmare: reviewers had to untangle the logic and understand what context the AI was working from in the first place.

Ben Stice, VP of Software Engineering at Salesforce Commerce Cloud, captured it perfectly in a recent industry panel: “Surgery is much harder than invention.” When you’re working in a large codebase—which is most enterprise work—you’re in surgery mode, not invention mode. Invention can be messy. Surgery cannot be messy, because one small mistake in production affects customers.

In my opinion, the fundamental problem wasn’t (and isn’t) AI itself. It was that we’d built all this speed without the infrastructure to deploy it safely.

This is where the conversation shifted. And it’s where the real opportunity lies.

The Verification Layer is the Multiplier

Here’s what leading engineering teams realized: to increase autonomy, you have to increase verification.

Dedy Kredo, Co-founder and Chief Product Officer at Qodo, framed it this way: “If you want to push the autonomy level higher, and you want to do more autonomous stuff, you also need to invest more in the verification layer. And in defining standards and figuring out ways to test and verify at scale.”

When you combine AI code generation with a robust verification layer, something changes. The AI doesn’t become less necessary—it becomes more useful. Developers spend less time worrying about whether the generated code is “right” and more time evaluating whether it’s aligned with your standards.

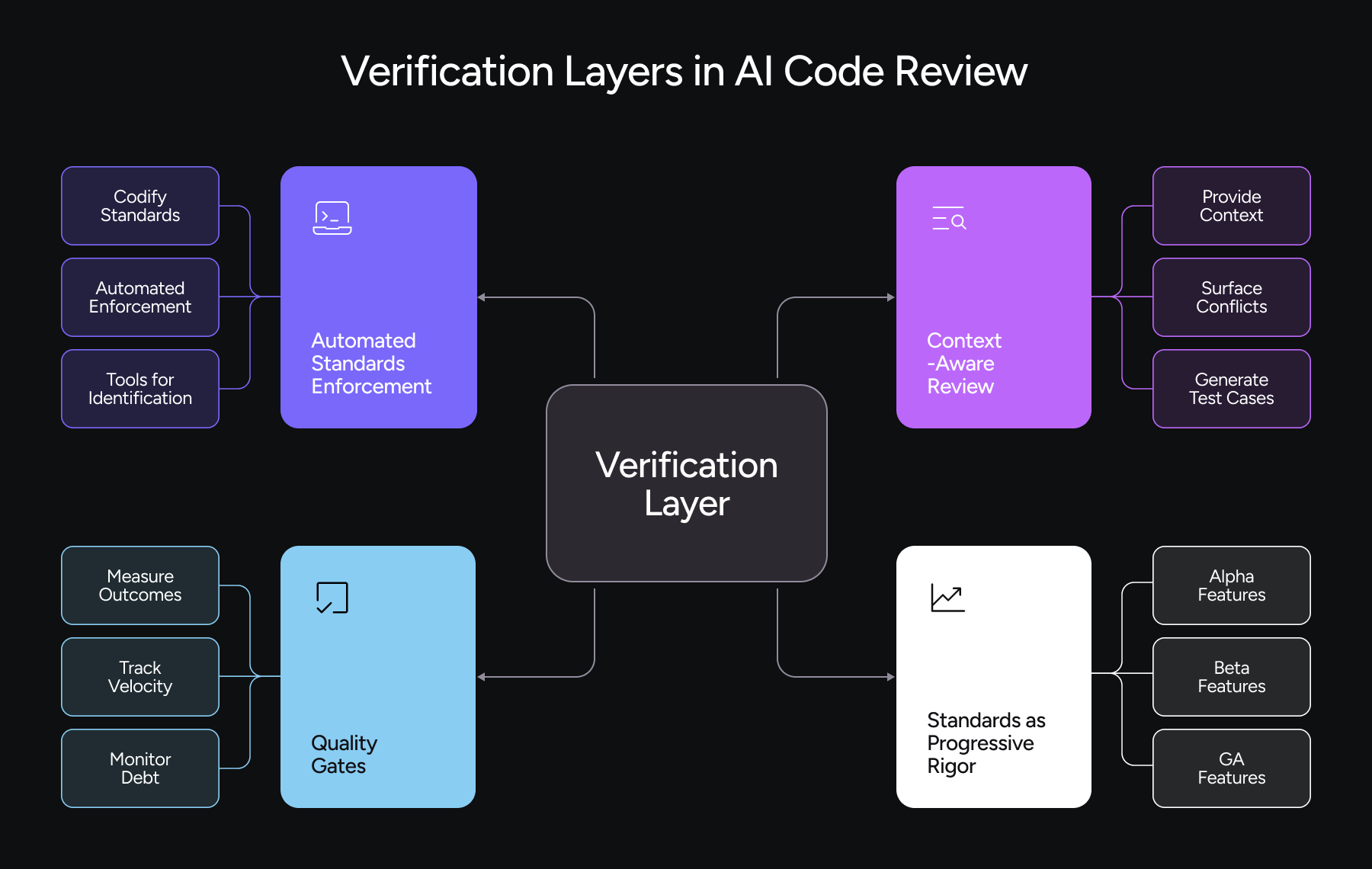

Let me break down what this actually looks like in practice:

1. Automated Standards Enforcement

The first layer is simple but powerful: codify your standards, then enforce them automatically.

This means:

- Defining organizational coding standards, architecture patterns, and security requirements—not just style guides, but meaningful architectural constraints

- Setting up automated enforcement in your CI/CD pipeline so that every PR (whether from humans or AI) gets checked against those standards before it can merge

- Using tools that can identify bugs, security vulnerabilities, and complexity issues at scale

The beauty here is that it scales. When a developer or an AI agent generates code, it immediately hits these gates. You’re not waiting for a human reviewer to catch that the code violates your organization’s caching strategy or security posture.
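To make "codify your standards, then enforce them automatically" concrete, here is a minimal sketch of a gate that a CI job could run on each changed file. The two rules, the `Rule` type, and the `check_source` and `gate` helpers are all hypothetical names I made up for illustration; a real team would encode its own standards, and would likely lean on an existing linter or static analyzer rather than raw regexes.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One codified standard: a name, a regex to flag, and advice for the author."""
    name: str
    pattern: str
    message: str


# Hypothetical example rules, not taken from any real organization.
RULES = [
    Rule("no-bare-except", r"^\s*except\s*:", "catch specific exceptions"),
    Rule("no-hardcoded-secret", r"(?i)(password|api_key)\s*=\s*['\"]",
         "load secrets from configuration"),
]


def check_source(text: str, rules=RULES) -> list[tuple[int, str, str]]:
    """Return (line_number, rule_name, message) for every violation found."""
    violations = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule in rules:
            if re.search(rule.pattern, line):
                violations.append((lineno, rule.name, rule.message))
    return violations


def gate(text: str) -> bool:
    """True means the change may merge; False means the gate blocks it."""
    return not check_source(text)
```

The same function runs whether the author was a person or an agent, which is the point: the gate does not care who wrote the line.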

Walmart’s approach is instructive here. They built a multistage validation process that checks code for accuracy, security compliance, and even lineage, before it reaches production. The crucial detail: “Humans are always in the loop,” but automation handles the heavy lifting of catching systemic issues first.

2. Context-Aware Review with AI Assistance

Here’s a problem with some current code review tools: they tend to treat every PR the same way.

AI-generated code requires different scrutiny than human-written code. It can be syntactically perfect while missing the broader context—team naming conventions, architectural patterns, business constraints that aren’t codified anywhere. Research from Qodo shows that 65% of developers cite missing context as the top issue when working with AI tools, even more often than hallucinations.

This is where the verification layer gets sophisticated. Instead of asking reviewers to evaluate whether the code works, you’re asking them to evaluate whether it’s aware of your system’s constraints, your team’s patterns, your architectural vision, and more.

Tools that power this layer do things like:

- Provide reviewers with both the generated code and the original requirement/prompt that triggered it

- Automatically surface context about the parts of the codebase the AI touched

- Flag potential conflicts with existing patterns or architectural decisions

- Generate targeted test cases for edge cases the AI might have missed
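One of these checks is easy to sketch: flagging imports that the project never declared, which is how a hallucinated dependency usually shows up in a diff. This is a simplified illustration using Python’s standard `ast` module. The function names and the idea of passing in a `declared` set are my assumptions; a real tool would read that set from the project’s dependency manifest and also account for the standard library and local packages.

```python
import ast


def imported_modules(source: str) -> set[str]:
    """Collect the top-level module names imported anywhere in the source."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports such as "from . import x"
            modules.add(node.module.split(".")[0])
    return modules


def unknown_imports(source: str, declared: set[str]) -> set[str]:
    """Imports that do not appear in the declared dependency set."""
    return imported_modules(source) - declared
```

Anything this returns is worth surfacing to the reviewer next to the diff, since it is either a new dependency that needs approval or a package that does not exist.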

3. Quality Gates that Enforce Outcomes, Not Just Metrics

This is where many teams stumble. They implement metrics—code coverage, cyclomatic complexity, defect density—but they optimize for the metric instead of the outcome.

Ben Stice hit on this as well: “[It’s about] Goodhart’s Law: when a measure becomes a target, it ceases to be a good measure.” You can move any metric if you’re willing to game it. What you actually need is to be “aggressively curious” about what the data is telling you.

For AI-generated code, this means:

- Measuring not just lines of code produced, but lines of code removed (because the best engineers deliver negative LOC by killing complexity and technical debt)

- Tracking the velocity of code reaching production safely, not just reaching review

- Monitoring technical debt accumulation specifically in AI-generated vs. human-written code

- Measuring review time reduction across the organization, not just per PR

Here’s a metric that matters: Can you increase deployment velocity while maintaining or improving code quality? If you’re shipping faster but quality is declining, you’ve just optimized for the wrong thing.
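As a rough sketch, that question can be written down as a comparison between two reporting periods. The `Period` fields and the `faster_without_regression` check below are my own assumed definitions, not a standard formula; in particular, how you attribute a production defect to a deployment is a judgment call your team has to make.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Period:
    deploys: int            # changes that reached production
    escaped_defects: int    # production defects traced back to those changes
    lines_added: int
    lines_removed: int

    @property
    def escape_rate(self) -> float:
        """Escaped defects per deploy (0.0 when nothing shipped)."""
        return self.escaped_defects / self.deploys if self.deploys else 0.0

    @property
    def net_loc(self) -> int:
        """Negative when the period removed more code than it added."""
        return self.lines_added - self.lines_removed


def faster_without_regression(before: Period, after: Period) -> bool:
    """True only if more changes shipped and the escape rate did not get worse."""
    return after.deploys > before.deploys and after.escape_rate <= before.escape_rate
```

A period that ships twice as much but also doubles its escape rate fails this check, which is exactly the "optimized for the wrong thing" case.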

4. Standards as Progressive Rigor

Here’s a subtle but important point: not all code needs the same level of rigor.

Qodo applies this through their alpha/beta/GA framework. An alpha feature might be experimented with by a small group of design partners with looser constraints. A beta feature is available to everyone but carries lower quality guarantees. GA (general availability) features must meet enterprise-grade standards—documentation, security, performance, everything.

This matters because it lets you move fast in experiments without compromising on production systems. Your AI can help you prototype quickly. But when code reaches production, the verification layer tightens.
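Here is one way progressive rigor could be expressed as configuration: each stage maps to the set of gates a change must pass. The stage names follow the alpha/beta/GA framing above, but the gate names and the `missing_gates` helper are hypothetical, and this is not a description of how Qodo actually implements it.

```python
from enum import Enum


class Stage(Enum):
    ALPHA = "alpha"
    BETA = "beta"
    GA = "ga"


# Hypothetical gate names; each stage requires everything the previous one does, plus more.
REQUIRED_GATES = {
    Stage.ALPHA: {"lint"},
    Stage.BETA: {"lint", "unit_tests", "security_scan"},
    Stage.GA: {"lint", "unit_tests", "security_scan", "docs", "performance"},
}


def missing_gates(stage: Stage, passed: set[str]) -> set[str]:
    """Gates still required before a change can ship at the given stage."""
    return REQUIRED_GATES[stage] - passed
```

The same change can be shippable as an alpha experiment and blocked for GA, without anyone having to argue about it in review.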

The parallel in development workflows: Yonatan Boguslavsky from Port notes that teams are using AI for planning via vibe coding—generating exploratory code to estimate task complexity—but they’re not shipping that code. They throw it away and start fresh with disciplined design. This is the right balance: use AI for speed and exploration, then apply rigor for safety.

What Moved the Needle in 2025

Let me zoom out for a moment. Across the panelists and research I reviewed, here’s what actually produced measurable improvements:

For teams that invested in verification layers, the results included:

- Code review time reduction (Walmart saw significant velocity improvements through automated validation)

- Defect detection earlier in the process (catching issues pre-merge rather than in production)

- Consistent application of standards across distributed teams

- Reduced cognitive load on reviewers (they’re not doing first-pass checking, they’re doing high-level architectural review)

For teams that tried to skip verification, the results included:

- Accumulation of technical debt (research from SonarSource shows 2024 was the first year AI-related code quality showed measurable decline at scale)

- Reviewer bottlenecks (paradoxically, faster code generation created slower deployment because review became the constraint)

- Security vulnerabilities in production (hallucinated dependencies, missed edge cases in critical paths)

- Team friction (developers don’t trust AI output, so they rewrite it anyway)

The data supports this. According to Qodo’s State of AI Code Quality report: among teams using AI for code review, code quality improvements jump to 81%. Without proper review infrastructure, quality improvements drop to 59%. The difference is the verification layer.

The Shift From “Move Fast and Break Things” to “Move Fast and Verify Things”

We’re living through an inversion of the lessons from the last decade.

Clinton Herget, Field CTO at Snyk, pointed out something that stuck with me: “We’re doing the opposite now. We’re saying the code is disposable. The actual important part of the development process is the set of prompts that define the functionality.” This is spec-driven development.

Fifteen years ago, the mantra was: “The code is the source of truth. Documentation is dead.” Now we’re saying: “The spec is the source of truth. The code is generated. Verification is the craft.”

For platform engineering teams and DevRel leaders (and yes, I’ve spent enough time in platform engineering communities to know), this is the frontier. The organizations excelling in 2026 won’t be the ones with the best code generators. They’ll be the ones with the best verification layers: the standards, the gates, and the human-in-the-loop processes that let them trust AI output enough to ship it fast.

What This Means for Your Team

If you’re evaluating AI coding tools in 2026, here’s what I’d actually look for:

- Does the tool integrate with your verification layer or just your IDE?

Code that ships fast but can’t integrate with your review process and CI/CD gates is useless. You need tools that understand your standards, your security requirements, and your quality gates—and that make it easy for reviewers to make decisions confidently.

- Can you define standards for your organization, and can the tool enforce them?

This is foundational. If you can’t codify what “good” looks like for your codebase and then automate the checking, you’re stuck doing manual review forever.

- Does the tool help you understand why it generated something, not just what it generated?

Context is everything. When an AI generates code, you need visibility into what it understood from the prompt, what it pulled from your codebase, and where it might be making assumptions. This is where tools that provide detailed feedback and reasoning win.

- Can you measure and iterate on your verification process?

You’re going to learn a lot as you deploy this. You need tools that give you metrics—review time, quality gate pass rates, defect escape rates—so you can see what’s working and adjust.
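If your tooling can export per-PR records, those three numbers take only a few lines to compute. The `PullRequest` fields and the `summarize` function are assumptions on my part about what such an export might contain; adjust the definitions to whatever your review and incident systems actually record.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class PullRequest:
    review_hours: float              # time from review requested to approval
    passed_gates_first_try: bool     # all automated gates green on the first run
    caused_production_defect: bool   # a later incident was traced to this PR


def summarize(prs: list[PullRequest]) -> dict[str, float]:
    """Median review time, first-try gate pass rate, and defect escape rate."""
    if not prs:
        raise ValueError("need at least one pull request")
    total = len(prs)
    return {
        "median_review_hours": median(p.review_hours for p in prs),
        "gate_pass_rate": sum(p.passed_gates_first_try for p in prs) / total,
        "defect_escape_rate": sum(p.caused_production_defect for p in prs) / total,
    }
```

Tracking these per month, rather than per PR, is what lets you see whether a change to your gates actually helped.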

The 10x Engineer is Still a Human

Let me end on this: The 10x engineer isn’t dead, but the definition has certainly changed.

It’s not the person who codes 10x faster. It’s the person who ships 10x faster while maintaining quality. And in 2026, that person isn’t coding alone. They’re orchestrating AI, setting standards, building verification layers, and making the high-level architectural decisions that keep the system coherent.

As Yonatan from Port said: “There are some parts in the code that you can develop 10x faster. But not every engineer now is a 10x engineer. That’s just not the case.”

The real 10x move is building the systems that let your entire team move at that speed safely.

That’s the verification layer. That’s what actually matters.