ChatGPT or FormGPT? – Which is the Best LLM Interface for generating tests?

Human communication is a fundamental aspect of our lives. From a very young age, babies learn to communicate in order to get their needs met. Babies are able to communicate their basic needs, such as hunger, thirst, and the need for comfort, through crying and other nonverbal cues. Toddlers learn to communicate as a way of exploring and making sense of the world around them. Through communication, they are able to ask questions, seek clarification, and learn new things.

In recent decades, online communication has become an increasingly important part of our daily lives. With the widespread adoption of the internet and the proliferation of digital devices, it is now possible for people to communicate with each other (as well as with computerized systems) from anywhere in the world. Many types of online communication exist, including chats, online forums, online form submissions, and others. Each type of communication has its unique features and capabilities and is suitable for different purposes and contexts.

With the introduction of ChatGPT in late 2022, people are expected to start communicating with a new type of computerized system, large language models (LLMs), in order to seek information and get tasks done. It is anticipated that many companies will develop products that make use of these advanced chatbot capabilities. The question that comes to mind is then –

What is the most effective way for humans and LLMs to interact?

There are some differences between human-to-human interaction and human-LLM interaction. While human communication includes exchanging information, thoughts, and feelings through verbal and nonverbal means, interacting with LLMs is mostly about exchanging information.

Also, human communication is a complex and multifaceted process involving language, gestures, facial expressions, and other nonverbal cues to convey meaning; human-LLM interaction currently revolves solely around language (mainly text interactions).

In general, effective communication involves listening actively, expressing oneself clearly and concisely, and adapting to different communication styles and contexts.

How should we adapt our communication style when talking to an LLM such as ChatGPT?

There is no single answer to this question. It differs from one use case to another, but there are also some commonalities. Here are some guidelines:

- Communication sessions – Email and online forums, relics of a time when people were not always online, are probably not a good choice. But the online forum’s thread concept can be borrowed when the user wishes to distinguish between different sessions or to let several users collaborate on the same session. ChatGPT’s interface, for instance, includes a session bar alongside its chat interface to keep the history of sessions.

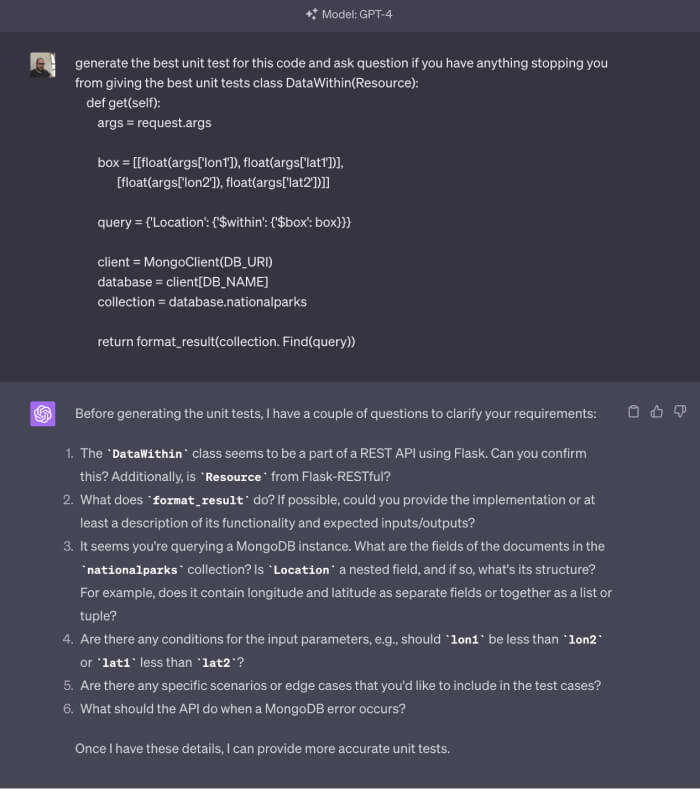

- LLMs need context – Human acquaintances carry large amounts of context into each conversation. Even strangers who strike up a conversation have some context, such as the location of the conversation, their clothes, etc. Currently, LLMs begin a new session/conversation without any conversation-specific context and therefore need to be given as much of it as possible. The user can try to supply all the context at once, but structuring the conversation as an interactive chat can help build the context gradually, through a series of coarse-to-fine prompts, in order to get the best results. In some scenarios, such as code generation, gathering context isn’t a trivial task for the user to perform, and hence a background process is needed to supply the context to the LLM. In the future, it is not unreasonable to assume that our LLMs will carry context over from past sessions, saving the need to restate common context such as our coding style. Models may also have permanent access to ‘context’ assets such as our code repositories, documentation, social network activities, etc. Chatbots also have an advantage over real-life conversations: a spoken conversation fades quickly (as time passes, we can no longer reconstruct or recall the exact information from it), whereas online chat sessions can be saved and restored indefinitely.

- Are we exploring or exploiting? As in human conversations, some conversations are more practical than others. A person approaching an information booth at the rail station aims to obtain the information as fast as possible (i.e., with the minimum number of questions), whereas two individuals at a cocktail party aren’t aiming for a specific informative goal. Two collaborating artists, for example, aren’t aiming at a specific creative outcome but rather at a fruitful collaboration. While they all strive for effective communication, the structure of the information exchange is different. The same applies to human-LLM interactions; some conversations focus on a specific outcome, while others don’t. While chat allows for freer, unstructured communication, online forms can structure and limit the conversation for specific, tailored use cases, lowering the number of interactions needed to get results. Online forms also spare the user the need for prompt engineering know-how (because the form itself runs the best prompts for the task in the background).

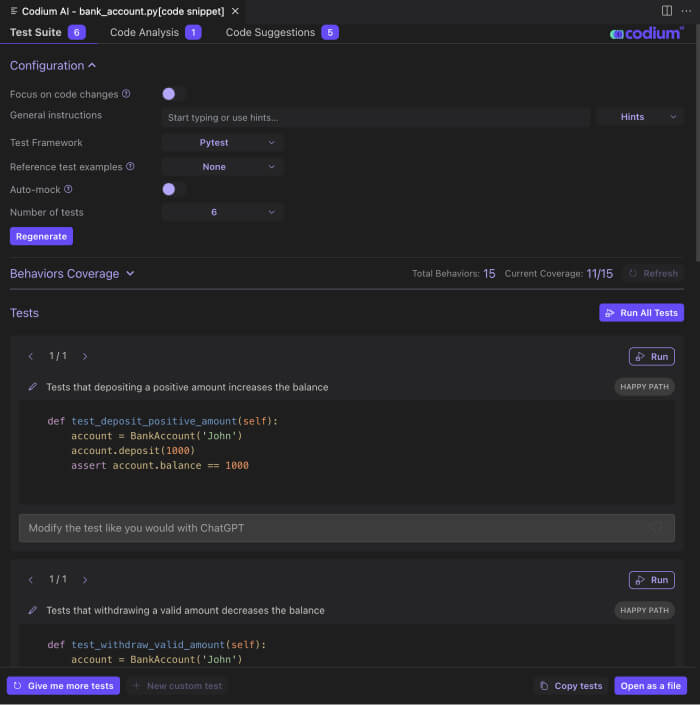
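
To illustrate how a form can spare the user any prompt engineering, here is a minimal sketch in Python. The `TestRequestForm` class, its fields, the `build_prompt` function, and the template wording are all hypothetical and chosen only for illustration; a real product would tune its own prompt and send the result to whichever LLM it uses.

```python
from dataclasses import dataclass


@dataclass
class TestRequestForm:
    """Hypothetical form fields a user fills in instead of writing a prompt."""
    function_name: str
    source_code: str
    framework: str = "pytest"
    focus: str = "edge cases"


# Assumed template: the prompt wording lives here, hidden from the user.
PROMPT_TEMPLATE = (
    "You are generating {framework} tests.\n"
    "Target function: {function_name}\n"
    "Focus on: {focus}\n"
    "Source code:\n{source_code}\n"
    "Return only the test code."
)


def build_prompt(form: TestRequestForm) -> str:
    """Turn the structured form into the text that would be sent to an LLM."""
    return PROMPT_TEMPLATE.format(
        framework=form.framework,
        function_name=form.function_name,
        focus=form.focus,
        source_code=form.source_code,
    )


form = TestRequestForm(
    function_name="parse_date",
    source_code="def parse_date(s): ...",
)
prompt = build_prompt(form)
print(prompt)
```

The user only chooses values for a few fields; the structure and phrasing of the prompt stay fixed, which is what limits the conversation to the tailored use case.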

Interacting with a computerized system to generate test code is a practical type of conversation and hence calls for a communication style suited to it. For some end goals, using predetermined forms is more efficient; for others, an open-ended, flexible chat is more efficient. Collecting context will always be an essential piece of the puzzle in creating high-quality tests, and a basic requirement for any such system. Developers will never have the time or the will to (a) manually collect all the needed context and (b) optimize that context to fit the limited token budget or to reduce costs (developers may even find it challenging to know which code context to prefer over another).
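
One simple way to picture the budget problem is a greedy selection: rank candidate context snippets by relevance and keep adding them until the token budget is spent. The sketch below is only an illustration under stated assumptions. The `Snippet` class, the relevance scores, and the `select_context` function are hypothetical, and `estimate_tokens` counts whitespace-separated words as a rough stand-in for a real tokenizer, which would give different numbers.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    """A hypothetical piece of code context with an assumed relevance score."""
    name: str
    text: str
    relevance: float


def estimate_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word.
    # Real LLM tokenizers split text differently.
    return len(text.split())


def select_context(snippets: list[Snippet], budget: int) -> list[Snippet]:
    """Greedily keep the most relevant snippets that still fit in the budget."""
    chosen = []
    remaining = budget
    for snippet in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = estimate_tokens(snippet.text)
        if cost <= remaining:
            chosen.append(snippet)
            remaining -= cost
    return chosen


candidates = [
    Snippet("function_under_test", "def add(a, b): return a + b", 0.9),
    Snippet("helper_module", "import math and many other helper definitions here", 0.8),
    Snippet("docstring", "Adds two numbers", 0.5),
]
selected = select_context(candidates, budget=10)
print([s.name for s in selected])
```

Greedy selection is not guaranteed to make the best use of the budget, but it shows the kind of trade-off a background process has to make on the developer's behalf.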

We believe there’s more to communication than just humans asking questions and the LLM producing information (or requesting tasks to be done and the LLM creating something for them). Effective communication also depends on the LLM’s ability to recognize that it hasn’t accumulated enough information or context to achieve the goal, and to ask directed questions that lead to the expected information or the completed task.
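
As a rough, application-side approximation of this behavior, a system could check which pieces of context are still missing and turn each gap into a question before attempting to generate anything. The sketch below is not how an LLM itself decides what to ask; the `REQUIRED_CONTEXT` checklist, its keys, and the question wording are hypothetical.

```python
# Hypothetical checklist: each required context item maps to the question
# the system would ask if that item is missing.
REQUIRED_CONTEXT = {
    "target_code": "Which function or class should the tests cover?",
    "framework": "Which test framework does the project use?",
    "expected_behavior": "What behavior should the tests verify?",
}


def clarifying_questions(context: dict[str, str]) -> list[str]:
    """Return one question per required context item that is missing or blank."""
    return [
        question
        for key, question in REQUIRED_CONTEXT.items()
        if not context.get(key, "").strip()
    ]


partial = {"target_code": "def add(a, b): return a + b", "framework": " "}
questions = clarifying_questions(partial)
for question in questions:
    print(question)
```

Only when the returned list is empty would the system proceed to the generation step.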

What do you think can further improve human-LLM communication?