Claude Code vs Cursor: Deep Comparison for Dev Teams [2025]

TL;DR

- Claude Code excels in terminal and multi-step workflows with consistent 200k token context and deep repo reasoning.

- Cursor offers a fast, GUI-first IDE with an interface similar to VS Code. Normal mode provides a 128K-token context and Max Mode provides 200K. In practice, the usable capacity can be lower, because Cursor may shorten the input or drop older context to keep responses fast.

- Cursor supports three primary Agent modes: Ask, Manual, and Agent, with background execution support for parallel workflows.

- Claude Code’s context window is more reliable for large codebases, offering a true 200k-token capacity that is ideal for CLI workflows, whereas Cursor may reduce its effective window to maintain performance.

- Claude Code is usage-based ($20-$100/month for model access), while Cursor has a free plan plus flat paid tiers ($20-$200/month) with usage caps on premium models.

- If you’re looking for Git-native prompting with team-wide context, Qodo is a strong choice. It supports /review, /ask, /implement, and Retrieval-Augmented Generation (RAG)-backed suggestions aligned with your repo structure, making it ideal for enterprise workflows and automated PR handling.

Developers have debated at length how well Cursor and Claude Code serve their needs. As a Senior Engineer, I have evaluated both tools thoroughly, and here is what each brings to the table.

On the one hand, Claude Code is a terminal-first AI agent built to understand and manipulate your entire codebase deeply. According to Anthropic’s documentation, it “lives in your terminal, understands your codebase, and helps you code faster through natural language commands.”

Practically speaking, I can map project structure, triage issues, generate PRs, execute tests, and commit changes without leaving the shell. Plus, IDE integrations mean I’m not locked into any specific environment.

Meanwhile, Cursor presents itself as a fully featured AI-augmented IDE, forked from VS Code, offering intuitive code completion, IDE-integrated actions, and smart refactoring within a familiar GUI. It supports frontier models, including Claude 4 Sonnet and Opus, within the editor and offers advanced controls such as Cursor Rules (project-scoped guidelines that influence how the AI responds) and custom documentation injection to keep suggestions aligned with your codebase and conventions.

But what do developers say? On Reddit, one seasoned developer championed Claude Code’s CLI flexibility for remote setups and real‑world tasks beyond IDE scope.

But is this correct? Which one is better for code generation and modification? It depends on your workflow.

In this blog, I’ll explore how each tool performs across common senior-developer scenarios, from project onboarding and refactoring to CI/CD automation and team collaboration, helping teams choose the right fit without oversimplifying their unique needs.

Overview of the Tools

Before the feature-by-feature comparison, let’s review each tool and its key features, starting with Claude Code:

Claude Code

Claude Code is Anthropic’s terminal-first AI coding assistant. It uses agentic search to scan, map, and interpret your entire project without requiring manual file selection. Because it runs locally, it can handle everything from natural-language code modifications to documentation generation, test execution, and CI/CD tasks, all from the CLI.

Key Features:

- Full codebase context: Terminal-based agents can navigate files and branches, and run tests or deployments across environments. It is ideal for headless servers and remote workflows.

- Minimal friction in shell workflows: Enables high-level operations like “@ file: run integration tests, then fix errors” without leaving your terminal.

- Powerful reasoning models: Utilizes Sonnet and Opus models; Opus 4 can handle long-running refactors and complex logic tasks.

Cursor

Cursor is a standalone, AI-augmented IDE forked from VS Code. It offers natural-language prompts, deep codebase querying, smart refactoring, and inline autocomplete, combining GUI convenience with agentic code assistance.

Key Features:

- Rich editor experience: Familiar VS Code environment with tab-based multiline completions and smart code rewrites.

- Context-aware agents: Users can inject custom documentation or “Cursor Rules” for project-specific behavior.

- Privacy and enterprise readiness: SOC 2 certified, with privacy mode and team/SAML support.

Feature-by-Feature Comparison: Claude Code vs Cursor

With the overview covered, let’s move on to the feature comparison to see which tool suits your needs. These evaluations reflect my own experience, and the right choice ultimately depends on your preferences.

I’ve included a side-by-side comparison table of Claude Code, Cursor, and Qodo across key features to simplify your evaluation. It gives a quick overview of each tool’s strengths. If you’re short on time, it’s a helpful scan:

| Feature | Claude Code | Cursor | Qodo |
| --- | --- | --- | --- |
| Interface | Terminal-first | VS Code-based IDE | Git-native CLI + optional IDE |
| Context Handling | 200k tokens (reliably exposed) | Max Mode: up to 200k (often 70k–120k usable) | RAG-based on files, diffs, commits |
| Prompting Style | Natural-language CLI prompts | Inline + chat (Cmd+K, Cmd+I), Ask/Manual/Agent modes | Git-native commands: /review, /implement, /ask |
| Instruction Following | Strong multi-step reasoning, vague prompts supported | Precise with scoped prompts; planning supported | Config-driven + RAG context enables reliable outputs |
| Automation & Agents | Parallel agents, Task Tool, .claude scripts | Agent Mode + MCP chaining, background execution | Custom agents via .toml, chained Git workflows |
| Customization | Prompt files, multi-dir access, CLAUDE.md | Cursor Rules, doc injection, model switch | .qodo/config.toml, best practices, agent logic |
| Model Support | Anthropic (Sonnet, Opus) | Claude, GPT-4.1, Gemini (managed or BYOK) | Anthropic, OpenAI, Gemini (via config) |
| IDE Integration | Supports VS Code and JetBrains via CLI | Native IDE with autocomplete, diffing, BugBot | VS Code extension; works via PR comments (Qodo Merge) |
| Pricing | Usage-based ($20–$100/month) | Flat tiers ($20–$200/month), model metering | Developer: $0/month for 250 credits; Teams: $30/month |

Context Window (Token Capacity)

Claude Code uses Anthropic’s Claude models (Opus, Sonnet, etc.) with a 200K token context window available through API and CLI agents. This allows it to handle large codebases, extended conversations, and sizable files without losing context.

Anthropic explains that Claude’s models support extended reasoning, also known as “thinking,” during a response. These internal reasoning steps can be lengthy and complex. However, Anthropic’s documentation clarifies that these internal “thinking” blocks are automatically removed from the conversation history before being used as context for future turns, ensuring the full context window remains available for user-visible messages and inputs.
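To make the idea of dropping earlier "thinking" blocks concrete, here is a minimal sketch. The `Block` and `Turn` shapes are simplified assumptions for illustration, not the official SDK types, and the character count is only a rough stand-in for real token counting. Anthropic's platform handles this automatically; the code only illustrates the accounting.

```typescript
// Hypothetical, simplified message shapes (not the official SDK types).
type Block =
  | { type: "text"; text: string }
  | { type: "thinking"; thinking: string };

interface Turn {
  role: "user" | "assistant";
  content: Block[];
}

// Drop "thinking" blocks from earlier turns so only visible content is carried forward.
function stripThinking(turns: Turn[]): Turn[] {
  return turns.map((turn) => ({
    role: turn.role,
    content: turn.content.filter((block) => block.type !== "thinking"),
  }));
}

// Rough size estimate in characters (not real tokens), just to show the effect.
function totalChars(turns: Turn[]): number {
  let total = 0;
  for (const turn of turns) {
    for (const block of turn.content) {
      total += block.type === "text" ? block.text.length : block.thinking.length;
    }
  }
  return total;
}

const earlierTurns: Turn[] = [
  { role: "user", content: [{ type: "text", text: "Rename foo to bar." }] },
  {
    role: "assistant",
    content: [
      { type: "thinking", thinking: "Search usages of foo, then update imports..." },
      { type: "text", text: "Renamed foo to bar in 3 files." },
    ],
  },
];

// What would be carried into the next turn under this simplified model.
const carried = stripThinking(earlierTurns);
```

Comparing `totalChars(earlierTurns)` with `totalChars(carried)` shows how much room the removed reasoning would otherwise have taken.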

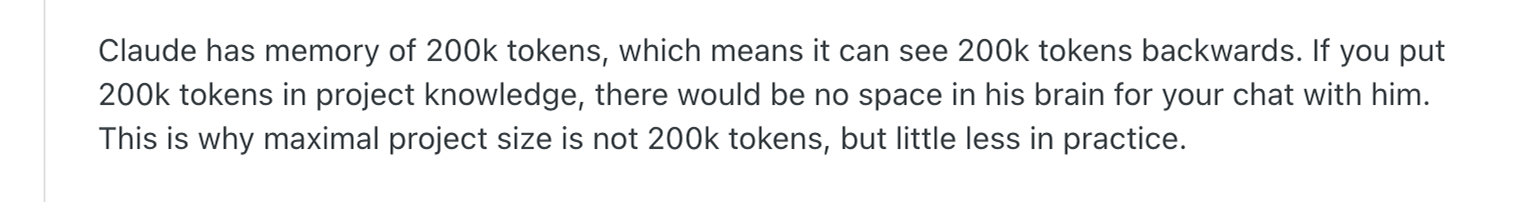

Real‑world note from Reddit:

A user on r/ClaudeAI shared that they had uploaded a 34K document, yet Claude reported the knowledge size as 70% full, and asked how that could be if the limit is 200k. Another user replied in the thread.

This highlights practical limits: near saturation, new messages or responses may displace earlier context, even though the nominal window is still 200k.

Cursor, by contrast, manages context automatically. It limits chat sessions to around 20,000 tokens by default and inline commands (like Cmd+K) to about 10,000 tokens, ensuring speed and low latency.

Max Mode for Expanded Context

Cursor offers a “Max Mode” setting that provides higher token capacity. Normally, this extends support to the model’s full context window; models such as Claude 4 Sonnet/Opus can access up to 200k tokens in Max Mode.

Cursor’s Models documentation confirms:

- Normal Mode for Claude 4 Sonnet: 128k tokens

- Max Mode for the same model: 200k tokens

However, in my experience, Max Mode comes with trade-offs. Cursor’s official documentation notes that enabling full context windows can be slower and more expensive, especially when working with large models like Claude Opus, Gemini 2.5 Pro, or GPT‑4.1.

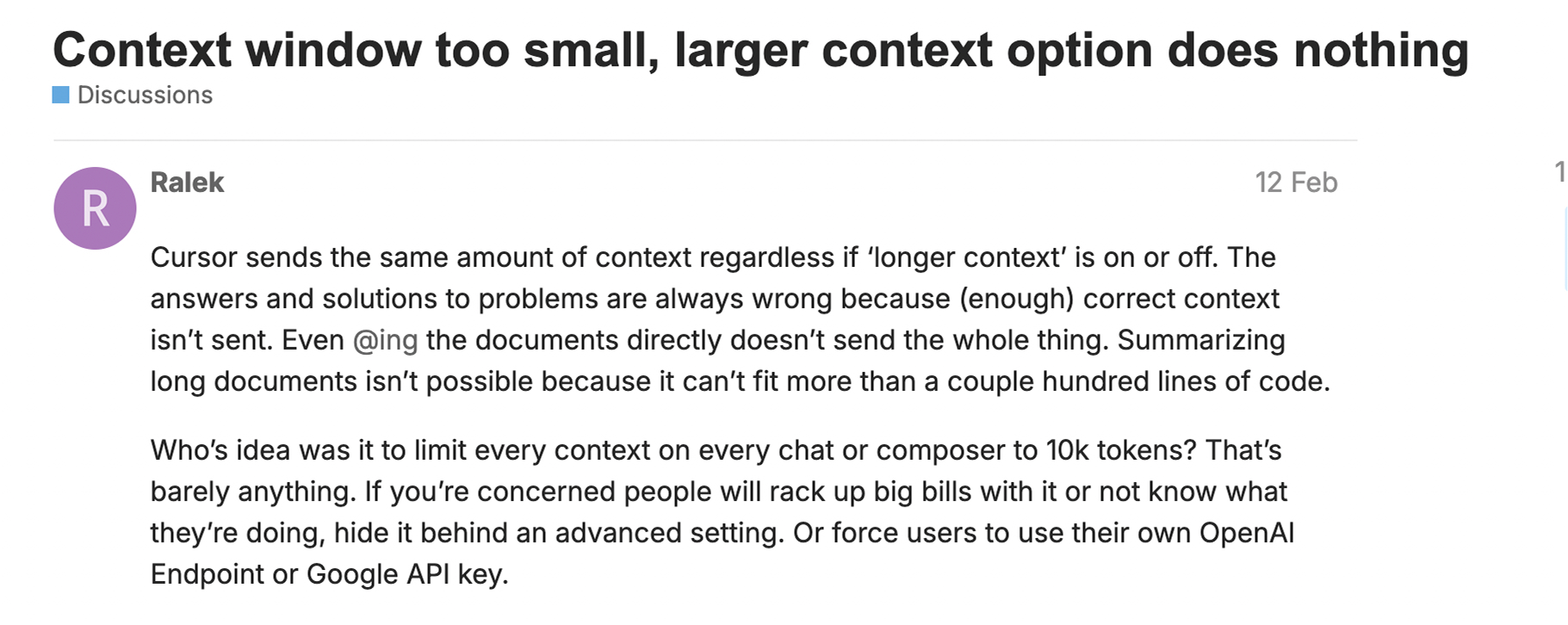

Despite the 200k token claim, developers have reported on Cursor’s community forum that usable context often falls short, sometimes limited to 70k–120k tokens in practice. This is likely due to internal truncation, performance safeguards, or cost controls applied silently during execution.

In simple terms, Cursor may automatically shorten the context it sends to the model by trimming older files or deprioritizing less relevant content to maintain responsiveness, reduce latency, and manage API costs. So, even though the model supports 200k tokens in Max Mode, the full window isn’t always used.
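The kind of trimming described above can be sketched as a simple "newest first" token budget. This is an illustration only: Cursor's actual truncation logic is not public, and the `ContextItem` shape, the labels, and the token counts are all hypothetical.

```typescript
interface ContextItem {
  label: string;  // e.g. a file path or chat message id (illustrative)
  tokens: number; // pre-computed token count for this item
}

// Keep the newest items that fit in the budget; older ones are dropped first.
function trimToBudget(items: ContextItem[], budget: number): ContextItem[] {
  const kept: ContextItem[] = [];
  let used = 0;
  // Walk from newest (end of the array) to oldest.
  for (let i = items.length - 1; i >= 0; i--) {
    const item = items[i];
    if (item === undefined) continue;
    if (used + item.tokens > budget) break;
    kept.unshift(item); // preserve the original chronological order
    used += item.tokens;
  }
  return kept;
}

const sessionItems: ContextItem[] = [
  { label: "old-file.ts", tokens: 90000 },
  { label: "api.ts", tokens: 60000 },
  { label: "latest-chat", tokens: 40000 },
];

// With a 128k budget, "api.ts" and "latest-chat" fit (100,000 tokens in total);
// adding "old-file.ts" would exceed the budget, so it is dropped.
const sent = trimToBudget(sessionItems, 128000);
```

Under this model, the nominal window is unchanged, but anything older than the cut-off never reaches the model, which matches the "falls short in practice" reports.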

Who Wins?

Claude Code provides a more dependable and explicit 200K-token context window, particularly valuable for CLI-based workflows and multi-file reasoning. While Cursor offers Max Mode for expanded context, practical usage often falls short of the theoretical 200K limit, making it less consistent for large-scale developer tasks.

Integration & Interface

Now, let’s compare the integration and interface of Claude Code vs Cursor.

The most significant difference is that Claude Code is CLI-first and editor-agnostic, while Cursor is a full-fledged IDE built on top of VS Code. This fundamentally shapes how developers interact with each tool.

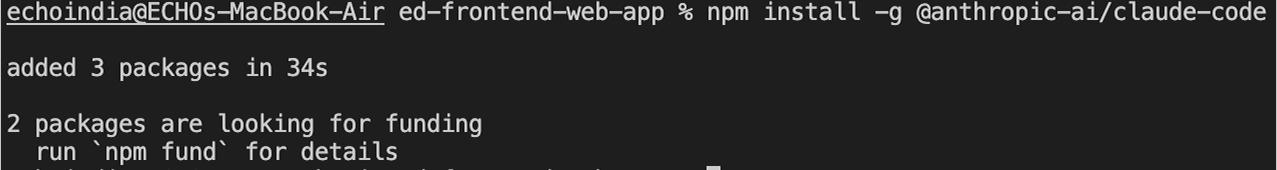

Claude Code runs entirely in the terminal, giving it broad flexibility across environments, from local development to remote servers and CI pipelines. To get started, you need to install it via the command:

npm install -g @anthropic-ai/claude-code

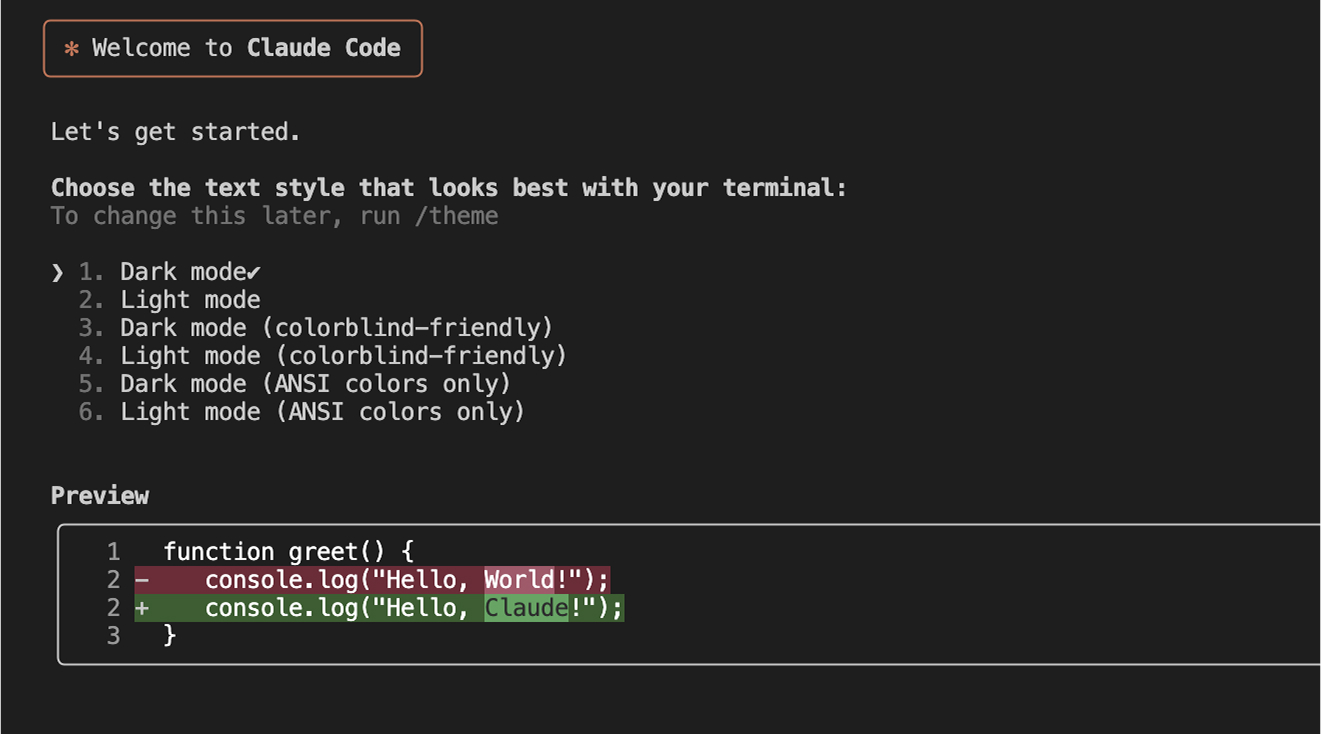

After running the command, your terminal will look like this:

Now, you can get started with Claude Code by running the command claude.

You can invoke it with commands like claude -p to perform actions such as editing files, running tests, or committing code. It supports shell piping and scripting and can be embedded directly into build workflows. For example, I ran this command:

claude -p "Run integration tests, fix failures, and explain the changes."

This executed the tests, applied the relevant fixes, and returned a structured explanation, all within the shell session. Claude Code also supports multi-directory context, custom prompt files, and .claude project folders for consistent configuration.

On the other hand, I found Cursor to be a fully featured IDE that integrates AI into the familiar VS Code environment. Since it’s a fork of VS Code, I didn’t have to adjust to a new workflow; it supports everything I rely on daily: tabbed file editing, built-in terminal, Git integration, debugging tools, and all the extensions I already use.

With Cursor, I can trigger inline completions with Cmd+K, chat in the side pane using Cmd+I, or use Agent Mode to automatically plan and apply multi-step changes. So, it’s designed for developers who want AI help without leaving the editor.

Cursor includes features like diff previews, versioned checkpoints, and an integrated terminal, all supporting a smooth, GUI-driven workflow. It has Bug Bot, a built-in tool that reviews files or projects using AI during the coding process. Since Cursor is a fork of VS Code, I could import my existing extensions, themes, and keybindings using the built-in VS Code migration tool, which made the transition frictionless.

Who Wins?

No one wins here. Both are very different, and your choice depends heavily on whether you prefer terminal-first workflows or a GUI-centric coding experience. Claude Code excels in automation, scripting, and multi-environment workflows, while Cursor is tailored for interactive, in-editor development with visual feedback and agent assistance.

AI Prompting and Instruction Following

Regarding how well the tools understand and follow natural language instructions, Claude Code and Cursor take fundamentally different approaches, shaped by their underlying design philosophy and interfaces.

Claude Code is built on Anthropic’s Claude models (Sonnet and Opus), which are known for strong language understanding and multi-turn reasoning. This gives it a major advantage when handling open-ended prompts like:

claude -p "Refactor this module to use async/await, remove duplication, and improve readability."

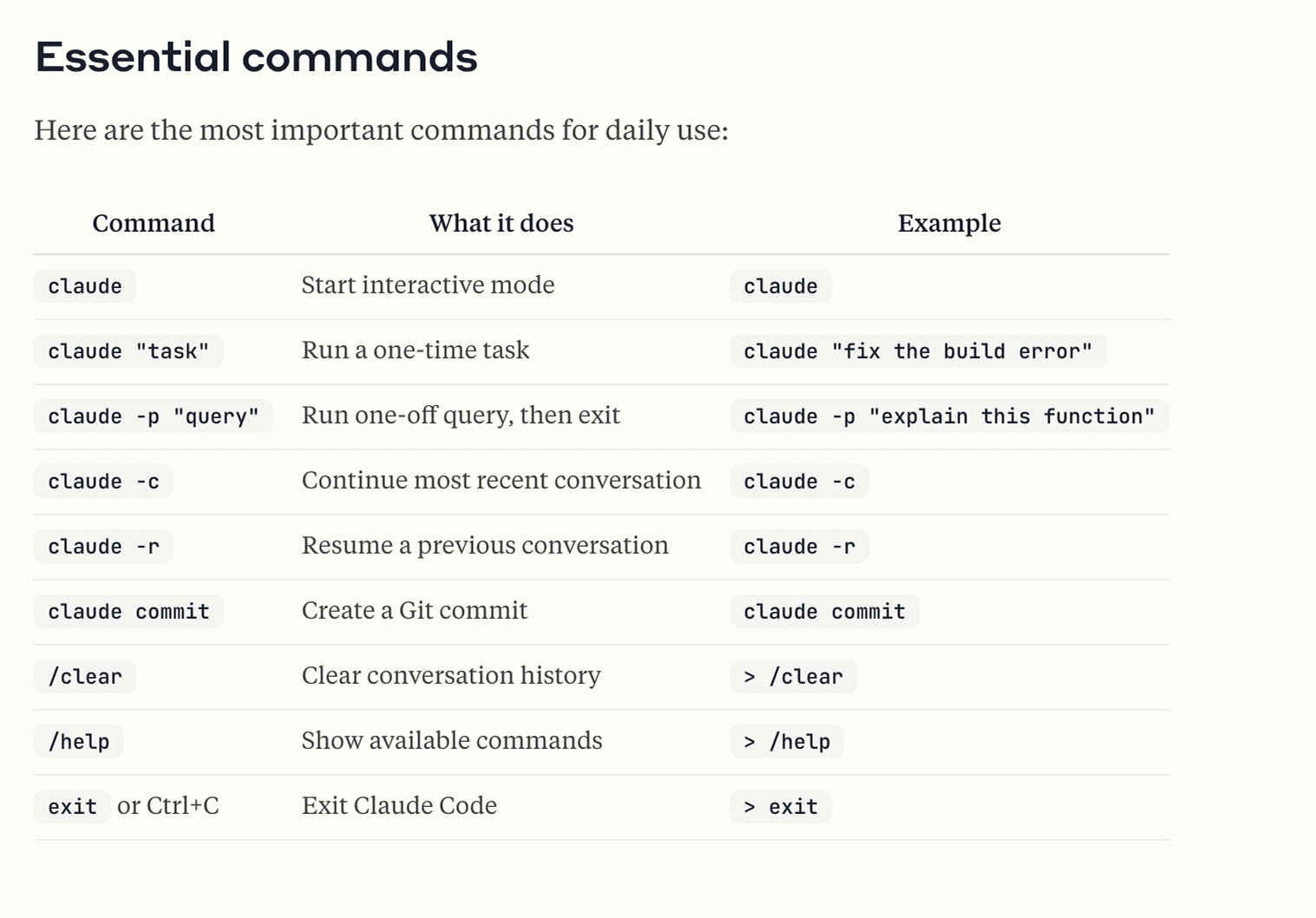

There are several commands that you can use for Claude Code. I have shared a list of them in the image below:

Conversely, Cursor’s documentation describes three modes tailored to developer prompts (Ask, Manual, and Agent), alongside inline edits and background agents:

- Ask mode: Ideal for planning tasks; Cursor prompts the AI to break down the task, clarify ambiguities, and propose steps.

- Manual mode: Executes highly precise user instructions without exploratory autosuggestions.

- Inline/Cmd‑K: Best for single-file edits with natural-language prompts like “Refactor this to async/await.”

- Background: Runs agents in the background, so parallel workflows can proceed while you keep working.

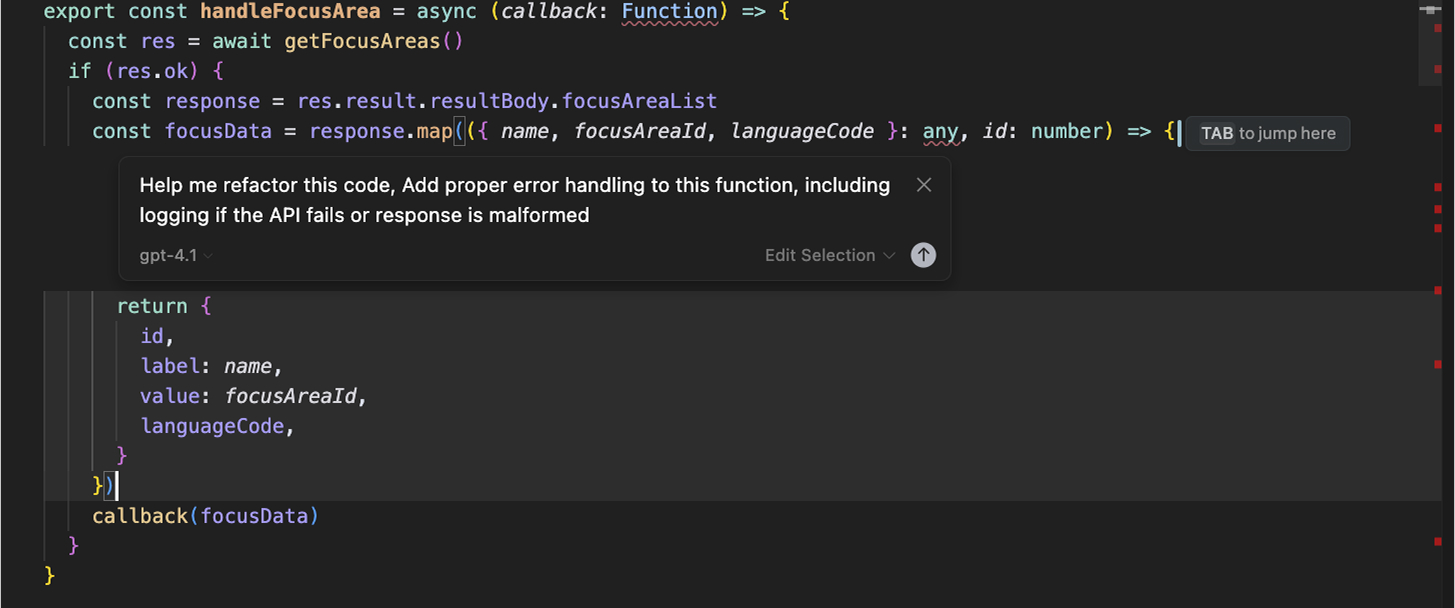

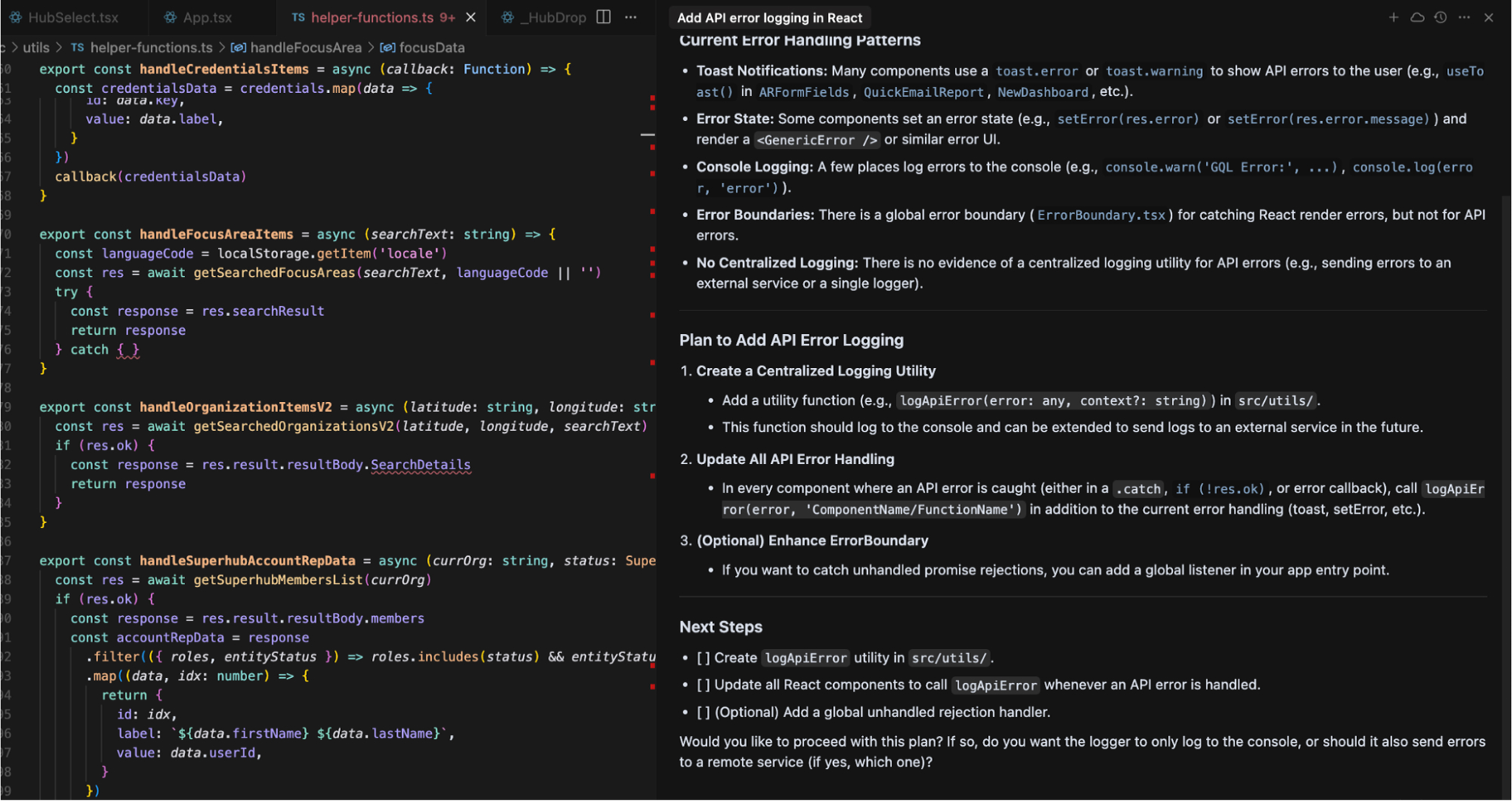

For example, in the image below, I have a helper function that asynchronously fetches a list of focus areas, transforms each entry into a UI-friendly format, and passes the result to a provided callback.

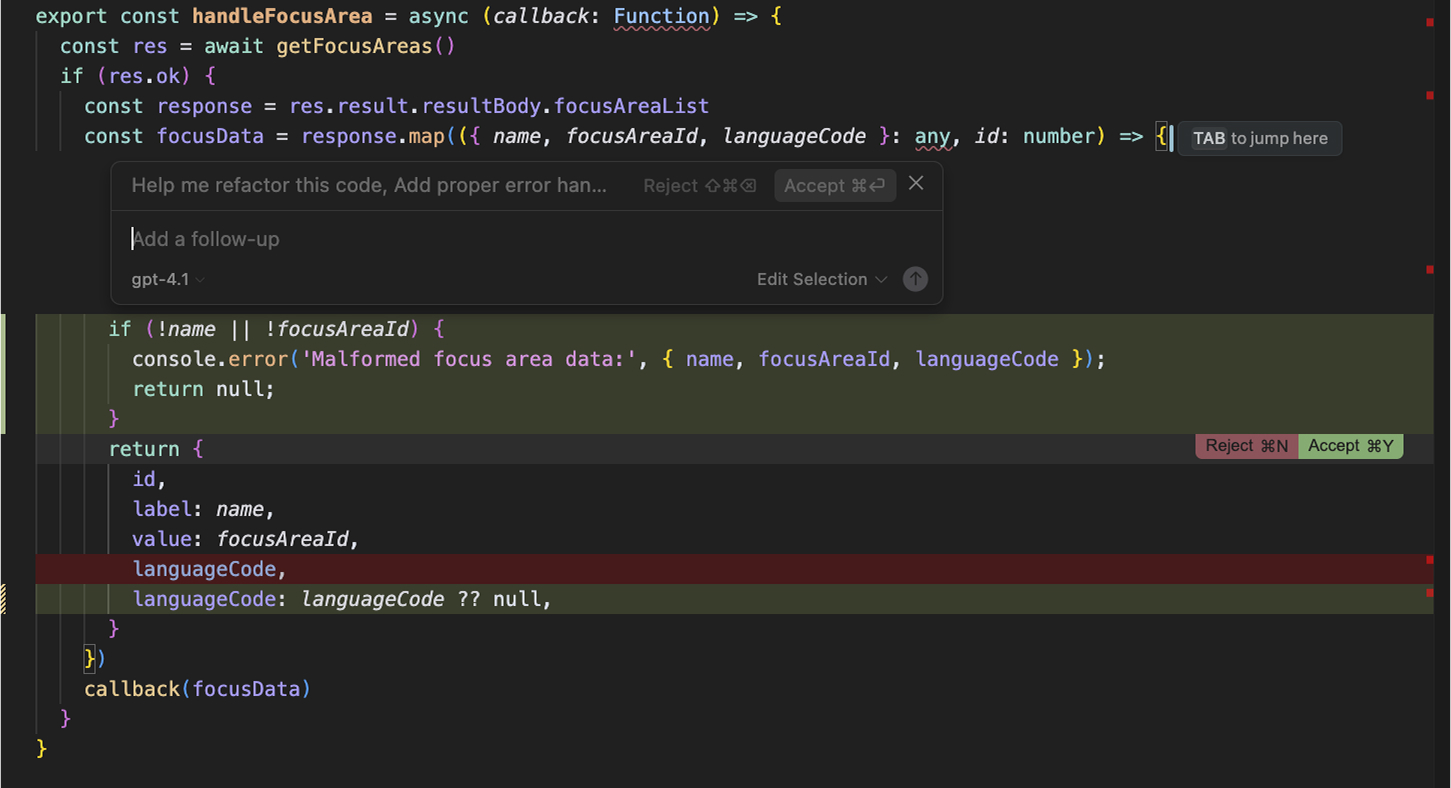

I wanted to add proper error handling to just this helper function, including logging when the API fails or the response is malformed. I pressed Cmd+K, which opened the chat, and entered my prompt. The same can be done from the Agent with Cmd+I, which gives the same output.

Cursor suggested a quick improvement by adding a validation check inside the .map() loop. The updated version now logs any entries missing name or focusAreaId. I found the workflow simple and easy to interact with.
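A validation check of the kind described above might look like the following sketch. It is not the code Cursor generated: the `RawFocusArea` and `FocusAreaOption` shapes and the function name are assumptions, and only the `name` and `focusAreaId` fields come from the example. The logger is passed in so the function stays easy to test.

```typescript
// Raw API entry: fields may be missing, so both are optional.
interface RawFocusArea {
  name?: string;
  focusAreaId?: string;
}

// UI-friendly shape (hypothetical).
interface FocusAreaOption {
  label: string;
  value: string;
}

type Logger = (message: string) => void;

// Turn a raw API response into UI options, logging anything malformed.
function toFocusAreaOptions(response: unknown, log: Logger): FocusAreaOption[] {
  if (!Array.isArray(response)) {
    log("Malformed response: expected an array of focus areas");
    return [];
  }
  const options: FocusAreaOption[] = [];
  (response as RawFocusArea[]).forEach((entry, index) => {
    if (!entry || !entry.name || !entry.focusAreaId) {
      log(`Skipping entry ${index}: missing name or focusAreaId`);
      return;
    }
    options.push({ label: entry.name, value: entry.focusAreaId });
  });
  return options;
}

// Usage: the second entry has no focusAreaId, so it is logged and skipped.
const logMessages: string[] = [];
const focusOptions = toFocusAreaOptions(
  [{ name: "Backend", focusAreaId: "fa-1" }, { name: "No id" }],
  (message) => logMessages.push(message),
);
```

Returning an empty list on a malformed response keeps the UI rendering while the log records what went wrong; throwing instead would be an equally reasonable choice.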

However, when you use any AI coding tool for refactoring or vibe coding, you need to be very specific in your prompts. Without enough specificity, the tool may either over-edit or miss edge cases. That’s why many developers split tasks into steps, first asking the Agent to plan and then to implement.

Who Wins?

Claude Code offers superior instruction following, especially in terminal-based or multi-file workflows. Cursor is better for granular, in-editor guidance, provided prompts are scoped. For a deep, automated understanding of developer intent, Claude is the more robust choice.

Multi-agent or Workflow Automation Capabilities

Claude Code and Cursor both support multi-step task execution, but they differ significantly in how they handle orchestration, autonomy, and developer control.

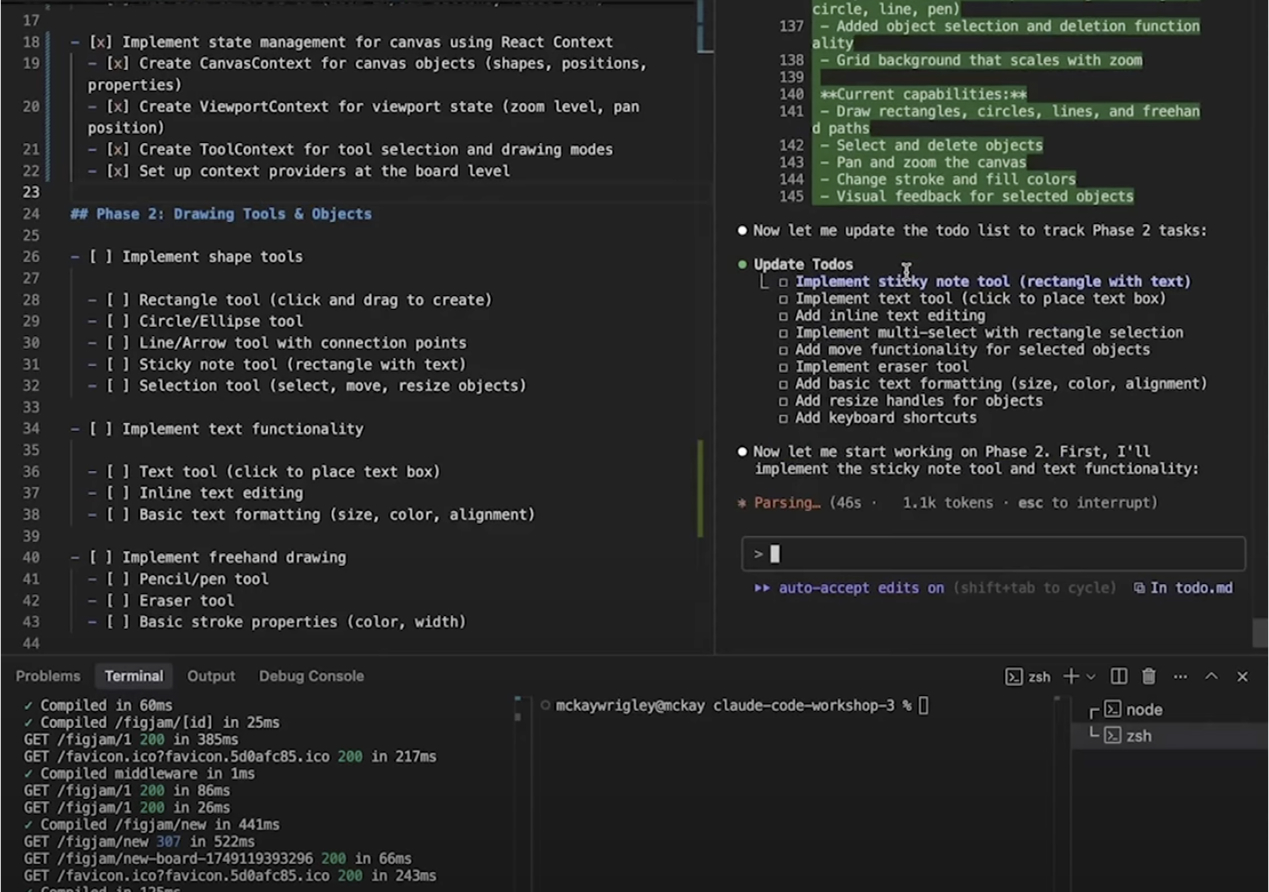

Claude Code supports agent parallelism through a task-splitting mechanism. This means that instead of processing tasks individually, Claude can delegate multiple actions to run simultaneously, improving efficiency, especially in large, modular codebases.

This feature allows you to launch multiple sub-agents for parallel reading, editing, testing, or analysis. The main agent then coordinates the work and consolidates results for a summary.
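The coordinator pattern described above (fan out to sub-agents, then consolidate) can be sketched generically with promises. This is not Claude Code's internal implementation; `SubTask`, `Summary`, and `coordinate` are hypothetical names, and each `run` function merely stands in for a sub-agent doing real work.

```typescript
interface SubTask {
  name: string;
  run: () => Promise<string>; // stands in for a sub-agent doing real work
}

interface Summary {
  completed: string[];
  failed: string[];
}

// Fan out: start every sub-task at once. Fan in: collect results into one summary.
async function coordinate(tasks: SubTask[]): Promise<Summary> {
  const settled = await Promise.all(
    tasks.map((task) =>
      task.run().then(
        (output) => ({ name: task.name, ok: true, output }),
        (error: unknown) => ({ name: task.name, ok: false, output: String(error) }),
      ),
    ),
  );
  const summary: Summary = { completed: [], failed: [] };
  for (const result of settled) {
    const bucket = result.ok ? summary.completed : summary.failed;
    bucket.push(`${result.name}: ${result.output}`);
  }
  return summary;
}

// Usage: one sub-task succeeds and one fails; both end up in the summary.
const pendingSummary = coordinate([
  { name: "read", run: async () => "scanned 12 files" },
  { name: "test", run: async () => { throw new Error("2 tests failed"); } },
]);
```

Catching each failure per task means one failing sub-task does not discard the results of the others.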

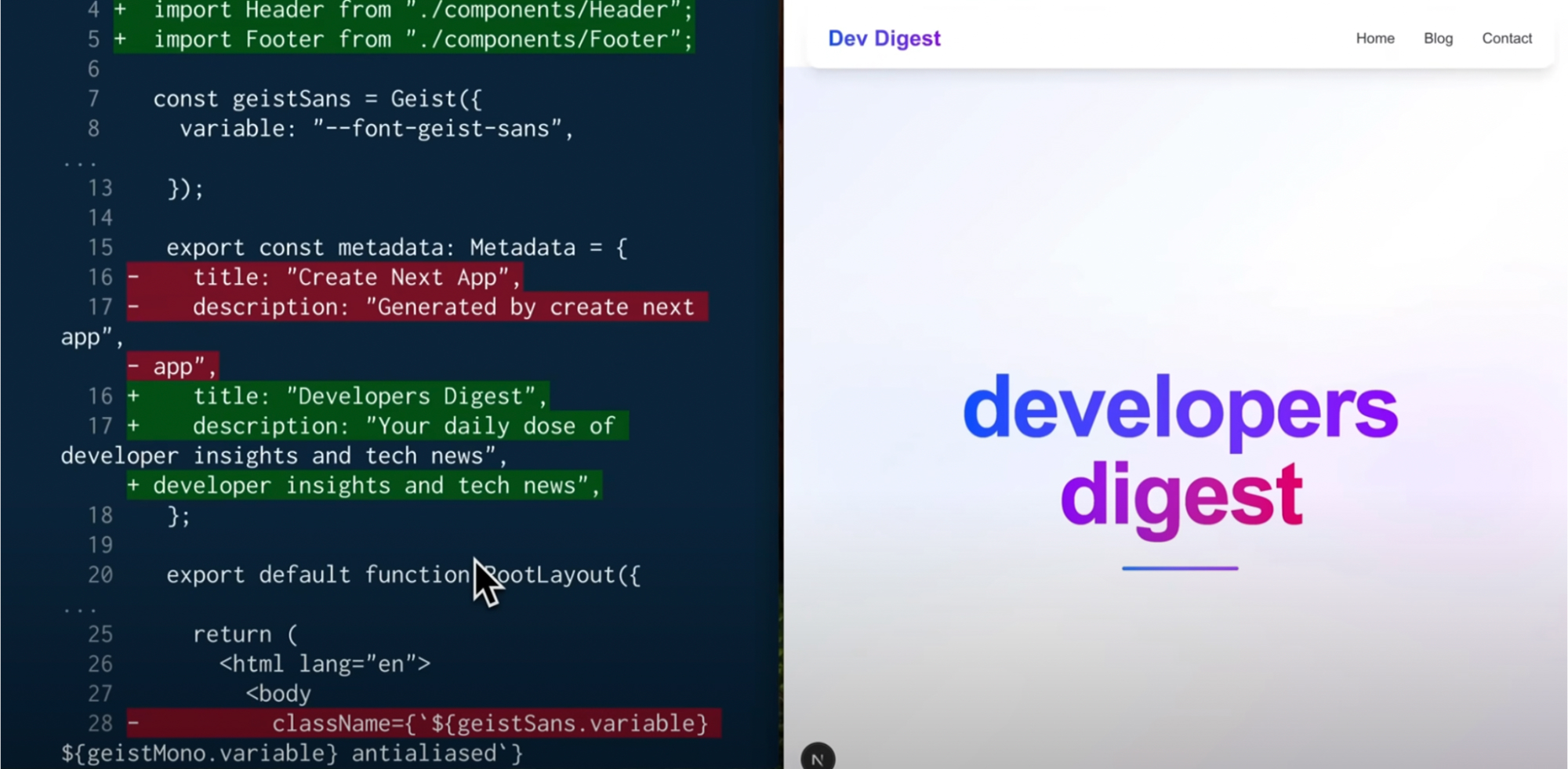

In one of my recent projects, I used Claude Code to create a website with a glass morphism theme. I started by generating a header and footer component, then moved on to designing a contact and blog page. Claude handled the entire flow in the terminal: it created a directory structure, scaffolded components, and even applied layout changes across files.

Here’s a snapshot of the header component that it created:

Now, in the image above, Claude not only refactored file content but also injected the right import paths (./components/Header, ./components/Footer) automatically. It replaced generic titles with more descriptive ones like “Developers Digest,” showing it understood the branding/theme.

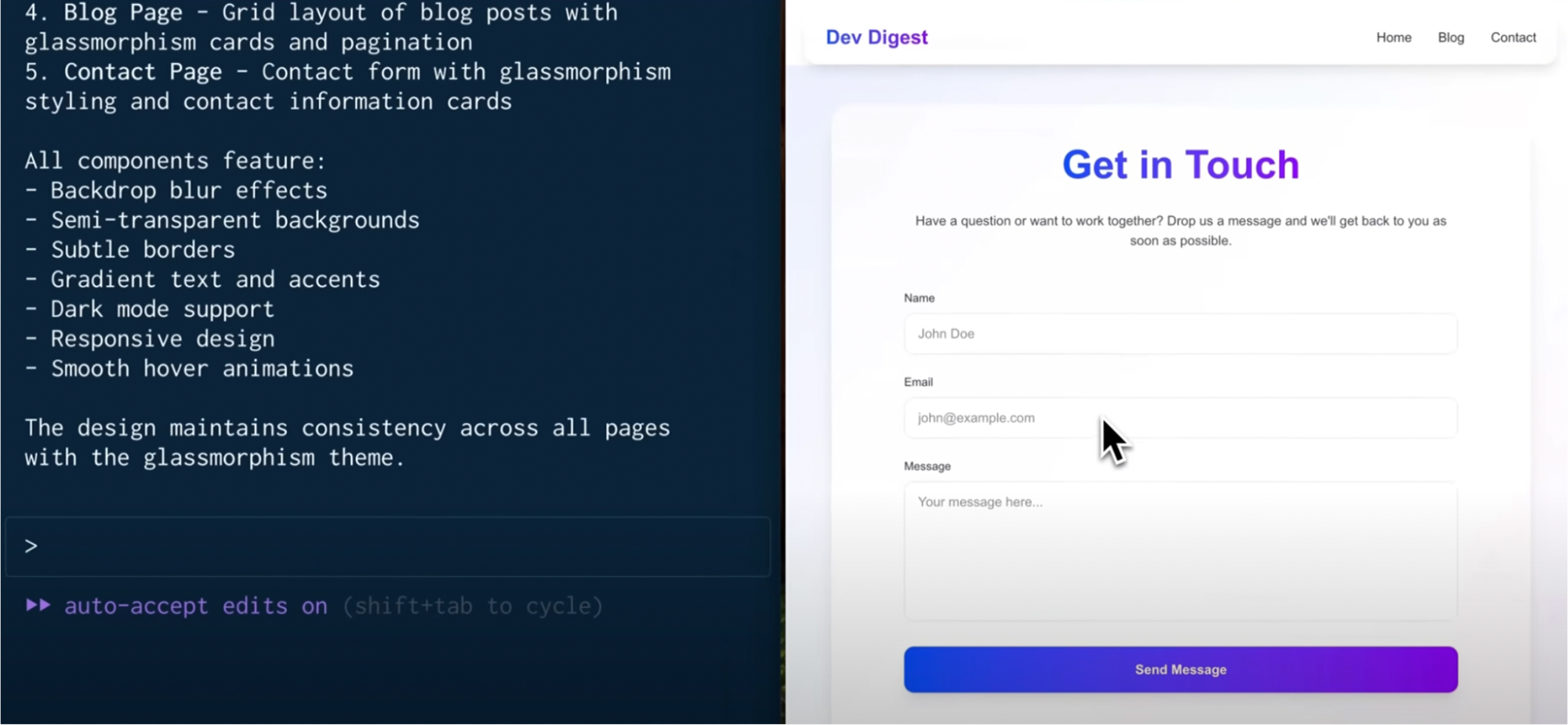

In the final stage of the project, Claude Code ensured the UI design remained consistent with the glass morphism theme across all components. It suggested and applied styling principles such as backdrop blur effects, gradient text, subtle borders, and smooth hover animations. These enhancements were reflected uniformly on pages like the contact form, which was styled with a translucent card layout and responsive design.

It also supports custom-scripted workflows via .claude directories. For instance, I created a create-feature.js script that generated a boilerplate, updated routes, and added test stubs, all triggered through a single Claude run command. These workflows stay tightly scoped to project context and honor conventions defined in CLAUDE.md.

Claude allows direct access to files and directories beyond the project root. Use the --add-dir flag to give Claude access to additional directories outside your main working folder, making it better suited for monorepos, shared libraries, or multi-service architectures that span beyond the boundaries of a single workspace. However, you need to explicitly set this in commands, as this is not automatic.
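When scripting such runs, it can help to build the argument list in one place. The sketch below assembles arguments for a non-interactive `claude -p` run with optional `--add-dir` directories. The `ClaudeRun` type, the helper name, and the directory paths are hypothetical, and the argument order (directories first, then `-p` and the prompt) is an assumption chosen so the prompt is not read as another directory.

```typescript
interface ClaudeRun {
  prompt: string;
  extraDirs?: string[]; // directories outside the main working folder
}

// Build the argument list for a non-interactive `claude -p` run.
function buildClaudeArgs(run: ClaudeRun): string[] {
  const cliArgs: string[] = [];
  const dirs = run.extraDirs ?? [];
  if (dirs.length > 0) {
    // Extra directories must be granted explicitly; access is not automatic.
    cliArgs.push("--add-dir", ...dirs);
  }
  cliArgs.push("-p", run.prompt);
  return cliArgs;
}

// Usage: the paths below are placeholders for sibling packages in a monorepo.
const claudeArgs = buildClaudeArgs({
  prompt: "Run integration tests, fix failures, and explain the changes.",
  extraDirs: ["../shared-lib", "../billing-service"],
});
```

The resulting array can be handed to a process spawner, for example Node's `child_process.spawn("claude", claudeArgs)`, from a build script.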

Cursor approaches automation through its Agent Mode, which runs entirely inside the IDE. When you activate it via Cmd+I, it launches a multi-step execution flow where the AI first plans the task, outlines a sequence of actions, and then proceeds to modify files, run terminal commands, or suggest edits.

Cursor also allows for shell-level control through its command-line tools. You can install cursor and code commands to interact with Cursor from your terminal, enabling you to open files and folders and integrate them with your development workflow.

For example, I asked Cursor to:

“Add API error logging across all React components.”

Here’s a snapshot of what Cursor gave me:

It reviewed existing error patterns like toast.error, setError, and missing centralized logging, then outlined a plan to create a logApiError utility, update all API handlers to use it, and optionally extend the global ErrorBoundary.

The output also included a checklist and follow-up questions, showing that Cursor approaches broad prompts with code awareness and practical implementation guidance, rather than just inserting snippets mindlessly.
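A centralized `logApiError` utility along the lines of that plan could look like this sketch. Only the function name comes from Cursor's plan; the signature, the `ApiErrorLog` fields, and the injected sink are assumptions made for illustration.

```typescript
interface ApiErrorLog {
  component: string;
  endpoint: string;
  message: string;
  timestamp: string;
}

type LogSink = (entry: ApiErrorLog) => void;

// Normalise any thrown value into one structured log entry and pass it to the sink.
function logApiError(
  component: string,
  endpoint: string,
  error: unknown,
  sink: LogSink,
): ApiErrorLog {
  const entry: ApiErrorLog = {
    component,
    endpoint,
    message: error instanceof Error ? error.message : String(error),
    timestamp: new Date().toISOString(),
  };
  sink(entry);
  return entry;
}

// Usage: in a component's catch block, alongside the existing toast.error or setError call.
const capturedLogs: ApiErrorLog[] = [];
logApiError(
  "UserList", // hypothetical component name
  "/api/users", // hypothetical endpoint
  new Error("Request failed with status 500"),
  (entry) => capturedLogs.push(entry),
);
```

Passing the sink as a parameter keeps the utility independent of any particular logging service, so handlers can adopt it one at a time.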

Moreover, I like that Cursor supports external tool chaining through its Model Context Protocol (MCP) integration. Developers can configure multiple MCP servers, such as a doc-string generator and a GraphQL schema linter, via a .cursor/mcp.json file or the UI. When an agent runs, it can invoke these tools sequentially.
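As a rough sketch of what such a configuration contains, the snippet below builds the JSON for a `.cursor/mcp.json` file with two servers. The server names, package name, and script path are hypothetical placeholders, and the `McpServer` type covers only the common command-based fields, so check Cursor's MCP documentation for the full schema.

```typescript
// Minimal shape of a command-based MCP server entry (other fields may exist).
interface McpServer {
  command: string;
  args?: string[];
  env?: Record<string, string>;
}

interface McpConfig {
  mcpServers: Record<string, McpServer>;
}

const mcpConfig: McpConfig = {
  mcpServers: {
    // Hypothetical package name, for illustration only.
    "docstring-generator": { command: "npx", args: ["-y", "docstring-mcp-server"] },
    // Hypothetical local script, for illustration only.
    "graphql-linter": { command: "node", args: ["./tools/graphql-lint-server.js"] },
  },
};

// The serialized text is what would be saved as .cursor/mcp.json.
const mcpJson = JSON.stringify(mcpConfig, null, 2);
```

Keeping the file in the repository lets the whole team share the same tool setup.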

Who Wins?

Claude Code wins for automation flexibility. It supports parallel agents, custom scripts, and system-level orchestration across directories and environments, all from the terminal. Cursor handles multi-step tasks well inside the IDE but remains limited to editor-visible files and scoped workflows.

Automated Code Review

Claude Code allows terminal-driven code reviews and edits across your codebase. During one of my component-based projects, I used Claude Code to run a structured code review before beginning implementation.

It started by pointing out several foundational issues like inconsistent use of var, let, and const, functions written in mixed styles, and hardcoded values spread across the codebase. It also identified missing input validations and flagged API routes that were exposed without any rate limiting or authentication checks.

Claude parsed the prompt, updated the checklist in real-time, and executed changes across relevant files. This setup allowed me to work incrementally while Claude handled the implementation, edits, and file coordination directly from the terminal within my editor.

After applying those changes, I prompted Claude again:

“Are there any other issues?”

This second round surfaced deeper architectural gaps. Claude added a complete test suite, ESLint and Prettier configs, helper utilities, input validation wrappers, and a new README. It also introduced environment variables we hadn’t planned, improving the foundation without heavy refactors.

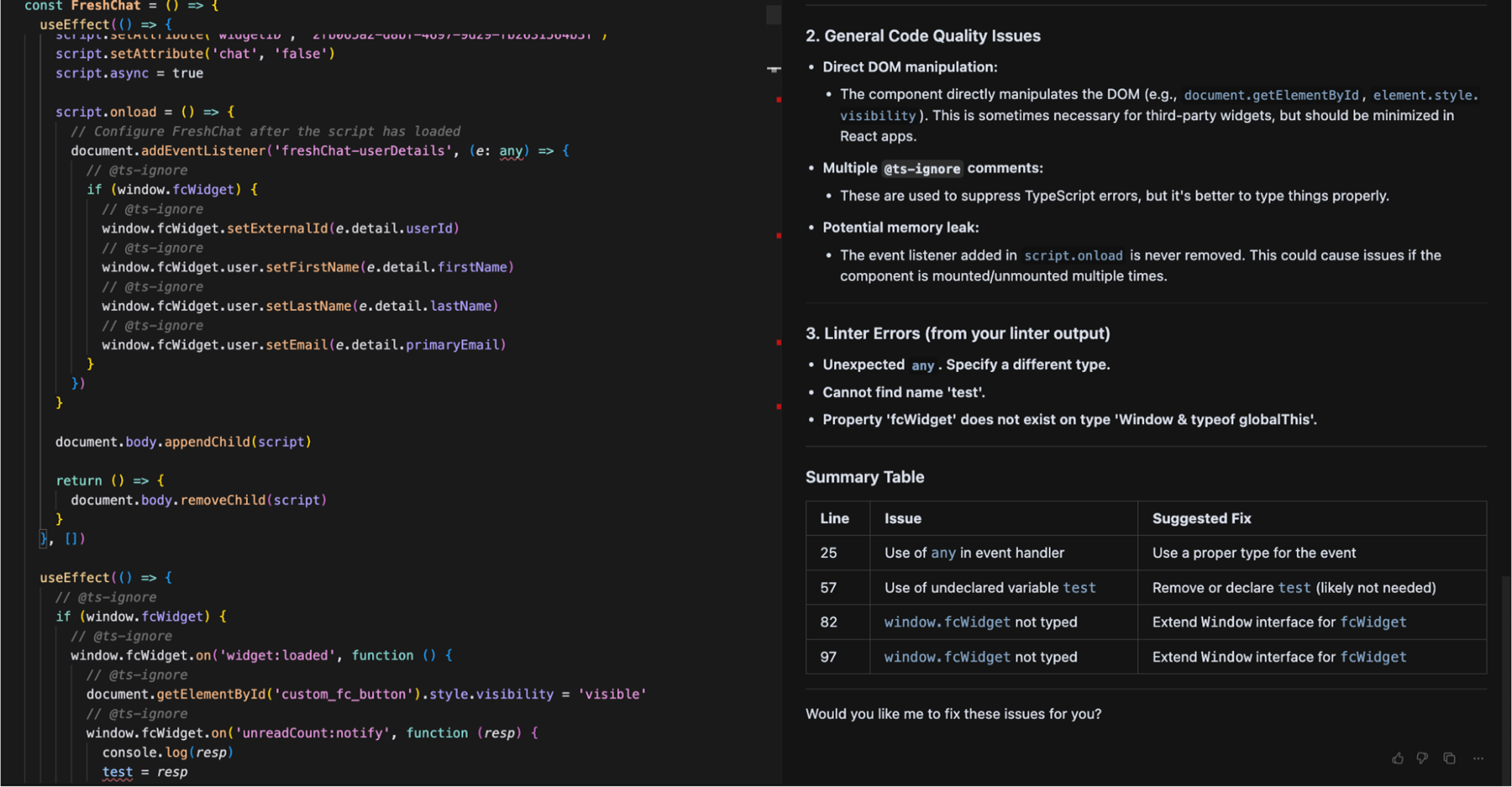

Cursor provides built-in code review capabilities directly inside the IDE through Agent Mode and inline assistants. According to its official documentation and user examples, you can ask Cursor to review a file, and it will analyze the code for common issues like TypeScript errors, code quality problems, linter violations, and architectural inconsistencies.

I asked Cursor to review the FreshChat.tsx component by simply prompting,

“What are the issues in this file?”

It returned a clear breakdown of problems across three categories: TypeScript errors, general code quality issues, and linter violations. Cursor flagged the use of any in event handlers, pointed out an undeclared variable (test), and noted that window.fcWidget was being used without extending the global Window interface.

Here’s a snapshot of the output:

It also highlighted direct DOM manipulations and unreleased event listeners that could lead to memory leaks. What made this useful was the structure; it even gave a summary table with line numbers and suggested fixes. This helped me fix type safety, clean up debug code, and avoid potential runtime bugs in just one review cycle, all without needing a manual code audit.
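The `window.fcWidget` finding is typically resolved with interface merging. The sketch below shows the idea; the `FcWidget` shape is a minimal assumption rather than the widget's full API, and `openChat` is a hypothetical helper. Inside a module file, the `Window` declaration would go in a `declare global { ... }` block.

```typescript
// Minimal, assumed shape of the chat widget; the real widget exposes more.
interface FcWidget {
  open: () => void;
  isOpen: () => boolean;
}

// Interface merging adds an optional fcWidget property to Window.
interface Window {
  fcWidget?: FcWidget;
}

// Takes the window as a parameter so the guard can be exercised outside a browser.
function openChat(win: Window): boolean {
  const widget = win.fcWidget;
  if (!widget) {
    return false; // widget script not loaded yet
  }
  if (!widget.isOpen()) {
    widget.open();
  }
  return true;
}
```

Marking the property optional forces callers to handle the case where the widget script has not loaded, which is exactly the kind of runtime bug the review pointed at.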

One major difference I found is that Claude Code performs review and refactoring in the same flow. When you ask it to review a file or suggest fixes, it doesn’t just list issues; it often generates a structured diff immediately with proposed fixes already applied. Cursor, on the other hand, separates diagnosis from resolution. It does not apply any fixes automatically unless you explicitly click to apply them.

Who Wins?

Claude Code reviews and fixes code in one go: it identifies issues and applies changes automatically across files, making it ideal for broad cleanups. Cursor lists issues first with clear suggestions and waits for your approval before making any changes. Claude Code suits batch improvements, while Cursor offers more control for precise edits.

Pricing Models & Plan Limits

Claude Code and Cursor follow very different pricing structures: one is based on usage and model access, the other on tiered subscriptions with optional metered limits.

Claude Code uses a usage-based billing model tied to Anthropic’s Claude models. The CLI is included in the Pro plan at $20/month, which gives access to Claude 3.5 Sonnet and basic usage of Claude Code. The Max 5× plan ($100/month) provides higher usage limits, priority model access (Claude 4 Opus), and faster sessions for heavier workflows.

In contrast, Cursor follows a tiered subscription model. It comes with a Hobby Plan with limited agent requests. The Pro plan ($20/month) offers unlimited inline completions, around 500 fast agent requests using frontier models, and access to Max Mode, BugBot, and basic team features. Teams ($40/month) and Ultra ($200/month) plans raise those limits for heavier users.

Unlike Claude, Cursor handles model usage internally, though requests to premium models like Claude 4 Opus are still metered. Cursor also allows using your API key to avoid markups and directly control usage.

Who Wins?

Claude offers deeper system access but at a higher operational cost, especially if you rely on Opus frequently. Cursor is more predictable for editor-based tasks, and its flat pricing suits day-to-day development better, though Max Mode users should still watch for token limits.

Why Teams Prefer Qodo in Enterprise Workflows

Enterprise teams prefer Qodo because it delivers reliable, context-aware AI workflows by tightly integrating with their actual development environment across the CLI, IDE, CI/CD pipelines, and Git-based collaboration systems.

This reliability stems from Qodo’s use of Retrieval-Augmented Generation (RAG), which ensures that AI responses are scoped to the project context. Instead of guessing based on a generic prompt, Qodo fetches relevant code, configuration files, documentation, or tests using static and semantic indexing, then uses that scoped context to generate precise suggestions.

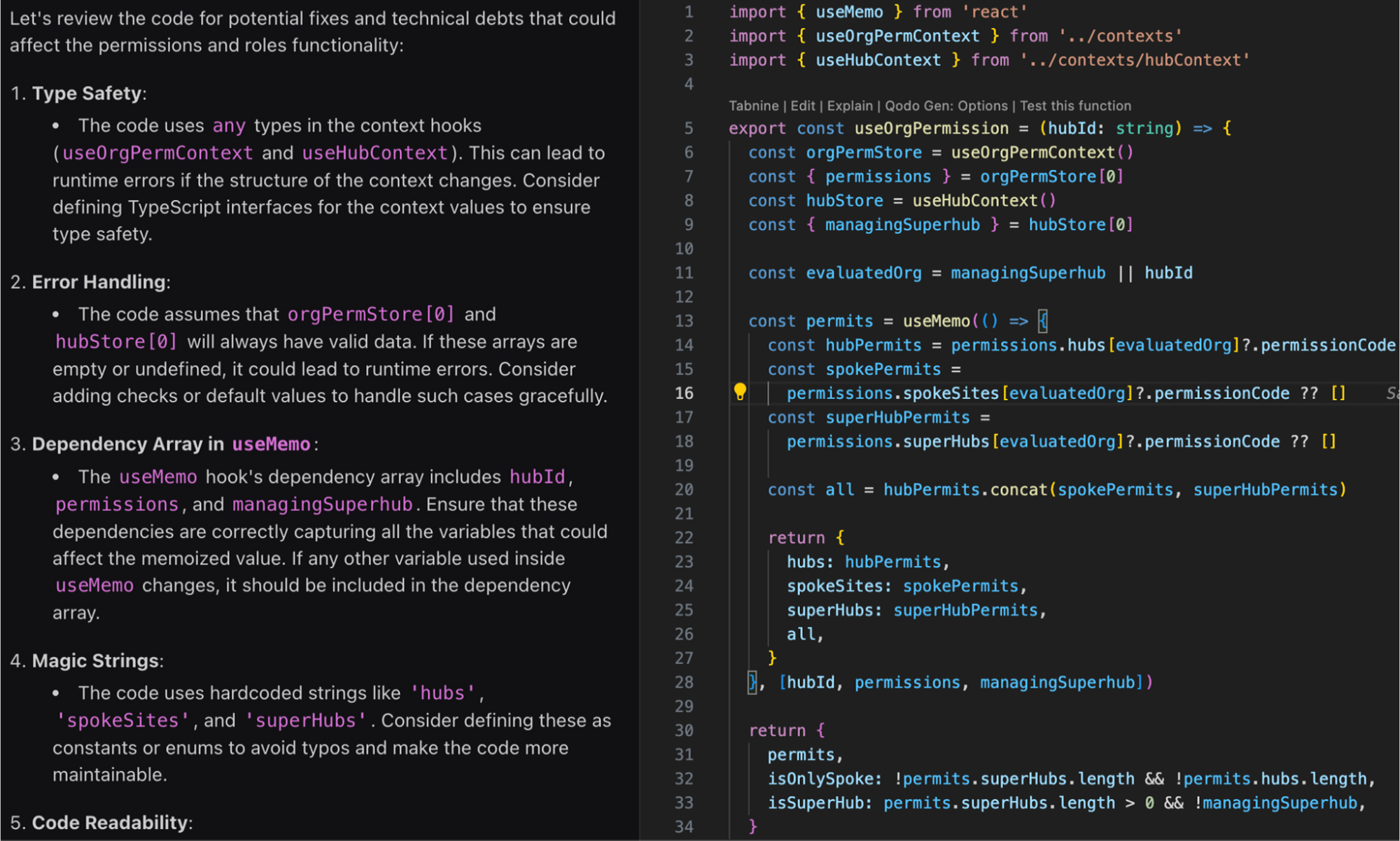

Here’s an example. I was reviewing a PR that modified the useOrgPermission() hook, specifically how it evaluates hub and superhub permissions using managingSuperhub || hubId. On the surface, the logic looked fine; it memoized permissions from three sources and returned role flags like isOnlySpoke.

I prompted Qodo:

/review find fixes and tech debts in this file that may hinder the permissions and roles

In the above image, Qodo caught that both useOrgPermContext and useHubContext were using any and suggested adding proper types to avoid runtime surprises. It also flagged missing safety checks around orgPermStore[0] and hubStore[0], which could break if the context doesn’t load as expected. It looked at the useMemo dependency array and ensured nothing was leaking outside the tracked scope.

What helped most was how Qodo used actual project context, not just what’s in the file, but also linked definitions and past changes, to catch issues I’d likely miss in a normal review. It saved me from digging through multiple files or commits manually.
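The two suggestions (replace `any` with real types, and guard the `[0]` lookups) can be sketched as a plain function. This is not the project's actual hook: the `OrgPermission` and `HubInfo` shapes, the `resolvePermission` name, and the rule used for `isOnlySpoke` are hypothetical, and only the identifiers `orgPermStore`, `hubStore`, `managingSuperhub`, `hubId`, and `isOnlySpoke` come from the review.

```typescript
// Hypothetical typed shapes replacing the `any` values flagged in the review.
interface OrgPermission {
  managingSuperhub?: string;
  hubId?: string;
}

interface HubInfo {
  hubId: string;
  isSuperhub: boolean;
}

interface ResolvedPermission {
  scopeId: string | null;
  isOnlySpoke: boolean;
}

// Guarded lookup: never assume the first store entry exists.
function resolvePermission(
  orgPermStore: OrgPermission[] | undefined,
  hubStore: HubInfo[] | undefined,
): ResolvedPermission {
  const orgPerm = orgPermStore?.[0];
  const hub = hubStore?.[0];
  if (!orgPerm || !hub) {
    // Context not loaded yet: return a safe default instead of throwing.
    return { scopeId: null, isOnlySpoke: false };
  }
  const scopeId = orgPerm.managingSuperhub || orgPerm.hubId || null;
  // Assumed rule for illustration: a spoke manages no superhub and is not one itself.
  const isOnlySpoke = !orgPerm.managingSuperhub && !hub.isSuperhub;
  return { scopeId, isOnlySpoke };
}

// Usage: stores that have not loaded yet fall back to the safe default.
const notLoaded = resolvePermission(undefined, []);
const spoke = resolvePermission([{ hubId: "hub-7" }], [{ hubId: "hub-7", isSuperhub: false }]);
```

In a React hook, the same function could be called inside useMemo with both stores in the dependency array.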

Additionally, Qodo’s CLI-based commands (/review, /implement, /ask) and .toml-defined custom agents allow teams to define and standardize multi-step workflows, like generating tests only when coverage gaps are found or summarizing changes after merging PRs.

From a governance standpoint, Qodo meets enterprise standards with SOC 2 compliance, private cloud or on-premise deployment options, and allow-listed tool policies. Teams can securely adopt Qodo within regulated environments, ensuring that AI-generated outputs meet internal security and compliance requirements.

Conclusion

Claude Code and Cursor represent two approaches to AI-assisted development: one focused on terminal-first automation, the other on in-editor productivity. Claude Code stands out for its deep reasoning, multi-agent workflows, and flexible CLI-based orchestration, making it ideal for large-scale projects, CI/CD pipelines, and infrastructure-level tasks.

Cursor, by contrast, offers a polished IDE experience with features like inline prompting, diff previews, and agent modes suited for day-to-day application development within the editor.

While both tools are powerful in their domains, their effectiveness depends on how your team works. Claude Code is the better fit if your workflows demand system-wide automation, large context windows, and programmable agents. If you prefer a guided, GUI-driven development experience with fast iterations and structured prompting, Cursor delivers that with minimal setup.

For enterprise teams seeking control and adaptability, Qodo combines the best of both worlds, offering secure, context-aware automation, agent-based workflows, and integration across the full development lifecycle. As the AI coding landscape evolves, choosing the right tool isn’t just about features; it’s about fit.

FAQs

1. What are the limitations of Claude Code?

Claude Code is designed for terminal-based workflows, making it well-suited for developers who prefer command-line control and custom scripting. Teams looking for visual features or inline interactions may find its interface better aligned with automation and system-level tasks than with GUI-based development.

2. What is the best Cursor alternative for enterprise?

Qodo is a strong option for enterprise teams. It supports CLI, IDE, and CI integrations with customizable agent workflows and scoped RAG-based context. Its architecture is designed for large-scale environments, supporting team workflows, governance, and deployment flexibility.

3. Can Claude Code or Cursor handle enterprise-scale code securely?

Yes, both tools can be used securely at scale. Claude Code supports local usage and can be deployed through AWS Bedrock or GCP Vertex services. Cursor includes workspace scoping and privacy settings to ensure controlled access to project data.

4. Are Claude Code and Cursor SOC 2 compliant?

Yes, both tools meet SOC 2 compliance standards. Claude Code, developed by Anthropic, is SOC 2 Type I and Type II certified, with additional certifications such as ISO 27001 and ISO 42001. Cursor is also SOC 2 Type II certified and supports enterprise features like SAML authentication, team access control, and privacy mode without data retention.

5. What is the best Claude Code alternative for Enterprise?

Qodo is well-positioned for enterprise use. It offers secure, agent-based automation across the full development lifecycle, covering code generation, reviews, changelogs, and integrations while supporting governance and compliance through private deployments and policy controls.