Effective AI code suggestions: less is more

TL;DR

In our quest to generate effective code suggestions with LLMs at Qodo Merge, an AI-powered tool for automated pull request analysis and feedback, we discovered that prioritization isn’t enough.

Initially we tried telling the model to prioritize finding bugs and problems in pull requests, while still allowing lower-priority suggestions for style, code smells, and best practices. We discovered that this approach was ineffective, and despite the explicit prioritization, the model got overwhelmed by the easier-to-spot style issues, leading to developers flooded with low-importance suggestions.

The breakthrough came when we stopped asking for different priority levels altogether, and instead limited the model to look only for meaningful bugs and problems. This laser-focus approach improved bug detection rates and signal-to-noise ratio, resulting in code suggestions that developers actually wanted to implement, with suggestion acceptance rates jumping 50% and overall impact increasing by 11% across pull requests.

The key takeaway – sometimes the best strategy with LLMs isn’t to prioritize or add complicated instructions, but to eliminate distractions entirely.

Initial approach: casting a wide net

Our initial strategy was ambitious. The model was instructed to prioritize critical issues in its review process, looking first for major problems before considering style-like improvements. Each review would contain up to 5 suggestions, prioretized by severity:

Primary Concerns:

- Critical bugs and problems

- Security vulnerabilities

Secondary Improvements:

- Code style

- Best practices

- Potential enhancements

This hierarchical review system seemed robust and effective. We leveraged modern prompting techniques like YAML-structured output formats to ensure consistency and machine-parseable responses, with each suggestion containing well-defined metadata fields.

The unexpected challenge: style suggestion overflow

In practice, we encountered an interesting phenomenon. Despite our careful prompt and flow engineering strategy, the model consistently gravitated toward style-related suggestions. For example:

- Variable naming conventions

- General exception handling

- Minor refactoring opportunities

- Documentation improvements

While these suggestions weren’t incorrect, they often overshadowed more critical issues. The abundance of minor suggestions often drowned out genuinely important findings. In addition, the model would sometimes misclassify style improvements as major concerns. The core problem wasn’t the model’s ability to spot serious bugs – it was that these easier-to-detect style issues dominated the output

We tried to combat this overflow by adding explicit instructions like “don’t suggest documentation improvements” to our prompt, but it proved futile. The sheer volume of potential style improvements was overwhelming. Even a single mention of ‘code enhancement suggestions’ in our instructions seemed to hijack the model’s attention – there were simply too many possible minor improvements for the model to maintain its focus on truly critical issues.

The signal-to-noise problem

The ‘Casting-wide-net’ approach revealed significant challenges:

- Developers struggled to locate critical issues buried within numerous style suggestions

- The high volume of suggestions led to “suggestion fatigue,” where developers started ignoring the feedback altogether rather than going through numerous style fixes to find the few critical issues.

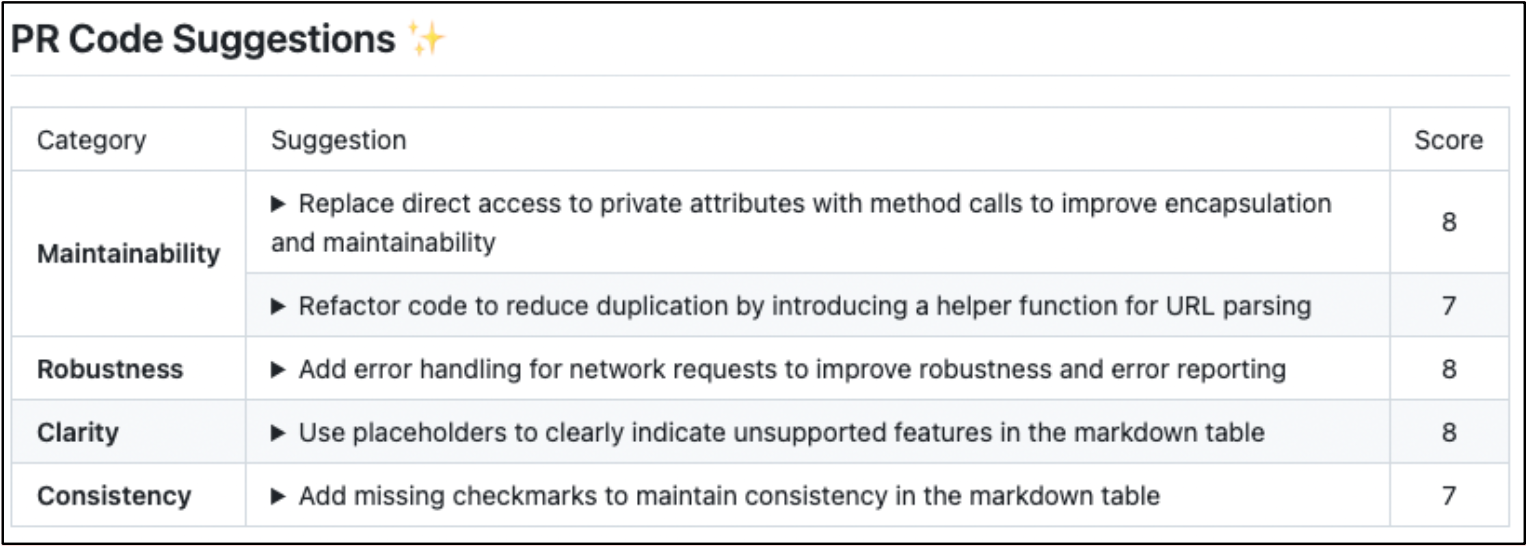

Beyond adding explicit exclusion rules to our prompts, we implemented both importance scoring and suggestion categorization (‘bugs’, ‘enhancements’, ‘best practices’). But even this proved insufficient – the labels and the importance score weren’t accurate enough to truly separate critical issues from nice-to-have improvements.

User feedback revealed a fundamental truth: we needed to show only critical ‘problem-finding’ suggestions by default – everything else was often seen as noise.

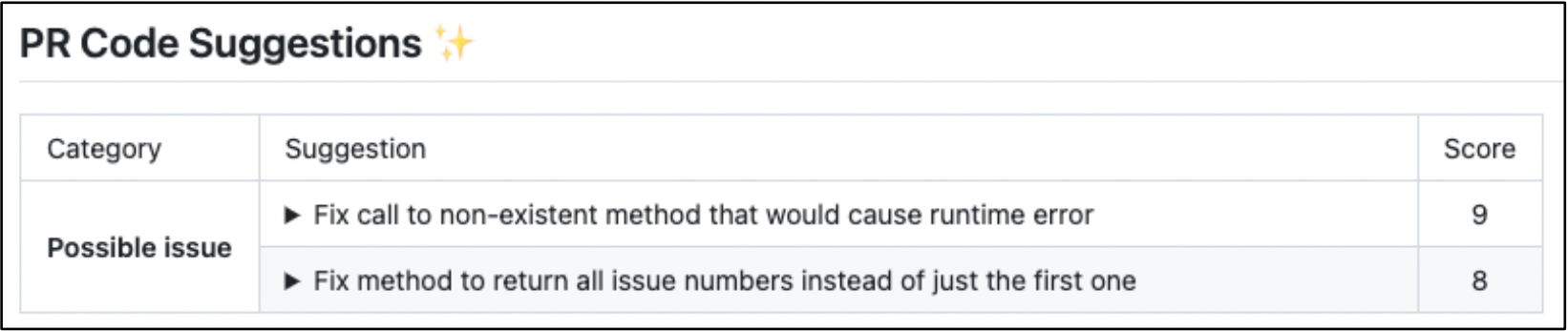

The breakthrough: laser focus on problems and bugs

What ultimately worked was a paradigm shift in our approach. Instead of trying to manage priorities across different types of suggestions, we simplified the model’s task to a single focus: identifying only meaningful problems that could result in bugs and issues in production. Our new prompt was ruthlessly focused:

“Only give suggestions that address major problems and bugs in the pull request code. If no relevant suggestions are applicable, return an empty list”

The results were dramatic:

- The acceptance rate of each code suggestion increased by 50%

- The impact level, meaning the percentage of pull requests where at least one suggestion was applied, increased by 11%

The power of this approach lies in its singular focus. Rather than explicitly excluding certain types of suggestions, we simply directed the model’s attention entirely toward finding meaningful code problems.

This clarity of purpose had two key benefits:

(1) it eliminated the complexity of evaluating and prioritizing different types of suggestions, and (2) it prevented the model from being overwhelmed by the sheer volume of potential style improvements that could otherwise dilute its attention from critical issues.

Code best practices: a parallel solution

While maintaining our focused approach to problem detection, we developed a separate solution for style and best practices code suggestions.

Rather than attempting to enforce every possible best practice – an almost infinite set, we enabled teams and organizations to define their own standards. Qodo Merge now operates through two distinct routes: one dedicated to finding critical problems, and another for evaluating code against company-specific best practices. Teams can also utilize our learning system that analyzes which suggestions developers actually implement – either to establish an initial set of best practices, or to continuously refine and enhance future recommendations.

The success of this dual approach validated our strategy: developers trust and implement our critical bug suggestions because they know each one represents with high probability a genuine problem that needs fixing, while still having the flexibility to maintain their team’s coding standards through configurable best practices.

Final thoughts: the power of scope constraint

This separation reflects a broader insight: sometimes the best strategy isn’t to create complex prioritization schemes or scoring systems – it’s to ruthlessly eliminate scope until you’re left with only what truly matters. This might seem counterintuitive in an era where AI promises to handle increasingly complex tasks. However, our experience suggests that the path to better AI assistance lies not in just adding more complexity, but in being more thoughtful about what we ask these systems to do in the first place.

The principle extends beyond just code review: Instead of trying to handle everything at once, we can try to handle important aspects well but separately. When working with LLMs, this focused approach yields better results than attempting to juggle multiple priorities simultaneously.