Greptile Alternatives in 2025: From Open-Source Agents to Enterprise-Grade PR Automation

TL;DR:

- Greptile is strong at repo-wide graph reviews for single repositories, catching hidden dependencies and patterns, but often produces noisy output and lacks multi-repo scale, compliance, or governance features.

- Qodo extends review across the entire SDLC (IDE to PR to post-merge), with enterprise-grade compliance, documentation automation, custom agents, and on-prem/VPC deployment, best for regulated or large-scale teams.

- CodeRabbit focuses on lightweight, adaptive PR reviews with inline comments and severity ranking. It learns from team feedback to reduce noise, making it ideal for small-to-mid teams or open-source projects, but it lacks governance and compliance.

- Gemini, Aikido, Codacy, and Devlo each target niches: Gemini is GitHub-native with severity labels and one-click patches, while Aikido is security-first with SAST and secrets scanning, Codacy enforces static analysis and quality gates with self-hosting options, and Devlo acts as an AI teammate that can review PRs, summarize, and even open new PRs.

Greptile has positioned itself as an AI-first pull request reviewer. Instead of looking only at diffs, it builds a repository-wide graph to catch things developers often miss: hidden dependencies, inconsistent patterns, and side effects that ripple beyond a single file.

In September 2025, the company raised $25 million in Series A funding to further develop this approach. The plan is to expand into a universal code validation platform, with Greptile v3 already shipping improvements like enhanced bug detection, deeper context awareness, and more customizable rules. Upcoming integrations (Jira, Notion) and an MCP server will make its standards consumable across IDEs and agents.

But powerful analysis alone isn’t enough. One of the biggest challenges with AI review is noise. A reviewer who leaves ten small comments on a minor pull request isn’t helping; it’s teaching engineers to tune it out. What teams need is less output, more signal: feedback that prioritizes critical issues, adapts to what developers actually act on, and cuts repetitive nits. Done right, the reviewer becomes a quiet guardrail across IDE, CLI, and PR, enforcing standards without slowing work down.

At the enterprise level, however, pull request review by itself rarely provides the complete picture. Larger organizations expect earlier checks in the IDE/CLI, compliance enforcement tied to tickets, and automation for tasks such as tests, documentation, and changelogs, as well as deployment flexibility (SaaS, private VPC, or on-premises). That’s when teams start looking at alternatives: platforms that extend beyond PR review and embed governance across the full software delivery lifecycle..

When to Look for Greptile Alternatives

Greptile’s strength is in doing deep, context-aware reviews across a whole repository. That level of analysis is useful, but it isn’t always the right fit. Teams usually start looking at alternatives when their needs shift in one of these directions:

Larger or More Complex Projects

Greptile works best in a single repo setup. In monorepos or multi-repo environments, you often need more than repository graphs, cross-repo dependency tracking, historical commit analysis, and consistency across services. Tools that build in multi-repo awareness or global rules can be a better fit here.

Pre-PR Validation and Compliance

If reviews only happen at the PR stage, risk has already entered the system. Alternatives that push checks into the IDE or CLI help teams block issues earlier. These tools often tie commits back to tickets, enforce org-wide coding standards, and integrate with compliance workflows before the PR even exists.

Automated PR Enrichment

Comments alone aren’t enough for many teams. Alternatives extend the workflow by automatically adding missing unit tests, updating documentation, generating changelogs, or applying repetitive fixes directly in the PR. This reduces review cycles by closing gaps upfront.

Workflow Fit and Performance

Full-repo analysis is powerful, but sometimes it’s overkill. Teams that want lightweight, diff-only feedback may prefer tools designed for speed over depth. Others may prioritize specialized workflows, for example, auto-fixing bugs, or mentorship-style reviews that help less experienced developers ramp up quickly.

Security and Integration Requirements

Greptile integrates with GitHub and GitLab, but not Bitbucket. If your team runs on Bitbucket or has very specific security needs, other platforms may be a better fit. Some alternatives lean heavily into security, running tens of thousands of static analysis checks or combining SAST, IaC, and secrets scanning into a single workflow. These can be attractive for teams that want security consolidated in the same pipeline as code review.

Comparison of Greptile Alternatives: From PR-Only Review to SDLC-Wide Guardrails

Each alternative to Greptile comes with a different angle; some focus on enterprise compliance, some on speed and simplicity, and others on open-source flexibility.

Let’s start with Qodo, one of the most enterprise-focused platforms in this space.

1. Qodo: From Pre-Commit Validation to Post-Merge Governance

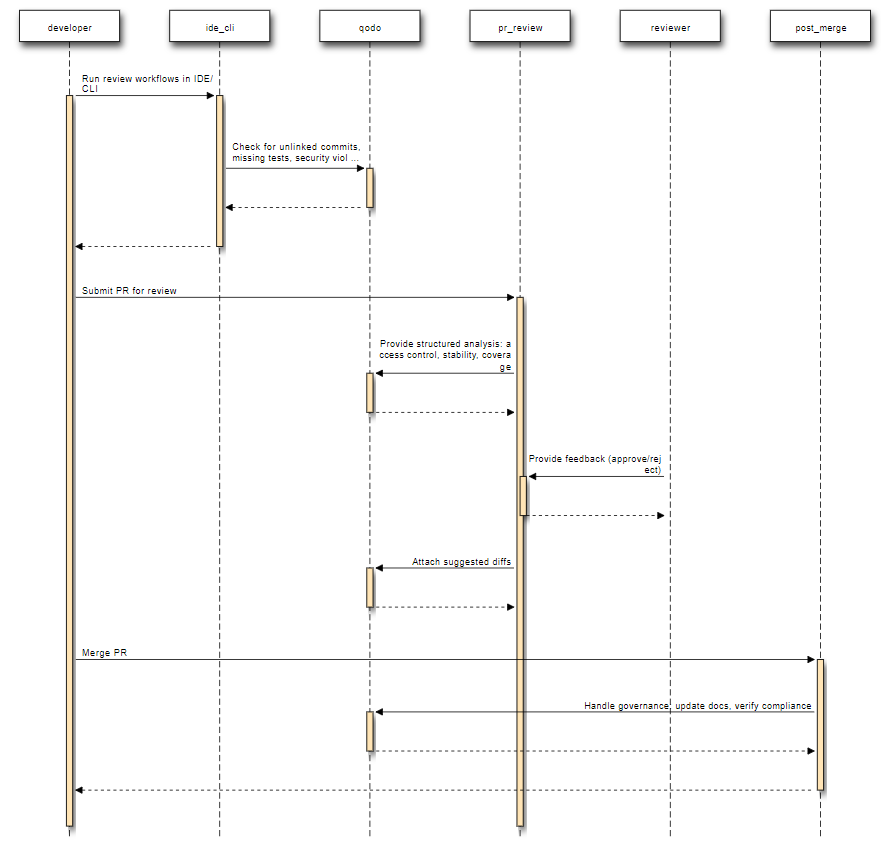

Qodo is engineered to enforce code integrity at every stage of development, not just inside pull requests. Instead of treating review as a one-time gate, it anchors checks from the first commit through to deployment. Here is the flow to illustrate Qodo’s code integrity process at every stage of development:

Explanation of Stages in the Sequence Diagram:

- Before the PR: Runs review workflows directly in the IDE or CLI, blocking issues early, from unlinked commits and missing tests to violations of organizational rules or security constraints.

- During the PR: Provides structured analysis by grouping findings into categories (e.g., access control, runtime stability, test coverage), ranking their importance, and attaching suggested diffs. The emphasis is on fewer, higher-value comments that directly affect viability in production.

- After merge: Handles governance tasks automatically, updating documentation, verifying compliance requirements, and documenting audit logs so merged code remains consistent with organizational standards.

This staged approach shifts review from a narrow check on diffs to a ongoing process that preserves viability, correctness, and maintainability throughout the lifecycle.

Hands-On: Reviewing an Express RBAC Service

To evaluate Qodo, I ran it against a minimal Node.js/Express service (auth-svc) that implements role-based access control (RBAC) with simulated authentication via x-user headers.

I raised a pull request where I had updated src/server.js with targeted changes:

- Auth middleware: Fixed to always resolve x-user if present, while keeping public GET routes open and enforcing auth only for protected endpoints.

- PUT /employees/:id: Moved authorization into the handler. Now only Admin, Manager-of-employee, or listed Editor can update. Admin/Manager exclusively control managerId and ACL (viewers, editors).

- New /me endpoint: Returns the resolved user for quick testing.

- Centralized handlers: Added JSON 404 and error responses with consistent logging

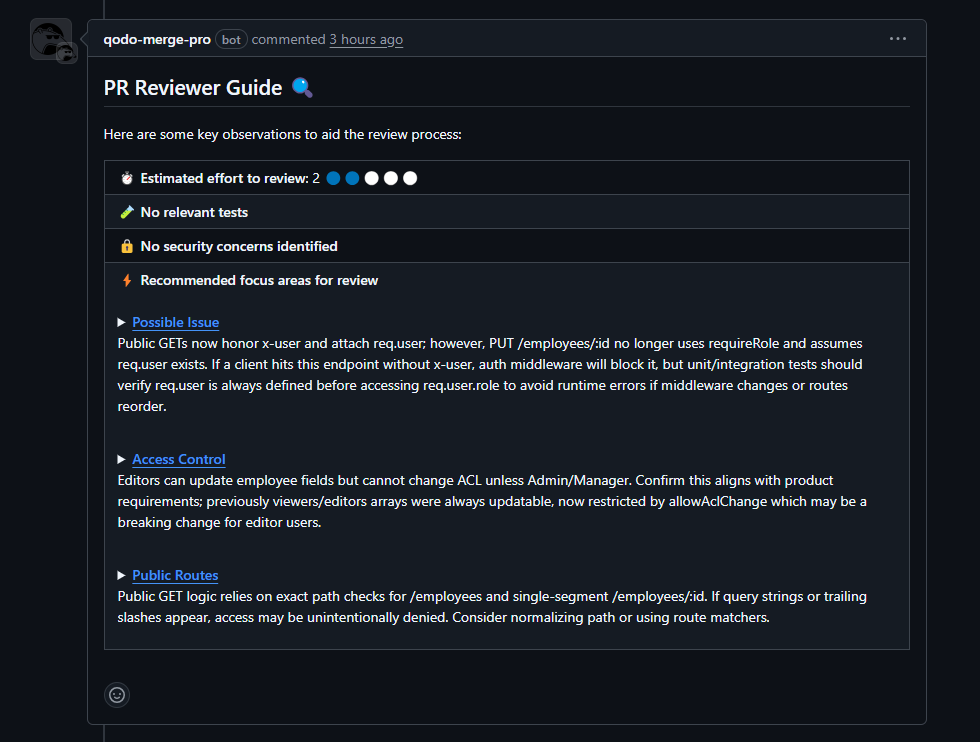

When the PR was opened, Qodo’s merge-pro bot left two structured review comments:

1. PR Reviewer Guide

- Estimated effort rating for the review.

- Flags for “No relevant tests” and “No security concerns identified.”

- Focus areas highlighted: Access control, public routes, and potential runtime issues with req.user.

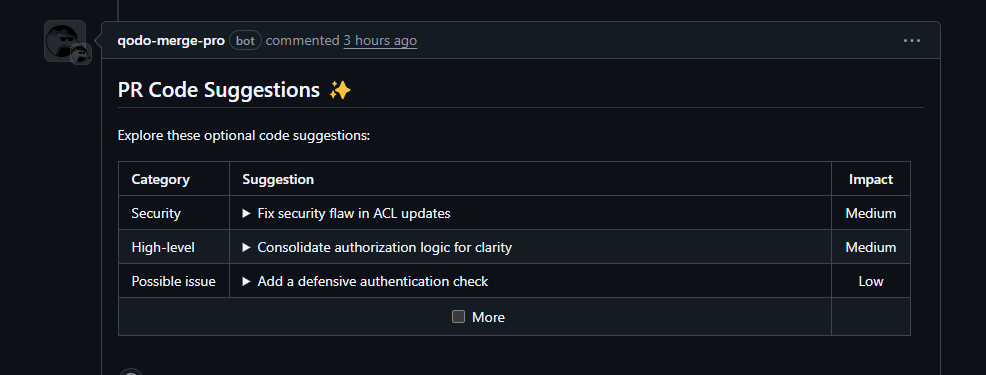

2. PR Code Suggestions

- Security: Block unauthorized ACL updates explicitly (importance score: 8/10).

- High-level: Consolidate authorization checks into a single checkPermissions block for clarity (importance: 7/10).

- Possible issue: Add a defensive req.user check at the start of PUT /employees/:id to avoid edge-case crashes (importance: 6/10).

What stood out was the structured nature of the review. Instead of scattering inline nitpicks, Qodo grouped feedback into categories (security, high-level clarity, possible issues), prioritized them, and attached concrete diffs. The “effort to review” and “focus areas” gave quick scoping before diving into the code. This is where Qodo shows its strength: fewer, higher-signal comments with explicit prioritization. For teams dealing with large volumes of PRs, this kind of structure helps reviewers focus on what matters most while avoiding noise.

Pricing

Qodo targets enterprise buyers. It’s available in SaaS, private VPC, and fully on-prem tiers, with pricing structured for large-scale adoption rather than individual developers.

Pros

- Covers the entire SDLC: shift-left validation, PR review, shift-right governance.

- Strong cross-repo intelligence, including commit history and dependency mapping.

- Extensible via custom agents tailored to team workflows.

- Built-in compliance and governance features suited for regulated industries.

Cons

- Heavier to roll out than lightweight PR-only tools.

- Best fit for larger teams or enterprises; less suited for small side projects.

How Qodo Changes the Shape of Review

Qodo reframes review from “leaving comments on a diff” to ensuring code integrity across the lifecycle. With Qodo Aware providing deep repository context and structured prioritization, reviews are less about catching surface-level issues and more about ensuring that merged code is correct, viable, and compliant at scale.

Next up in my list is CodeRabbit, an open-source option that takes a different approach by letting teams define and evolve their own review rules.

2. CodeRabbit: PR Reviews That Adapt Over Time

CodeRabbit is a pull request review assistant that integrates directly into GitHub and GitLab. Instead of analyzing an entire repo graph, it works at the PR level, leaving targeted inline comments and adapting to your team’s feedback over time. The platform is built to keep reviews lightweight and natural, minimizing noise by learning which suggestions your team accepts and which ones you ignore.

Hands-On: Catching Runtime and Access Control Pitfalls

Here’s how the PR looked when I ran it through CodeRabbit on our Express RBAC service. The review immediately identified two problem areas that were both quite fine.

The first one was in the middleware, where we resolve the x-user header. If you look at the screenshot here:

You’ll see that I was trying to reject unknown users right away, before I even checked if the route was public. That meant if somebody hit /, /health, or a GET /employees with a bad header, the request would still get a 401, even though those endpoints are supposed to be public. In other words, just having an invalid x-user header was enough to take down routes that should be anonymous. CodeRabbit suggested a cleaner flow: parse the header, attach the user only if it resolves, and then decide later whether to 401 based on whether the route is public. It also suggested differentiating between a missing header and an unknown user in the error response. That feedback was spot on; it wasn’t just a style nit, it was a runtime bug that could break public endpoints in ways normal tests wouldn’t catch.

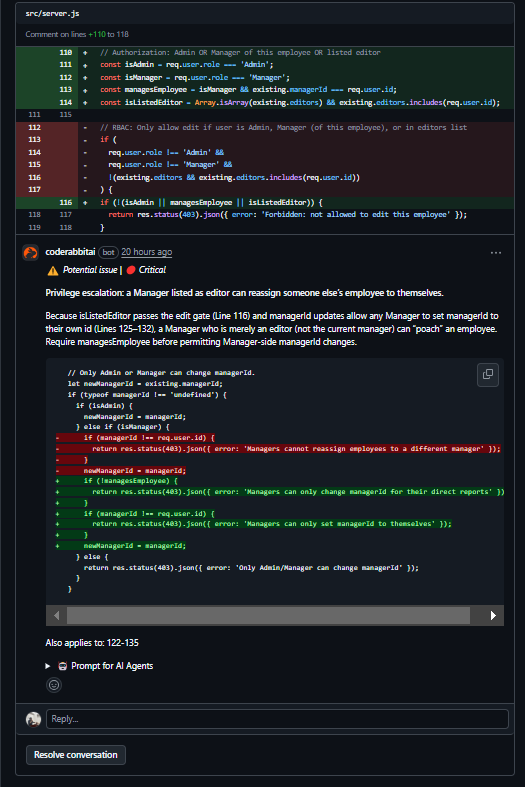

The second issue was more serious and came up in the PUT /employees/:id handler. Take a look at this comment below:

The way I had written the gate, a Manager who was also listed as an Editor could slip through and update the managerId field. That effectively let them reassign an employee they didn’t manage and designate themselves as the new manager. It’s a neat little privilege escalation: any Manager who was also an Editor could “poach” employees. CodeRabbit’s patch tightened it up by requiring that a Manager must actually manage the employee before they can touch managerId, and also restricted Managers so they could only assign the field to their own ID, never someone else’s. Admins still bypass as before. That nailed the problem exactly; the bug was coming from conditions overlapping in a way that created a hole.

What made this review actually useful is that CodeRabbit didn’t drown me in noise. It flagged the middleware bug as Major, the manager escalation as Critical, and kept the rest of the small style stuff collapsed. The explanations were in plain language and tied directly to runtime behavior and security impact. Instead of me digging through scattered nitpicks, the tool highlighted the exact two things that could cause real issues in production. It felt less like static analysis and more like having another senior reviewer on the PR who knew how to prioritize.

Pricing

CodeRabbit is a SaaS with subscription tiers based on team size. A free plan is available for open-source projects, making it accessible to smaller teams or community work.

Pros

- Installs in minutes, no heavy setup.

- Inline PR comments that feel like natural peer review.

- Learns from your feedback, minimizing noise over time.

- VS Code plugin supports pre-PR validation.

- Free tier for open-source projects.

Cons

- No enterprise-grade compliance or audit features.

- Limited to GitHub and GitLab; no Bitbucket support.

- Focus is on PR efficiency, not broader lifecycle automation (e.g., no auto-generated tests or changelogs).

Where CodeRabbit Fits Best

CodeRabbit is best if you want to keep reviews lightweight and adaptive. It doesn’t try to enforce policies across your org, but it does make PR reviews faster and more consistent. For small to mid-sized teams, or open-source maintainers, it’s an easy way to add a reliable “extra reviewer” without the overhead of enterprise review platforms.

3. Gemini: Google’s On-Demand Reviewer Inside GitHub

Gemini Code Assist works as a GitHub pull request reviewer. Once installed, it automatically joins PRs, posts summaries of changes, and adds inline comments. Instead of bots that always run on every push, Gemini is prompt-driven; you can type /gemini review or /gemini summary in a PR comment to re-run it on demand.

Hands-On: Hardening a Service Health Dashboard

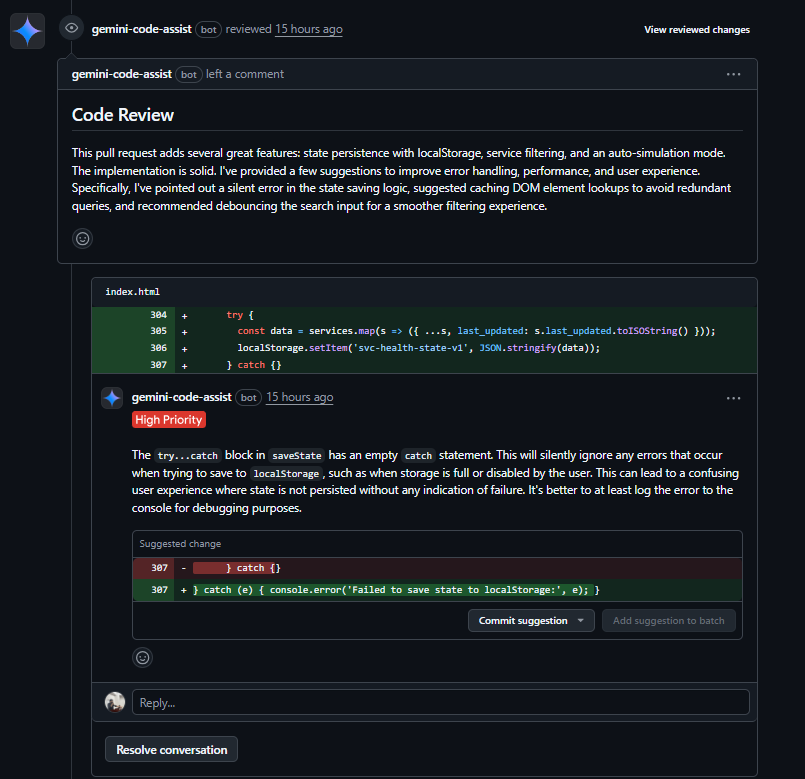

I pushed up the Service Health Dashboard changes on a feature branch that bundled persistence with localStorage, search, status filtering, and the auto-simulation interval toggle. Functionally, everything worked fine: I could update statuses, trigger randomization, reload the page, and the state was restored as expected. But when Gemini reviewed the PR, it pointed out a few places where the code could be more reliable and maintainable.

Here is how Gemini gave the suggestions:

As shown in the above review, the first thing it caught was in the persistence logic. In saveState I had wrapped the localStorage write in a try…catch, but left the catch block empty. That meant if localStorage was full or disabled, the error would vanish silently and the user wouldn’t know why their state wasn’t saving. Gemini flagged this as high priority and suggested logging the error to the console so that at least developers would see what went wrong. You can see the comment here:

It made the same point for the loading state as well. Parsing malformed JSON was also failing silently and falling back to mock data. Logging those errors would make it clear when persistence was broken instead of hiding the problem.

From there, it turned to the auto-simulation feature. Right now, startAutoSim and restartAutoSimIfEnabled both grab the #autoSimulate and #autoInterval elements with document.getElementById every time they’re called. Gemini pointed out that those references never change, so it’s cleaner to cache them once on init and reuse them. It was also noticed that startAutoSim was redundantly checking the checkbox even though its only caller had already handled that. And the event listeners for the checkbox and interval input were written separately when they could just be collapsed into a loop that attaches the same handler. Small changes, but they make the code easier to read and maintain.

The last thing Gemini flagged was the search input. Every keystroke triggers a re-render of the table, which is fine when you only have a handful of services, but it starts to feel rough when the list grows. The recommendation was to debounce the handler so that the table only re-renders after the user pauses typing for a short time, which keeps the UI smooth without unnecessary work.

What I liked about this review is that it didn’t nitpick the features themselves; it confirmed persistence, filtering, and autosim all worked, but it zeroed in on the kind of refinements a senior reviewer would care about. Don’t swallow errors that will make debugging impossible. Cache DOM lookups instead of repeating them. Trim down redundant checks. Debounce user input for performance. The features shipped either way, but Gemini’s feedback pushed the implementation toward something more solid and maintainable over time.

Pricing

Gemini is part of Google Cloud’s pay-as-you-go model. Usage costs depend on the underlying Vertex AI calls and GitHub integration. It’s not a flat per-seat SaaS subscription like CodeRabbit or Greptile.

Pros

- Integrated directly into GitHub pull requests.

- Manual control via slash commands is handy.

- Severity labels on issues (Critical to Low) make triage easy.

- One-click patches streamline fixes.

- Strong fit for teams already invested in Google Cloud.

Cons

- Only works with GitHub; no GitLab or Bitbucket support.

- Doesn’t maintain institutional memory across repos.

- Limited governance features compared to enterprise-first tools.

Targeted PR Feedback Inside GitHub

Gemini Code Review is a suitable option as a lightweight reviewer that lives inside GitHub. It adds context, severity labels, and ready-to-apply fixes, but doesn’t try to enforce org-wide standards. For teams already integrated with Google Cloud, it’s a practical way to add AI-assisted review without significantly altering existing workflows.

Next up in my list is Aikido, a security-first platform that brings static analysis, IaC checks, and secrets scanning into the review flow.

4. Aikido: Security-First Checks Embedded in the Review Flow

Aikido is less of a pure AI reviewer and more of a security-first tool that combines static code analysis, infrastructure scanning, secrets detection, and more under one umbrella. It integrates into your Git workflow, surfaces vulnerabilities in code, infrastructure-as-code, dependencies, and container images, and proposes fixes.

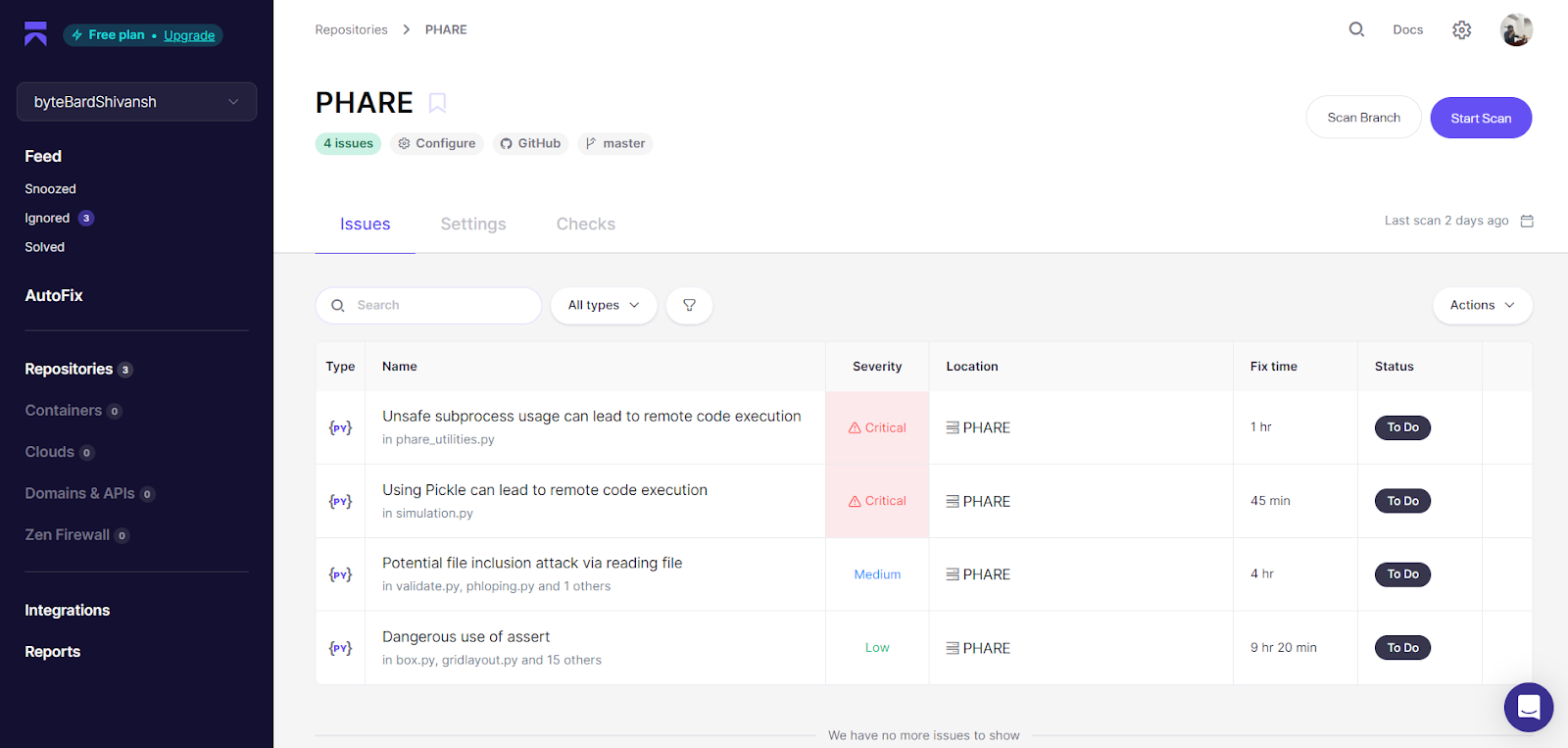

Hands-On: Scanning a Python Repo

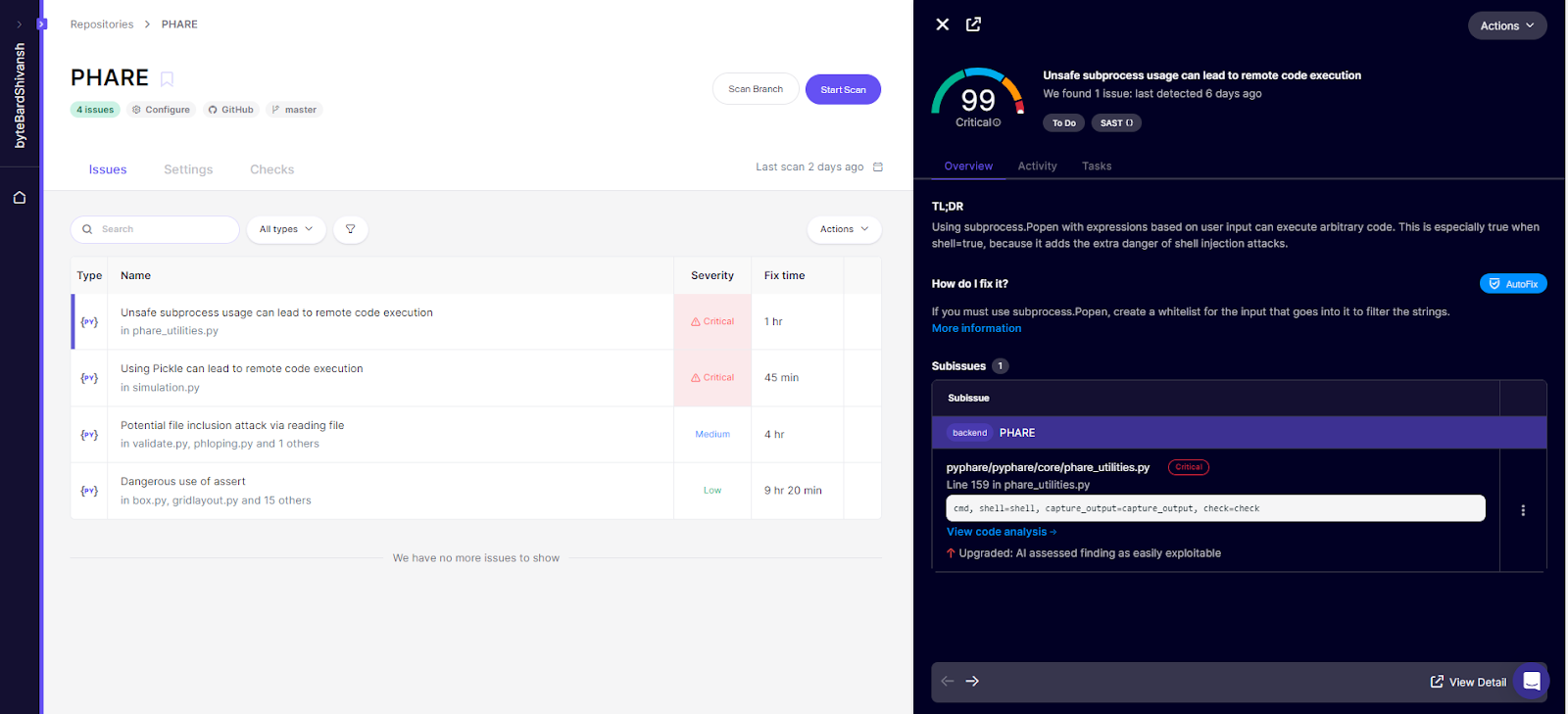

When I scanned the PHARE repository with Aikido Security, it identified four issues immediately: two marked as Critical, one as Medium, and one as Low. The issues dashboard gave me the severity, location, and even rough fix times, useful for prioritizing what to tackle first. Here’s what it showed me in a dashboard view after the repo scan:

The most concerning was unsafe subprocess usage in phare_utilities.py. Aikido flagged this as Critical because subprocess.Popen was being used with shell=True, which opens the door to remote code execution if any part of the command string is influenced by user input. That’s exactly the kind of minor but catastrophic risk you don’t want slipping into a scientific codebase.

Clicking into the issue brought up a detail panel:

The panel broke down the information really clearly. At the top, it labeled the severity as Critical with a risk score of 99, and under “TL;DR,” it explained in plain language why this was dangerous, using shell=True adds the extra danger of shell injection attacks. Right below that, it gave a practical fix: if subprocess.Popen really can’t be avoided; whitelist the inputs that get passed through.

It didn’t just stop at the theory. The “Subissues” section pointed me to the exact file and line (phare_utilities.py line 159) and showed the vulnerable snippet:

cmd, shell=shell, capture_output=capture_output, check=check

The panel even annotated that this finding had been “upgraded” by AI assessment as easily exploitable, meaning it wasn’t just a theoretical risk; it was a real RCE vector. That extra bit of triage is important because it signals what should be fixed first, before even considering medium- or low-priority issues.

Alongside this, Aikido flagged the second Critical issue: pickle usage in simulation.py. Same category of risk, deserializing untrusted input with pickle can hand over code execution. The Medium issue was potential file inclusion in validation scripts, and the Low one was bare assert usage, which can disappear entirely if Python is run with optimizations.

What worked well here is that Aikido didn’t just dump a lint-style report. It gave context, remediation guidance, file/line pinpointing, and even rough time estimates to fix. That combination makes it easier to prioritize; I know to patch the subprocess call before worrying about cleaning up asserts.

Pros

- Broad coverage: code, dependencies, IaC, containers, secrets.

- Integrates into PR workflows and CI/CD.

- Ability to propose fixes, not just raise issues.

- Noise reduction logic to filter out low-value alerts.

Cons

- The focus is more on security and risk than on deep code style or architectural reviews.

- Setup may require enabling features per repo and tuning checks.

- Some checks (especially for IaC and containers) may require an environment context or configuration to reduce false positives.

Aikido’s Role in the Review Process

If your priority is security, compliance, and catching high-risk issues early, Aikido is a suitable choice. It isn’t a drop-in alternative to tools like Qodo or Kodus when it comes to enforcing dev standards or generating tests, but it is effective in stitching security posture into your PR flow.

From security to maintainability: the next tool I examined was Codacy, which relies heavily on static analysis and repository-wide quality checks.

5. Codacy: From Security to Maintainability

Codacy takes a different angle. Its strength is static analysis, checking style, complexity, duplication, test coverage, and common security patterns across repositories. The goal isn’t to add inline AI-style suggestions but to enforce consistency and maintainability through measurable quality gates.

Hands-On with Codacy on custom auth-service:

Codacy’s scan on the auth-svc branch showed me there’s work to do before this should ever hit main. The dashboard flagged 8 issues: 4 tied to security, 3 to complexity, and 1 under best practices. Additionally, the complexity score was 33% with an issue density of 5.76 per KLOC. For a small service, those numbers are a warning sign; it means handlers or utilities are carrying too much logic, which makes them harder to test and more fragile over time.

The security findings lined up with what I’d expect in a rushed branch:

- inputs being passed through without validation,

- error handlers that could leak stack traces if something slipped through,

- and permissive route definitions that expose more endpoints than necessary.

None of these are going to blow up unit tests, but in production, they’re the exact kinds of footguns that come back later as real incidents.

Looking at the PR for “fix(auth): honor x-user on public GETs; enforce PUT RBAC in handler”, Codacy wasn’t impressed. It marked the PR as not up to quality standards and flagged two new medium-severity issues introduced in the diff.

One of them was in server.js: the error handler was defined with a next parameter that never got used. On paper, that looks harmless, but in an Express app, it’s misleading; the presence of next makes other engineers assume it’s part of a middleware chain. Leaving it in place wastes time when someone comes along later expecting errors to propagate. The fix is simple: drop next from the signature so the function reads exactly as it behaves.

That’s the value Codacy added here. It didn’t just bark about unused variables; it caught:

- branch-level risks: unvalidated inputs, unsafe defaults, high complexity;

- PR-level clarity issues: misleading code patterns introduced in the latest change.

For me, this is where Codacy fits in the stack. It’s not about catching the critical RBAC bugs CodeRabbit flagged or the runtime pitfalls Gemini spotted; it’s about ensuring the code that merges doesn’t become harder to maintain or mislead the next developer in line.

Pricing

Codacy is a SaaS with tiered pricing. There’s a free plan for open-source projects, and paid plans are per-seat for private repos. Enterprises can also run Codacy Self-Hosted on Kubernetes for full control.

Pros

- Strong static analysis with wide language coverage.

- Enforces quality gates and standards across all repos.

- IDE plugins (Guardrails) bring checks into local dev.

- Self-hosted option available for enterprises.

Cons

- Less interactive in PRs compared to tools like Qodo or CodeRabbit

- Focuses on metrics and gates rather than contextual explanations.

- Noise levels can be high if rules aren’t tuned properly.

Where Codacy Fits Best

Codacy is best for teams that want consistent quality checks enforced across multiple repos with minimal manual review. It’s not an AI reviewer in the conversational sense, but it works well as a guardrail for coverage, duplication, and standards. For orgs that need static analysis baked into CI/CD and the ability to run self-hosted, Codacy is a mature option.

6. Devlo: An AI Teammate That Can Review and Contribute

Devlo is pitched less as “just another review bot” and more like a teammate that happens to be an AI. It plugs into GitHub, watches your issues and pull requests, and you can literally tag it (@devloai) to review code, summarize a PR, or even draft a fix. Instead of static tools that only scan and comment, Devlo can also generate new PRs from issues, reply to review threads, or automate follow-ups.

Hands-On: Putting Devlo Into a PR

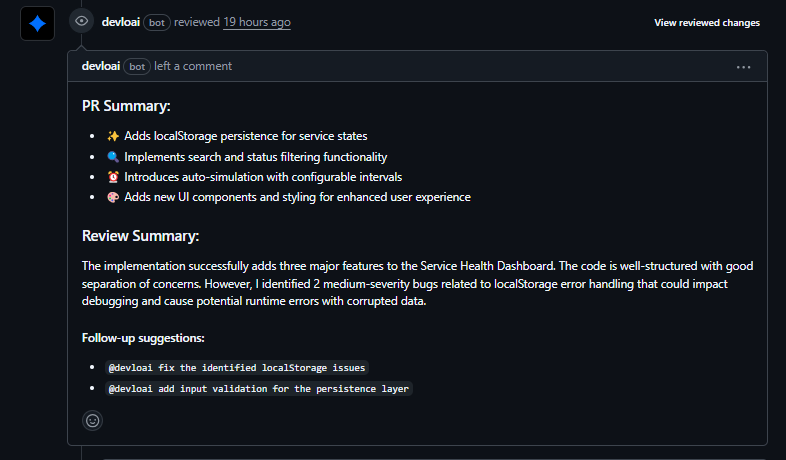

Here’s how the Devlo.ai review played out when I ran it against the Service Health Dashboard PR. Instead of just listing files and diffs, the bot scanned the whole change and came back with a structured summary: what the PR introduced (localStorage persistence, search/filtering, auto-simulation, UI tweaks), plus a quick assessment of overall quality. It didn’t stop there; it immediately called out two medium-severity bugs in the persistence layer that could cause data loss or runtime errors if left unchecked.

Here’s how the Devlo.ai scan summarized the PR, flagged the issues, and even suggested concrete follow-ups like fixing localStorage error handling and adding validation:

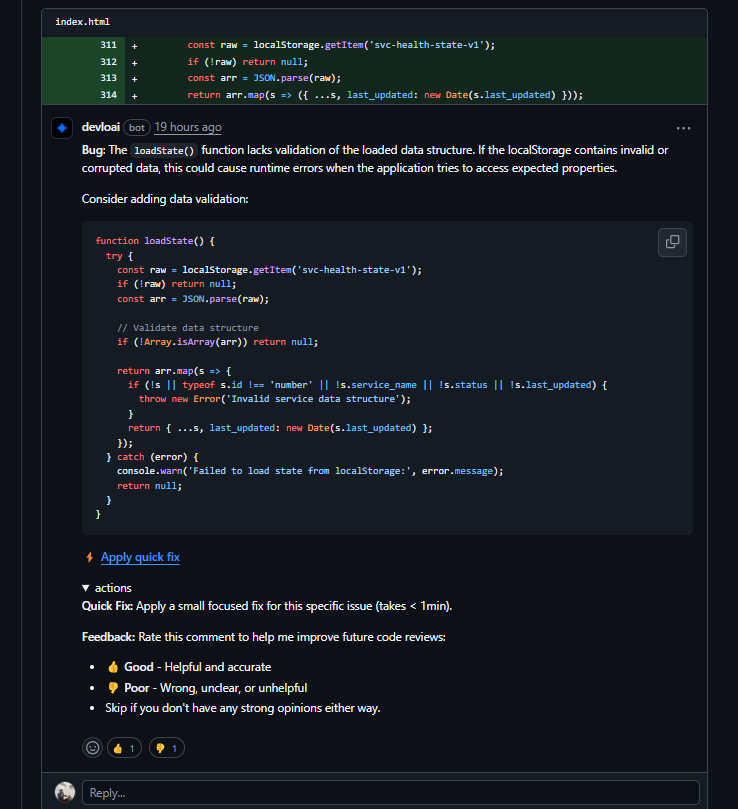

The first detailed comment zeroed in on the persistence functions. Both saveState() and loadState() used empty catch blocks, which meant if localStorage threw (quota exceeded, browser disabled, etc.), the app would silently fail and keep running as if nothing happened. That’s a recipe for confused users and hard-to-trace bugs. Devlo.ai’s suggestion was dead simple but effective: add console.warn with the error message so devs at least have visibility during debugging. This snapshot below shows the inline recommendation to replace the empty catch with proper logging so failures aren’t swallowed:

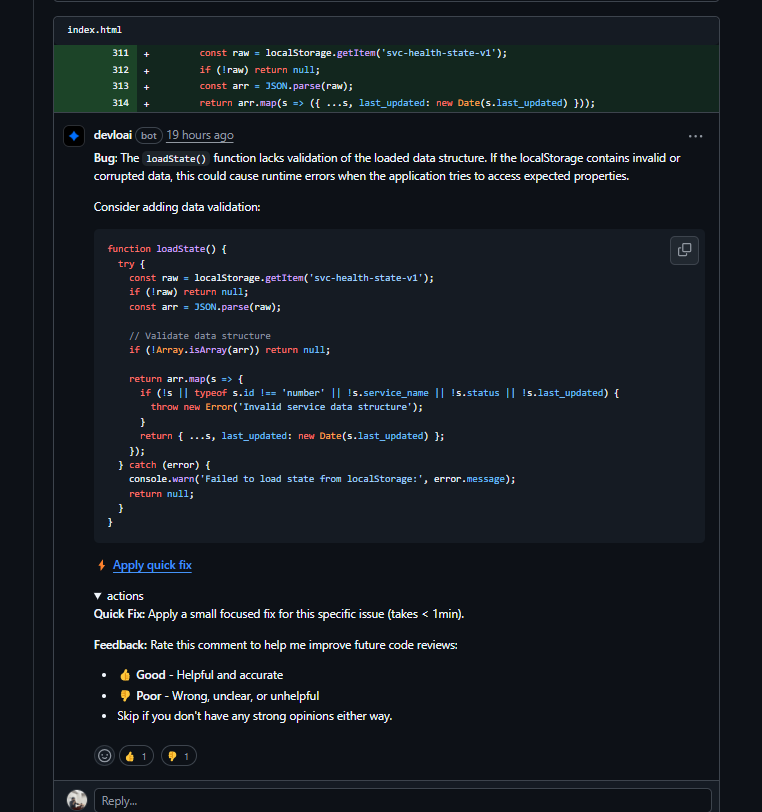

The second bug report drilled deeper into loadState(). Even if persistence succeeded, the function never validated the shape of what came back. Corrupted or tampered JSON could slip through, leading to runtime errors down the line when missing properties are accessed. The bot flagged this as risky and suggested validating the array and ensuring every service object has id, service_name, status, and last_updated. That’s defensive coding; you typically add only after burning your hands in production.

Here is how Devlo recommends adding schema-like checks and throwing an explicit error if the persisted data doesn’t meet expectations:

What stood out in this run was how Devlo.ai framed the findings:

- It treated these as runtime bugs, not cosmetic issues.

- It paired every flag with a drop-in patch, cutting out the guesswork.

- It explained why these failures matter: silent data loss and corrupted state leading to crashes.

This wasn’t a checklist-style review; it read more like a seasoned engineer saying, “Here’s where this will break in the wild, and here’s how to harden it right now.”

Pricing & Deployment

Devlo comes in free and paid tiers via the GitHub Marketplace. The free tier covers basic review and ticket resolution. Paid tiers unlock higher usage and more automation (like generating PRs at scale). Since it has write access to repos, you need to treat it with the same caution as a teammate who can merge code; permissions should be scoped carefully.

Pros

- Goes beyond review, can summarize, resolve comments, and even draft code changes.

- Adapts to your team’s feedback, allowing the noise level to drop over time.

- Tag-based control means you decide when it acts, instead of it spamming every commit.

- Useful for small fixes and repetitive tickets that burn reviewer time.

Cons

- Because it has broader permissions, a poorly suggested action applied blindly could be riskier than a comment-only bot.

- Coverage is wide, but depth varies; complex edge cases may still need human review.

- Works only in GitHub; no GitLab/Bitbucket support yet.

How Teams Typically Use Devlo

Devlo feels closer to an eager junior dev on the team than a lint tool. It’s best when you want to offload repetitive review and ticket work without setting up heavy compliance frameworks. You still need humans in the loop for judgment calls, but it can shave cycles off common reviews and keep small issues from stalling.

How These Tools Shape Code Review

These tools address distinct pain points in the enterprise review workflow: some optimize PR review by surfacing diff context or automating checks, others harden code pipelines with security scanning and policy enforcement, while a few emphasize governance controls or developer training. The table below maps out their differentiators so you can see where each fits into the review lifecycle.

| Tool | IDE Support | PR Enrichment (tests/docs/changelog) | Compliance / Governance | Security Focus | Deployment Fit |

| Qodo | VS Code, JetBrains | Yes – tests, docs, changelogs | Strong – org-wide standards, SOC 2, VPC/on-prem | Moderate | SaaS / VPC / On-prem |

| CodeRabbit | VS Code | No | Weak | Low | SaaS (GitHub/GitLab) |

| Gemini (Google) | GitHub App only | No | Weak | Low | Strong for Google Cloud orgs |

| Aikido | None | No – but scans PRs for issues | Moderate | Strong – SAST, IaC, containers, secrets | SaaS |

| Codacy | VS Code, IntelliJ | No – static analysis only | Strong – quality gates & self-host option | Moderate | SaaS / Self-host |

| Devlo | GitHub only | Partial – drafts, fixes & PRs | Weak | Low | SaaS (GitHub) |

Context-Driven Code Review Aligned with Enterprise SDLC Workflows

In large organizations, code review has grown far beyond catching bugs in a pull request. It’s about aligning changes with requirements, keeping dozens of repositories consistent, meeting compliance needs, and doing all of that without overwhelming engineers in trivial comments. The challenge isn’t generating feedback; it’s making that feedback precise, trustworthy, and connected to the bigger system.

Qodo solves this by grounding reviews in a deep understanding of the codebase. Its Qodo Aware engine indexes repositories, commit history, and dependencies, so reviews are never blind to the rest of the system. Suggestions don’t just flag issues in isolation; they reference patterns from past bugs, architectural dependencies, or standards defined by the team itself. This context is what cuts through noise and makes feedback actionable.

Standards live inside the repository in versioned files like .pr_agent.toml or best_practices.md. This means the rules evolve alongside the codebase, and every change is traceable. When a developer opens a PR, Qodo can respond with targeted commands:

- /review: runs structured analysis, checking both code context and ticket alignment.

- /improve: proposes concrete diffs rather than vague advice.

- /implement: applies accepted feedback automatically, minimizing rework.

- /add_docs and /update_changelog: keep supporting artifacts in step with the code.

Every suggestion is delivered as a before/after diff, and maintainers remain in control. Qodo also tracks which recommendations are accepted or dismissed, fine-tuning its checks over time to emphasize what’s genuinely valuable.

From development to release, Qodo integrates code review into the entire lifecycle:

- Early in development: IDE guidance helps developers apply best practices before opening a PR.

- During review: context-aware analysis, compliance checks, and automated fixes.

- After the merge: updated documentation, validated standards, and an auditable record of applied rules.

The outcome is not an AI bot that sprinkles comments, but a code review system built for the SDLC itself, scalable, auditable, and tuned to the realities of enterprise engineering.

FAQs

What is the best Greptile alternative for an enterprise?

There isn’t a single “best” choice; it depends on priorities. Qodo is often chosen for lifecycle-wide governance, compliance, and on-prem deployment. Aikido stands out when security scanning is the top concern, while Codacy fits teams that want static analysis and self-hosting. Enterprises usually evaluate based on scale, compliance needs, and deployment flexibility.

What is the best Greptile alternative for an enterprise?

There isn’t a single “best” choice; it depends on priorities. Qodo is often chosen for lifecycle-wide compliance and on-prem deployment. Aikido stands out when security scanning is the top concern, while Codacy fits teams that want static analysis and self-hosting. Enterprises usually evaluate based on scale, compliance needs, and deployment flexibility.

Which AI code assistants suggest best practices during code review?

Several tools showcase best practices during reviews. For example, Qodo let teams define organizational rules that reviews are checked against. CodeRabbit and Gemini adapt over time, learning from accepted or ignored suggestions to highlight improvements in maintainability and clarity.

Which alternatives tend to fit enterprise teams?

Enterprises typically seek features such as cross-repository awareness, compliance enforcement, deployment flexibility (SaaS, VPC, on-premises), and integration with ticketing systems. Tools like Qodo or Codacy are often chosen in these contexts, while Aikido is preferred when security scanning is the priority.