Maximizing Automated Testing in AWS Serverless Architecture for qodo Users

Learning to write tests makes you a better programmer, but learning to write tests faster and better is a superpower. qodo (formerly Codium) developers generate automated code tests that mock the AWS services used by functions deployed on AWS Lambda, AWS’s serverless compute service.

But many developers hate writing tests for their code. It feels like an endless loop, until this is you…

Low-code testing tools like qodo (formerly Codium) have been helpful so far. But how do they work for AWS-related tasks?

Let’s take a tour…

Case Study: AWS Systems Manager and Lambda Function Integration for Scheduled Maintenance

The table format:

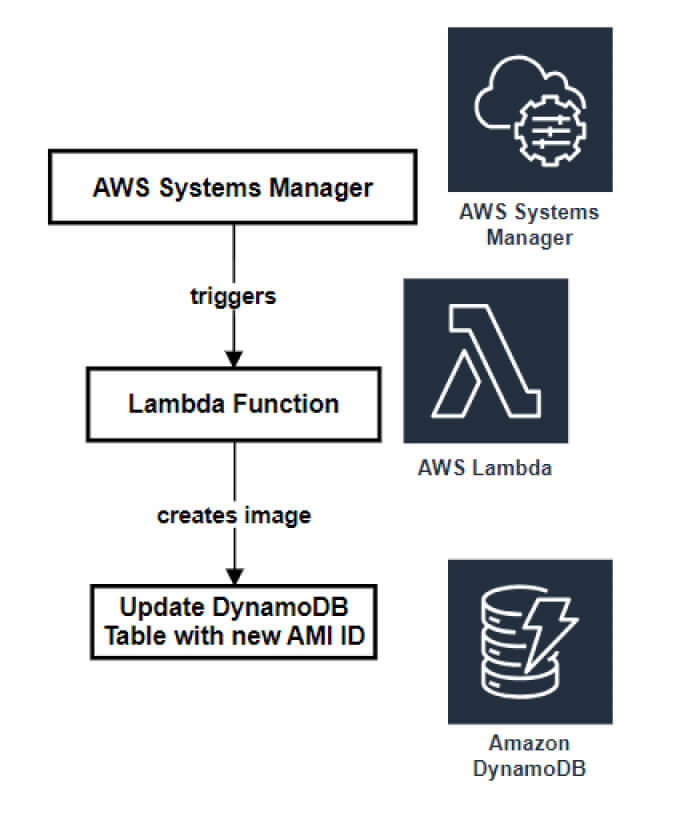

An interesting use case is one where a developer uses AWS Systems Manager to schedule a maintenance window that triggers a Lambda function.

In this article, we’ll see how to test a simple serverless Python script defined inside a Lambda function. The function creates a virtual machine image, aka an Amazon Machine Image (AMI), out of an instance ID. It also saves the AMI ID as a state in a DynamoDB database.

In a more complex use case, this AMI can also be copied into another region. Then, at every maintenance window, the previous AMI and its copy are deleted and a new pair is created. The DynamoDB items would typically be created and maintained via popular infrastructure as code (IaC) tools like Terraform or CloudFormation.
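The rotation described above can be sketched roughly as follows. This is a minimal illustration, not the article's script: `rotate_ami` and its parameters are hypothetical names, the two client arguments are assumed to behave like `boto3.client('ec2')` objects for the source and destination regions, and the dry run at the bottom uses stand-in mocks so no AWS calls are made.

```python
from unittest.mock import MagicMock


def rotate_ami(ec2_source, ec2_dest, instance_id, name, source_region,
               previous_ami_id=None, previous_copy_id=None):
    """Replace the previous AMI (and its cross-region copy) with a fresh pair.

    ec2_source and ec2_dest are assumed to be EC2 clients for the source
    and destination regions (hypothetical parameters for this sketch).
    """
    # Deregister whatever the last maintenance window left behind, if anything
    if previous_ami_id:
        ec2_source.deregister_image(ImageId=previous_ami_id)
    if previous_copy_id:
        ec2_dest.deregister_image(ImageId=previous_copy_id)

    # Create the new AMI from the instance
    new_ami_id = ec2_source.create_image(InstanceId=instance_id, Name=name)['ImageId']

    # Assumption: wait until the image is available before copying it
    ec2_source.get_waiter('image_available').wait(ImageIds=[new_ami_id])

    # copy_image is called on the destination-region client
    new_copy_id = ec2_dest.copy_image(
        Name=name, SourceImageId=new_ami_id, SourceRegion=source_region
    )['ImageId']
    return new_ami_id, new_copy_id


# Dry run with stand-in clients (no AWS calls are made)
source, dest = MagicMock(), MagicMock()
source.create_image.return_value = {'ImageId': 'ami-new'}
dest.copy_image.return_value = {'ImageId': 'ami-copy'}
result = rotate_ami(source, dest, 'i-0123456789abcdef0', 'nightly',
                    'eu-west-2', previous_ami_id='ami-old')
```

Passing the clients in as arguments, rather than creating them inside the function, is a design choice made here so the logic can be exercised with mocks before any real infrastructure exists.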

Required Python Packages

Before diving into AWS testing with qodo (formerly Codium), ensure you have the following Python packages installed:

- Boto3

- Pytest

- Moto

- Pytest-mock

Install the Python packages using the following command:

pip install boto3 pytest moto pytest-mock

The name of the file is script.py. Below is a breakdown of what the code does.

import boto3
import datetime

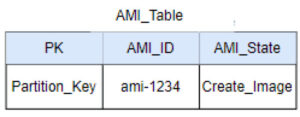

Create DynamoDB Table and Put Item

This function is created for test purposes.

def create_dynamodb_table_and_put_item():
    """
    Create a DynamoDB table and put an item in it.
    """
    dynamodb = boto3.client('dynamodb')

    # Create a DynamoDB table
    dynamodb.create_table(
        TableName='AMI_Table',
        KeySchema=[
            {'AttributeName': 'id', 'KeyType': 'HASH'}
        ],
        AttributeDefinitions=[
            {'AttributeName': 'id', 'AttributeType': 'N'}
        ],
        ProvisionedThroughput={
            'ReadCapacityUnits': 5,
            'WriteCapacityUnits': 5
        }
    )

    # Wait for the table to be created (this is important!)
    dynamodb.get_waiter('table_exists').wait(TableName='AMI_Table')

    # Usually the item is deployed and managed using CloudFormation, Terraform, or an SDK or CDK.
    # The put item is specified here to mock the database.
    dynamodb.put_item(
        TableName='AMI_Table',
        Item={
            'PK': {'S': 'Partition_Key'},
            "REGION_AMI_ID": {"S": "ami-123"},
            "AMI_State": {"S": "Create_AMI"}
        }
    )

Create AMI

# Function to create a new AMI
def create_ami_and_update_attribute(InstanceId):
    table_name = 'AMI_Table'
    key = {'PK': {'S': 'Partition_Key'}}
    ec2 = boto3.client('ec2')
    dynamodb = boto3.client('dynamodb')

    # Get the current time to use as the AMI name
    current_time = datetime.datetime.utcnow().strftime('%Y-%m-%d / %H-%M')

    try:
        create_image = ec2.create_image(
            InstanceId=InstanceId,
            # Use the current time as the image name
            Name=f'{current_time}_AMI'
        )

        # Update the region_ami_id variable
        region_ami_id = create_image['ImageId']

        # Store the region_ami_id value as a state in the DynamoDB AMI_Table
        dynamodb.update_item(
            TableName=table_name,
            Key=key,
            # The colon in :region_ami_id marks a placeholder for
            # a value that is supplied separately.
            UpdateExpression="SET REGION_AMI_ID = :region_ami_id",
            # The actual value of the :region_ami_id placeholder is region_ami_id.
            ExpressionAttributeValues={
                ':region_ami_id': {
                    'S': region_ami_id
                }
            }
        )

        # Return the value so that the caller can use the new AMI ID.
        return region_ami_id

    # Any failure while creating the AMI or updating DynamoDB ends up here
    except Exception as e:
        return f"Error creating AMI: {str(e)}"
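The placeholder convention used in the update call can be seen in isolation with a small helper. This is only a sketch: `build_ami_update` is a hypothetical name that does not appear in script.py, and it simply builds the keyword arguments that would be passed to the DynamoDB client's `update_item`.

```python
def build_ami_update(table_name, key, ami_id):
    """Return keyword arguments for an update_item call that stores ami_id.

    Hypothetical helper for illustration; not part of the article's script.
    """
    return {
        'TableName': table_name,
        'Key': key,
        # ':region_ami_id' is a value placeholder inside the expression...
        'UpdateExpression': 'SET REGION_AMI_ID = :region_ami_id',
        # ...and its concrete, typed value is supplied here
        'ExpressionAttributeValues': {':region_ami_id': {'S': ami_id}},
    }


kwargs = build_ami_update('AMI_Table', {'PK': {'S': 'Partition_Key'}}, 'ami-456')
# With a real or mocked client this would be used as: dynamodb.update_item(**kwargs)
```

Keeping the arguments in a plain dictionary makes them easy to assert on in a test without touching AWS at all.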

Lambda Function

# Lambda handler function
def lambda_handler(event, context):
    table_name = 'AMI_Table'
    key = {'PK': {'S': 'Partition_Key'}}

    # Initialize the AWS client
    region = 'eu-west-2'
    dynamo = boto3.client('dynamodb', region_name=region)

    # Read the item from the DynamoDB AMI_Table
    db_read = dynamo.get_item(TableName=table_name, Key=key)

    # Get the previously stored ami_id value
    region_ami_id = db_read['Item']['REGION_AMI_ID']['S']

    # Get the AMI State from the DynamoDB table
    ami_state = db_read['Item']['AMI_State']['S']

    if ami_state == 'Create_AMI':
        # Extract the InstanceId from the event dictionary
        InstanceId = event.get('InstanceId')

        # Check if InstanceId value exists
        if InstanceId:
            # Create DynamoDB table and put an item (helper used for test purposes)
            create_dynamodb_table_and_put_item()

            # Create an AMI and update the DynamoDB attribute using the instance ID
            region_ami_id = create_ami_and_update_attribute(InstanceId)
            return f'Created new AMI: {region_ami_id}'

        # When InstanceId value does not exist
        else:
            raise ValueError('InstanceId not found in event data.')
    else:
        raise ValueError('Invalid state')

Generating Tests

Now highlight all the code and run the prompt below in the qodo (formerly Codium) interactive chat to generate tests.

/test Use Pytest

After executing the prompt, several test use cases are suggested. Simply run the tests.

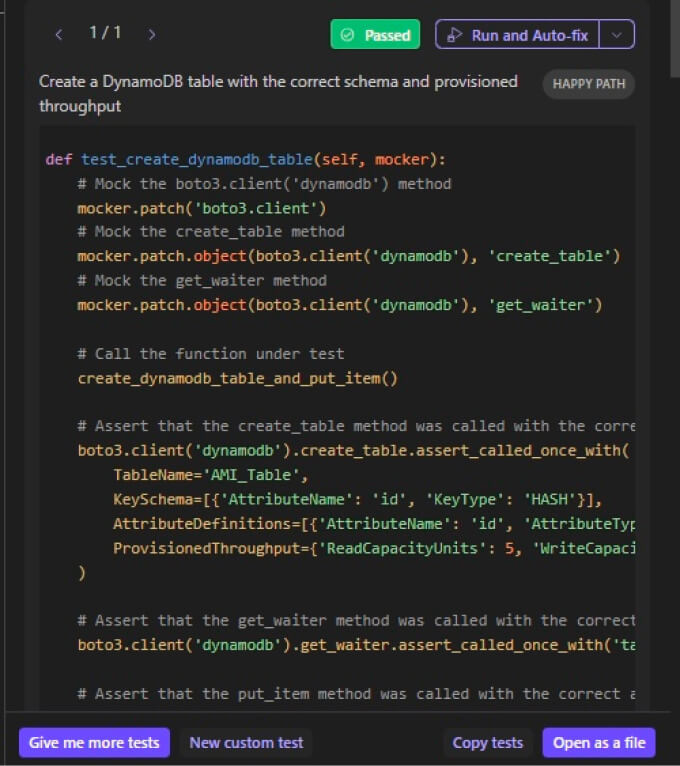

Function: test_create_dynamodb_table

Below is the generated test code.

Suggested code is not always perfect, but about 90% of the time it is. To run this test locally, uncomment the lines wrapped in triple quotes (the imports and a function signature without self) and use that signature in place of the generated one.

Generated Test Code:

'''
import boto3
from script import create_dynamodb_table_and_put_item

def test_create_dynamodb_table(mocker):
'''

# Create a DynamoDB table with the correct schema and provisioned throughput
def test_create_dynamodb_table(self, mocker):
    # Mock the boto3.client('dynamodb') method
    mocker.patch('boto3.client')

    # Mock the create_table method
    mocker.patch.object(boto3.client('dynamodb'), 'create_table')

    # Mock the get_waiter method
    mocker.patch.object(boto3.client('dynamodb'), 'get_waiter')

    # Call the function under test
    create_dynamodb_table_and_put_item()

    # Assert that the create_table method was called with the correct arguments
    boto3.client('dynamodb').create_table.assert_called_once_with(
        TableName='AMI_Table',
        KeySchema=[{'AttributeName': 'id', 'KeyType': 'HASH'}],
        AttributeDefinitions=[{'AttributeName': 'id', 'AttributeType': 'N'}],
        ProvisionedThroughput={'ReadCapacityUnits': 5, 'WriteCapacityUnits': 5}
    )

    # Assert that the get_waiter method was called with the correct argument
    boto3.client('dynamodb').get_waiter.assert_called_once_with('table_exists')

    # Assert that the put_item method was called with the correct arguments
    boto3.client('dynamodb').put_item.assert_called_once_with(
        TableName='AMI_Table',
        Item={'PK': {'S': 'Partition_Key'}, 'REGION_AMI_ID': {'S': 'ami-123'}, 'AMI_State': {'S': 'Create_AMI'}}
    )

Function: test_read_item_from_dynamodb_table

Generated Test Code:

'''
import boto3
from script import create_dynamodb_table_and_put_item, lambda_handler

def test_read_item_from_dynamodb_table(mocker):
'''

# Read an item from the DynamoDB table
def test_read_item_from_dynamodb_table(self, mocker):
    # Mock the boto3.client('dynamodb') method
    mocker.patch('boto3.client')

    # Mock the get_item method
    mocker.patch.object(boto3.client('dynamodb'), 'get_item')

    # Call the function under test
    lambda_handler({}, {})

    # Assert that the get_item method was called with the correct arguments
    boto3.client('dynamodb').get_item.assert_called_once_with(
        TableName='AMI_Table',
        Key={'PK': {'S': 'Partition_Key'}}
    )

The suggested test cases provide a good starting point, but it’s important to customize them to fit your specific use case and requirements. Ensure that you test all possible scenarios and edge cases to have comprehensive coverage and validate the functionality of your code.
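One customization that is often needed is giving the mocks realistic return values. The generated read test, for instance, calls the handler with an empty event and a bare mock, so a handler that branches on the stored state would likely raise before the assertion is reached. The helpers below are a minimal sketch of one way to prepare canned responses using only the standard library; `fake_dynamodb_client`, `fake_ec2_client`, and `fake_boto3_client` are hypothetical names, and the item shape is taken from the script in this article.

```python
from unittest.mock import MagicMock


def fake_dynamodb_client(ami_state="Create_AMI", ami_id="ami-123"):
    """Return a mock whose get_item answers like the AMI_Table item."""
    client = MagicMock(name="dynamodb")
    client.get_item.return_value = {
        "Item": {
            "PK": {"S": "Partition_Key"},
            "REGION_AMI_ID": {"S": ami_id},
            "AMI_State": {"S": ami_state},
        }
    }
    return client


def fake_ec2_client(new_ami_id="ami-456"):
    """Return a mock whose create_image answers with a fixed image ID."""
    client = MagicMock(name="ec2")
    client.create_image.return_value = {"ImageId": new_ami_id}
    return client


def fake_boto3_client(dynamodb, ec2):
    """Build a replacement for boto3.client that dispatches on service name."""
    clients = {"dynamodb": dynamodb, "ec2": ec2}

    def _client(service_name, *args, **kwargs):
        # Extra arguments such as region_name are accepted and ignored
        return clients[service_name]

    return _client


# Example wiring: one state the handler accepts, one it rejects
happy_dynamodb = fake_dynamodb_client()
invalid_dynamodb = fake_dynamodb_client(ami_state="Delete_AMI")
ec2 = fake_ec2_client()
factory = fake_boto3_client(happy_dynamodb, ec2)
```

In a pytest-mock test, the factory could be installed with mocker.patch('boto3.client', side_effect=factory) before calling lambda_handler({'InstanceId': 'i-0123456789abcdef0'}, None). The missing-InstanceId and invalid-state branches can then be covered with pytest.raises(ValueError), using an empty event or a factory built from invalid_dynamodb.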

Best Practices for qodo (formerly Codium) Users

When writing tests for serverless-related tasks, there are a few best practices to keep in mind.

- Simulate IaC-Defined Services: Not all your deployments will be defined using your favorite SDK. Most of them are defined with infrastructure as code (IaC) tools like Terraform, Pulumi, or CloudFormation, which makes it difficult to write proper tests. It is best to simulate the required services in your SDK code, just like I did in this function: create_dynamodb_table_and_put_item().

- Be Ready for Both unittest and Pytest: If you are generating Python tests, qodo (formerly Codium) may generate them using either unittest or pytest. unittest ships with Python, so only pytest needs to be installed. If you are comfortable with both libraries, you can skip the extra instruction in the prompt and use only /test.

- Use Mocking Libraries: To simulate AWS services, use mocking libraries like Moto. This will help you mock responses from AWS services without making actual API calls.

Benefits of Using an Automated Code Testing Tool like qodo (formerly Codium) for AWS Code

- Improved Code Quality: It helps identify bugs and errors in your AWS code, ensuring that it functions as intended and meets the desired requirements. By detecting issues early on, you can fix them before they cause problems in production.

- Time and Effort Saving: Writing tests manually can be time-consuming and repetitive. This tool automates the process, generating test cases for your AWS code quickly and efficiently. This saves valuable time and effort, allowing you to focus on other important tasks.

- Increased Test Coverage: It generates a comprehensive set of test cases, covering various scenarios and edge cases. This helps ensure that your AWS code is thoroughly tested and that all possible scenarios are considered, improving the overall test coverage.

- Consistency: It provides consistent testing practices across your AWS codebase. The generated tests follow predefined testing patterns and guidelines, reducing the chances of human error and ensuring consistent and reliable test results.

- Easy Test Customization: While the tool provides a starting point, the generated tests can be easily customized to fit your specific use case and requirements. You can modify them to include additional assertions, edge cases, or specific scenarios that are relevant to your AWS code.

- Enhanced Collaboration: It provides a common testing framework that can be easily understood and used by the entire development team. This promotes collaboration and enables developers to contribute to the testing process, improving the overall quality of the AWS codebase.

- Rapid Feedback Loop: It provides quick feedback on the functionality and correctness of your AWS code. By running the generated tests, you can quickly identify any issues or regressions, allowing for timely resolution and preventing the introduction of new bugs.

- Cost Savings: It helps identify and fix issues early in the development process, reducing the cost of fixing bugs in production. By catching and resolving issues at an early stage, you can avoid potential downtime, customer dissatisfaction, and costly troubleshooting efforts.

- Facilitates Refactoring and Maintenance: It makes it easier to refactor and maintain your AWS code. As your codebase evolves, the generated tests serve as a safety net, ensuring that modifications or refactoring do not introduce unintended consequences or break existing functionality.

By leveraging an automated testing tool like qodo (formerly Codium) for your AWS code, you can reap these benefits and ensure the reliability, functionality, and quality of your serverless applications and infrastructure.

Conclusion

qodo (formerly Codium) can greatly enhance the testing process for AWS serverless architectures. It saves time and effort by automating the generation of test cases, helps ensure high code quality, and increases test coverage. It also provides consistency and easy customization and fosters collaboration among developers. With it, you can establish a rapid feedback loop, achieve cost savings, and facilitate the refactoring and maintenance of your AWS code. Incorporating such tools into your development workflow helps you create more reliable and robust serverless applications and infrastructure.