The $8 Million CSS Bug: Lessons from Code Quality Issues

A single line of CSS changed. No database migrations. No backend logic alterations. Just a style property updated by a developer who had no idea they were about to cost their company $8.7 million.

It was peak shopping season. The homepage section responsible for 60% of conversion flow went blank for three hours. The immediate loss? $2.3 million in sales. But the real damage came after: a 15% spike in cart abandonment, a 23% drop in repeat customers, and competitors permanently capturing 40% of the company’s traffic.

The bug passed code review perfectly. No linter warnings. Clean syntax. Perfect compilation. From a technical perspective, it was “simple”—the kind of change that takes 30 seconds to review.

The Fatal Assumption

Traditional code review operates under a dangerous premise: technical complexity equals business risk.

We scrutinize complex algorithms but wave through simple CSS changes. We demand architectural reviews for refactors but rubber-stamp feature flag updates. We’ve engineered a system that protects against complicated failures while remaining blind to simple ones that cost millions.

Microsoft research shows we spend most review time on code comprehension, leaving almost nothing for business impact assessment. Organizations currently burn 20-40 engineer-hours daily on review processes, with senior developers stuck in queues trying to understand code instead of assessing risk.

Meanwhile, the real threats slip through:

- A missing null check that passes static analysis

- A CSS rule that breaks mobile layout

- A feature flag defaulting wrong

- A query that cascades across systems

Each of these typically passes traditional review without friction.

Risk-Based Classification: The Solution

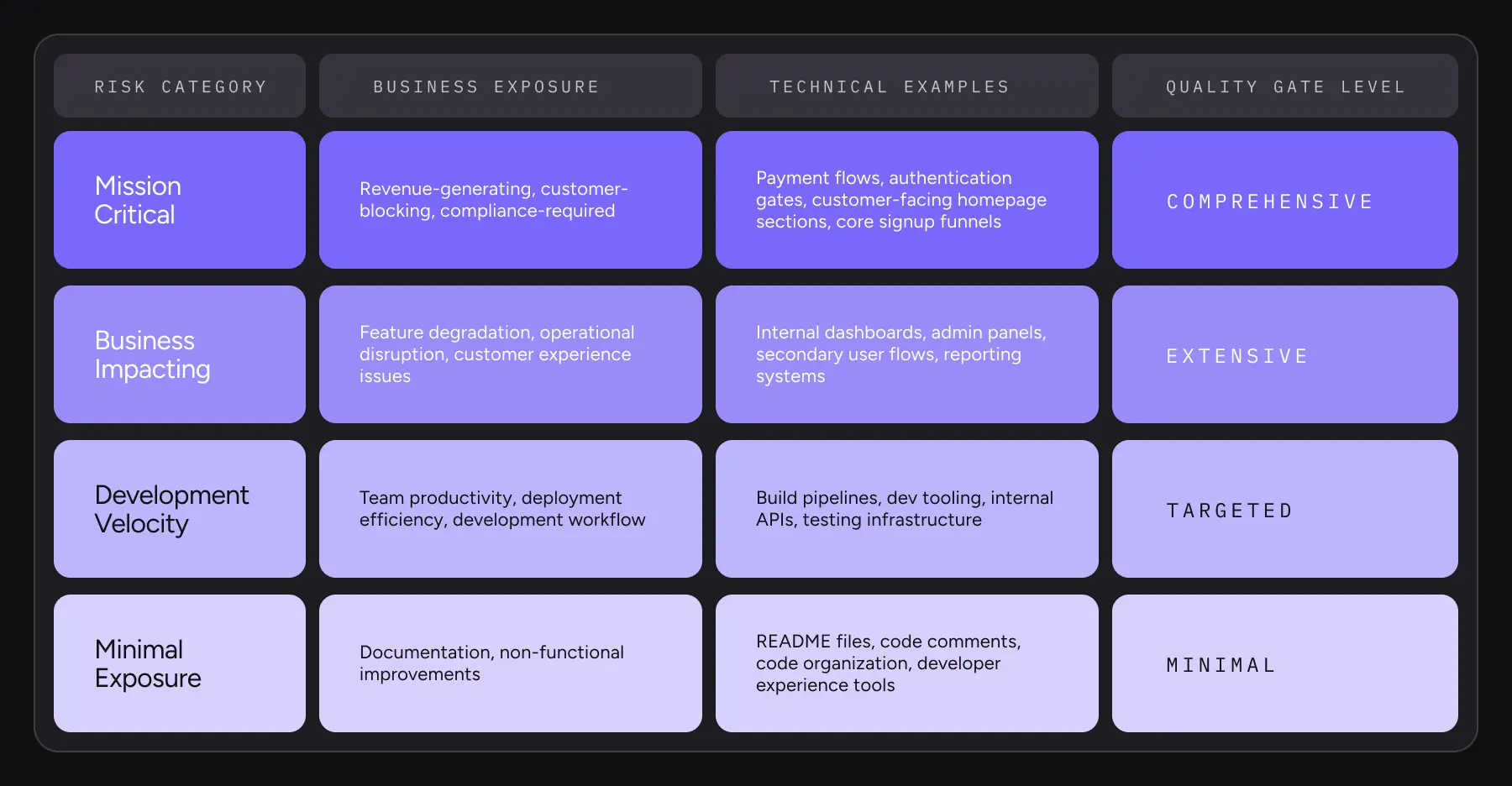

The matrix asks two questions of every change: “Is this code complex?” and “What’s the blast radius if this breaks?”

Create four risk categories based on business exposure and complexity.

That CSS change was Mission Critical—touching core customer conversion. It needed comprehensive review: business sign-off, visual regression testing, multi-device validation. Instead, it got a 30-second syntax check.
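
To make the two-question matrix concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the framework's actual implementation: the `Change` type and `classify` function are hypothetical, and only the labels “Mission Critical” and “Minimal Exposure” come from the text above. The other two quadrant names, and the exact quadrant each label sits in, are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    """A proposed change, scored on the two axes of the matrix (hypothetical type)."""
    description: str
    high_exposure: bool    # does it touch customer- or revenue-facing flows?
    high_complexity: bool  # is the code itself hard to understand?


# Keys are (high_exposure, high_complexity).
# "Mission Critical" and "Minimal Exposure" are named in the article;
# the remaining two labels are placeholders, not the article's terms.
QUADRANTS = {
    (True, False): "Mission Critical",
    (True, True): "High Exposure, Complex (placeholder)",
    (False, True): "Low Exposure, Complex (placeholder)",
    (False, False): "Minimal Exposure",
}


def classify(change: Change) -> str:
    """Look up the risk category from exposure and complexity."""
    return QUADRANTS[(change.high_exposure, change.high_complexity)]


css_fix = Change("one-line homepage CSS tweak", high_exposure=True, high_complexity=False)
print(classify(css_fix))
```

The point of the sketch is that complexity alone never decides the category: the one-line CSS change lands in the strictest quadrant because of what it touches, not how hard it is to read.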

What Changes

With risk-based classification:

- Context-aware processes: Junior devs can approve complex internal algorithms, but senior engineers validate simple homepage changes

- Differentiated quality gates: Mission Critical code gets comprehensive testing; Minimal Exposure gets syntax checks

- Actual incident prevention: You stop the $8 million bugs before they ship
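
As a rough sketch of differentiated quality gates, the mapping below ties each category to the checks mentioned in this article. The `GATES` table and `required_gates` function are hypothetical names, and the fallback behavior for unknown categories is an assumption added for illustration.

```python
# Gates the article associates with each category. Only the two category
# names that appear in the text are listed here.
GATES = {
    "Mission Critical": [
        "business sign-off",
        "visual regression testing",
        "multi-device validation",
    ],
    "Minimal Exposure": ["syntax check"],
}


def required_gates(category: str) -> list[str]:
    """Return the review gates for a category."""
    # Assumption: an unrecognized category fails safe and gets the
    # strictest gate set rather than slipping through unchecked.
    return list(GATES.get(category, GATES["Mission Critical"]))


print(required_gates("Minimal Exposure"))
print(required_gates("Mission Critical"))
```

Failing safe on unknown labels is a design choice: a misclassified or untagged change costs some extra review time instead of shipping with a 30-second check.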

Organizations implementing AI-powered, context-aware code review see:

- 60% improvement in defect detection

- 70-85% reduction in review cycle time

- 20-55% increase in developer productivity

- Significant decrease in preventable production issues

A Practical Framework

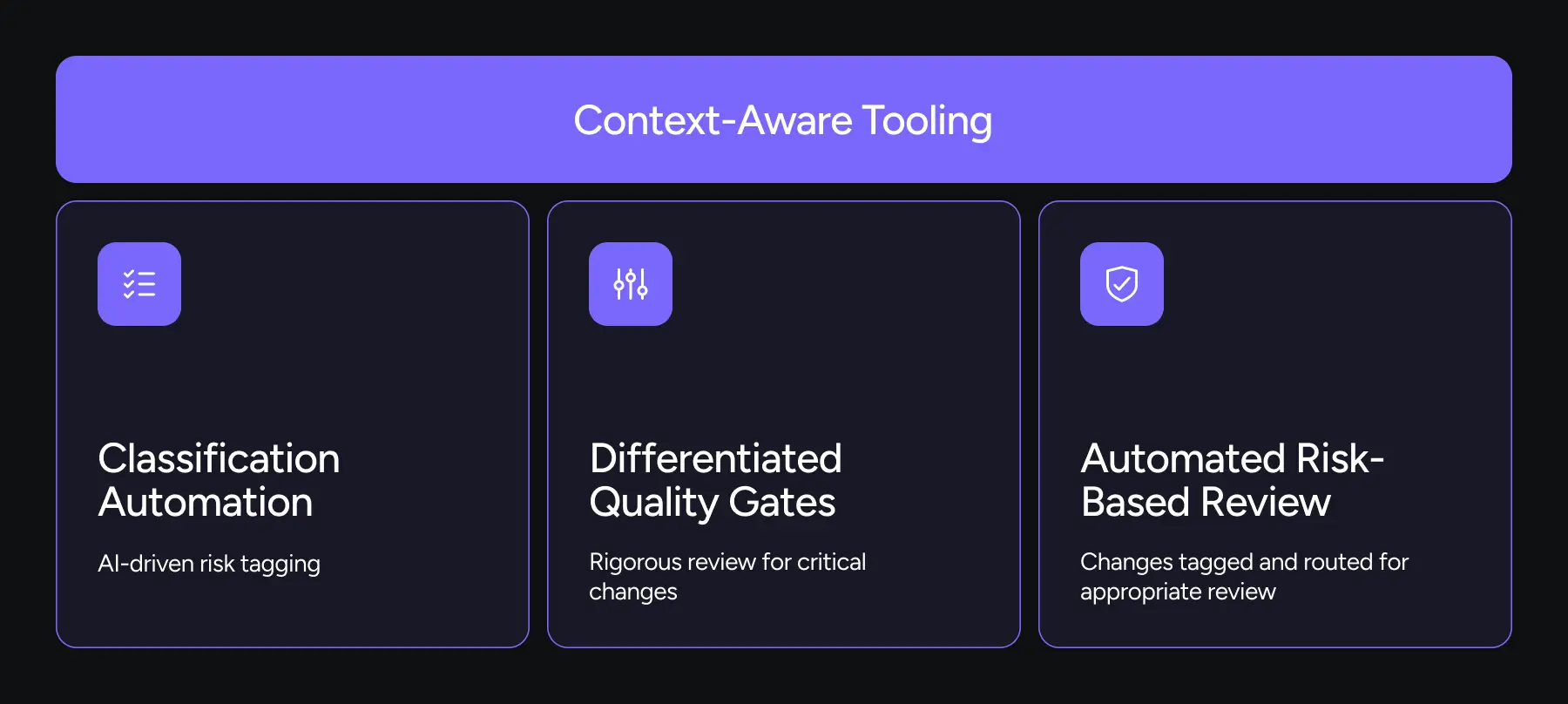

Implement three components:

- Classification Automation: AI that automatically tags PRs with business exposure level based on affected systems

- Differentiated Quality Gates: Mission Critical changes require domain experts, advanced testing, and business context validation

- Context-Aware Tooling: AI systems trained on your codebase, business rules, and risk model
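
Classification automation does not have to start with AI. The sketch below swaps in a much simpler technique, plain path-pattern rules, to show the shape of the idea: tag a PR by the systems its files touch. The patterns, paths, and `exposure_tag` function are all hypothetical, and the two-level output is a simplification of the four-category matrix.

```python
from fnmatch import fnmatch

# Hypothetical patterns for customer-facing or revenue-critical areas.
# A real list would come from your own system and ownership map.
HIGH_EXPOSURE_PATTERNS = [
    "src/homepage/*",
    "src/checkout/*",
    "config/feature_flags/*",
]


def exposure_tag(changed_files: list[str]) -> str:
    """Tag a PR by the most exposed file it touches (two levels only)."""
    for path in changed_files:
        if any(fnmatch(path, pattern) for pattern in HIGH_EXPOSURE_PATTERNS):
            # One high-exposure file is enough, however small the diff.
            return "Mission Critical"
    return "Minimal Exposure"


print(exposure_tag(["src/homepage/hero.css"]))
print(exposure_tag(["tools/scripts/cleanup.py"]))
```

Note that the tag depends only on where the change lands, never on its size, so a one-line CSS edit under a high-exposure path is routed to the stricter gates.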

How many $8 million changes are currently passing through your review queue as simple technical check-offs?

If you don’t have risk-based classification, the answer is: at least one.

Your engineering process should reflect your actual risk model. Start by identifying which changes have the highest blast radius and whether they’re getting the rigor they deserve.

Those simple changes touching customer-facing systems? They’re your biggest risks waiting to happen.