Thinking vs Thinking: Benchmarking Claude Haiku 4.5 and Sonnet 4.5 on 400 Real PRs

Not long ago, deep reasoning was something only the biggest models could pull off.

We’re hitting a milestone where even smaller models can think deeply enough to outperform their heavyweight siblings on complex code review tasks.

At Qodo, we wanted to know:

How do Claude Haiku 4.5 and Claude Sonnet 4.5 compare when they think, and how does that stack up to the earlier generation?

We ran two large-scale benchmarks, both on 400 real GitHub pull requests, using the Qodo PR Benchmark:

- One comparing Claude Haiku 4.5 vs Claude Sonnet 4 (standard mode)

- Another comparing Claude Haiku 4.5 Thinking vs Claude Sonnet 4.5 Thinking (4096 token budget)

Together, they reveal a clear trend: Haiku keeps winning: smaller, faster, and consistently more focused.

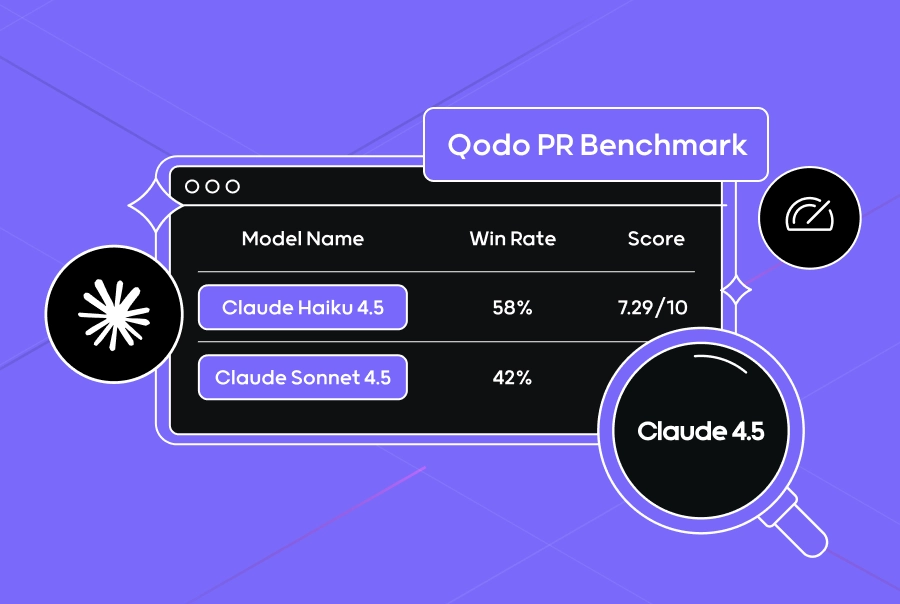

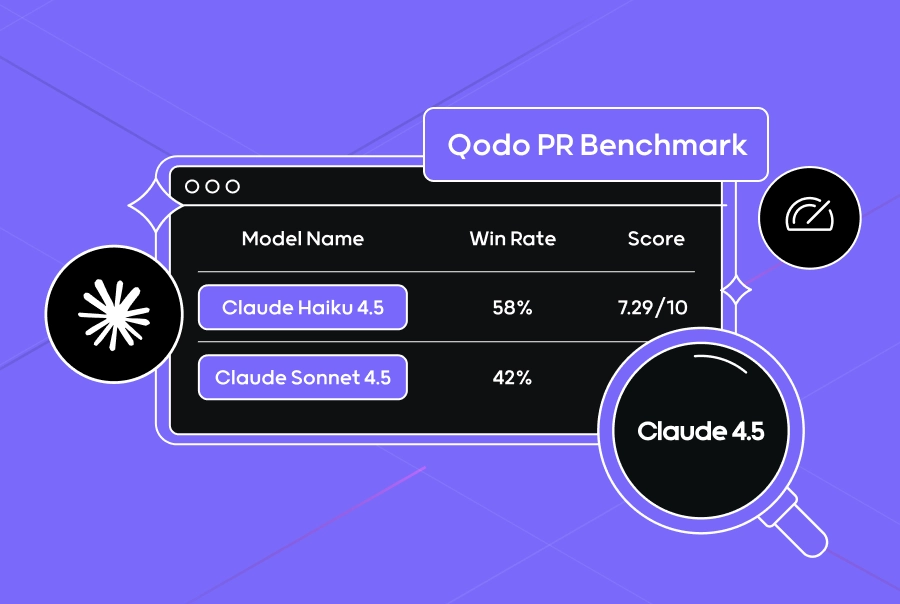

The Dual Benchmark at a Glance

| Benchmark | Models Compared | Win Rate | Avg. Code Suggestion Score | Judge |

| Standard Mode | Haiku 4.5 vs Sonnet 4 | 55.19 % (Haiku) / 44.81 % (Sonnet) | 6.55 (Haiku) / 6.20 (Sonnet) | GPT-5 (2025-08-07) |

| Thinking Mode (4096) | Haiku 4.5 Thinking vs Sonnet 4.5 Thinking | 58 % (Haiku) / 42 % (Sonnet) | 7.29 (Haiku) / 6.60 (Sonnet) | Gemini-2.5-Pro |

What the Data Shows

Upgrading from Sonnet 4 → Haiku 4.5 (Standard Mode)

For engineering teams already relying on Sonnet 4, switching to Haiku 4.5 delivers superior performance in ⅓ the price:

- Higher code suggestion quality score: 6.55 vs 6.20

- More wins: 55 % vs 45 % in head-to-head comparisons

- Simple migration: Haiku 4.5 fits existing review pipelines without workflow changes

This makes Haiku 4.5 a practical upgrade, not an academic one, a faster path to better code review results.

Haiku 4.5 Thinking vs Sonnet 4.5 Thinking

When both models switch to thinking mode, Anthropic’s extended reasoning framework, Haiku 4.5 continues to outperform.

It wins 58 % of comparisons, and its suggestions receive higher average quality scores (7.29 vs 6.60).

Sonnet 4.5 Thinking still shows strong multi-file and architectural reasoning, but for day-to-day review tasks, Haiku 4.5 Thinking is consistently more reliable and precise.

How We Benchmarked

This study used the Qodo PR Benchmark, a real-world evaluation framework that tests models on actual pull requests instead of synthetic exercises.

Each model received identical context: PR diff, description, and repository hints, and was evaluated blindly using structured rubrics.

Benchmark Scope Disclaimer

These benchmarks measure single-pass code-review reasoning quality only. They do not yet evaluate tool calling, code execution, repository-level navigation, or multi-step agentic behavior.

Such capabilities are essential for production AI systems, and additional evaluations of these agentic workflows are in progress.

The Bottom Line

For practitioners, these benchmarks offer a practical case to upgrade from Sonnet 4 to Haiku 4.5 and to enable thinking mode for deeper reasoning tasks.

For researchers, they highlight an emerging trend: smaller, more efficient models can now deliver superior reasoning quality.