What is an MCP Server? 15 Best MCP Servers That Will Help You Code Smarter

TL;DR:

- MCP (Model Context Protocol) lets AI models like GPT-4 actually do things, not just generate text. With MCP, an AI can create GitHub issues, run test scripts, query APIs, or even deploy code by calling real tools through a structured JSON-RPC interface. An MCP server acts as the backend; it connects the model to tools, validates inputs, runs the command (locally or remotely), and returns structured results. This turns AI into something usable in production workflows, not just for suggestions, but for actual execution.

- You can wire up local scripts (via shell) or external services like Jira, GitHub, or Supabase. In platforms like Qodo Gen, these integrations are handled natively, allowing agents to discover tools, validate schemas, and execute actions, all from within your IDE. With proper permission controls, execution stays safe and auditable.

- There are many purpose-built MCP servers, each focused on a task like issue tracking, test automation, code navigation, or security. Once connected, they let your agent run workflows end-to-end without human intervention.

- Agentic systems are changing how we approach automation. Instead of wiring up static workflows or relying on brittle scripts, we’re starting to build environments where AI agents, like those in Qodo, can deploy code, run tests, manage infrastructure, and interact with tools through prompt-driven automation.

As an AI engineer working on production systems, I’ve seen how LLMs like GPT-4 are mostly used to generate artifacts: Terraform modules, shell scripts, CI jobs, config files. You feed in some context, maybe a snippet of your codebase, a repo name, or a YAML template, and the model returns something syntactically correct. But beyond that, it’s disconnected. It can’t see the actual project state, run tests, create issues, or trigger deployments. Everything it does needs to be manually reviewed and executed, which breaks the flow when you’re trying to build fully automated systems. Without proper context, like access to the file system, Git history, or running containers, the LLM remains a generative assistant, not an active agent. That’s exactly what the Model Context Protocol (MCP) solves, by giving generative models structured access to tools and real-time context.

MCP gives models a structured way to interface with tools, using a simple JSON-RPC protocol. Instead of generating a curl command or shell script, the model calls a real operation behind the scenes: deploy_service, run_tests, open_pull_request, scale_cluster. That call goes through a secured, monitored channel with scoped credentials and logging integrated.

But putting this into production takes serious engineering effort. You need concurrency limits so that a model doesn’t accidentally spin up 50 pods and crush your cluster. You need thread pooling, memory caps, sandboxing, and audit trails for every agent action. And you have to protect every exposed function with strict access control, no exceptions.
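To make the concurrency point concrete, here is a minimal sketch of a call limiter. It assumes a thread-based server; `guarded_call`, `scale_cluster`, and the cap of 2 are hypothetical names and values chosen for illustration, not part of MCP or any particular server.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_CONCURRENT_CALLS = 2  # assumed cap; tune it to what your cluster can absorb

_slots = threading.BoundedSemaphore(MAX_CONCURRENT_CALLS)
_counter_lock = threading.Lock()
_active = 0
peak_active = 0  # highest number of handlers observed running at once


def guarded_call(handler, *args):
    """Run `handler` only once a slot is free, so bursts queue instead of fanning out."""
    global _active, peak_active
    with _slots:
        with _counter_lock:
            _active += 1
            peak_active = max(peak_active, _active)
        try:
            return handler(*args)
        finally:
            with _counter_lock:
                _active -= 1


def scale_cluster(replicas):
    """Hypothetical tool handler; the sleep stands in for a slow infrastructure call."""
    time.sleep(0.01)
    return f"scaled to {replicas}"


# Eight requests arrive at once, but at most MAX_CONCURRENT_CALLS handlers run together.
with ThreadPoolExecutor(max_workers=8) as pool:
    results = list(pool.map(lambda n: guarded_call(scale_cluster, n), range(1, 9)))
```

A semaphore only bounds how many calls run in parallel. Memory caps, sandboxing, and access control need their own mechanisms.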

This direction is gaining traction across the dev community. In a thread on r/ExperiencedDevs, one senior engineer described how they wired an agent to enforce data contract consistency across services. It didn’t just generate definitions; it validated them, triggered enforcement checks, and caught schema mismatches before staging. That’s the kind of shift we’re talking about: LLMs as operational actors, not just code assistants.

In this post, I’ll walk you through what MCP is, how it fits into production infrastructure, and how we’ve implemented it inside Qodo Gen 1.0 to make LLMs not just helpful, but actually useful in real-world engineering teams.

What Is an MCP Server?

An MCP server is a backend service that lets an AI agent (like GPT-4) call real tools in your environment through a clean JSON-RPC interface: creating a GitHub issue, running tests, querying a database, or triggering a deploy.

An MCP server exposes a set of tools (or actions) to the model. Each tool is defined with:

- a name (like create_issue or run_tests)

- a schema (inputs and outputs in JSON)

- and a function handler (the actual code or API it connects to)

The model doesn’t guess what tools are available. It explicitly asks with a tools/list request and gets back a machine-readable list of all the actions it can call.

When the model wants to use a tool, it sends a tools/call request with JSON input parameters. The MCP server then:

- Validates the input against the tool’s schema

- Runs the underlying code (this could be a shell command, Python script, or API call)

- Returns a structured JSON result back to the agent
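The three steps above can be sketched with a small in-memory registry. This is an illustration under assumptions, not how any specific MCP server is written: `Tool`, `ToolRegistry`, and the `run_tests` handler are hypothetical, and validation only checks required fields.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str
    input_schema: Dict[str, Any]  # JSON Schema describing the tool's inputs
    handler: Callable[[Dict[str, Any]], str]


def _result(text: str, is_error: bool) -> Dict[str, Any]:
    return {"isError": is_error, "content": [{"type": "text", "text": text}]}


class ToolRegistry:
    """Hypothetical registry; a real server would expose these methods over JSON-RPC."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> List[Dict[str, Any]]:
        # Machine-readable descriptors the model inspects before calling anything.
        return [
            {"name": t.name, "description": t.description, "inputSchema": t.input_schema}
            for t in self._tools.values()
        ]

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
        tool = self._tools.get(name)
        if tool is None:
            return _result(f"Unknown tool: {name}", is_error=True)
        # Step 1: validate (required fields only; a real server checks the full schema).
        missing = [k for k in tool.input_schema.get("required", []) if k not in arguments]
        if missing:
            return _result(f"Missing fields: {missing}", is_error=True)
        # Step 2: run the underlying code. Step 3: wrap its output in a structured result.
        return _result(tool.handler(arguments), is_error=False)


registry = ToolRegistry()
registry.register(Tool(
    name="run_tests",
    description="Runs the test suite under the given path.",
    input_schema={"type": "object", "required": ["path"],
                  "properties": {"path": {"type": "string"}}},
    handler=lambda args: f"pretend-ran tests in {args['path']}",  # stand-in for a real runner
))
```

Calling `registry.call_tool("run_tests", {})` returns an error result instead of running the handler, which is the behavior you want from the validation step.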

For local tools such as test runners, linters, or file system access, the server communicates with the tool using stdio or UNIX sockets. This is useful when the tool runs inside the same container or process environment as the agent.

For remote tools like GitHub, Jenkins, or Slack, the server uses HTTP or Server-Sent Events (SSE) to send and receive data over the network. This flexibility allows the MCP server to integrate both tightly coupled local scripts and distributed cloud services under a consistent interface. Now that we understand what MCP servers are, let’s look at what actually powers these tools. The answer lies in your data stack.

Why Data Access Shapes Agent Intelligence

For AI agents to actually work in production, it’s not enough to just expose tools; they need fast, reliable access to data. Without that, your MCP setup turns into a bottleneck. A recent TechTarget article clarified this: your MCP server is only as useful as the data layer it’s connected to.

Here’s why this matters from an engineering perspective:

1. Agents need structured, real-time data

Let’s say your agent wants to validate a customer’s billing tier before provisioning new infra. That data might live in Postgres, a warehouse like Snowflake, or behind a GraphQL API. If your MCP server can’t reach it fast, or if the data is out of date, the agent either stalls or makes a bad decision.

This is where the underlying data platform becomes critical. You need systems that support:

- Fast read access (low-latency queries)

- Up-to-date records (replicated or real-time synced sources)

- Structured schemas (so tools don’t need to guess at fields)

2. You don’t want to hardcode access in tools

If you’re embedding SQL queries or API calls directly into your MCP tool logic, it becomes hard to change, secure, or reuse. Instead, you want the MCP server to interact with a unified data layer, something like a data API, view layer, or document store, so that tools can just consume structured inputs and move on.

This means using platforms that support:

- Declarative access (e.g., views, GraphQL layers, REST endpoints)

- Fine-grained access control (row-level or schema-level policies)

- Built-in metadata (tracking schema versions, query history, etc.)

3. Governance and observability are non-negotiable

MCP isn’t a one-off integration; it’s a runtime layer. When agents start making decisions like auto-approving changes, creating resources, or syncing systems, you need full traceability. The data platform backing your MCP setup should support:

- Audit logging

- Access policies

- Change history

- Query tracing

This isn’t optional. You don’t want an agent to decide based on stale or unauthorized data.
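As a rough illustration of audit logging at the tool boundary, the sketch below wraps a handler so every call leaves a record whether it succeeds or fails. The `audited` helper, the in-memory `audit_log`, and the `get_billing_tier` tool are hypothetical; a real setup would write to a durable, append-only store.

```python
import json
import time
from typing import Any, Callable, Dict, List

audit_log: List[Dict[str, Any]] = []  # stand-in for a durable, append-only store


def audited(tool_name: str, agent_id: str,
            handler: Callable[[Dict[str, Any]], Any]) -> Callable[[Dict[str, Any]], Any]:
    """Wrap a tool handler so each call is recorded with who called what, and how it ended."""

    def wrapper(arguments: Dict[str, Any]) -> Any:
        entry = {
            "ts": time.time(),
            "agent": agent_id,
            "tool": tool_name,
            "arguments": json.dumps(arguments, sort_keys=True),
        }
        try:
            result = handler(arguments)
            entry["outcome"] = "ok"
            return result
        except Exception as exc:
            entry["outcome"] = f"error: {exc}"
            raise
        finally:
            audit_log.append(entry)  # recorded on success and on failure

    return wrapper


# Hypothetical data lookup the agent performs before provisioning anything.
lookup_tier = audited(
    "get_billing_tier", "agent-42",
    lambda args: {"customer": args["customer_id"], "tier": "pro"},
)
tier = lookup_tier({"customer_id": "c_123"})
```

Logging the arguments as canonical JSON keeps entries comparable across calls, though in practice you would redact secrets before writing them.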

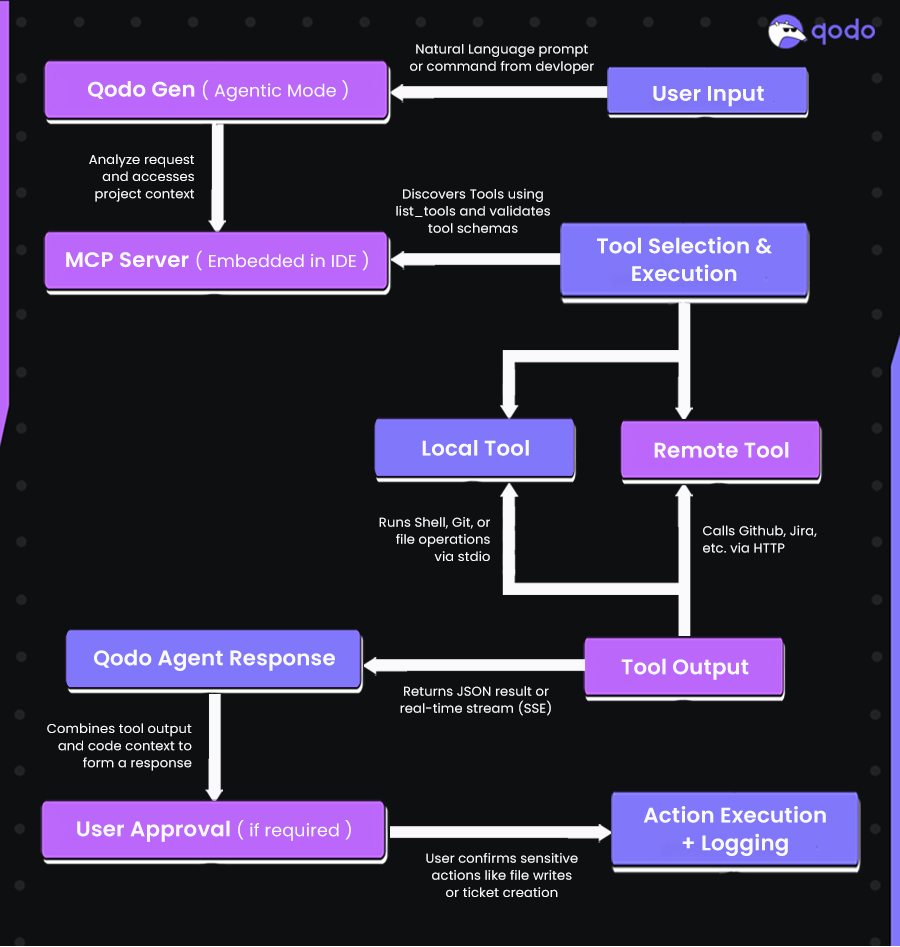


15 Best MCP Servers That Will Help You Code Smarter

As more teams integrate LLMs into production environments, choosing the right MCP servers becomes important. Below are 15 of the best MCP servers from the MCP repo, grouped by functionality. Each server follows the MCP spec, offering tools/list, tools/call, JSON Schema-based validation, and either HTTP or stdio transport.

Developer Workflow

GitHub MCP

The GitHub MCP server gives your agent full control over GitHub interactions, from creating issues to editing files and managing pull requests. It’s designed for fine-grained GitHub automation via OAuth-scoped APIs.

Features:

The server integrates with GitHub’s API and exposes the following:

- Tools: createIssue, commentOnPR, getRepoInfo, createBranch, updateFile

- GitHub OAuth integration with scoped access

- Full JSON Schema for input/output contracts

- HTTP-based transport

Jira MCP

Simplifies Jira ticket management. Agents can query assigned issues, create tickets, transition states, and add comments, all using structured schemas.

Features:

- Tools: createTicket, addComment, listAssignedIssues, transitionIssue

- Supports custom fields and Jira workflows

- Uses personal API tokens or OAuth

- Error-safe input validation

GPT Pilot MCP

An orchestration layer for full-stack AI workflows. This MCP manages everything from code generation to CI/CD to deployment steps, ideal for autonomous dev agents.

Features:

- Tools: generateCode, runTests, triggerDeploy

- Handles chained tasks and multi-step execution

- Real-time log streaming for long-running jobs

- Supports branching logic and retries

Memory & Planning

Memory Bank MCP

Persistent memory for agents. This server allows storing and retrieving structured context between tool calls and across sessions.

Features:

- Tools: storeEntry, queryEntries, deleteEntry

- TTL support for auto-expiry

- Supports both JSON blobs and structured fields

- Pluggable storage backends (in-memory, DB)

Knowledge Graph MCP

Maintains an internal graph of nodes and edges, useful for reasoning, entity linking, and dependency management.

Features:

- Tools: addNode, addEdge, queryGraph

- Schema-bound node/edge types

- Graph traversal and querying support

Sequential Planner MCP

Ideal for breaking complex tasks into sequenced steps. This MCP allows agents to generate, store, and manage task chains.

Features:

- Tools: createPlan, getNextTask, markTaskDone

- Tracks execution state across sessions

- Supports hierarchical planning

Automation & Testing

Playwright MCP

Browser automation using Playwright, driven entirely via MCP. Useful for UI testing, regression suites, or validating frontend flows.

Features:

- Tools: openPage, click, type, assertVisible, screenshot

- Accessibility-aware selectors

- Streams logs and test results

Puppeteer MCP

Offers similar browser control but uses Puppeteer. Great for scraping, UI checks, and fast DOM interactions.

Features:

- Tools: navigate, clickElement, extractText, screenshot

- Works with Chromium

- Base64 output for images/media

Selenium MCP

Classic browser automation with support for real browser environments like Chrome or Firefox.

Features:

- Tools: navigate, findElement, click, sendKeys, getPageSource

- Uses Selenium WebDriver protocol

- Works in both headless and full UI mode

Execution & Workflow Control

Desktop Commander MCP

This is your local system command and file manager. Useful for file operations, shell scripting, and general automation.

Features:

- Tools: execCommand, readFile, writeFile, listDirectory

- Transport over stdio for fast local execution

- Path scoping and sandbox enforcement

OpenAgents MCP

Supports coordination among multiple agents. One agent can delegate tasks to others and poll their status.

Features:

- Tools: registerAgent, sendTask, getStatus, listAgents

- Enables multi-agent orchestration

- Includes internal task routing

Continue MCP

Checkpointing server for agents. Save intermediate states and resume tasks when needed.

Features:

- Tools: saveCheckpoint, resumeCheckpoint, listCheckpoints

- Stores structured state per session/task

- Useful in long-lived workflows or crash recovery

Data & Security

Fetch Service MCP

Makes it easy to ingest content from the web. Agents can fetch pages, parse them, and convert them into useful formats.

Features:

- Tools: fetchHTML, fetchMarkdown, fetchJSON

- Handles sanitization, CORS, and rate limiting

- Streaming and caching support

Supabase MCP

Provides structured access to Supabase databases using parameterized queries.

Features:

- Tools: select, insert, update, delete

- Table-safe, typed interactions

- Schema introspection for dynamic agents

Snyk MCP

Enables security checks during development. This MCP runs scans and returns structured results with fix suggestions.

Features:

- Tools: runScan, getIssues, generateFixPR

- Integrates with Snyk’s API

- Produces vulnerability trees and CVE data

These MCP servers earn their place because they handle real engineering tasks like running Playwright tests, creating Jira issues, updating GitHub PRs, or fetching deployment data from Supabase. Each server in the list above is built to work reliably with LLMs, supports structured input/output via JSON, and can be integrated directly into agent workflows.

How Qodo Uses MCP to Power Agentic Workflows

Qodo Gen 1.0 was designed from the ground up to support agentic workflows, where AI doesn’t just answer questions but performs tasks by interacting with your environment. At the core of this system is the Model Context Protocol (MCP), which Qodo uses to securely connect language models to tools, services, and project context.

Here’s how Qodo uses the embedded MCP server in your IDE:

Agentic Mode

In Agentic Mode, Qodo goes beyond a traditional “prompt-response” model. Instead of executing whatever instruction you give it, the agent:

- Analyzes your request

- Inspects the tools available (via tools/list)

- Chooses the most appropriate tools

- Executes them, possibly in sequence

- Returns a structured result

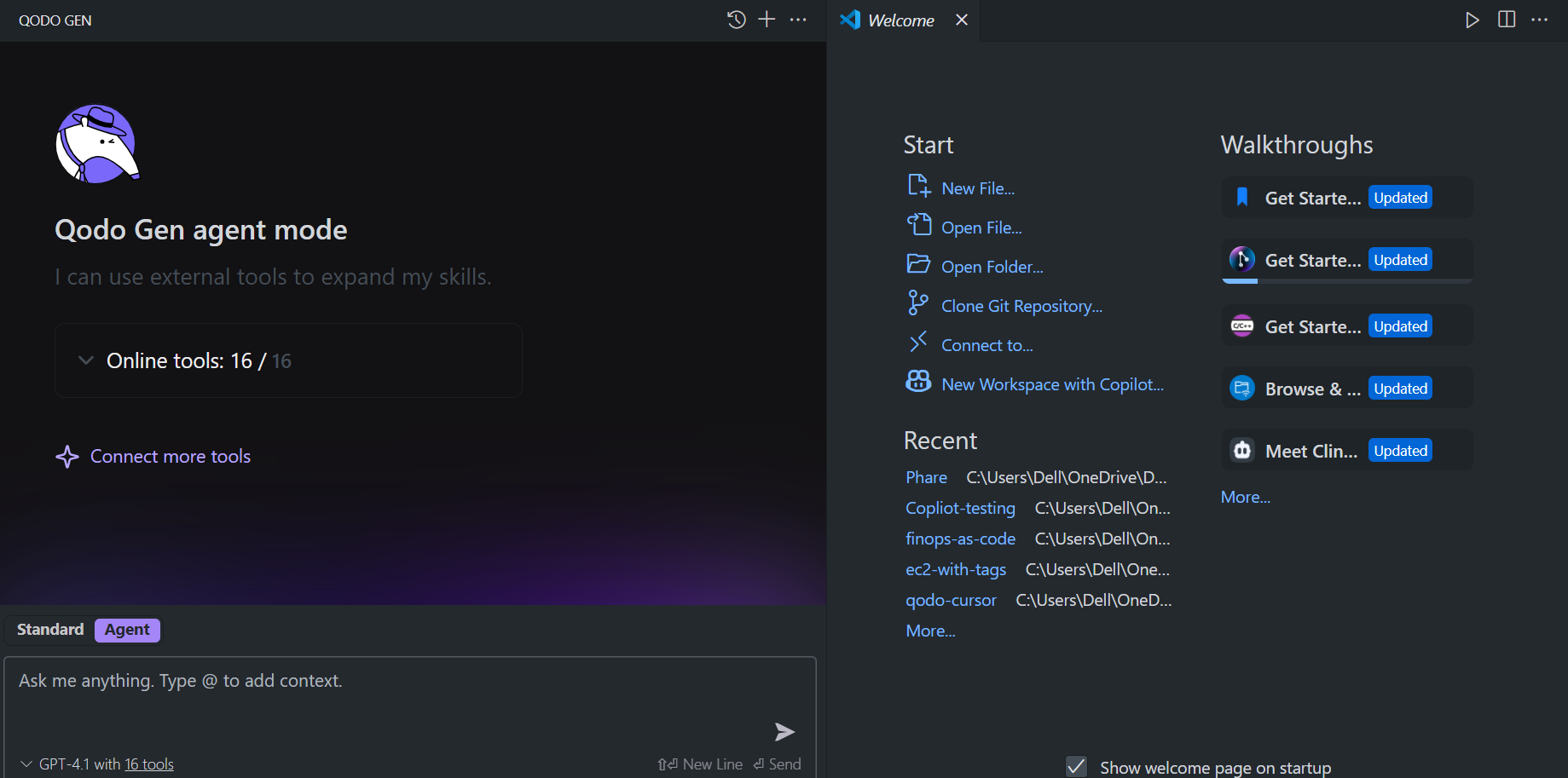

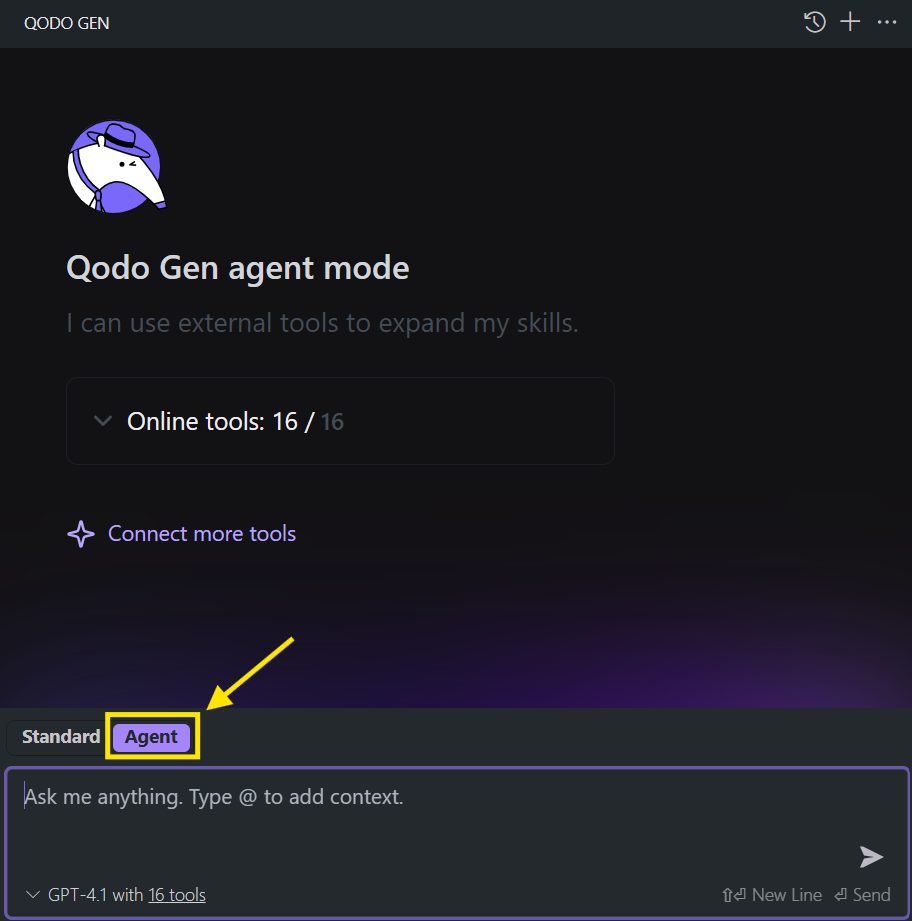

Here’s a snapshot of how Qodo embeds the most popular MCP servers directly into your IDE (VS Code in the current snapshot).

All of this happens using MCP under the hood. This allows Qodo to combine a deep understanding of your codebase with the ability to act, like running git commands, searching project files, querying Jira, or executing shell commands.

Local and Remote Tooling

Qodo supports both local and remote MCP tool execution:

- Local tools: These run in your IDE or local dev environment and include shell commands, test runners, filesystem operations, and terminal utilities.

- Remote tools: These connect to APIs and external systems like GitHub, Snyk, Supabase, or Jira.

This dual execution model makes it easy to go from idea to action, whether you’re working on a personal project or inside a corporate monorepo.

Built-in Agentic Tools (via MCP)

Qodo includes a set of pre-configured tools exposed through MCP:

- Git Tools: git_changes, git_file_history, git_remote_url

- Code Navigation: find_code_usages, get_code_dependencies

- Filesystem: read_files, write_to_file, search_files, directory_traversal

- Terminal: terminal_execute_command, terminal_get_latest_output

- Web: fetch_html, fetch_markdown, web_search_tool

- Integrations: Tools for Jira, commit message generation, and branch analysis

You can also define custom Agentic Tools using JSON schemas and MCP-compliant interfaces. Qodo will automatically discover them and include them in the agent’s toolset.

Streaming & Permissions

- Streaming Results: For long-running tools like deployments or test jobs, Qodo uses SSE or local streaming to provide real-time updates to the model and the user interface.

- Fine-grained Permissions: Every tool is scoped and permissioned, so you control exactly what the agent can do, whether it’s allowed to write to files, push code, or terminate processes.
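One way to picture per-tool permissions is a deny-by-default gate that runs before any handler. This is a sketch under assumptions, not Qodo’s implementation: `ToolPolicy`, `authorize`, and the `ask_user` callback are hypothetical, while the tool names come from the built-in list above.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class ToolPolicy:
    enabled: bool = True        # tool is switched on for this agent
    auto_approve: bool = False  # tool may run without asking the user first


def authorize(tool_name: str, policies: Dict[str, ToolPolicy],
              ask_user: Callable[[str], bool]) -> bool:
    """Decide whether a tool call may proceed."""
    policy = policies.get(tool_name)
    if policy is None or not policy.enabled:
        return False  # deny by default: unknown or disabled tools never run
    if policy.auto_approve:
        return True
    return ask_user(tool_name)  # everything else needs explicit approval


policies = {
    "read_files": ToolPolicy(auto_approve=True),  # read-only, low risk
    "terminal_execute_command": ToolPolicy(),     # allowed, but only after approval
    "write_to_file": ToolPolicy(enabled=False),   # switched off entirely
}

# The lambda stands in for a UI prompt; here the user declines every request.
can_read = authorize("read_files", policies, ask_user=lambda name: False)
can_exec = authorize("terminal_execute_command", policies, ask_user=lambda name: False)
```

Keeping the decision in one function makes it easy to log every allow or deny alongside the tool call itself.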

How MCP Tools Are Defined

In an MCP server, a “tool” isn’t just a function; it’s a callable unit with strict input validation, structured output, and runtime discoverability. If you want your LLM agent to use tools correctly and predictably, this structure is non-negotiable.

In Qodo Gen, these tools are called Agentic Tools and are built using the Model Context Protocol (MCP), an open standard that defines how tools are exposed to LLMs. These tools let agents interact with everything from shell commands to GitHub and Jira issues, right from a structured chat interface or agent workflow.

Depending on how you configure the tool, it can run locally in your environment using commands like npx or remotely as a hosted HTTP or SSE service. This flexibility is what makes MCP tools both powerful and composable.

Here’s what defines an MCP tool:

1. Name and Description

Each tool is registered with:

- Name: A unique string (like createGitHubIssue) that the model uses to call the tool.

- Description: A plain-text explanation of what it does. This isn’t for users — this is what the LLM sees when doing tool selection. Be precise. Don’t write “Creates something on GitHub.” Instead, say:

“Creates a new GitHub issue using title, body, and optional labels.”

These values are exposed via the tools/list call so the model knows what’s available at runtime.

2. Input Schema

Every tool must declare an input schema using JSON Schema Draft 7. This schema is validated when a tool is invoked via tools/call. It defines:

- Required vs optional fields

- Exact types (string, array, number, etc.)

- Value constraints (e.g., enum, minLength)

- Defaults where applicable

Example schema for a GitHub issue tool:

| { "type": "object", "required": ["title", "body"], "properties": { "title": { "type": "string" }, "body": { "type": "string" }, "labels": { "type": "array", "items": { "type": "string" }, "default": [] } } } |

If the input doesn’t pass validation, the handler won’t run. No exceptions.
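To show what that validation gate does, here is a deliberately small validator covering only a subset of JSON Schema (required, type, enum, array item types). It is a sketch, and a production server would use a full JSON Schema library instead. Filling in `default` values is a convenience added here; JSON Schema itself treats `default` as an annotation, not a validation rule.

```python
import copy
from typing import Any, Dict, List, Tuple

_JSON_TYPES = {"string": str, "array": list, "object": dict,
               "boolean": bool, "number": (int, float), "integer": int}


def _type_ok(value: Any, type_name: str) -> bool:
    if type_name in ("number", "integer") and isinstance(value, bool):
        return False  # bool subclasses int in Python but is not a JSON number
    expected = _JSON_TYPES.get(type_name)
    return expected is None or isinstance(value, expected)


def validate(schema: Dict[str, Any],
             payload: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Return (payload with defaults filled in, list of validation errors)."""
    errors: List[str] = []
    result = dict(payload)
    for field in schema.get("required", []):
        if field not in result:
            errors.append(f"missing required field: {field}")
    for field, rules in schema.get("properties", {}).items():
        if field not in result:
            if "default" in rules:
                result[field] = copy.deepcopy(rules["default"])
            continue
        value = result[field]
        if "type" in rules and not _type_ok(value, rules["type"]):
            errors.append(f"{field}: expected {rules['type']}")
            continue
        if "enum" in rules and value not in rules["enum"]:
            errors.append(f"{field}: must be one of {rules['enum']}")
        if rules.get("type") == "array" and "type" in rules.get("items", {}):
            for i, item in enumerate(value):
                if not _type_ok(item, rules["items"]["type"]):
                    errors.append(f"{field}[{i}]: expected {rules['items']['type']}")
    return result, errors


# The GitHub issue schema from the example above.
ISSUE_SCHEMA = {
    "type": "object",
    "required": ["title", "body"],
    "properties": {
        "title": {"type": "string"},
        "body": {"type": "string"},
        "labels": {"type": "array", "items": {"type": "string"}, "default": []},
    },
}

clean, problems = validate(ISSUE_SCHEMA, {"title": "Fix CI job failure", "body": "Job fails"})
```

The handler should only run when the error list comes back empty.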

3. Tool Invocation

LLMs don’t access tools directly. They invoke them using a structured JSON-RPC method:

| { "jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": { "name": "createGitHubIssue", "arguments": { "title": "Fix CI job failure", "body": "Job fails when running tests", "labels": ["ci", "bug"] } } } |

The server validates the input payload against the registered schema. If it passes, the corresponding handler function executes. If not, a structured error is returned — no silent failures.
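Here is a rough sketch of the server side of that exchange. It reads the payload from `params.arguments`, the field name the MCP specification uses, and it separates protocol-level failures (JSON-RPC error objects with the standard codes) from tool-level failures (a normal result flagged with `isError`). The `create_issue` handler is hypothetical and only `tools/call` is implemented.

```python
import json
from typing import Any, Callable, Dict


def create_issue(arguments: Dict[str, Any]) -> str:
    """Hypothetical handler; a real one would call the GitHub API."""
    if not arguments.get("title"):
        raise ValueError("title is required")
    return f"Issue created: {arguments['title']}"


HANDLERS: Dict[str, Callable[[Dict[str, Any]], str]] = {"createGitHubIssue": create_issue}


def _error(req_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle_request(raw: str) -> Dict[str, Any]:
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, -32700, "Parse error")
    if not isinstance(request, dict):
        return _error(None, -32600, "Invalid Request")
    req_id = request.get("id")
    if request.get("method") != "tools/call":  # this sketch implements a single method
        return _error(req_id, -32601, "Method not found")
    params = request.get("params") or {}
    if not isinstance(params, dict):
        return _error(req_id, -32602, "Invalid params")
    handler = HANDLERS.get(params.get("name"))
    if handler is None:
        return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
    try:
        text = handler(params.get("arguments") or {})
        result = {"isError": False, "content": [{"type": "text", "text": text}]}
    except Exception as exc:
        # The tool ran and failed: report it inside the result, not as a protocol error.
        result = {"isError": True, "content": [{"type": "text", "text": str(exc)}]}
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


reply = handle_request(json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "createGitHubIssue", "arguments": {"title": "Fix CI job failure"}},
}))
```

Echoing the request `id` in every reply is what lets a client match responses to calls when several are in flight.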

4. Transport and Execution

Tool execution depends on how the MCP server is deployed:

Local MCPs: Run tools directly within the agent’s runtime. These are configured via command-line utilities (e.g., npx, docker) and are ideal for shell utilities, file system access, or internal scripts.

| { "github": { "command": "npx", "args": ["-y", "@modelcontextprotocol/server-github"], "env": { "GITHUB_PERSONAL_ACCESS_TOKEN": "<YOUR_TOKEN>" } } } |

Remote MCPs (SSE-based): Hosted externally, these tools expose a URL endpoint and may include headers for auth. They are preferred in Enterprise environments for integrating with services like Jira, Semgrep, or internal APIs.

| { "semgrep": { "url": "https://mcp.semgrep.ai/sse" } } |

Qodo Gen respects these transport declarations when invoking tools. For security and observability, SSE MCPs are recommended in production setups.
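The two config shapes can be told apart mechanically: an entry with `command` is a local process, and an entry with `url` is a remote endpoint. The sketch below assumes exactly that rule and nothing more; `classify_servers` is a hypothetical helper, not part of Qodo Gen.

```python
from typing import Any, Dict


def classify_servers(config: Dict[str, Dict[str, Any]]) -> Dict[str, str]:
    """Map each configured server name to the transport its entry implies."""
    kinds: Dict[str, str] = {}
    for name, entry in config.items():
        has_command, has_url = "command" in entry, "url" in entry
        if has_command == has_url:
            # Neither key or both keys: the entry is ambiguous, so fail loudly.
            raise ValueError(f"{name}: expected exactly one of 'command' or 'url'")
        kinds[name] = "local (stdio)" if has_command else "remote (SSE)"
    return kinds


# The two example entries from this section, merged into one config.
config = {
    "github": {
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-github"],
        "env": {"GITHUB_PERSONAL_ACCESS_TOKEN": "<YOUR_TOKEN>"},
    },
    "semgrep": {"url": "https://mcp.semgrep.ai/sse"},
}

kinds = classify_servers(config)
```

A check like this is cheap to run in CI, where a malformed entry is easier to catch than at agent startup.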

5. Output Format

All tool responses are wrapped in a CallToolResult object, the result type the MCP schema defines for tools/call. This ensures consistency in how the agent handles responses.

Success:

| { "isError": false, "content": [{ "type": "text", "text": "Issue created: https://github.com/…" }] } |

Failure:

| { "isError": true, "content": [{ "type": "text", "text": "GitHub API returned 401 Unauthorized" }] } |

This structure enables agents to intelligently handle retry logic, user-facing messages, or fallback paths without needing to guess or parse raw strings.
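On the agent side, that flag is enough to drive simple retry logic without inspecting message text. The following is a minimal sketch; `call_with_retry`, `result_text`, and the canned responses are hypothetical, and real retry policies would add backoff and distinguish retryable failures from permanent ones.

```python
from typing import Any, Callable, Dict, Tuple

ToolResult = Dict[str, Any]


def result_text(result: ToolResult) -> str:
    """Join the text parts of a result, for example to show them to the user."""
    return "\n".join(p["text"] for p in result.get("content", []) if p.get("type") == "text")


def call_with_retry(call: Callable[[], ToolResult],
                    max_attempts: int = 3) -> Tuple[ToolResult, int]:
    """Retry while the result is flagged as an error; return (last result, attempts used)."""
    attempts = 0
    result: ToolResult = {"isError": True, "content": []}
    while attempts < max_attempts:
        attempts += 1
        result = call()
        if not result.get("isError"):
            break
    return result, attempts


# Canned responses standing in for a flaky tool: one failure, then success.
_responses = iter([
    {"isError": True, "content": [{"type": "text", "text": "upstream timed out"}]},
    {"isError": False, "content": [{"type": "text", "text": "Issue created"}]},
])

final, used = call_with_retry(lambda: next(_responses))
```

If every attempt fails, the caller still gets the last structured error back and can fall through to a user-facing message.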

6. Agent Integration in Qodo

In Qodo Gen, these tools are utilized by agents, which are purpose-built AI copilots defined using TOML files. Each agent specifies:

- A description and behavior (instructions)

- The tools it can use (tools)

- Execution mode (act for immediate or plan for multi-step)

- Optional input/output schemas

- An optional exit_expression for CI/CD success criteria

For example, a GitHub agent might reference the GitHub MCP server, filter down to just createGitHubIssue, and run in plan mode to validate steps before execution.

| tools = ["github"] |
| execution_strategy = "plan" |

You can also define shared MCP servers globally in mcp.json or restrict them at the agent level.

How to Add MCP Servers in Qodo Gen

Integrating an MCP server into Qodo Gen is a simple process, but under the hood, it ties your agentic workflows directly to structured tools that execute real operations. Whether you’re connecting to local shell scripts or remote services like GitHub or Jira, Qodo handles tool discovery, schema validation, and runtime permissions for you. Since the source code of most MCP servers is publicly available, you can audit how each server works, extend it for your own workflows, or debug integration issues.

Below is a detailed walkthrough for developers and DevOps engineers setting up MCPs within Qodo Gen.

From the UI: Connecting Tools via Agentic Mode

1. Open Qodo Gen in Agentic Mode: Switch Qodo Gen to Agentic Mode to enable dynamic tool usage by the agent.

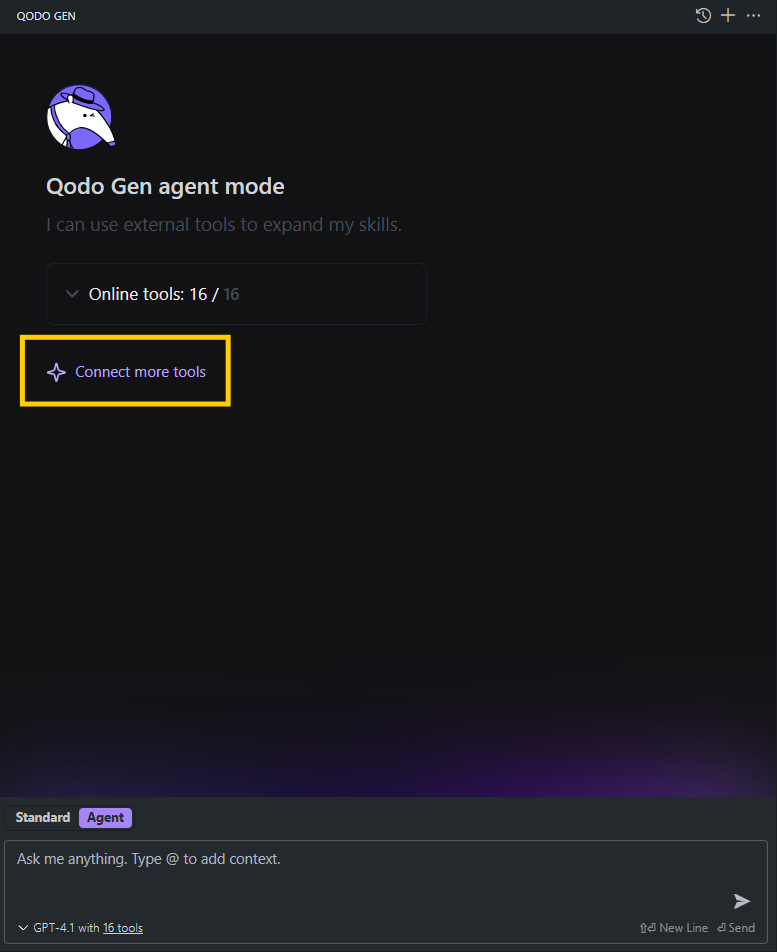

2. Go to Tools Management: Click “Connect more tools” or the small tools icon under the chat window. This opens the tool configuration interface as shown in the snapshot below.

3. Add New MCP: Click “Add new MCP” → paste in a JSON configuration (example below) for your local or remote MCP.

4. Validate Connection

- A green dot means the MCP is reachable and all sub-tools have been discovered.

- A yellow dot indicates config errors. Check your tokens, endpoints, or headers.

5. Manage Sub-tools

Each MCP server can expose multiple sub-tools (like createIssue, fetchHTML, etc.).

- You can toggle each tool on/off.

- Optionally enable Auto Approve to let tools run without any approval.

From the CLI: Registering an MCP Server

For developers who prefer working in the terminal or need to automate agent workflows, the Qodo Gen CLI (currently in alpha) gives you full control over how MCP servers are configured and run.

You can register tools locally, serve agents over HTTP, or plug them into CI pipelines, without needing the UI.

Start an Agent as an MCP Server

To run an agent as an MCP service locally (listening on port 3000):

| qodo <agent-name> --mcp |

Run as Webhook Server

To expose the agent as an HTTP API (for CI tools, bots, or webhooks):

| qodo <agent-name> --webhook |

List Available MCP Tools

To view all registered local or remote tools:

| qodo list-mcp |

Use Custom Configs

You can load a specific agent or MCP config file using:

| qodo --agent-file=./my-agent.toml --mcp-file=./my-tools.json |

Enable CI Mode

If you’re scripting or running Qodo in pipelines, add:

| --ci --log=output.txt --resume=<session_id> |

This allows you to run agents in a non-interactive mode, log output, and resume tasks.

Configuration Format (Local Example)

For local tools (running via stdio), Qodo supports command-based registration. Here’s an example config for the GitHub MCP server:

| { "github": { "command": "npx", "args": ["-y", "@modelcontextprotocol/server-github"], "env": { "GITHUB_PERSONAL_ACCESS_TOKEN": "Paste your github personal access token" } } } |

Qodo will start the tool process inside your local environment and establish communication via JSON-RPC over stdio. This setup is suitable for workflows where the developer wants to keep full control of tool execution and logs locally, ideal for shell utilities and Git automation.

After configuring the local GitHub MCP, here’s a practical test to verify that it works. Qodo (in agent mode) was prompted with:

“In the terraform-ec2-pipeline repo, create a new issue titled ‘Add Auto Scaling Group support’ with body: ‘We need to extend this Terraform pipeline to support ASGs in production environments. Suggest implementation details and tag it under ‘enhancement’.”

Model behaviour:

- Queried the repo owner > resolved as “Github-Username”

- Triggered the createIssue tool from the GitHub MCP

- Created the issue with correct structure, metadata, and labels

Result:

The issue was successfully created in the repo using tool-driven automation via MCP.

Remote MCP Setup (SSE-Based)

Remote MCPs, ideal for services like Jira or security scanners, require only a URL plus any auth headers the service expects:

| { "jira": { "url": "https://mcp.jira.my-org.com/sse", "headers": { "Authorization": "Bearer <your_api_key>" } } } |

This configuration is ideal for teams using centralized service accounts or internal APIs.

Conclusion

MCP acts as the missing interface layer between LLMs and your actual development environment. Instead of just suggesting what could be done, it gives the model a way to do it by invoking real tools with typed inputs, explicit permissions, and structured outputs.

In Qodo Gen, this is implemented using both local and remote MCP servers, including publicly available ones, allowing agents to interact with files, terminals, services like GitHub or Jira, and even CI/CD workflows. It’s not magic, just a standardized RPC mechanism that allows AI to operate within the same constraints and safety boundaries as any developer tool.

If you’re building AI-driven developer tools or infrastructure workflows, understanding how to define, expose, and secure MCP tools is now table stakes. The protocol is minimal by design, but how you implement it determines how well your system can scale, debug, and interact across services.

FAQs

How do I test an MCP server?

Start by wiring up the MCP server in your tool (like Qodo or any JSON-RPC client). Run a tools/list call to make sure it exposes the expected tools. Then call a specific tool using tools/call with valid inputs. If you’re using something like the GitHub MCP, try creating a test issue. If the response is OK and you see the result reflected (like an issue on GitHub), your setup’s working. This helps catch misconfigured schemas or permission issues early.

Who created MCP?

MCP was created by Anthropic in late 2024. They introduced it as a standard way for AI models like Claude to interact with real-world tools using a simple JSON-RPC interface. Since then, it’s been picked up by other vendors and is now used broadly across agentic systems.

What is a remote MCP server?

A remote MCP server is one that runs outside your machine, hosted on some server, accessible via HTTP. The model connects to it over JSON-RPC, usually using Server-Sent Events (SSE) for streaming output. Tools like Jira, Supabase, and security scanners are good fits here. You just give Qodo or your agent the server URL and any auth headers, and it takes care of the rest.

What is SSE in MCP?

SSE (Server-Sent Events) is how remote MCP servers stream results back to the agent. It’s a simple HTTP-based stream: the client subscribes, the server pushes data as it becomes available. MCP uses it for long-running tasks like CI/CD jobs or web scraping, where you don’t want to block waiting for a full response.
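To make the wire format less abstract, here is a small parser for an SSE text stream. It follows the basic rules of the format (`event:` and `data:` fields, comment lines starting with a colon, a blank line ending each event) but skips `id:` and `retry:` handling. The `progress` event name and its payload are made up for the example.

```python
from typing import Dict, Iterable, Iterator, List


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per event from an iterable of raw SSE lines."""
    event, data = "message", []  # "message" is the default event type
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the current event; events without data are dropped.
            if data:
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is not part of the value
        if field == "event":
            event = value
        elif field == "data":
            data.append(value)  # multiple data lines are joined with newlines


stream: List[str] = [
    ": keep-alive\n",
    "event: progress\n",
    'data: {"step": "tests", "pct": 50}\n',
    "\n",
    "data: line one\n",
    "data: line two\n",
    "\n",
]

events = list(parse_sse(stream))
```

Because the parser is a generator, a client can act on each event as it arrives instead of waiting for the stream to close.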

Can MCP servers run locally?

Yes, local MCPs are just processes running on your machine that speak JSON-RPC over stdio. They’re great for fast, private tasks like shell commands, reading files, or local Git work. Qodo starts them in your environment, passes tool inputs, and listens for outputs without hitting the network.
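A local server loop can be surprisingly small. The sketch below assumes newline-delimited JSON-RPC messages over a pair of text streams, which is how the stdio transport frames messages, and answers only `tools/list`. The `read_file` tool descriptor is hypothetical, and in-memory streams stand in for a real process’s stdin and stdout.

```python
import io
import json
from typing import TextIO

# Hypothetical tool descriptor; a real server would build this list from its registry.
TOOLS = [{
    "name": "read_file",
    "description": "Reads a UTF-8 text file and returns its contents.",
    "inputSchema": {"type": "object", "required": ["path"],
                    "properties": {"path": {"type": "string"}}},
}]


def serve(stdin: TextIO, stdout: TextIO) -> None:
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        request = json.loads(line)
        if request.get("method") == "tools/list":
            response = {"jsonrpc": "2.0", "id": request.get("id"), "result": {"tools": TOOLS}}
        else:
            response = {"jsonrpc": "2.0", "id": request.get("id"),
                        "error": {"code": -32601, "message": "Method not found"}}
        stdout.write(json.dumps(response) + "\n")  # one message per line, no embedded newlines
        stdout.flush()


# In-memory streams simulate the client side of the pipe.
requests = io.StringIO(
    json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}) + "\n"
    + json.dumps({"jsonrpc": "2.0", "id": 2, "method": "unknown/method"}) + "\n"
)
responses = io.StringIO()
serve(requests, responses)
```

Swapping the `StringIO` objects for `sys.stdin` and `sys.stdout` turns the same loop into a process a client could launch directly.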