Your Cursor Rules Won’t Scale: AI Code Needs an Adaptive Rules System

Steering AI code to align with rules defined in markdown files is a big quality boost. But there are some major gaps I’m seeing with this in practice.

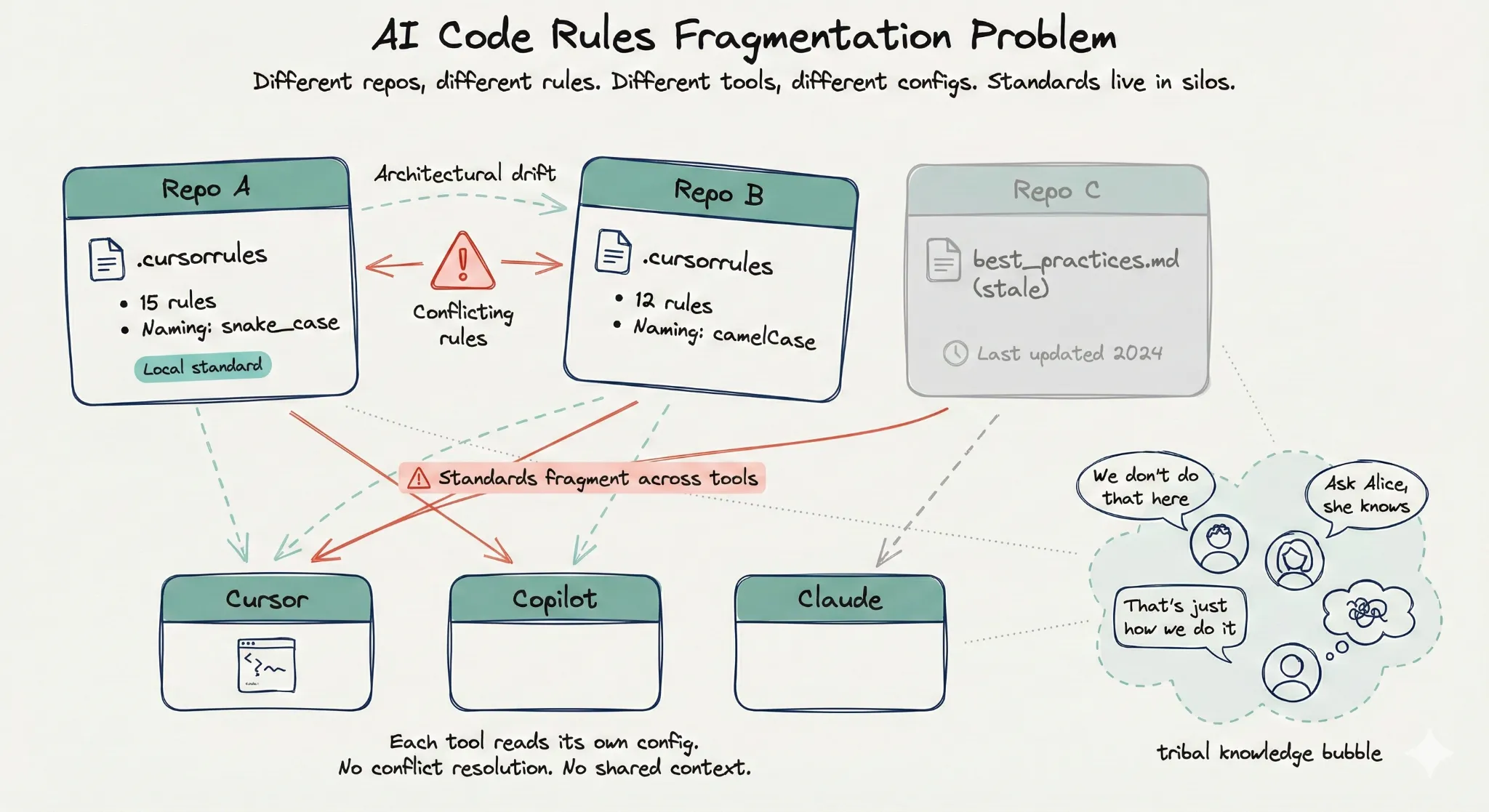

The Problem: Scattered Standards Can’t Scale

Think about it:

A .cursorrules file someone wrote six months ago, a best-practices.md in a random repo, tribal knowledge accumulated in some engineers’ heads (that disappears if they leave)…

When AI helps you ship 1,000 PRs a quarter, this approach becomes a liability, one that is unfortunately measured in incidents, rework, and architectural drift.

We know AI accelerates code generation. But that speed also exposes how fragile code quality management is.

When standards (best practices, rules, team conventions, “PLEASE AVOID” lists) are static markdown files or personal preferences, you have no way to enforce them beyond how you prompt agents in the moment. There’s no clear way to measure if they’re working – or how to change them as your codebase changes.

You could ask your agent to update its own rules. But based on what context? And how often? Are rules conflicting with each other across your coding agent tools?

AI accentuates what already exists. If your standards are scattered, AI will only amplify that. And it’s incredibly taxing trying to build “productive” AI dev workflows under those conditions.

AI coding tools (Cursor, Copilot, Claude Code) read whatever config file you point them at. But they don’t reconcile conflicts. They don’t flag when rules decay. They don’t tell you which standards are helping versus creating noise. They treat every instruction as equally valid, even when two rules contradict each other.

And if standards aren’t maintained, risks accumulate.

Rules need to be living systems working for developers.

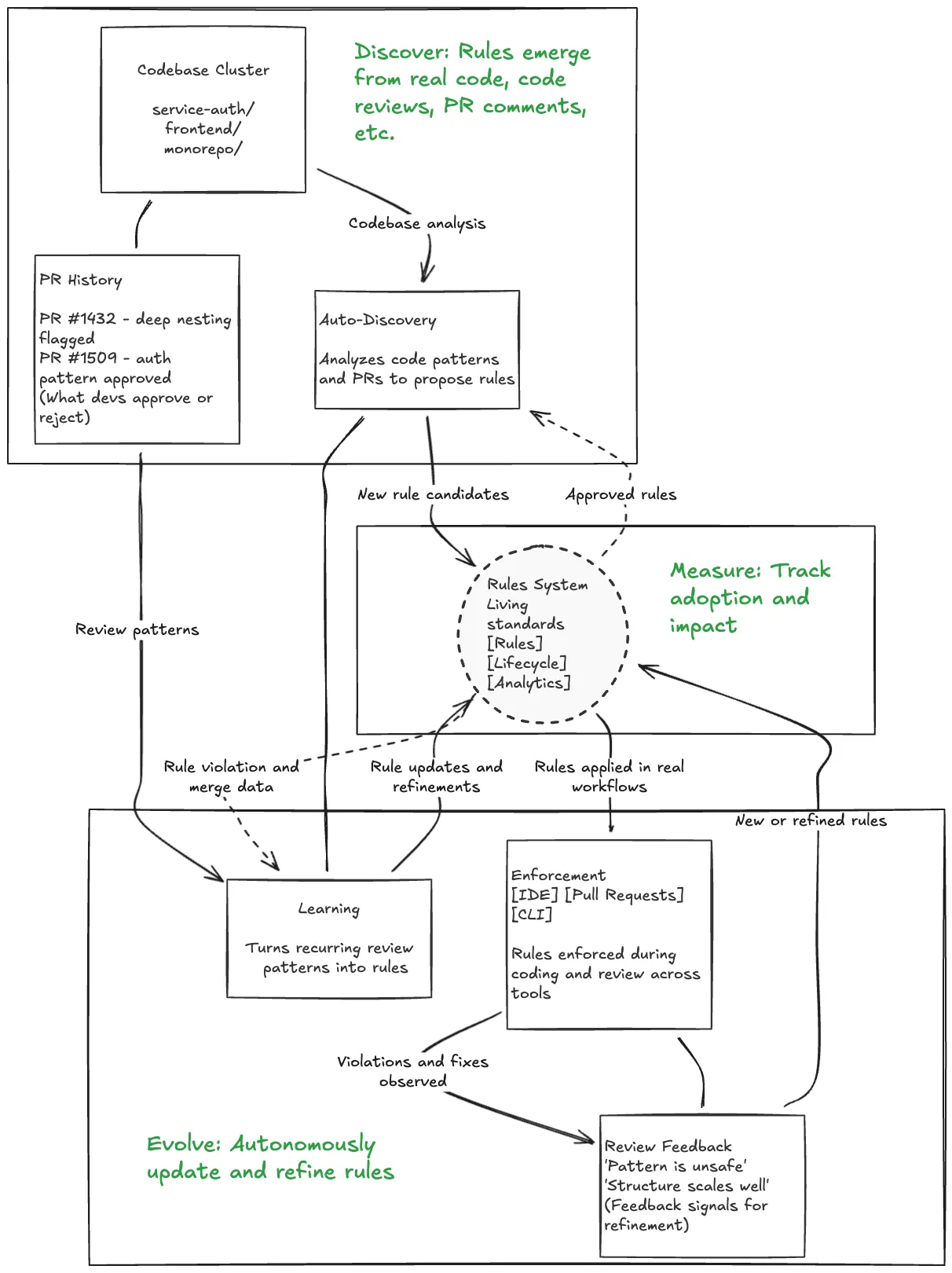

A Scalable Solution: Rules as Living Systems

We’ve been thinking a lot about what it means to enforce and therefore improve code quality with AI. And how to integrate that seamlessly into dev workflows and AI dev tool stacks.

That’s when we realized that rules won’t survive as static files. And workflow commands for updating them won’t suffice either. We had to think bigger, in terms of scalability and manageability.

Rules need to evolve with your codebase, learn from your team’s decisions, and adapt as your systems change.

A living rules system does four things that static config files can’t do.

1. Rules discover themselves from actual behavior

Instead of manually writing “use early returns instead of nested if statements,” the system learns this pattern by analyzing your codebase and PR history. It sees that reviewers consistently flag deep nesting and surfaces the pattern as a rule candidate, which you can then approve or reject.

Auto-discovery means your standards reflect how your team writes software.
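To make the idea concrete, here is a minimal sketch of discovery from review feedback. It is an illustration only, not how any particular product does it: the function names (`normalize`, `rule_candidates`), the `min_occurrences` threshold, and the sample comments are all hypothetical, and a real system would need fuzzier matching than exact text comparison.

```python
import re
from collections import Counter


def normalize(comment: str) -> str:
    """Lowercase and drop punctuation so near-identical comments group together."""
    return re.sub(r"[^a-z0-9 ]+", "", comment.lower()).strip()


def rule_candidates(comments: list[str], min_occurrences: int = 3) -> list[tuple[str, int]]:
    """Return (comment text, count) pairs that recur often enough to propose as rules.

    The threshold of 3 is an arbitrary assumption for this sketch.
    """
    counts = Counter(normalize(c) for c in comments)
    return [(text, n) for text, n in counts.most_common() if n >= min_occurrences]


# Hypothetical PR review comments.
comments = [
    "Use early returns instead of nested ifs.",
    "use early returns instead of nested ifs",
    "Use early returns instead of nested ifs!",
    "Rename this variable.",
]

candidates = rule_candidates(comments)
for text, n in candidates:
    print(f"candidate rule ({n} occurrences): {text}")
```

The output is only a list of candidates. The approve-or-reject step stays with a human, which is the point of the workflow described above.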

2. Rules maintain their own health

Static files decay. They contradict each other. They become outdated when architectures change.

A living system continuously monitors for conflicts, duplicates, and obsolete standards. It flags when two rules pull in opposite directions. It detects when a rule hasn’t triggered in months and suggests deprecation.

Maintenance becomes automated, not a quarterly cleanup ritual that never happens.
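Two of these health checks are simple enough to sketch. The snippet below assumes a hypothetical `Rule` record with a `last_triggered` date, and a 90-day idle window that I picked arbitrarily. It only catches duplicates whose text matches after whitespace and case normalization; detecting rules that genuinely contradict each other needs semantic comparison and is out of scope here.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Rule:
    # Hypothetical record shape, for illustration only.
    rule_id: str
    text: str
    last_triggered: date


def stale_rules(rules: list[Rule], today: date, max_idle_days: int = 90) -> list[str]:
    """IDs of rules that have not triggered within the idle window."""
    cutoff = today - timedelta(days=max_idle_days)
    return [r.rule_id for r in rules if r.last_triggered < cutoff]


def duplicate_pairs(rules: list[Rule]) -> list[tuple[str, str]]:
    """Pairs of rule IDs whose text is identical after normalization."""
    seen: dict[str, str] = {}
    pairs: list[tuple[str, str]] = []
    for r in rules:
        key = " ".join(r.text.lower().split())
        if key in seen:
            pairs.append((seen[key], r.rule_id))
        else:
            seen[key] = r.rule_id
    return pairs


rules = [
    Rule("R1", "Use early returns", date(2025, 1, 10)),
    Rule("R2", "use  early returns", date(2025, 6, 1)),
    Rule("R3", "Prefer composition over inheritance", date(2025, 5, 20)),
]

print(stale_rules(rules, today=date(2025, 6, 15)))  # candidates for deprecation
print(duplicate_pairs(rules))                       # candidates for merging
```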

3. Rules prove their value with analytics

You can’t improve what you don’t measure.

A living system tracks:

- Adoption rates: Which rules developers follow versus ignore

- Violation trends: Whether issues are decreasing or accumulating

- Fix rates: How often violations get resolved versus dismissed

- Impact: Which rules remain relevant and which ones should be deprecated

This turns standards from aspirational principles into measurable outcomes.
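As a rough sketch of what two of those metrics could look like in code: fix rate as the share of fixed violations among those that were either fixed or dismissed, and violation trend as the change in average weekly violations between the first and second half of a window. Both definitions, the `RuleStats` shape, and the numbers are my assumptions for illustration; a real dashboard may define them differently.

```python
from dataclasses import dataclass


@dataclass
class RuleStats:
    # Hypothetical per-rule counters.
    rule_id: str
    fixed: int
    dismissed: int


def fix_rate(stats: RuleStats) -> float:
    """Share of handled violations that were fixed rather than dismissed."""
    handled = stats.fixed + stats.dismissed
    return stats.fixed / handled if handled else 0.0


def violation_trend(weekly_counts: list[int]) -> float:
    """Average of the later half minus average of the earlier half.

    Negative means violations are decreasing; positive means they are accumulating.
    """
    if len(weekly_counts) < 2:
        return 0.0
    mid = len(weekly_counts) // 2
    early, late = weekly_counts[:mid], weekly_counts[mid:]
    return sum(late) / len(late) - sum(early) / len(early)


stats = RuleStats("early-returns", fixed=30, dismissed=10)
print(f"fix rate: {fix_rate(stats):.0%}")
print(f"trend: {violation_trend([12, 10, 8, 6]):+.1f} violations/week")
```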

4. Rules enforce consistently across tools

Your standards shouldn’t fragment across Cursor, Copilot, Claude, and your code review process.

A living system maintains one source of truth and exports to every surface where code gets written or reviewed. Same rules in the IDE, in the PR, in the CLI. Same enforcement logic. Same evolution.
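Here is a minimal sketch of the "one source, many surfaces" idea. The target paths are the conventional locations as I understand them (`.cursorrules` for Cursor, `.github/copilot-instructions.md` for Copilot, `CLAUDE.md` for Claude Code) and may vary by tool version. The function names and the flat markdown rendering are assumptions for illustration.

```python
# Conventional config paths per tool (assumed; check each tool's current docs).
TARGETS = {
    "cursor": ".cursorrules",
    "copilot": ".github/copilot-instructions.md",
    "claude": "CLAUDE.md",
}


def render_markdown(rules: list[str]) -> str:
    """Render the canonical rule list as a simple markdown document."""
    lines = ["# Team coding rules", ""]
    lines += [f"- {rule}" for rule in rules]
    return "\n".join(lines) + "\n"


def export_rules(rules: list[str], targets: dict[str, str] = TARGETS) -> dict[str, str]:
    """Map each target path to the same rendered content.

    Returns the mapping instead of writing files, so the caller decides
    where and when to write.
    """
    body = render_markdown(rules)
    return {path: body for path in targets.values()}


exported = export_rules(["Use early returns instead of nested if statements"])
for path in exported:
    print(f"would write {len(exported[path])} bytes to {path}")
```

Because every file is rendered from the same list, the tools cannot drift apart unless someone edits an exported file by hand.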

This is the closed loop that makes rules scalable: rules feed the review process, and code review feedback feeds the rules system through its continuous learning mechanism.

When a recurring PR comment becomes a rule, and that rule prevents the same issue in future PRs, you’ve automated institutional knowledge. When rule analytics show which standards are working, you’ve made quality measurable. When rules evolve based on real violations and fixes, you’ve built a system that gets smarter over time.

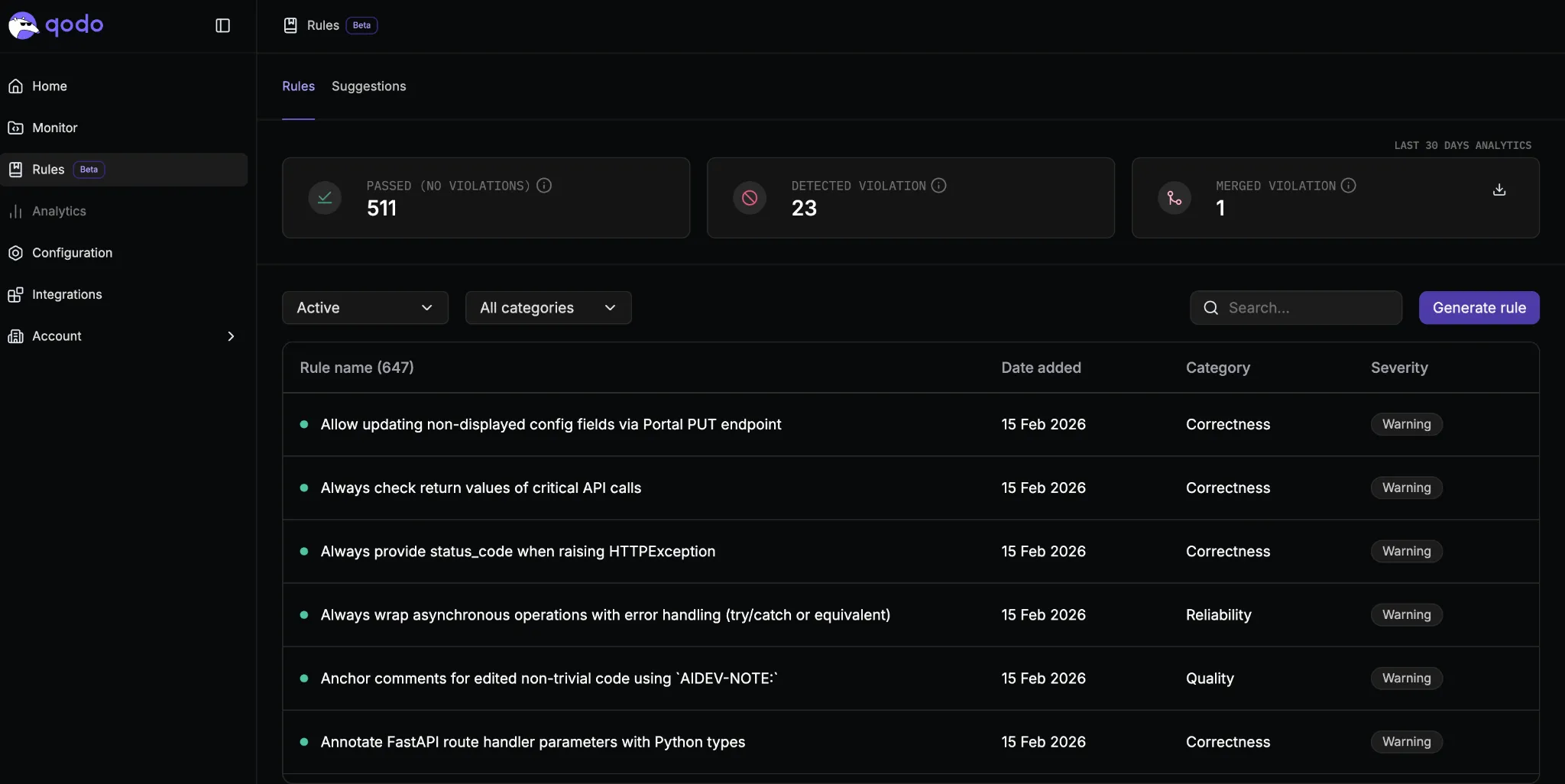

How the Rule System Works in Qodo

We built rules in Qodo as a living system from the ground up. Here’s how the architecture works:

Auto-Discovery: Rules That Create Themselves

The Rules Discovery Agent analyzes your codebase and PR history to generate rule candidates automatically.

It looks for patterns:

- Architectural decisions that appear consistently across files

- Reviewer feedback that surfaces repeatedly in PR comments

- Security practices that your team already follows

- Naming conventions and code structure preferences

Instead of starting with a blank file and manually writing rules, you start with standards your team already practices. The system codifies what “good” looks like based on your codebase, code reviews, PR comments, plus any existing rules you’d like to port over from your other tools.

You review suggested rules, approve the ones that matter, and they become enforceable immediately.

Lifecycle Management: Rules That Maintain Themselves

The Rules Expert Agent continuously monitors rule health by detecting four kinds of problems:

- Conflicts: When two rules contradict each other

- Duplicates: When multiple rules enforce the same pattern

- Decay: When rules haven’t triggered in months and might be obsolete

- Noise: When rules generate high violation rates but low fix rates

You get visibility into which rules are helping versus hurting, allowing you to deprecate outdated standards.

This is the Discover → Measure → Evolve lifecycle. Rules shouldn’t be set once and forgotten. They adapt as your codebase and team priorities change.
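The noise signal from the list above (many violations, few fixes) can be expressed as a simple threshold check. This is a sketch of the idea, not Qodo's implementation: the function name `is_noisy` and both thresholds are hypothetical values I chose for illustration.

```python
def is_noisy(
    violations: int,
    fixes: int,
    min_violations: int = 20,
    max_fix_rate: float = 0.25,
) -> bool:
    """Flag a rule that fires often but whose violations rarely get fixed.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    if violations < min_violations:
        return False  # too little data to judge the rule
    return fixes / violations < max_fix_rate


print(is_noisy(violations=200, fixes=10))   # fires a lot, rarely fixed
print(is_noisy(violations=200, fixes=150))  # fires a lot, usually fixed
print(is_noisy(violations=5, fixes=0))      # not enough data yet
```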

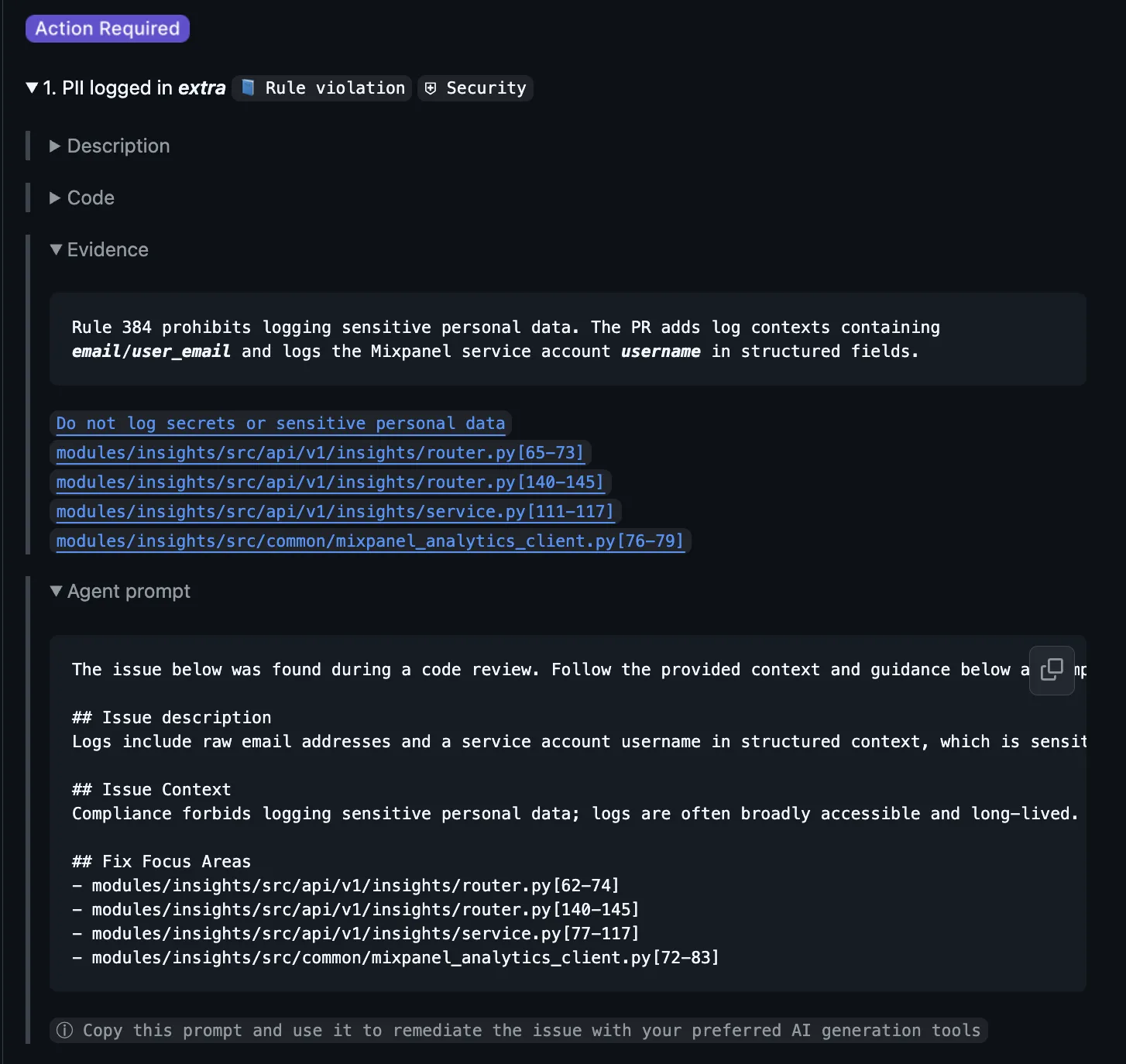

Enforcement: Rules That Work Everywhere

Rules in Qodo are enforced automatically during code review, both in pull requests and locally in your development environment.

When a developer writes code that violates a rule:

- In the IDE: Qodo flags the issue locally with structured remediation guidance

- In the PR: Review agents catch violations before merge and suggest fixes

- Across repos: Same standards apply whether you’re working in the monorepo or a microservice

Developers see why the rule exists, what the preferred pattern is, and how to fix it. The feedback loop is immediate and actionable.

Analytics: Rules That Prove Themselves

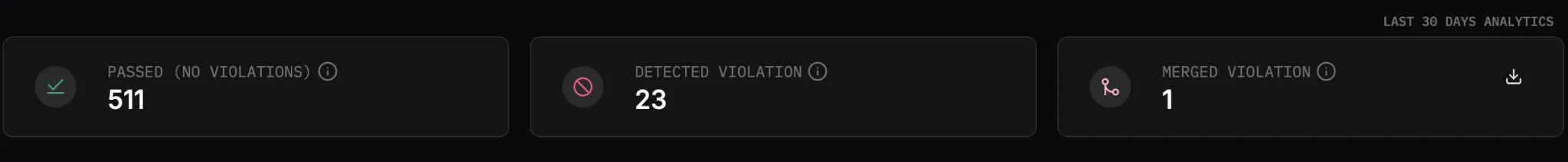

The analytics dashboard answers four questions:

- Adoption trends: Are developers writing code that adheres to the rules or ignoring them?

- Violation patterns: Where do issues cluster?

- Fix rates: How often do violations get resolved in PRs?

- Impact over time: Is code quality improving?

This is how you move from “we think our standards matter” to “here’s data showing which standards prevent real issues.”

Engineering leaders get visibility. Developers get clarity. Standards become measurable.

Cross-Tool Export: One Source, Many Surfaces

Rules in Qodo live in one central portal. But they don’t stay there.

You can export your rules to:

- Cursor

- GitHub Copilot (as instructions)

- Claude (as custom instructions)

- Any AI coding tool that reads config files

Same standards, consistent enforcement, no fragmentation.

Your developers see the same guidance whether they’re coding in VS Code, opening a PR in GitHub, or running a review in the CLI.

This is what makes the approach scale: you manage standards in one place, and they propagate everywhere.

The Goal is Engineering Velocity

When rules are living systems, the engineering process changes.

Onboarding becomes faster and more consistent. New developers don’t have to reverse-engineer standards from old PR comments. They see how code is supposed to be written because the rules are discoverable, enforced, and continuously updated.

AI tools stay aligned on your standards. Instead of every tool optimizing for its own context, they all follow the same governance layer. Code generation and code review speak the same language.

Quality becomes measurable. You’re not hoping standards are followed. You’re tracking adoption, measuring impact, and proving improvement with data.

The engineering process gets codified. Institutional knowledge becomes discoverable rules that survive team changes, onboarding cycles, and architectural evolution.

This is what it looks like when standards scale with your team.

Bring Rule Systems into Your Code Quality Stack

Your team already has coding standards. They’re just scattered, static, and unenforceable.

Static config files are archaeology. Living systems are architecture.

AI’s velocity makes this distinction very clear. That’s why we need rules that create themselves, maintain themselves, and prove themselves for dev teams.

That’s what I call code quality governance with AI for enterprise software.

Learn more about rules in Qodo →