3 Steps for Securing Your AI-Generated Code

The rapid adoption of AI-generated code is reshaping software development, with tools like GitHub Copilot and ChatGPT enhancing productivity and creativity. These tools automate repetitive tasks, streamline workflows, and enable developers to focus on more complex problems. However, this convenience comes with a caveat: security risks.

While powerful, AI-generated code may lack built-in safeguards for secure programming and may propagate vulnerabilities, insecure configurations, or errors that compromise source code security. This article outlines three practical steps to secure AI-generated code while still capitalizing on its benefits.

What Is AI-Generated Code?

AI-generated code refers to software created or supplemented by AI tools that leverage machine learning models trained on vast datasets. For instance, GitHub Copilot suggests lines of code as developers type, while ChatGPT generates entire functions based on prompts.

Benefits of AI-Generated Code

- Increased productivity: Automates mundane coding tasks, allowing faster project delivery.

- Enhanced creativity: Provides alternative solutions and code snippets to solve complex problems.

Risks of AI-Generated Code

- Security vulnerabilities: AI may generate insecure code that lacks validation or encryption.

- Insecure defaults: Without oversight, AI tools can introduce hardcoded credentials or unprotected APIs.

While AI tools are incredibly valuable, they aren’t a complete replacement for a developer’s expertise when it comes to secure code development. To mitigate risks, teams must prioritize source-code security at every stage of development.

Step 1: Training and Thorough Examination

AI tools like GitHub Copilot and ChatGPT can expedite development, but they lack the contextual understanding of secure programming principles, making developer expertise indispensable for ensuring code security. Developers trained in spotting potential vulnerabilities can critically evaluate AI-generated code, preventing flaws that could lead to severe security incidents, such as data breaches or compliance failures.

Examples of risks without training:

- Hardcoded credentials: AI-generated code might embed sensitive keys or passwords, exposing systems to unauthorized access.

- Insecure configurations: Default settings suggested by AI could open attack vectors.

- Data exposure: Improper handling of input/output may lead to sensitive data leaks.
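As a minimal sketch of the alternative to hardcoded credentials, the snippet below resolves a secret from the environment at runtime and fails loudly when it is missing. The helper `load_secret`, the exception `MissingSecretError`, and the variable name `PAYMENT_API_KEY` are hypothetical names chosen for illustration; a real project might use a dedicated secrets manager instead.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not configured (hypothetical helper type)."""


def load_secret(name: str) -> str:
    """Return the secret stored in the environment variable `name`.

    Failing loudly is safer than silently falling back to a default
    value that is baked into the source code.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"Environment variable {name!r} is not set")
    return value


# Pattern to avoid in reviewed code:  PAYMENT_API_KEY = "some-literal-key"
# Safer pattern: look the value up when it is needed, for example:
#   api_key = load_secret("PAYMENT_API_KEY")
```

A reviewer who sees a string literal assigned to a key, password, or token in AI-generated code can ask for it to be replaced with a lookup like this one.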

Key Aspects of Training

To enhance source code security, developers should focus on:

1. Input validation

- Protect applications from injection attacks by ensuring all user inputs are sanitized.

- Use frameworks like OWASP ESAPI to validate inputs dynamically.

2. SQL injection prevention

- Implement parameterized queries or stored procedures to prevent attackers from manipulating SQL commands.

- ORM frameworks like Hibernate or SQLAlchemy parameterize queries by default, which removes many injection risks, though raw SQL passed through an ORM still needs the same care.

3. Authentication protocols

- Adopt strong, multi-factor authentication (MFA) mechanisms and modern standards like OAuth 2.0.

- Ensure secure session management practices.
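To make the first two points concrete, here is a small sketch that combines allow-list input validation with a parameterized query, using Python's standard `sqlite3` module. The table layout, the username rule, and the function names (`validate_username`, `find_user`, `make_demo_db`) are assumptions made for the example, not part of any particular framework.

```python
import re
import sqlite3

# Allow-list rule (an assumption for this sketch): 3 to 32 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(username: str) -> str:
    """Reject anything that does not match the allow-list pattern."""
    if not USERNAME_PATTERN.fullmatch(username):
        raise ValueError("invalid username")
    return username


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query.

    The `?` placeholder keeps the value separate from the SQL text, so the
    driver never interprets user input as part of the statement.
    """
    username = validate_username(username)
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchone()


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one sample row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT UNIQUE)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))
    return conn
```

When reviewing AI-generated data access code, the pattern to look for is SQL built with string concatenation or f-strings; the placeholder form above is the usual replacement.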

The Examination Process

AI-generated code should always undergo a rigorous examination. For maximum source code security, integrate these steps into the workflow:

Manual code reviews

- Conduct peer reviews to identify nuanced issues like logical errors, insecure access permissions, or subtle vulnerabilities missed by automated tools.

- Use collaborative tools such as GitHub or GitLab for structured code review processes.

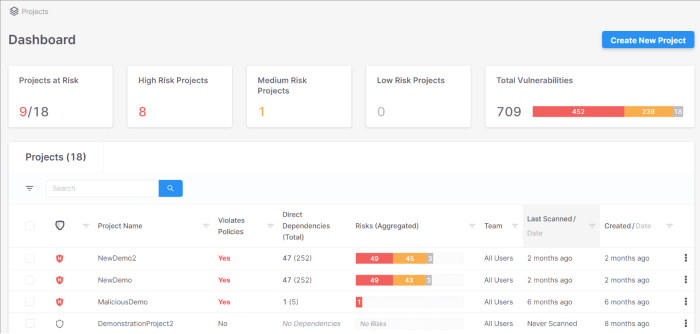

Automated security checks

- Employ DevSecOps tools like SonarQube, Veracode, or Snyk designed to detect unsafe dependencies, insecure libraries, or deprecated APIs in real time.

- Automated tools offer rapid insights but should complement, not replace, manual reviews.

Real-World Application

Researchers found that AI-generated code from Copilot often contains vulnerabilities such as hardcoded credentials, insecure defaults, and SQL injection flaws. One study demonstrated that nearly 40% of the code generated by Copilot in security-critical scenarios contained exploitable vulnerabilities. This stems from the fact that AI models are trained on vast datasets that include code with inherent flaws or outdated security practices.

Step 2: Continuous Monitoring and Auditing

Even after thorough reviews, AI-generated code requires ongoing monitoring to ensure source-code security. Runtime environments can reveal new vulnerabilities not apparent during static analysis or code reviews, such as edge cases, dependency conflicts, or unforeseen interactions between components. Continuous monitoring helps identify and address these risks in real time.

Types of Monitoring

1. Proactive monitoring with DevSecOps tools:

Integrating DevSecOps tools into CI/CD pipelines enables early detection of security flaws during development and deployment.

- SonarQube: Analyzes source code to find bugs, vulnerabilities, and code smells, highlighting issues like weak authentication logic or unsafe API usage.

- Snyk: Monitors for vulnerabilities in dependencies and container images, providing detailed remediation advice directly in development environments.

2. Log tracking and analysis:

Application and system logs are invaluable sources for identifying anomalies.

- Elastic Stack (ELK): Provides centralized log collection, real-time visualization, and anomaly detection.

- Amazon CloudWatch: Captures logs and metrics from AWS resources, enabling targeted alerts for unusual behaviors.


Auditing for Compliance and Security

While monitoring is real-time, auditing provides a retrospective analysis of code and practices to ensure they meet organizational standards and compliance regulations.

1. Key focus areas in auditing:

- Codebase integrity: Check for outdated or insecure libraries.

- Security practices: Review adherence to protocols like OAuth 2.0 and verify TLS configurations.

- Compliance: Verify compliance with standards such as GDPR, HIPAA, or PCI-DSS.

2. Essential tools for continuous auditing:

- Amazon Inspector: Evaluates EC2 instances, Lambda functions, and container images for vulnerabilities.

- Dependabot: Automates dependency updates, flagging and patching outdated or vulnerable libraries.

Best Practices for Continuous Monitoring and Auditing

- Establish a feedback loop: Insights from monitoring should directly inform patches and updates. For example, detecting repetitive injection attempts in logs might indicate the need to strengthen input validation in the codebase.

- Simulate attack scenarios: Conduct penetration tests to uncover gaps that monitoring tools might miss, such as logical vulnerabilities in multi-step workflows.

- Automate wherever possible:

  - Use tools like Jenkins or GitLab CI to automate security scans during each build.

  - Schedule periodic vulnerability scans and audits to ensure ongoing code integrity.

- Use real-time alerts: Set up automated notifications for critical vulnerabilities. For example, Amazon SNS can be used with Amazon Inspector to send alerts to development teams for immediate remediation.
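The feedback-loop idea can be sketched with a few lines of log analysis: count requests that look like injection attempts per client, then surface the clients that cross a threshold. The log line format (`<ip> <method> <path-and-query>`), the tiny signature list, and the function names are all assumptions for illustration; production detection rules in tools like the Elastic Stack are far broader.

```python
import re
from collections import Counter
from urllib.parse import unquote_plus

# Deliberately small, illustrative signature list (an assumption, not a real rule set).
SUSPICIOUS = re.compile(
    r"(union\s+select|or\s+1\s*=\s*1|<script\b|\.\./)", re.IGNORECASE
)


def count_suspicious_requests(log_lines):
    """Count suspicious-looking requests per client IP.

    Assumes each line has the form "<ip> <method> <path-and-query>".
    Lines that do not fit that shape are skipped.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split(" ", 2)
        if len(parts) != 3:
            continue
        ip, _method, target = parts
        # Decode URL encoding first so "%20OR%201=1" is seen as " OR 1=1".
        if SUSPICIOUS.search(unquote_plus(target)):
            hits[ip] += 1
    return hits


def ips_over_threshold(hits, threshold=3):
    """Return the IPs with at least `threshold` suspicious requests, sorted."""
    return sorted(ip for ip, count in hits.items() if count >= threshold)
```

If the same endpoint keeps showing up in these counts, that is a signal to revisit its input validation rather than only blocking the client.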

Step 3: Implement Rigorous Code Review Processes

Code reviews are a cornerstone of secure code development, as they help identify vulnerabilities and improve the overall quality of AI-generated code. While automated tools like static analyzers and linters can catch many common vulnerabilities, they can’t replace the nuanced understanding that human reviewers bring to the table.

Manual reviews allow for a deeper inspection of business-critical logic, authentication flow, or edge cases that automated tools might miss. They also ensure that the AI-generated code aligns with both security requirements and industry best practices.

In secure development, even AI-generated code requires critical human oversight. AI tools are trained on large datasets, which may include insecure code or outdated practices. Developers who review AI-generated code can apply current security principles and business requirements, ensuring the resulting code is both secure and relevant.

Best Practices for Code Reviews

1. Adopt secure coding guidelines

Establishing a set of clear and enforceable coding standards that focus on security is essential. These guidelines should cover:

- Secure input validation practices.

- Proper handling of authentication tokens and credentials.

- Guidelines for secure API integrations, including avoiding common pitfalls like cross-site scripting (XSS) and cross-site request forgery (CSRF).

Resources like OWASP’s Secure Coding Practices and the CIS (Center for Internet Security) Benchmarks can serve as valuable references when developing internal guidelines.

2. Encourage peer reviews

Peer reviews leverage the collective expertise of the development team. When multiple developers review the code, the likelihood of spotting vulnerabilities increases.

For example, one developer might be an expert in authentication flows, while another might have experience with securing APIs. By involving multiple reviewers, teams can ensure that AI-generated code adheres to security principles in all aspects.

3. Leverage security tools

Static analysis tools like SonarQube, Checkmarx, or Fortify help identify easily detectable vulnerabilities. These tools automatically scan for things like:

- Unused variables or dead code, which can enlarge the attack surface.

- SQL injection vulnerabilities due to unsafe query handling.

- Hardcoded secrets or configuration issues, such as weak default passwords.

Linters like ESLint for JavaScript or Pylint for Python can be used to automate checks for known coding anti-patterns that can lead to security flaws, ensuring consistent adherence to secure coding practices.
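To give a feel for what such tools do under the hood, the sketch below uses Python's standard `ast` module to flag assignments where a sensitive-looking variable name receives a non-empty string literal. The name list and the function `find_hardcoded_secrets` are assumptions for this example; it is a toy check, not how SonarQube, Checkmarx, Fortify, or Pylint actually implement their rules.

```python
import ast

# Substrings that suggest a variable holds a secret (illustrative assumption).
SENSITIVE_NAMES = ("password", "passwd", "secret", "api_key", "token")


def find_hardcoded_secrets(source: str):
    """Return sorted (line, variable) pairs for suspicious string assignments.

    Only simple `name = "literal"` assignments are inspected; values read
    from the environment or a function call are not string literals and
    are therefore ignored.
    """
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Assign):
            continue
        value = node.value
        is_nonempty_string = (
            isinstance(value, ast.Constant)
            and isinstance(value.value, str)
            and value.value != ""
        )
        if not is_nonempty_string:
            continue
        for target in node.targets:
            if isinstance(target, ast.Name) and any(
                marker in target.id.lower() for marker in SENSITIVE_NAMES
            ):
                findings.append((node.lineno, target.id))
    return sorted(findings)
```

A check this simple produces false positives and misses plenty, which is one reason automated findings still need a human reviewer.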

Balancing Automation and Manual Reviews

A balanced approach to code reviews integrates both automated tools and manual inspection to catch vulnerabilities across different levels.

1. Automated reviews

Automated tools, such as SonarQube, Snyk, and Codacy, can scan code quickly and identify easily detectable issues. They are particularly effective for:

- Identifying insecure coding patterns, such as improper exception handling.

- Scanning for common vulnerabilities like buffer overflows or insecure data storage.

- Checking for outdated or vulnerable third-party dependencies.

Automated scans should be integrated into CI/CD pipelines, ensuring every code change is automatically analyzed before being deployed. This integration helps detect issues early in the development cycle, which reduces the cost and time spent on patching after deployment.
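One way to wire a scan into a pipeline is a small gate script that reads the scanner's report and returns a non-zero exit code when blocking findings exist. The sketch below assumes a hypothetical JSON report of the form `{"findings": [{"id": ..., "severity": ...}]}`; real tools such as SonarQube, Snyk, and Codacy each have their own report formats and built-in quality gates, so treat this purely as an illustration of the idea.

```python
import json

# Severity ranking used by this sketch (an assumption, not a tool's schema).
SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(report_json: str, fail_on: str = "high"):
    """Return findings whose severity is at or above `fail_on`.

    Unknown severity labels are treated as the highest level, so the gate
    fails closed instead of letting unrecognized findings through.
    """
    threshold = SEVERITY_ORDER[fail_on]
    highest = max(SEVERITY_ORDER.values())
    findings = json.loads(report_json)["findings"]
    return [
        finding
        for finding in findings
        if SEVERITY_ORDER.get(str(finding["severity"]).lower(), highest) >= threshold
    ]


def exit_code(report_json: str, fail_on: str = "high") -> int:
    """Return 1 if the build should fail, otherwise 0."""
    return 1 if blocking_findings(report_json, fail_on) else 0
```

In a CI job, the script would pass the result of `exit_code` to `sys.exit`, which makes Jenkins or GitLab CI mark the stage as failed.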

2. Manual reviews

While automated tools can flag obvious vulnerabilities, manual reviews are essential for catching more complex issues. Developers should manually review the following:

- Inconsistent usage of secure libraries or outdated security patterns.

- Edge cases and failure modes that could lead to unintended data leakage, such as improper handling of error messages or race conditions.

Example: In an AI-generated API implementation, an automated tool might miss a subtle issue like authorization bypass, where an endpoint doesn’t properly validate permissions. A manual reviewer would catch this issue by carefully inspecting the business logic and ensuring role-based access controls are applied consistently across all endpoints.
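The authorization-bypass example can be illustrated with a small role check applied as a decorator, so that a missing check becomes visible in review as a handler without the decorator. The names `User`, `require_role`, `PermissionDenied`, and `delete_invoice` are hypothetical and not tied to any web framework.

```python
from dataclasses import dataclass
from functools import wraps


class PermissionDenied(Exception):
    """Raised when the caller lacks the required role."""


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset = frozenset()


def require_role(role: str):
    """Decorator that rejects calls from users who do not hold `role`."""

    def decorator(handler):
        @wraps(handler)
        def wrapper(user: User, *args, **kwargs):
            if role not in user.roles:
                raise PermissionDenied(f"{user.name} lacks role {role!r}")
            return handler(user, *args, **kwargs)

        return wrapper

    return decorator


@require_role("admin")
def delete_invoice(user: User, invoice_id: int) -> str:
    """Protected endpoint: only admins reach this body."""
    return f"invoice {invoice_id} deleted by {user.name}"


# A reviewer should question any state-changing handler that appears
# without a decorator like the one above.
```

Centralizing the check this way gives reviewers one pattern to look for across all endpoints instead of reading each handler's body for ad hoc permission logic.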

Conclusion

The productivity gains of AI-generated code are undeniable, but they also come with risks that demand proactive measures. By following these three steps:

- Training and thorough examination: Equip developers with the knowledge to identify and mitigate risks.

- Continuous monitoring and auditing: Use DevSecOps tools to detect runtime vulnerabilities.

- Rigorous code review processes: Combine automated and manual reviews to ensure comprehensive source code security.

…organizations can confidently leverage AI tools for secure code development while minimizing vulnerabilities. As AI evolves, so too must our security strategies, ensuring that innovation and safety go hand in hand.