Best Amazon Q Alternatives for Developer Teams in 2025

TL;DR

- Amazon Q works best for AWS-native tasks like IAM or CloudFormation, but its pricing can add overhead for small or non-AWS teams.

- The setup process, including workspace indexing and AWS account configuration, can feel heavy for small teams or fast-paced environments.

- Although Q is built on Amazon Bedrock, which hosts models like Anthropic’s Claude, it doesn’t let teams manually choose which model handles a prompt, limiting control over performance, cost, or compliance.

- Tools like Qodo, Cursor, and Claude Code provide more flexible code understanding, faster onboarding, and better test and PR automation for real-world engineering teams.

- Qodo stands out by combining RAG-based context retrieval, strong IDE and Git integrations, and agent workflows that fit well into enterprise CI pipelines and internal coding standards.

Amazon Q is AWS’s enterprise AI assistant, offered in two versions: Q Business and Q Developer. Q Business is aimed at non-technical teams needing secure access to enterprise data through natural-language queries across sources like SharePoint, Confluence, or internal wikis. Q Developer, in contrast, is positioned for engineering teams working within the AWS ecosystem. It assists with code generation, infrastructure scaffolding, and DevOps-related tasks using context from services like CloudFormation and CloudTrail, and it incorporates the coding features previously offered as Amazon CodeWhisperer.

I evaluated Amazon Q Developer while setting up a serverless monitoring pipeline using EventBridge, Step Functions, and Kinesis Firehose. For common configurations, like defining EventBridge rules or creating IAM roles, it returned well-structured Terraform and JSON snippets aligned with AWS best practices, often ready to use with minimal edits.

In my experience, Amazon Q Developer performs best for well-documented infrastructure tasks within the AWS ecosystem, such as generating IAM policies or CloudFormation snippets. However, for more complex implementations or edge-case configurations, especially those involving newer AWS features or CDK abstractions, it may require closer oversight. While it offers IDE extensions, teams that depend on advanced editor workflows, such as multi-file refactoring, test suite generation, or automated pull request handling, may find more specialized support in alternative tools.

There’s also growing demand for LLM flexibility, and this is where Amazon Q falls short. Amazon Q is built on Amazon Bedrock, which offers a variety of foundation models such as Amazon Titan, Anthropic’s Claude, and Cohere. Amazon Q routes each task to the model it considers most suitable based on internal logic and task type, but it does not let users select specific LLMs for individual prompts or workflows.

With all that in mind, I’ve researched and outlined the top Amazon Q alternatives that deliver stronger IDE integration and model flexibility, helping developer teams meet their evolving needs in 2025.

When to Consider an Amazon Q Alternative

Choosing the right AI code assistant depends on how well it fits your team’s workflows, infrastructure, and constraints. While Amazon Q Developer can be a great fit for some companies, here are the situations where you may need to complement it with other tools:

You Want Faster or More Flexible Codebase Indexing

Amazon Q requires an explicit workspace indexing step before it can use your local codebase as context. This process typically takes between 5 and 20 minutes for new repositories and may cause elevated CPU usage during that time. Indexing is incremental after the initial run, updating as you save files or navigate across tabs.

However, you must manually invoke @workspace in the prompt to use that context, and any newly cloned or substantially changed project requires reindexing from scratch. For teams working across multiple repositories or frequently switching contexts, this adds steps before the assistant becomes useful: each context switch requires a fresh indexing pass, which delays accurate responses and adds overhead during time-sensitive development tasks.

In contrast, many newer tools eliminate this manual setup entirely, using automatic, real-time indexing and scoped context control. They build persistent RAG-style embeddings that adapt to project changes without explicit triggers.
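To make "adapting to project changes without explicit triggers" concrete, here is a minimal sketch of how a tool might detect what changed between two indexing passes, so that only added or modified files need re-embedding instead of the whole repository. It is a hypothetical illustration, not how Amazon Q or any specific tool works internally: it compares SHA-256 content hashes, and the file suffixes and function names are assumptions. The embedding step itself is left out.

```python
import hashlib
from pathlib import Path


def snapshot(root: Path, suffixes=(".py", ".go", ".tf")) -> dict[str, str]:
    """Map each matching file's relative path to a SHA-256 digest of its bytes."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            rel = path.relative_to(root).as_posix()
            digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def diff_snapshots(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Classify files so only 'added' and 'changed' ones need re-embedding."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),  # drop their stale vectors
        "changed": sorted(p for p in new.keys() & old.keys() if new[p] != old[p]),
    }
```

A watcher (or a save hook) would call `snapshot` again after edits and feed only the changed paths to the embedding model, which is what keeps incremental updates cheap compared with a full first pass.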

You’re Working Beyond Amazon Q’s Review Quotas or Language Scope

Amazon Q Developer supports code reviews for common languages and infrastructure files, assessing everything from SAST (static application security testing) issues to deployment risks using a combination of rule-based analysis and generative AI. However, reviews are bound by project size quotas: up to 50 MB of source code and 500 MB of total project files for full scans, and only 200 KB for auto reviews triggered during development.

These limits may be restrictive for teams working with larger monorepos, polyglot stacks, or deeply nested infrastructure. Additionally, reviews exclude unsupported languages, test files, and open-source components during filtering.
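If you want a quick way to see whether a repository is near these limits, a rough pre-check like the one below can help. It hard-codes the quotas exactly as quoted above, assumes 1 MB = 1,048,576 bytes and 1 KB = 1,024 bytes (AWS may count differently), and treats the auto-review figure as the size of whatever code an auto review would cover. `review_fit` is a hypothetical helper, not part of any AWS tooling; verify the current quotas in the Amazon Q Developer documentation before relying on it.

```python
KB = 1024
MB = 1024 * KB

# Quotas as quoted in this article (assumption: binary units).
FULL_SCAN_SOURCE_LIMIT = 50 * MB
FULL_SCAN_TOTAL_LIMIT = 500 * MB
AUTO_REVIEW_LIMIT = 200 * KB


def review_fit(source_bytes: int, total_bytes: int, auto_review_bytes: int) -> dict[str, bool]:
    """Report which review modes a project of this size would fit into."""
    return {
        # A full scan needs both the source size and the total project size under quota.
        "full_scan": source_bytes <= FULL_SCAN_SOURCE_LIMIT
        and total_bytes <= FULL_SCAN_TOTAL_LIMIT,
        # Auto reviews only look at a small slice of code during development.
        "auto_review": auto_review_bytes <= AUTO_REVIEW_LIMIT,
    }
```

For example, a monorepo with 120 MB of source fails the full-scan check regardless of its total size, which is exactly the situation the paragraph above describes.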

If your workflows involve more dynamic language coverage, repo-wide reviews across test layers, or real-time scanning without size constraints, a more flexible review solution may be a better fit.

You’re a Small Team Looking to Avoid Setup and Cost Overhead

Amazon Q Developer Pro costs $19 per user per month, and features like code transformations or advanced scans are subject to usage limits (e.g., code transformation covers up to 4,000 lines of code per user per month, with overage billed at $0.003 per extra line).
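To see how these numbers combine, here is a small cost estimator using the figures above. It assumes the per-user transformation allowance is pooled across the whole team, which may not match exactly how AWS meters it, and it ignores taxes and any other add-ons; check the current Amazon Q Developer pricing page before budgeting. `monthly_cost` is a hypothetical helper. `Decimal` is used so cent values don't pick up floating-point error.

```python
from decimal import Decimal

SEAT_PRICE = Decimal("19")           # USD per user per month (Pro tier)
LINES_PER_USER = 4_000               # transformation allowance per user per month
OVERAGE_PER_LINE = Decimal("0.003")  # USD per line beyond the allowance


def monthly_cost(users: int, lines_transformed: int) -> Decimal:
    """Seat cost plus transformation overage for one month."""
    allowance = users * LINES_PER_USER  # assumption: allowance is pooled team-wide
    overage_lines = max(0, lines_transformed - allowance)
    return users * SEAT_PRICE + overage_lines * OVERAGE_PER_LINE


print(monthly_cost(5, 30_000))
```

For a five-person team transforming 30,000 lines in a month, that is $95 in seats plus (30,000 - 20,000) x $0.003 = $30 in overage, or $125 total. A single large migration can therefore move the bill noticeably for a small team.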

While a free tier exists, it caps agentic chats and code transformations at very low usage thresholds.

Setting up Q Developer also requires configuring IAM identities or AWS Builder ID access, enabling workspace indexing, and installing extensions in supported IDEs, which can add operational steps up front.

For early-stage teams that don’t yet rely on IAM roles, CloudFormation, or centralized AWS organizations, the required setup (Builder ID access, workspace indexing, and IAM integration) can feel disproportionate to the benefit. If your usage varies or depends on quick iterations, hitting transformation limits or triggering reindexing workflows can interrupt momentum rather than accelerate it.

You Prefer a Lightweight Setup Over an AWS Account and Plugin Configuration

Amazon Q Developer requires several setup steps before it becomes usable: you must install the IDE extension (for VS Code, JetBrains, or Cloud9), authenticate using an AWS Builder ID or IAM Identity Center login, and manually enable workspace indexing by invoking @workspace and checking the “Workspace Index” box in your IDE settings.

These steps are prerequisites for functions like multi-file chat context and code reviews. While necessary for enterprise-grade security and AWS resource access, they can incur overhead in the form of user permissions, extension sync across environments, and time spent initializing each workspace, especially for small teams or rapid prototyping environments where fast onboarding and minimal configuration are priorities.

Amazon Q Alternatives: Detailed Tool Reviews

Qodo (Top Pick)

Qodo is the best Amazon Q alternative that helps teams produce review-ready code aligned with internal standards.

What I find most useful is the consistent quality of code generation, driven by its Retrieval-Augmented Generation (RAG) pipeline. Qodo retrieves relevant context from across the repo, including function-level details, config files, and even commit history, and uses it to generate suggestions that match established patterns and rules. It integrates directly into VS Code, JetBrains IDEs, and Git platforms (GitHub, GitLab, Bitbucket), and provides a CLI and a Chrome extension for end-to-end assistance.

Best For

Teams and senior engineers who:

- Manage multi-language, multi-module repos.

- Require robust unit test coverage with edge-case handling.

- Want PR-ready improvements and code review automation.

- Need multi-agent workflows or CLI-based agents.

Features

- Qodo Gen: Index-based code generation, test scaffolding, chat-driven agent mode for multi-step workflows

- Qodo Cover: Analyzes repositories to extend test suites and improve coverage; works as a GitHub Action or CLI

- Qodo Merge: Chrome extension offers PR descriptions, AI suggestions, /review, /ask, and /improve commands, and vulnerability detection

- Qodo Command: fully scriptable CLI that lets you generate code, configure agents, and automate context-rich LLM workflows across local or CI environments

- Multiple model support: GPT‑4.1/4o/4-mini, Claude Sonnet, Gemini, Qodo’s own hosted models
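Extending a test suite to improve coverage, as Qodo Cover is described above, implies a filter step: a generated test is only worth keeping if it passes and covers something the suite didn't already cover. The sketch below shows that generate-validate-keep idea in isolation. It is a simplified illustration, not Qodo's code; `CandidateTest` and `select_tests` are hypothetical names, and in a real tool the pass/fail result and covered lines would come from actually running each test under a coverage tool.

```python
from dataclasses import dataclass, field


@dataclass
class CandidateTest:
    name: str
    passed: bool                                        # did it build and pass?
    covered_lines: set[int] = field(default_factory=set)


def select_tests(baseline: set[int], candidates: list[CandidateTest]) -> tuple[list[str], set[int]]:
    """Greedily keep passing tests that add coverage beyond what is already covered."""
    covered = set(baseline)
    kept = []
    for test in candidates:
        new_lines = test.covered_lines - covered
        if test.passed and new_lines:
            kept.append(test.name)
            covered |= new_lines
    return kept, covered
```

Discarding failing or redundant tests keeps the suite from growing with noise, which matters when an agent can produce many candidates per run.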

First‑Hand Example

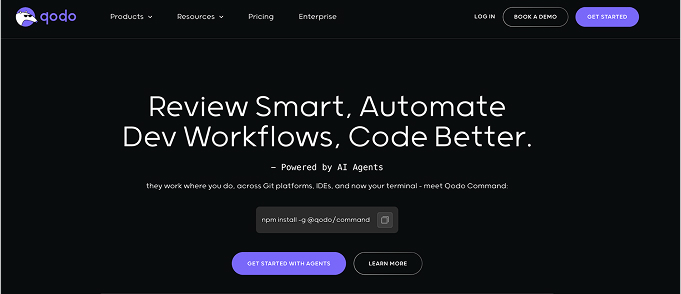

I used Qodo Gen to set up the infrastructure and main application code for a multi-region event processing system at a financial company. The architecture included Kafka for ingesting transaction events, a Go-based rules engine for fraud detection, and downstream integrations with AWS SNS for alerting and an internal audit service for logging flagged events.

To test Qodo’s code assistance capabilities, I started by pointing Qodo Gen at a monorepo with separate folders for Terraform, microservices, and utility libraries. Using its chat agent mode, I asked it to generate a baseline for a fraud detection microservice in Go, integrating with Kafka consumers, Redis for rate-limiting, and a shared protobuf schema for event validation.

The screenshot above shows a snapshot of the output.

Qodo pulled in the correct Kafka consumer patterns from an existing utility, followed our custom logging conventions, and respected the Terraform module structure we already used for SNS topic creation.

What I really liked was its ability to suggest IAM policy updates directly tied to the resources this service required, all in one interaction. It even generated unit test scaffolding for the rules engine (risk-based thresholds, geolocation flags, and transaction type filters), inferring the structure from previously indexed tests in fraud-engine/test/thresholds_test.go and fraud-engine/test/geo_test.go, which made the generated scaffolds both relevant and complete.
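The three rule families those scaffolds targeted are easy to picture in code. The real service was written in Go; this sketch is in Python for brevity, and the `Transaction` fields, the threshold value, and the watched transaction types are all hypothetical, not taken from the actual fraud engine. Returning a list of triggered reasons, rather than a single bool, keeps each rule independently testable with table-driven cases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    amount: float
    country: str        # where the transaction originated
    home_country: str   # the account holder's registered country
    txn_type: str


# Hypothetical rule parameters, for illustration only.
RISK_THRESHOLD = 10_000.0
WATCHED_TYPES = {"wire", "crypto"}


def flag_reasons(txn: Transaction) -> list[str]:
    """Return every rule the transaction trips; an empty list means no flag."""
    reasons = []
    if txn.amount >= RISK_THRESHOLD:       # risk-based threshold
        reasons.append("amount_over_threshold")
    if txn.country != txn.home_country:    # geolocation flag
        reasons.append("geo_mismatch")
    if txn.txn_type in WATCHED_TYPES:      # transaction type filter
        reasons.append("watched_type")
    return reasons


# Table-driven checks in the style of thresholds_test.go / geo_test.go.
CASES = [
    (Transaction(50.0, "US", "US", "card"), []),
    (Transaction(10_000.0, "US", "US", "card"), ["amount_over_threshold"]),
    (Transaction(20.0, "FR", "US", "wire"), ["geo_mismatch", "watched_type"]),
]
for txn, expected in CASES:
    assert flag_reasons(txn) == expected, (txn, expected)
```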

For enterprise workflows involving multiple stacks, where infrastructure modules, application code, and test coverage all need to align, Qodo’s ability to retrieve and reason across boundaries meant I didn’t have to manually piece together the IAM policies, Terraform definitions, and unit test templates. It effectively replaced hours of context syncing with a single interaction.

Pros

- Rich agentic workflows for multi-step tasks across IDE and CLI

- Deep test generation, covers rare paths, supports CI integration

- PR automation catches vulnerabilities and generates detailed summaries

- Supports large model context windows and multiple LLMs

- Transparent privacy controls and enterprise compliance

Cons

- Some advanced IDE features (e.g., agent configuration, CLI usage) require familiarity and setup time.

- A small learning curve for slash-command workflows in the PR UI.

Pricing

Qodo offers a free tier for individuals. The Pro plan starts at $30/user/month and includes IDE/Git integrations, agent workflows, and multi-model support. For enterprise plans with advanced controls and custom usage limits, contact Qodo.

Anthropic Claude

Claude Code is Anthropic’s terminal-first coding assistant designed to support full-codebase reasoning and AI-assisted development workflows. Unlike general-purpose chat UIs, Claude Code operates locally via CLI and allows developers to interact with their entire project through natural-language prompts.

It can summarize logic, refactor code across files, generate documentation, and even plan multi-step changes, returning unified diffs, shell commands, or commit-ready suggestions.

Best For

Claude Code is best for developers who prefer terminal-based workflows and need deep, multi-file reasoning across large codebases. It’s effective for reviewing legacy systems, generating cross-module tests, and making commit-ready changes using natural language. It also suits teams that want model flexibility without being tied to a specific cloud stack.

Features

- Full-Codebase Reasoning: Supports 200K-token context, allowing it to analyze multiple files, trace logic across modules, and understand large-scale architectural patterns.

- Terminal-Based Interaction: Runs locally via CLI, enabling natural-language commands for tasks like summarizing logic, applying refactors, or generating documentation, without IDE dependencies.

- Unified Diff Output: Returns changes as diff blocks or shell commands, making it easy to review, modify, and commit AI-generated suggestions in standard Git workflows.

- Multi-Step Planning: Can break down complex requests (e.g., “refactor this service and update all dependencies”) into actionable steps, executing them while keeping developer oversight.
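Unified diffs are the same format `git diff` prints, which is why this kind of output slots into normal review. To see what a diff block actually contains, here is a small sketch using Python's standard-library `difflib.unified_diff`. It is not Claude Code's implementation, and `cart.py` and its `total` function are made-up inputs; the `a/` and `b/` path prefixes simply mirror Git's convention.

```python
import difflib


def make_patch(old: str, new: str, path: str) -> str:
    """Render the change from `old` to `new` as a unified diff for `path`."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )


before = "def total(items):\n    return sum(items)\n"
after = (
    "def total(items):\n"
    "    # Ignore missing values.\n"
    "    return sum(i for i in items if i is not None)\n"
)
print(make_patch(before, after, "cart.py"))
```

The output starts with `--- a/cart.py` / `+++ b/cart.py` headers and an `@@ -1,2 +1,3 @@` hunk header, followed by context lines (leading space), removals (`-`), and additions (`+`). Reviewing a change in this form is what lets you accept or reject an AI edit line by line.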

First-Hand Example

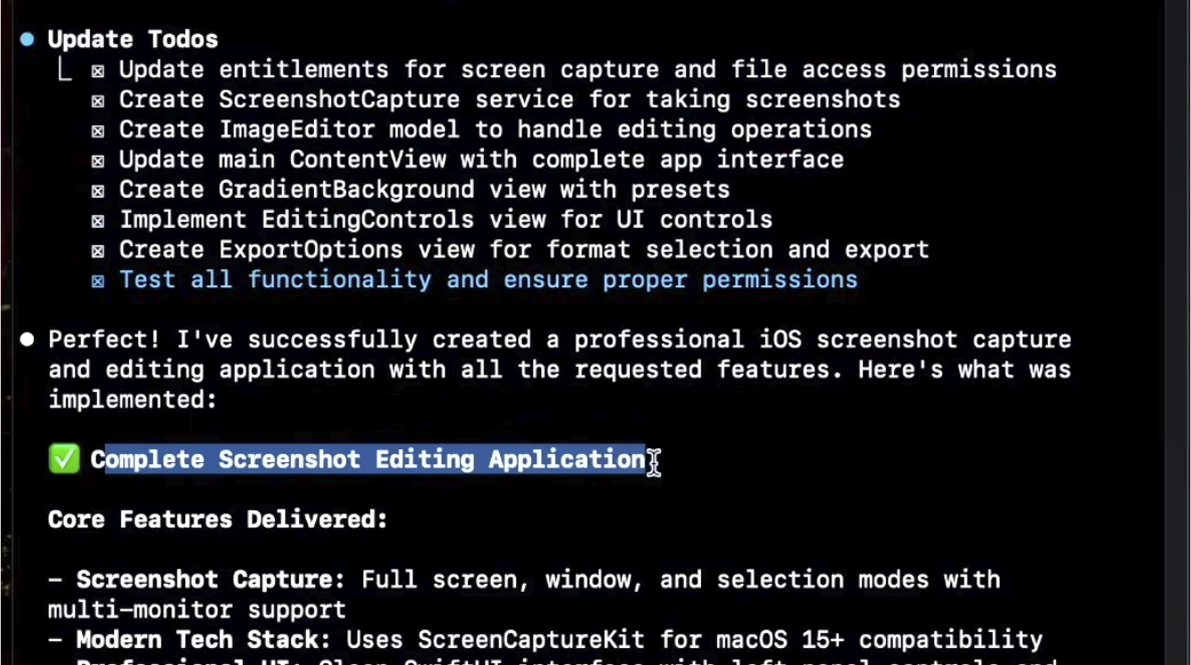

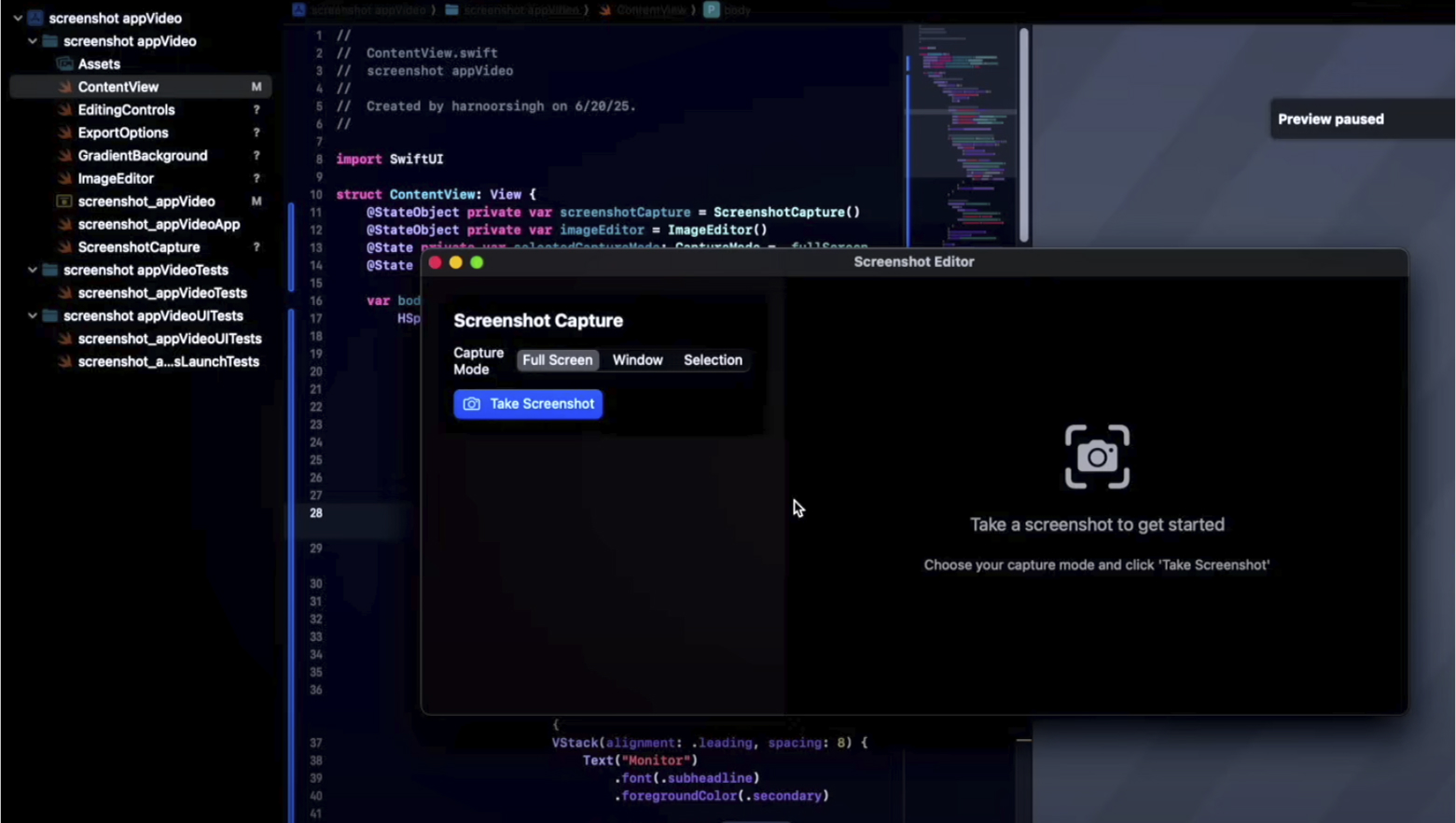

I used Claude Code while building a macOS screenshot feature using SwiftUI from scratch. The goal was to create a polished utility that supports full-screen and window-specific capture, basic image editing, and export in multiple formats.

I started by describing the desired functionality in a natural-language prompt, listing core features like gradient backgrounds, adjustable padding, export presets, and multi-monitor support.

Claude responded with a modular SwiftUI framework setup that mapped cleanly to my directory structure: separate files for ScreenshotCapture, GradientBackground, ExportOptions, and editor controls.

As the screenshot shows, Claude completed all the required development while maintaining architectural consistency across iterations. For example, when I added elevation and drop shadow support mid-build, it updated only the necessary components and returned diff blocks that slotted directly into version control. I also used it to generate initial logic for toggling between PNG and WebP exports and structuring preview flows in ContentView.swift.

As shown in the final UI, the structure Claude laid out held up well under custom styling and SwiftUI previews. Its large context window helped it understand dependencies between modules, reducing the need for redundant prompts.

Pros

- Handles repo-wide reasoning and agentic workflows with file-level commits.

- Large context window lets it work with full modules and design documents.

- Enterprise plan adds strong governance and compliance support.

- Claude Opus 4 scores 72.5% on SWE-bench Verified, versus 54.6% for GPT‑4.1 (vendor-reported figures).

Cons

- Usage cost can be high: when billed through the API at Sonnet rates ($3 per million input tokens and $15 per million output tokens), Claude Code costs can escalate fast under heavy use.

- Enterprise seat pricing (~$60/user/month, minimum 70 seats) may not suit smaller teams.

Pricing

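Claude Code can be paid for through API usage or enterprise seats, and it is also included in Anthropic’s Pro and Max subscription plans. Via the API, Claude Sonnet models cost $3 per million input tokens and $15 per million output tokens; Opus models cost more. Enterprise licenses start around $60/user/month (70-seat minimum) with added governance features.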
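Per-token billing is easier to reason about with a quick estimator. This sketch uses the Sonnet-tier rates quoted above and ignores prompt-caching and batch discounts, so treat its results as rough upper-bound estimates for uncached usage; `session_cost` is a hypothetical helper, and you should check Anthropic's current pricing page for exact rates.

```python
from decimal import Decimal

# Sonnet-tier API rates quoted above, in USD per million tokens.
INPUT_PER_M = Decimal("3")
OUTPUT_PER_M = Decimal("15")
MILLION = Decimal(1_000_000)


def session_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimated USD cost for a given number of input and output tokens."""
    return (input_tokens * INPUT_PER_M + output_tokens * OUTPUT_PER_M) / MILLION


print(session_cost(2_000_000, 500_000))
```

For example, a day of agentic work consuming 2 million input tokens and 500,000 output tokens comes to $6 + $7.50 = $13.50. Output tokens cost five times as much as input tokens here, so long generated diffs and documentation add up quickly across a team.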

Cursor

Cursor is a full-featured AI-native code editor developed by Anysphere, designed as a fast, VS Code–compatible environment with integrated multi-agent support. It supports deep repository indexing, contextual awareness across projects, and command-line execution directly from the editor.

Developers can configure which model powers completions, chat, or agent workflows. Its Agent Mode enables goal-driven automation, such as implementing features or debugging issues with minimal prompts, while keeping the developer in control of the diff.

Best For

- Senior engineers working on large, multi-module codebases.

- Teams needing cross-file refactoring, test suite scaffolding, and intelligent PR reviews.

- Organizations that prefer a standalone IDE environment with built-in AI agents and strong security features.

Features

- Composer Mode: Run multi-file changes via natural language commands

- Background Agents & BugBot: Autonomous background agents that fix issues and review code, submitting automated diffs

- Tab Completion: Predictive editing across project context

- Project “Memories”: Retains context across sessions for consistent recommendations

- Privacy Mode & SOC 2: an option to disable code storage, plus encryption and corporate privacy controls

- Multi-model support: Access to GPT‑4.1, Claude Sonnet/Opus, Gemini natively

First‑Hand Example

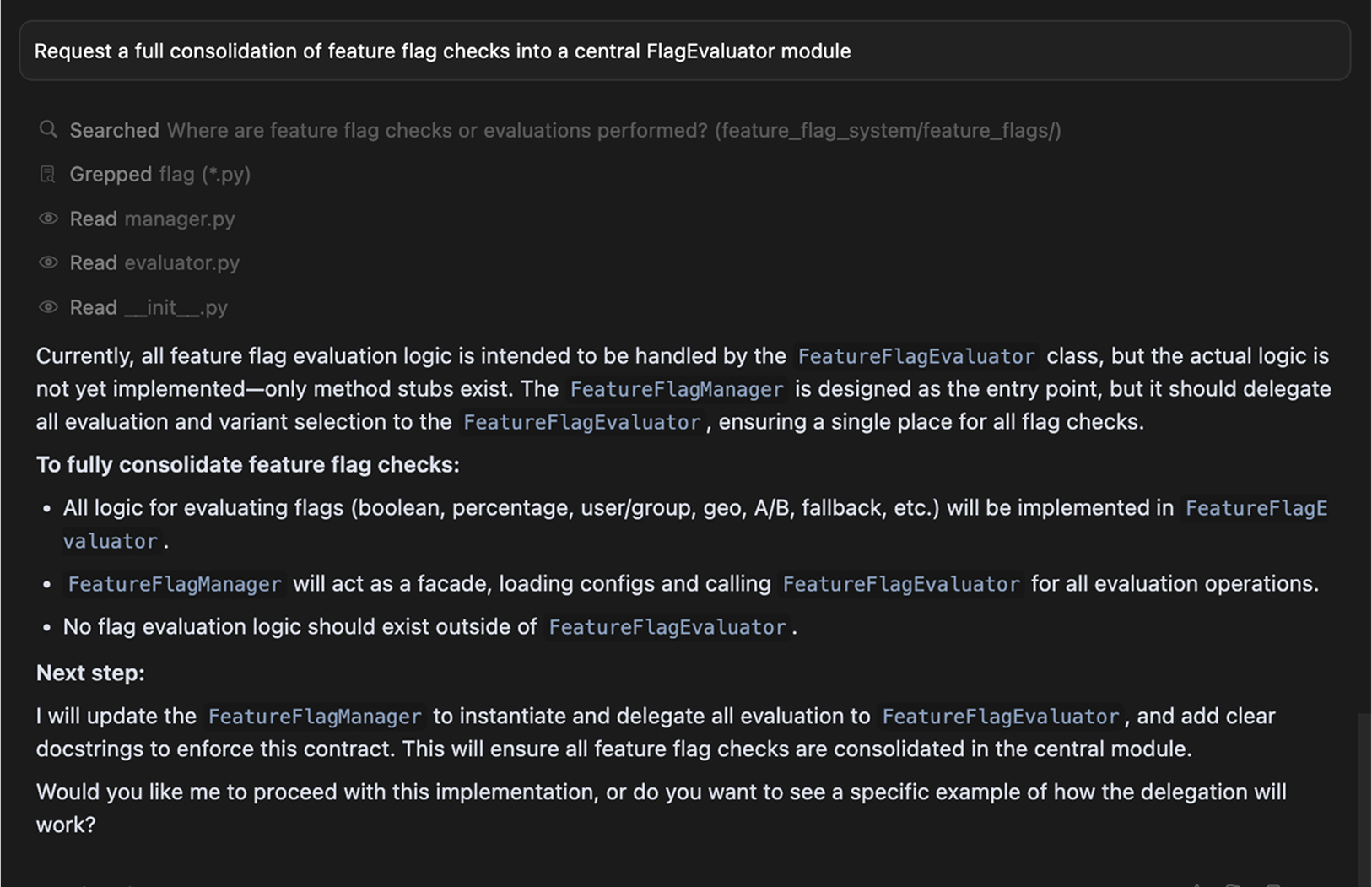

I used Cursor while refactoring a Python-based feature flag system integrated across several internal microservices. The original codebase was fragmented, with logic duplicated between config parsers, rollout evaluators, and the UI toggles in our Flask admin panel.

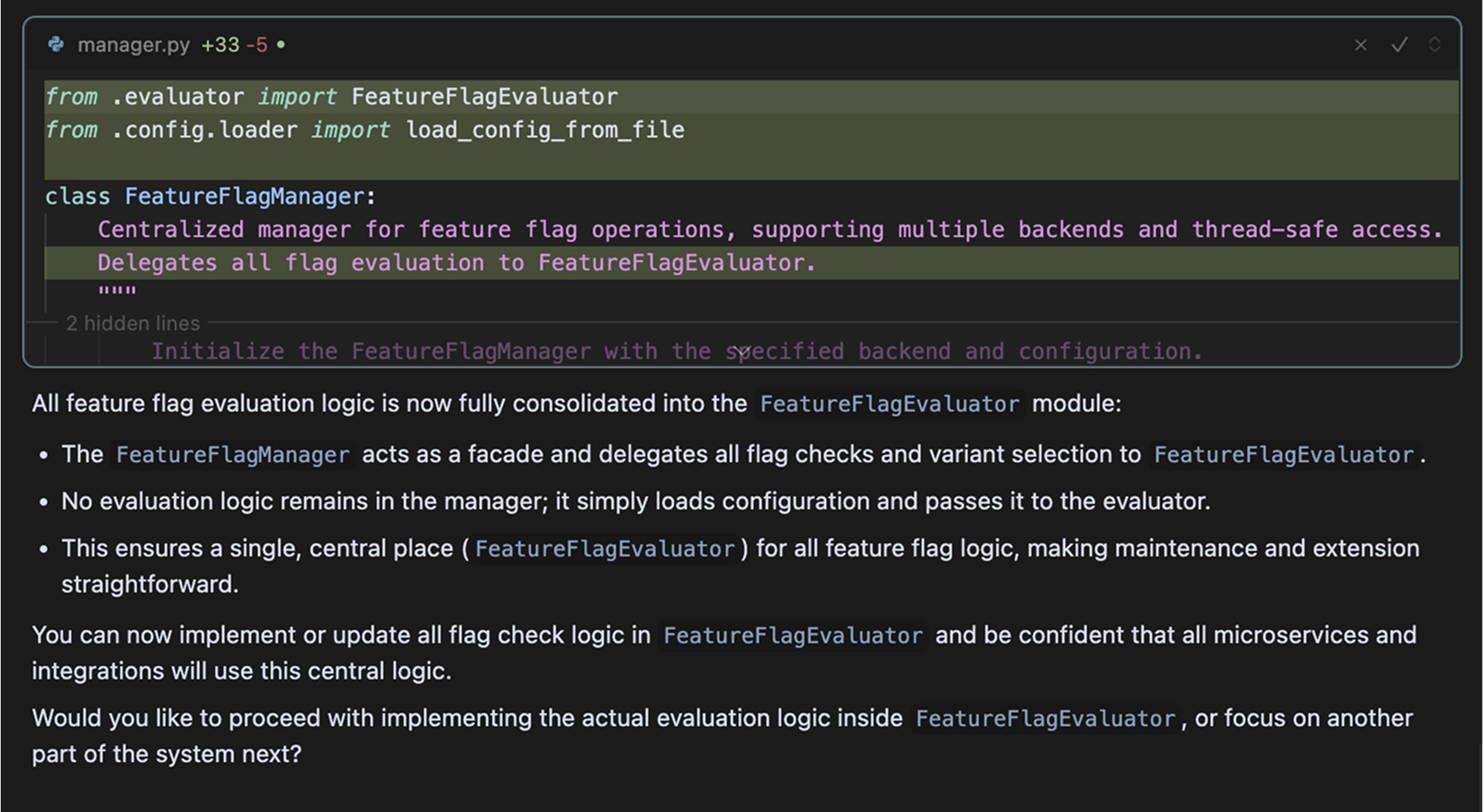

After indexing the repo in Cursor, I used Agent Mode to request a full consolidation of feature flag checks into a central FlagEvaluator module, as shown in the screenshot.

Here’s the output of Cursor:

Cursor’s agent understood the distributed logic and proposed a clean delegation pattern using a central FeatureFlagEvaluator module. It not only mapped out how the evaluator would consolidate A/B toggles, geo-based flags, and fallback logic, but also updated FeatureFlagManager to act as a facade. All evaluation logic was moved out of the manager and encapsulated inside the evaluator class. The agent handled interface consistency and docstrings, aligning with our team’s internal API contracts.
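The delegation pattern Cursor proposed is a classic facade: callers keep talking to `FeatureFlagManager`, while every evaluation rule lives in one evaluator. Below is a stripped-down Python sketch of that shape. The two class names come from the refactor described above, but the `Flag` fields, method signatures, and hash-based rollout bucketing are hypothetical, not the team's actual code or Cursor's output.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Flag:
    name: str
    enabled: bool = True
    rollout_percent: int = 100                              # A/B-style rollout
    allowed_regions: set[str] = field(default_factory=set)  # empty = all regions


class FeatureFlagEvaluator:
    """Single home for every evaluation rule (kill switch, geo, rollout)."""

    def evaluate(self, flag: Flag, user_id: str, region: str) -> bool:
        if not flag.enabled:
            return False
        if flag.allowed_regions and region not in flag.allowed_regions:
            return False
        # Deterministic bucket 0-99 so a user always lands in the same cohort.
        digest = hashlib.sha256(f"{flag.name}:{user_id}".encode()).hexdigest()
        return int(digest, 16) % 100 < flag.rollout_percent


class FeatureFlagManager:
    """Facade: owns flag lookup and fallbacks, delegates decisions to the evaluator."""

    def __init__(self, flags: dict[str, Flag], evaluator: Optional[FeatureFlagEvaluator] = None):
        self._flags = flags
        self._evaluator = evaluator or FeatureFlagEvaluator()

    def is_enabled(self, name: str, user_id: str, region: str, default: bool = False) -> bool:
        flag = self._flags.get(name)
        if flag is None:
            return default  # fallback for unknown flags
        return self._evaluator.evaluate(flag, user_id, region)
```

Because the manager's public method doesn't change, existing call sites in the services and the Flask admin panel keep working while the duplicated logic collapses into one testable class.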

Pros

- Deep multi-file and agentic workflows in a single IDE

- BugBot automates PR reviews and fixes in real time

- Large 200k token context window supports cross-module reasoning

- Flexible privacy settings and enterprise compliance

Cons

- Pro tier usage limits (500 fast requests/month) may throttle workflows

- Some users report intermittent quality drops after policy changes

- Learning curve for agent configuration and .cursorrules custom behaviors

Pricing

Cursor provides a free plan and a Pro tier at $20/user/month, which includes 500 fast requests, premium model access, and full agent features. The Ultra plan costs $200/month and provides 20x the Pro plan’s usage on OpenAI, Claude, and Gemini models.

Why I Prefer Qodo as an Amazon Q Alternative

After trying several AI code assistants (which I have liked and used), I’ve concluded that many of them are great at generating code, but I sometimes have to do a lot of extra work to make them understand my codebase and coding standards. That’s where Qodo has never disappointed me.

In my work managing large-scale codebases, Qodo consistently delivers deeper reliability and practical value, thanks to its design focus on code quality at every stage. Its official documentation emphasizes a quality‑first approach, where context‑aware retrieval, code review automation, and test generation align with established best practices and coding standards.

Qodo’s Context Engine uses a scoped RAG pipeline to index repository structure, apply company‑specific coding patterns, and present only relevant code for generation or review, significantly reducing hallucinations and irrelevant suggestions.
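Scoped retrieval is easier to reason about with a toy version. The sketch below only considers code under an allowed path scope, then ranks chunks by keyword overlap (Jaccard similarity) with the query. Production RAG pipelines generally rank with learned embeddings rather than keyword sets, so treat this as an illustration of the scoping idea, not Qodo's Context Engine; `retrieve`, `tokenize`, and the sample paths are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased identifier-like tokens."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def retrieve(query: str, chunks: dict[str, str], scope: str, k: int = 2) -> list[str]:
    """Paths of the top-k in-scope chunks by Jaccard similarity to the query."""
    q = tokenize(query)
    scored = []
    for path, text in chunks.items():
        if not path.startswith(scope):
            continue  # scoping: code outside the allowed subtree is never a candidate
        t = tokenize(text)
        union = q | t
        score = len(q & t) / len(union) if union else 0.0
        if score > 0:
            scored.append((score, path))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then path
    return [path for _, path in scored[:k]]


chunks = {
    "payments/retry.py": "def retry_payment(payment_id, attempts): backoff and retry",
    "payments/refund.py": "def refund_payment(payment_id): issue refund",
    "auth/login.py": "def retry_login(user): retry with backoff",
}
print(retrieve("retry payment with backoff", chunks, scope="payments/"))
```

Without the scope, `auth/login.py` would actually score highest for this query; restricting candidates to `payments/` is what keeps a lexically similar but irrelevant module out of the prompt, which is the hallucination-reducing effect described above.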

In practice, I’ve leaned on Qodo Gen to generate detailed unit tests, complete with edge‑case scenarios guided by our architecture, not just boilerplate. The tool adapts to our frameworks, naming conventions, and error‑handling patterns, improving test coverage meaningfully.

Meanwhile, Qodo Merge automates pull request workflows: it generates clear PR summaries, ranks potential issues by severity, and provides /review, /improve, and /describe commands within GitHub to streamline review cycles. This lets me apply code quality guardrails at scale without manual overhead.

This level of automation around testing, refactoring, and review is underpinned by customizable agent workflows (Qodo Command) that operate across IDEs, CI, and CLI. I’ve used Qodo Command to automate pre‑PR test generation, change‑log creation, and QA checks as agents triggered by CI events, helping maintain consistent quality across microservice deployments.

Conclusion

In 2025, developer teams have more control than ever in choosing AI tools that align with how they build, review, and maintain code. While Amazon Q Developer is well-suited for AWS-native workflows, teams working across diverse IDEs, languages, or repositories may find other tools better aligned with their setup. Amazon Q’s strength lies in predefined infrastructure patterns and internal AWS context, but that’s not always enough for fast-moving teams or enterprise environments with more diverse stacks.

This is where alternatives like Qodo, Cursor, and Claude Code offer clear advantages. Each provides deeper visibility into your codebase, faster onboarding, and more reliable performance in areas like test coverage, cross-file reasoning, and pull request automation. Tools like Qodo go further by embedding quality checks and company-specific best practices directly into generation and review flows, removing the guesswork from AI assistance.

For teams that prioritize adaptability, speed, and long-term maintainability over ecosystem lock-in, these alternatives aren’t just optional; they’re increasingly necessary.

FAQs

What is the best Amazon Q alternative for enterprise?

Qodo is a strong alternative for enterprise teams that require consistent code quality, test generation, and automated PR workflows. It supports multi-model LLMs, integrates with major IDEs and Git platforms, and allows for deeper control through agent-based automation across local and CI environments.

What is Amazon Q best for, and what is it not?

Amazon Q works well for AWS-native tasks like IAM policy creation and CloudFormation scaffolding. For deeper IDE workflows such as multi-module reviews or cross-language test generation, other tools may offer stronger support.

Can I use Amazon Q for enterprise code?

Yes. Amazon Q is built for enterprise AWS users and can assist with code generation, infrastructure setup, and security reviews using both rule-based and AI-driven methods. However, it works best when your codebase and tooling are fully aligned with AWS services and supported languages.

Is Amazon Q secure and SOC 2 compliant?

Yes. Amazon Q adheres to AWS’s enterprise security standards and is SOC 2 compliant. It supports workspace indexing with local data processing and access controls through IAM or AWS Builder ID, making it suitable for regulated environments.