Best Python Automation Tools for Testing

TL;DR:

- Pytest is the go-to framework for unit, functional, and API testing. It’s flexible, has a strong plugin ecosystem, and integrates smoothly with CI/CD pipelines.

- Playwright and Selenium WebDriver dominate web testing. Playwright is modern, fast, and stable with auto-waits and parallel execution, while Selenium offers the widest browser support and ecosystem for legacy and cross-platform needs.

- Robot Framework and Behave focus on collaboration-friendly testing. Robot uses a keyword-driven approach for acceptance and ATDD, while Behave uses Gherkin-based BDD for readable, business-oriented tests.

- Appium is the best choice for mobile app automation, supporting iOS, Android, hybrid, and mobile web apps with easy CI/CD integration.

- Locust handles load and performance testing at scale, while Faker generates realistic test data quickly for APIs, databases, and UI testing.

- Qodo brings AI-powered test generation, mocking, and smart regression management, making it ideal for secure, large-scale, or regulated environments.

Python is one of the most practical languages for automation testing. Its clear syntax, fast development cycles, and a mature ecosystem of libraries make it a natural fit for QA teams. In web and app development, Python frameworks have also evolved rapidly: async-first tools like FastAPI push API performance into the millisecond range, Django handles enterprise-scale workloads, Flask thrives in microservices, and cross-platform frameworks like Kivy and BeeWare make multi-device deployment more accessible.

Recently, in a r/QualityAssurance discussion about API testing tools beyond RestAssured, developers compared how they structure Python tests in real-world projects. Some favored Pytest for fixtures and plugins, others built lightweight setups with unittest and requests. Their priorities were consistent:

- Quick HTTP status checks for feedback in CI

- Schema validation against OpenAPI/Swagger specs

- Exact value assertions in JSON/XML payloads

- Keeping execution times low for large test suites
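To make those priorities concrete, here is a minimal sketch using only the standard library. The `FakeOrdersAPI` handler, the `/orders/42` path, and the payload fields are all invented for illustration; a real suite would point at your own service and would more likely validate against the OpenAPI document than against a hand-written type map.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeOrdersAPI(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the service under test."""

    def do_GET(self):
        body = json.dumps({"id": 42, "status": "shipped", "total": 19.99}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def fetch_order(base_url):
    """Return (status_code, parsed_json) for the hypothetical /orders/42 endpoint."""
    # An empty ProxyHandler keeps the request local even if proxy variables are set.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"{base_url}/orders/42", timeout=5) as response:
        return response.status, json.load(response)


def check_order_contract(payload):
    """Lightweight shape check standing in for full schema validation."""
    expected_types = {"id": int, "status": str, "total": float}
    return all(isinstance(payload.get(key), kind) for key, kind in expected_types.items())


def run_api_checks():
    """Start the fake API on a free port, call it once, and shut it down."""
    server = HTTPServer(("127.0.0.1", 0), FakeOrdersAPI)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return fetch_order(f"http://127.0.0.1:{server.server_port}")
    finally:
        server.shutdown()
        server.server_close()
```

A test would then assert on the status code first (the fast feedback), then on the payload shape, then on exact values such as `payload["status"] == "shipped"`.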

The takeaway: there’s no single “best” tool; what works depends on your app’s architecture, the team’s workflow, and how you balance coverage with speed. This guide reviews the 11 most useful Python automation tools for testing in 2025, grouped by category so you can match each one to your project’s needs, whether you’re building APIs, mobile apps, or full-scale web platforms.

Before diving into the specifics of the tools, let’s first explore the core Python Testing Frameworks, which serve as the foundation for automation testing in Python. Understanding these frameworks will help in choosing the right tools and strategies for efficient test automation in your projects.

What is a Python Testing Framework?

A Python testing framework is a structured set of tools that provides the building blocks for writing, organizing, and executing automated tests in Python. These frameworks help ensure that the code behaves as expected, making it easier to detect errors early in the development process.

Key Concepts of a Python Testing Framework

- Test Cases: A test case is the smallest unit of testing. In unittest, it is a method on a class that inherits from unittest.TestCase; in Pytest, a plain function whose name starts with test is enough. Each test checks one specific behavior or unit of code.

- Assertions: Assertions are used to check if the output of a function or operation matches the expected result. Common assertions include assertEqual(), assertTrue(), and assertRaises(). If the assertion fails, the test is considered failed.

- Fixtures: Fixtures are methods that set up and clean up the environment for tests. They can be used to prepare test data, initialize objects, or perform other necessary setup before running a test. The setUp() and tearDown() methods in unittest are typical examples of fixtures.

- Test Runners: The test runner is responsible for executing the test cases. It finds the test methods, runs them, and provides feedback, including which tests passed or failed, and the errors or failures encountered.
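The four concepts above fit into one short unittest module. This is a sketch rather than a recommended layout: `ShoppingCart` is a made-up class under test, and the runner is invoked programmatically only so the example is self-contained.

```python
import io
import unittest


class ShoppingCart:
    """Hypothetical class under test."""

    def __init__(self):
        self.items = {}

    def add(self, name, price):
        if price < 0:
            raise ValueError("price must be non-negative")
        self.items[name] = price

    def total(self):
        return sum(self.items.values())


class ShoppingCartTests(unittest.TestCase):
    def setUp(self):
        # Fixture: every test starts with a fresh cart.
        self.cart = ShoppingCart()

    def tearDown(self):
        # Fixture cleanup: drop whatever setUp created.
        self.cart = None

    def test_total_sums_item_prices(self):
        self.cart.add("pen", 2)
        self.cart.add("notebook", 5)
        self.assertEqual(self.cart.total(), 7)

    def test_new_cart_is_empty(self):
        self.assertTrue(self.cart.total() == 0)

    def test_negative_price_is_rejected(self):
        with self.assertRaises(ValueError):
            self.cart.add("refund", -1)


def run_suite():
    """Test runner: collect the test methods, execute them, return the result."""
    suite = unittest.TestLoader().loadTestsFromTestCase(ShoppingCartTests)
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=2)
    return runner.run(suite)
```

In day-to-day use you would skip `run_suite()` and let `python -m unittest` (or Pytest, which also runs unittest.TestCase classes) discover and execute the tests.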

How Python Testing Frameworks Organize and Automate the Testing Process

Python testing frameworks provide a structured, automated approach to testing that helps maintain clean, efficient test code. These frameworks enable developers to focus on writing high-quality, meaningful tests while automating repetitive tasks such as test discovery, execution, and reporting.

By organizing test code into modular, easy-to-manage test cases, frameworks make it easier to scale and maintain tests, even as the application grows in complexity. They also provide automation of the test execution process, ensuring that tests can be run repeatedly with minimal manual effort.

Frameworks like pytest and unittest also generate detailed reports, which are invaluable for debugging and improving code quality. This automation allows teams to integrate tests into their development pipeline seamlessly, reducing the likelihood of introducing bugs into production and ensuring faster delivery cycles.

Python Automation Frameworks: Example, Libraries, and Overview

Testing frameworks are foundational to validating individual components of an application, but Python automation frameworks extend that functionality by automating entire workflows. These frameworks integrate testing libraries with additional tools to automate tasks such as data processing, web scraping, or system-level operations. They enable you to go beyond just validating code correctness to automating larger parts of your development and deployment pipeline.

Python Automation Framework Example

A Python automation framework integrates various libraries and tools to automate repetitive tasks like testing or data processing. Here’s an example using Pytest, one of the most popular Python testing frameworks.

Example: Pytest Automation Framework

# test_operations.py
import pytest


# Fixture to set up test data
@pytest.fixture
def setup_data():
    data = {'a': 2, 'b': 3}
    return data


# Simple test case to check addition
def test_addition(setup_data):
    result = setup_data['a'] + setup_data['b']
    assert result == 5


# Simple test case to check subtraction
def test_subtraction(setup_data):
    result = setup_data['b'] - setup_data['a']
    assert result == 1

Breakdown of the Example

- Fixtures: The setup_data fixture prepares reusable test data, ensuring the tests remain isolated and independent.

- Test Cases: test_addition and test_subtraction are simple examples that use assertions to verify the correctness of operations.

- Automatic Test Discovery: Pytest automatically finds and runs tests based on naming conventions (by default, files named test_*.py or *_test.py and functions whose names start with test), so new tests are picked up without any registration step.

This basic framework is highly extendable. As your application grows, you can introduce more complex fixtures, integrate other tools, and scale your tests to accommodate a broader range of use cases.

Python Libraries for Automation

Python provides a rich ecosystem of libraries that extend the capabilities of automation frameworks. These libraries enable a wide range of automation tasks, from system administration to web scraping and performance testing. Libraries can either be part of the Python Standard Library or third-party libraries available via PyPI (Python Package Index).

Python Standard Library for Automation

The Python Standard Library comes bundled with Python, providing a collection of modules that handle common automation tasks without needing external installations.

- os and shutil: For automating file and folder management, such as creating, moving, or deleting files.

- subprocess: Used to run system commands and external programs from a script.

- datetime and time: For working with timestamps, computing intervals, and adding delays in time-based tasks.

- configparser: Handles configuration files (typically in INI format).

- email and smtplib: Used to automate sending emails, including those with attachments.

- csv and json: Useful for automating data input/output tasks involving CSV and JSON formats.
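As a rough illustration of how several of these modules combine, the sketch below writes a CSV report and a JSON summary, runs an external command, and moves the files into an archive folder. The folder names, file names, and row fields are invented for the example, and pathlib is used in place of os for path handling.

```python
import csv
import json
import shutil
import subprocess
import sys
import tempfile
from pathlib import Path


def archive_report(rows, workdir):
    """Write a report, run an external command, and archive the output files."""
    workdir = Path(workdir)
    inbox = workdir / "inbox"
    archive = workdir / "archive"
    inbox.mkdir()
    archive.mkdir()

    # csv: write the raw rows.
    with (inbox / "report.csv").open("w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=["name", "status"])
        writer.writeheader()
        writer.writerows(rows)

    # json: write a small summary next to it.
    summary = {"total": len(rows), "failed": sum(row["status"] == "fail" for row in rows)}
    (inbox / "summary.json").write_text(json.dumps(summary))

    # subprocess: run an external program (here, the current Python interpreter).
    completed = subprocess.run(
        [sys.executable, "-c", "print('report ready')"],
        capture_output=True,
        text=True,
        check=True,
    )

    # shutil: move everything from the inbox into the archive.
    for path in list(inbox.iterdir()):
        shutil.move(str(path), str(archive / path.name))

    archived = sorted(path.name for path in archive.iterdir())
    return summary, completed.stdout.strip(), archived


def run_demo():
    """Run the workflow in a throwaway directory with made-up rows."""
    rows = [
        {"name": "login", "status": "pass"},
        {"name": "checkout", "status": "fail"},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        return archive_report(rows, tmp)
```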

Popular Third-Party Automation Libraries

For more specialized automation tasks, third-party libraries from PyPI provide powerful tools:

GUI and Desktop Automation

- PyAutoGUI: Automates mouse and keyboard actions to control GUI applications, ideal for apps without APIs.

- Pywinauto: A library for automating GUI tasks on Windows applications.

Web Automation and Testing

- Selenium: A widely used tool for automating web browsers. It’s often used for web scraping, automated testing, and repetitive browser tasks.

- Playwright: A modern open-source framework by Microsoft for web testing and browser automation.

Data Processing and Analysis

- Pandas: A powerful data manipulation and analysis library that’s essential for automating data processing tasks.

- Requests: A simple HTTP library used to interact with APIs and web services.

- BeautifulSoup: A library for parsing HTML and XML files, commonly used for web scraping tasks.

Network Automation

- Paramiko: A library for automating SSHv2 connections, often used for remote server automation and file transfers.

- Netmiko: Built on Paramiko, it simplifies automation tasks for network devices like routers and switches.

Scheduling and Monitoring

- schedule: A lightweight library for scheduling tasks to run at specific times or intervals.

- Watchdog: Monitors file system events and triggers automated actions when changes are detected.

Advanced Workflow Automation

- Celery: A robust distributed task queue system for managing asynchronous workflows, commonly used in large web applications.

- Invoke: Organizes shell-oriented tasks and simplifies the creation of command-line tools for automation.

Python Automation Framework Overview

A Python automation framework combines several components to automate testing and repetitive tasks across the software development lifecycle. Here’s how a typical framework is structured:

Core Components:

- Test Runner: A test runner (e.g., Pytest or unittest) is responsible for discovering and executing tests, providing feedback on test results.

- Test Cases: Test cases are defined as functions or as methods within classes, each focusing on a specific unit of functionality.

- Fixtures: These are reusable functions that prepare data or environments needed for tests. This ensures consistency and isolation for each test case.

- CI/CD Integration: Python automation frameworks integrate seamlessly with CI/CD systems (like Jenkins, CircleCI, or GitHub Actions) to trigger automated testing with every code change, ensuring continuous validation.

- Reporting: Automation frameworks generate detailed reports on test results, including which tests passed and which failed. Tools like pytest-html provide user-friendly HTML reports.

Scalability and Maintainability

Python automation frameworks are scalable and easy to maintain. With reusable test cases, fixtures, and integration into CI/CD pipelines, these frameworks are designed to handle growing codebases efficiently. They ensure that as your application expands, testing remains efficient, manageable, and integrated seamlessly into your development lifecycle.

Now, let’s go through how I selected the best Python automation tools for my projects. By understanding the criteria I used, you’ll gain insight into what to consider when choosing tools for your own automation framework, ensuring that they fit your specific needs and project requirements.

How I Selected the Best Python Automation Tools in this List

As a Python developer who regularly uses AI coding tools and automation frameworks, I carefully selected these testing tools based on my daily development needs.

Several of these tools integrate with AI coding assistants, making test creation and maintenance much more efficient. Here’s what guided my choices:

- Python Ecosystem Integration: Each tool I chose integrates well with Python’s testing ecosystem and common development environments.

- Automation Capabilities: I looked for tools that could effectively automate different types of testing – from unit tests to end-to-end testing.

- Active Community: Every tool I’ve included has strong community support within the Python ecosystem.

- Testing Scope: I chose tools that cover different testing needs – from simple unit tests to complex end-to-end automation.

These criteria helped me select tools that actually make Python testing easier and more efficient. Whether you’re automating unit tests or setting up end-to-end testing, these selections reflect what works in real Python development.

10 Best Python Automation Tools for Testing – 2025 List

Quick Comparison Table: Best Python Automation Tools 2025

| Tool | Primary Use Case | Pricing | Best Fit For |
| --- | --- | --- | --- |
| Qodo | AI-powered test automation | Free + Paid ($19/user/mo) | AI-driven testing & smart automation |
| Pytest | Unit, functional, & integration tests | Free | Flexible Python-based testing frameworks |
| Selenium WebDriver | Web app automation | Free | Browser automation & cross-platform testing |
| Robot Framework | Acceptance testing & ATDD | Free | Collaboration-friendly test cases |
| Playwright | End-to-end web automation | Free | Modern, high-speed web automation |
| Behave | BDD testing | Free | BDD-driven projects with business focus |
| TestComplete | GUI & functional testing | Starts at $4108/year | Enterprise-level GUI & regression testing |
| PyAutoGUI | Desktop GUI automation | Free + Paid | Lightweight desktop GUI automation |
| Locust | Load & performance testing | Free | High-scale performance testing |
| Faker | Test data generation | Free | Generating mock data for testing |

Now let’s get into the complete breakdown of each tool, starting with its core use case, key strengths, limitations, and where it fits best in a Python testing workflow.

1. Qodo

I’ve been using Qodo, an AI-powered testing tool that automates test generation and test management throughout the software development lifecycle. It integrates directly into my IDE and provides context-aware test suggestions that fit my development workflow.

Pros

- Intelligent test generation: Automatically creates comprehensive test suites based on code changes and commits.

- Context-aware testing: Analyzes code relationships and dependencies for more accurate test cases.

- Built-in mock creation and management: Reduces the need for boilerplate code.

- Automated identification of testing gaps and redundancies: Ensures more thorough testing coverage.

- Real-time test execution and failure fixes: Integrated directly within the IDE.

- Smart regression testing: Automatically identifies affected code areas to optimize testing.

- Seamless integration: Works smoothly with existing development workflows and testing frameworks.

Cons

- Premium features: Advanced automation and extensive code analysis are locked behind a paid subscription, which could be costly for smaller teams or individual developers.

Hands-on:

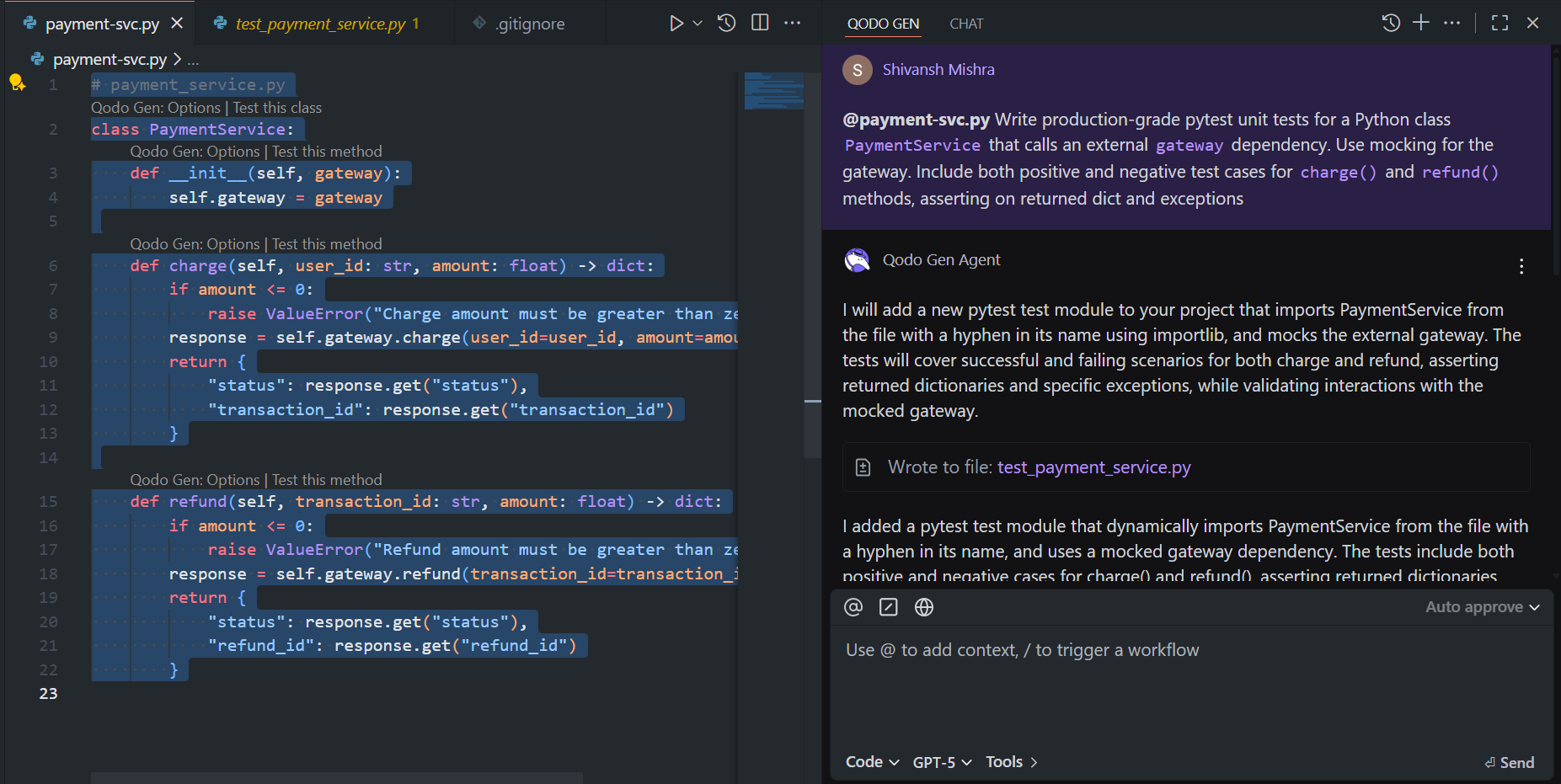

I prompted Qodo Gen Chat to generate production-grade pytest unit tests for the PaymentService class by using the following request:

@payment-svc.py Write production-grade pytest unit tests for a Python class PaymentService that calls an external gateway dependency. Use mocking for the gateway. Include both positive and negative test cases for charge() and refund() methods, asserting on returned dict and exceptions.

Qodo immediately analyzed the payment-svc.py file, detected the external gateway dependency, and generated a comprehensive test module named test_payment_service.py. The snapshot above shows the Qodo interface where the prompt was executed, alongside the generated test module.

Here’s how Qodo presented the results as individual test cases. For the charge() method, Qodo created three well-structured scenarios to ensure full coverage.

In the successful payment flow, Qodo mocked gateway.charge() to return {"status": "success", "transaction_id": "tx_123"}. The test asserted that the returned dictionary contains only the expected keys, status and transaction_id, ignoring any unrelated fields. It also verified that gateway.charge() was called exactly once with the correct parameters: user_id="user_1" and amount=49.99.

For invalid amount handling, Qodo generated parameterized tests for amount = [0, -0.01, -100] to validate edge cases. These tests asserted that a ValueError is raised with the precise message "Charge amount must be greater than zero" and confirmed that the gateway mock was never called when invalid inputs were provided.

In the gateway failure simulation, Qodo tested error-handling behavior by mocking gateway.charge() to raise a RuntimeError("gateway down"). The test confirmed that the exception propagated through the PaymentService unchanged, so upstream failures surface to the caller instead of being swallowed.

For the refund() method, Qodo followed the same structured approach with three focused scenarios. In the successful refund flow, gateway.refund() was mocked to return {"status": "success", "refund_id": "rf_456"}, and the test asserted that the service returned only the status and refund_id fields. It also verified that gateway.refund() was called with the correct arguments: transaction_id="tx_999" and amount=15.50.

For invalid refund amounts, Qodo generated parameterized tests for amount = [0, -0.01, -250]. These tests asserted that a ValueError with the message "Refund amount must be greater than zero" is raised and confirmed that the gateway method was not invoked for invalid inputs.

Lastly, in the refund failure simulation, Qodo mocked gateway.refund() to raise a RuntimeError("refund failed") and verified that the exception propagated correctly through the PaymentService.
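The generated module itself isn't reproduced here, so the following is my own reconstruction of what tests matching that description could look like, not Qodo's actual output. The `PaymentService` implementation is a guess at the shape of payment-svc.py (which is not shown), and the extra `debug` field in the mocked response is invented to exercise the "only expected keys" assertion.

```python
from unittest.mock import MagicMock

import pytest


class PaymentService:
    """Hypothetical service; the real payment-svc.py is not shown in this article."""

    def __init__(self, gateway):
        self.gateway = gateway

    def charge(self, user_id, amount):
        if amount <= 0:
            raise ValueError("Charge amount must be greater than zero")
        response = self.gateway.charge(user_id=user_id, amount=amount)
        return {"status": response["status"], "transaction_id": response["transaction_id"]}

    def refund(self, transaction_id, amount):
        if amount <= 0:
            raise ValueError("Refund amount must be greater than zero")
        response = self.gateway.refund(transaction_id=transaction_id, amount=amount)
        return {"status": response["status"], "refund_id": response["refund_id"]}


def test_charge_success_returns_expected_keys():
    gateway = MagicMock()
    gateway.charge.return_value = {
        "status": "success",
        "transaction_id": "tx_123",
        "debug": "unrelated",  # invented extra field that the service should drop
    }
    result = PaymentService(gateway).charge("user_1", 49.99)
    assert result == {"status": "success", "transaction_id": "tx_123"}
    gateway.charge.assert_called_once_with(user_id="user_1", amount=49.99)


@pytest.mark.parametrize("amount", [0, -0.01, -100])
def test_charge_rejects_non_positive_amount(amount):
    gateway = MagicMock()
    with pytest.raises(ValueError, match="Charge amount must be greater than zero"):
        PaymentService(gateway).charge("user_1", amount)
    gateway.charge.assert_not_called()


def test_charge_propagates_gateway_failure():
    gateway = MagicMock()
    gateway.charge.side_effect = RuntimeError("gateway down")
    with pytest.raises(RuntimeError, match="gateway down"):
        PaymentService(gateway).charge("user_1", 49.99)


def test_refund_success_returns_expected_keys():
    gateway = MagicMock()
    gateway.refund.return_value = {"status": "success", "refund_id": "rf_456"}
    result = PaymentService(gateway).refund("tx_999", 15.50)
    assert result == {"status": "success", "refund_id": "rf_456"}
    gateway.refund.assert_called_once_with(transaction_id="tx_999", amount=15.50)


@pytest.mark.parametrize("amount", [0, -0.01, -250])
def test_refund_rejects_non_positive_amount(amount):
    gateway = MagicMock()
    with pytest.raises(ValueError, match="Refund amount must be greater than zero"):
        PaymentService(gateway).refund("tx_999", amount)
    gateway.refund.assert_not_called()


def test_refund_propagates_gateway_failure():
    gateway = MagicMock()
    gateway.refund.side_effect = RuntimeError("refund failed")
    with pytest.raises(RuntimeError, match="refund failed"):
        PaymentService(gateway).refund("tx_999", 15.50)
```

Whether the gateway is called with keyword or positional arguments is an assumption on my part; the call assertions would need to match whichever style the real service uses.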

Pricing

There’s a free plan with basic features and a team plan that costs $19 per user per month. The team plan is worth the cost because it saves a lot of time during development and code reviews.

2. Pytest

Pytest is a flexible and extensible third-party testing framework for Python. It simplifies test case creation and organization, allowing developers to focus on writing clean, readable test code. Pytest supports various types of tests, including unit tests, functional tests, and integration tests.

Pros

- Simplicity: Pytest’s simple syntax and intuitive test discovery make it easy to get started with testing.

- Extensibility: Pytest offers a rich plugin ecosystem – a collection of external modules or extensions that you can integrate with it. These plugins can add new features, modify existing behavior, or integrate Pytest with other tools or frameworks. The plugin architecture allows developers to customize the testing process according to their specific requirements without modifying the core codebase.

- Some examples of plugins in the Pytest ecosystem include pytest-cov (generates coverage reports, showing which parts of the code are covered by your tests), pytest-xdist (enables parallel execution of tests), pytest-html (generates HTML reports for test results, providing a more visually appealing and user-friendly representation), and pytest-mock (provides utilities for working with mock objects in tests).

- Fixture mechanism: Fixtures are functions that provide data or set up resources needed by your tests. They allow you to initialize objects, set up databases, configure environment settings, or perform any other setup actions before your tests run. Fixtures can also clean up after the test has been executed, ensuring that resources are properly released.

Cons

- Learning curve: While Pytest is easy to use for basic testing scenarios, mastering its advanced features may require some learning.

- Minimal assertion helpers: Pytest relies on plain assert statements with detailed failure introspection rather than a large library of assertion methods, so specialized checks (for example, schema or deep-structure comparisons) often depend on external libraries.

- Lack of built-in parallel test execution: Pytest does not natively support parallel test execution, although this functionality can be achieved using plugins.

Hands-on:

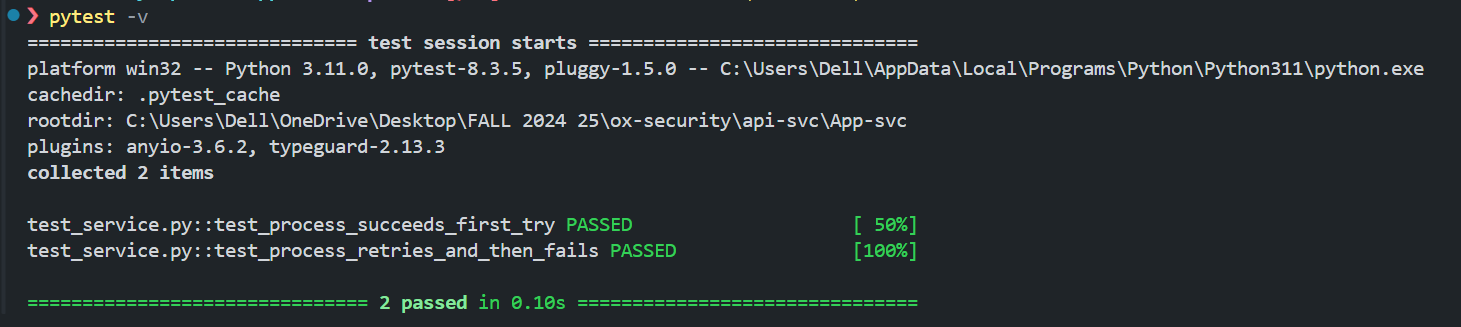

I used Pytest to test the PaymentRetryService implementation, which handles payment processing with retry logic when a payment gateway fails. The goal was to validate both successful and failure scenarios by mocking the external gateway dependency and testing the retry mechanism.

The service.py file contains the PaymentRetryService class, which attempts to charge a user up to a configured number of retries. If the payment succeeds, it returns a response containing the transaction details. If all retries fail, it raises a RuntimeError.

Here’s how Pytest was used to validate the behavior through two focused test cases:

For the successful payment scenario, a mock gateway was created using MagicMock. The mock was configured to return a successful response:

{"status": "success", "transaction_id": "abc123"}.

The test asserted that:

- gateway.charge() was called exactly once with the correct parameters user_id="user-1" and amount=100.0.

- The result contained the expected status and transaction_id values.

This validated that no retries were triggered when the first attempt succeeded.

In the retry and failure scenario, the mock gateway was configured to raise an exception on every call:

mock_gateway.charge.side_effect = Exception("network error").

The test verified that:

- The service retried charging the user up to the configured max_retries=2.

- After exhausting retries, a RuntimeError was raised with the message Max retries exceeded.

- gateway.charge() was called exactly two times, matching the retry configuration.
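Since service.py only appears in the screenshot, here is a minimal sketch of a service and the two tests that would behave as described. The constructor signature, the keyword-argument call style, and the bare retry loop (no logging or backoff) are my assumptions, not the original implementation.

```python
from unittest.mock import MagicMock

import pytest


class PaymentRetryService:
    """Hypothetical reconstruction; the original service.py is not reproduced here."""

    def __init__(self, gateway, max_retries=3):
        self.gateway = gateway
        self.max_retries = max_retries

    def charge(self, user_id, amount):
        for _attempt in range(self.max_retries):
            try:
                # Return immediately on the first successful attempt.
                return self.gateway.charge(user_id=user_id, amount=amount)
            except Exception:
                continue  # a real service would log the error and back off here
        raise RuntimeError("Max retries exceeded")


def test_charge_succeeds_on_first_attempt():
    mock_gateway = MagicMock()
    mock_gateway.charge.return_value = {"status": "success", "transaction_id": "abc123"}
    service = PaymentRetryService(mock_gateway, max_retries=2)

    result = service.charge("user-1", 100.0)

    assert result["status"] == "success"
    assert result["transaction_id"] == "abc123"
    # Exactly one call means no retry was triggered.
    mock_gateway.charge.assert_called_once_with(user_id="user-1", amount=100.0)


def test_charge_raises_after_exhausting_retries():
    mock_gateway = MagicMock()
    mock_gateway.charge.side_effect = Exception("network error")
    service = PaymentRetryService(mock_gateway, max_retries=2)

    with pytest.raises(RuntimeError, match="Max retries exceeded"):
        service.charge("user-1", 100.0)

    # One call per configured attempt.
    assert mock_gateway.charge.call_count == 2
```

Setting `side_effect` to an exception instance makes the mock raise it on every call, which is what lets the second test count attempts without touching a real gateway.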

Pricing

Pytest is open source and free to use.

3. Selenium WebDriver

Selenium WebDriver is a powerful tool for automating web application testing. It allows developers to simulate user interactions with web elements, such as clicking buttons, filling out forms, and navigating between pages. Selenium supports multiple programming languages, including Python, and offers cross-browser compatibility.

Pros

- Cross-browser compatibility: Selenium WebDriver allows testing across different web browsers, ensuring consistent behavior across platforms.

- Rich ecosystem: Selenium boasts a vast community and ecosystem, offering support for various programming languages and frameworks.

- Flexibility: Selenium can be integrated with other testing frameworks and code automation tools, enhancing its functionality and versatility.

Cons

- Setup complexity: Setting up Selenium WebDriver and configuring browser drivers can be challenging for beginners.

- Maintenance overhead: Web elements’ locators may change over time, requiring constant updates to test scripts.

- Limited support for non-web applications: Selenium is primarily designed for web application testing and may not be suitable for testing desktop or mobile applications.

Hands-on:

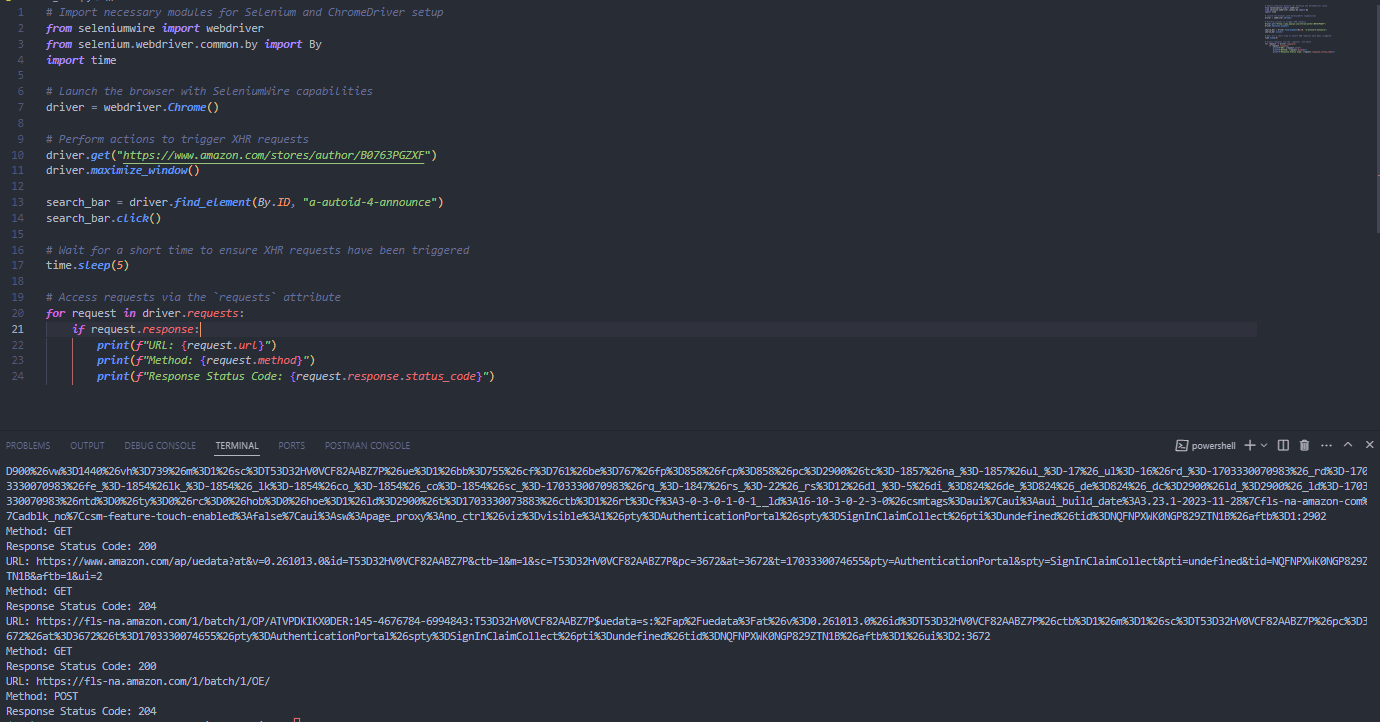

In this example, Selenium WebDriver was used with the selenium-wire wrapper to automate browser actions and capture network requests triggered by user interactions. The script launched Google Chrome, navigated to the Amazon author store URL, maximized the window, located the search element, and clicked on it to trigger background XHR requests. A short delay was introduced to ensure the requests were completed before accessing them through the driver.requests attribute.

The captured requests included details such as the request URL, HTTP method, and response status code, which were printed in the terminal for verification. The snapshot below shows the Selenium script on the left and the corresponding captured network activity in the terminal output.

The captured requests show successful GET and POST calls returning status codes 200 and 204. These include API calls to Amazon’s analytics endpoints and tracking services triggered by clicking the page element.
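The script in the screenshot isn't reproduced in the text, so this is an approximate sketch of the same flow. The `url`, `css_selector`, and `settle_seconds` parameters are placeholders I introduced (the actual page and locator are not given), and `offline_demo()` uses stand-in objects so the summarizing logic can be tried without a browser.

```python
import time
from types import SimpleNamespace


def summarize_requests(requests):
    """Return (method, url, status_code) for every request that received a response."""
    return [
        (request.method, request.url, request.response.status_code)
        for request in requests
        if request.response is not None
    ]


def capture_click_traffic(url, css_selector, settle_seconds=3):
    """Open a page, click one element, and return the network calls that followed."""
    # Imported lazily so summarize_requests() works without Selenium installed.
    from selenium.webdriver.common.by import By
    from seleniumwire import webdriver  # selenium-wire's drop-in replacement

    driver = webdriver.Chrome()
    try:
        driver.maximize_window()
        driver.get(url)
        driver.find_element(By.CSS_SELECTOR, css_selector).click()
        time.sleep(settle_seconds)  # crude wait for background XHR calls to finish
        return summarize_requests(driver.requests)
    finally:
        driver.quit()


def offline_demo():
    """Exercise the summary logic with made-up request objects."""
    fake_requests = [
        SimpleNamespace(
            method="GET",
            url="https://example.com/api/items",
            response=SimpleNamespace(status_code=200),
        ),
        # selenium-wire leaves response as None when no response was captured.
        SimpleNamespace(method="POST", url="https://example.com/track", response=None),
    ]
    return summarize_requests(fake_requests)
```

The fixed sleep mirrors the "short delay" described above; in a longer-lived suite, an explicit wait on a specific request would likely be less flaky.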

Pricing

Selenium is a widely used open-source automation tool that is freely available for download.

4. Robot Framework

Robot Framework is a generic open-source automation framework. It allows you to write high-level test cases in a tabular format and supports testing on different levels, such as acceptance testing and acceptance test-driven development (ATDD). Robot Framework uses a plain-text syntax and offers a rich library of pre-built keywords for automating test cases.

Pros

- Human-readable syntax: Plain-text syntax makes test cases easily understandable by non-technical stakeholders, promoting collaboration between teams.

- Rich ecosystem: Provides a wide range of libraries and integrations, including SeleniumLibrary for web testing and DatabaseLibrary for database testing.

- Extensibility: Can be extended using Python or Java, allowing developers to implement custom keywords and libraries.

Cons

- Performance overhead: Slower execution speed than other testing frameworks, especially for complex test suites.

- Limited IDE support: While compatible with various text editors and IDEs, it may not offer the same level of integration and tooling as other frameworks like Pytest.

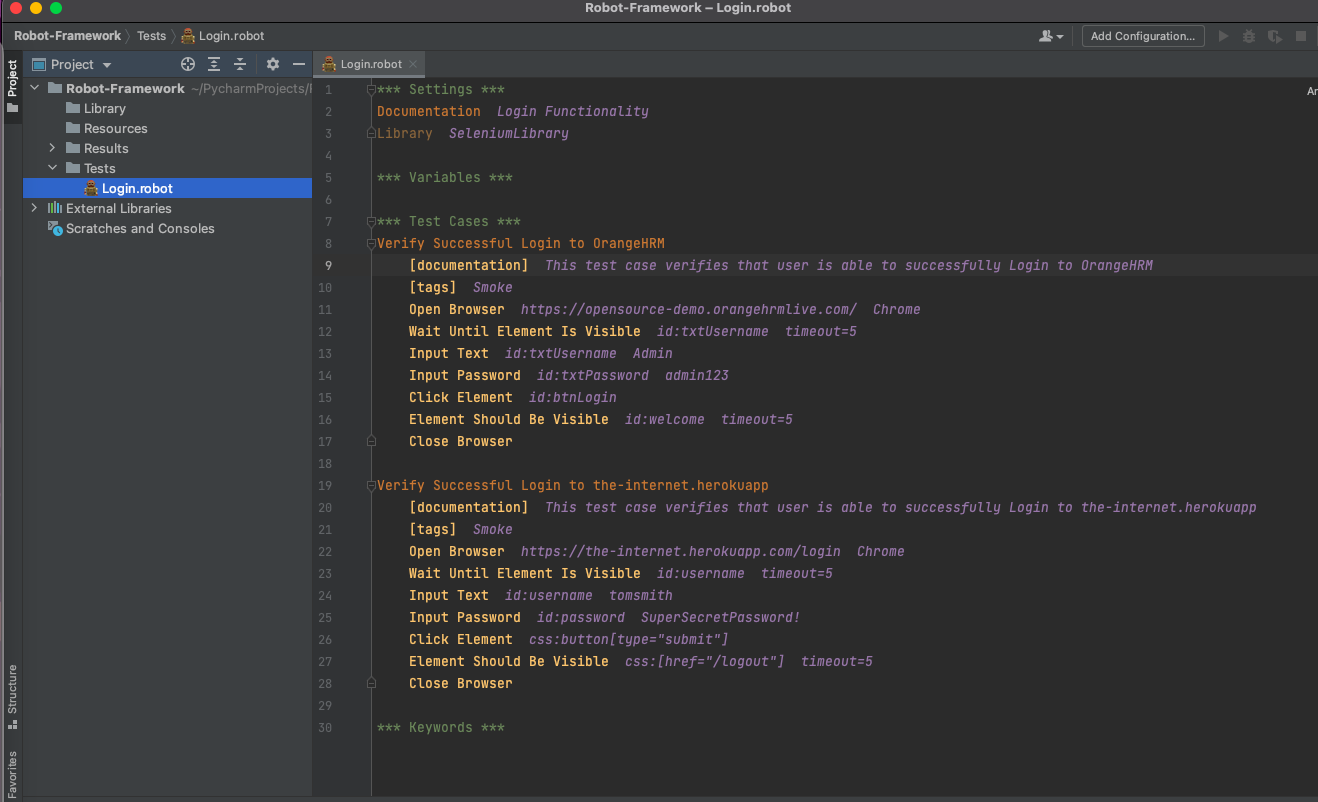

Hands-on:

In this example, Robot Framework was used with the SeleniumLibrary to automate end-to-end login flows for two web applications (OrangeHRM Demo and the-internet.herokuapp). The suite opened Google Chrome, navigated to each site’s login page, waited for the username field to be visible, populated valid credentials, and clicked the login/submit button. Explicit waits were used to stabilize timing, and ID/CSS selectors targeted the input fields and action buttons. Post-login verification asserted the presence of a welcome banner in OrangeHRM and a logout link in HerokuApp, confirming successful authentication before the browser session was closed.

The test logic was organized in a single Login.robot file with clear Settings, Variables, and Test Cases sections, as shown in the editor snapshot above. Each test was tagged as Smoke and relied on concise, human-readable keywords (Open Browser, Wait Until Element Is Visible, Input Text, Input Password, Click Element, and Element Should Be Visible) to keep steps declarative and maintainable.

Execution produced standard Robot Framework artifacts (log.html, report.html, output.xml) and terminal output summarizing both scenarios as PASS. The results demonstrated stable interactions across both targets, with explicit waits eliminating flakiness and locator strategies (ID for OrangeHRM; a mix of ID and CSS for HerokuApp) ensuring resilient element selection.
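The keywords in this suite all come from SeleniumLibrary, but the Extensibility point above also means you can write your own in Python. The sketch below is a hypothetical keyword library, not part of the Login.robot suite: the class name, the account data, and both keywords are invented. Robot Framework exposes each public method as a keyword (for example, Credentials For and Banner Should Greet), and a keyword fails when it raises an exception.

```python
class LoginKeywords:
    """Hypothetical Robot Framework keyword library.

    Saved as LoginKeywords.py, it could be imported in a suite's Settings
    section with a Library entry pointing at the file.
    """

    ROBOT_LIBRARY_SCOPE = "GLOBAL"  # share one instance across the whole run

    def __init__(self):
        # Made-up test accounts; real suites would load these from config.
        self._accounts = {"demo": ("demo_user", "demo_pass")}

    def credentials_for(self, site):
        """Keyword `Credentials For`: return (username, password) for a site."""
        if site not in self._accounts:
            raise AssertionError(f"No credentials configured for '{site}'")
        return self._accounts[site]

    def banner_should_greet(self, banner_text, username):
        """Keyword `Banner Should Greet`: fail unless the banner names the user."""
        if username not in banner_text:
            raise AssertionError(f"Expected '{username}' in banner, got '{banner_text}'")
```

Because the library is plain Python, it can be unit-tested on its own before any .robot file uses it.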

Pricing

Robot Framework is an open-source tool, making it freely available for use.

5. Playwright

Playwright is an open-source automation framework developed by Microsoft for end-to-end web application testing. It supports multiple browsers, including Chromium, Firefox, and WebKit, and provides a unified API for seamless browser automation.

Pros

- Cross-browser support: Works with Chromium, Firefox, and WebKit using a single API.

- Cross-platform compatibility: Runs on Windows, Linux, and macOS, with mobile emulation for Android and iOS.

- Multi-language support: Supports Python, JavaScript, TypeScript, Java, and .NET (C#).

- Headless and GUI modes: Offers both headless execution for speed and GUI mode for debugging.

- Automatic waiting: Waits for elements to be ready before executing actions, reducing test flakiness.

- Advanced testing features: Includes network interception, geolocation testing, and mobile emulation.

- Parallel testing: Enables running multiple tests simultaneously for better efficiency.

Cons

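- Asynchronous programming: The JavaScript/TypeScript API is promise-based, and the Python package offers an async API alongside its synchronous one, so teams that choose the async style need to be comfortable with async/await.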

- Smaller community: Has a less mature ecosystem compared to well-established frameworks like Selenium.

- Learning curve: Developers new to Playwright, especially those unfamiliar with TypeScript or Node.js, may face an initial learning curve.

Hands-on:

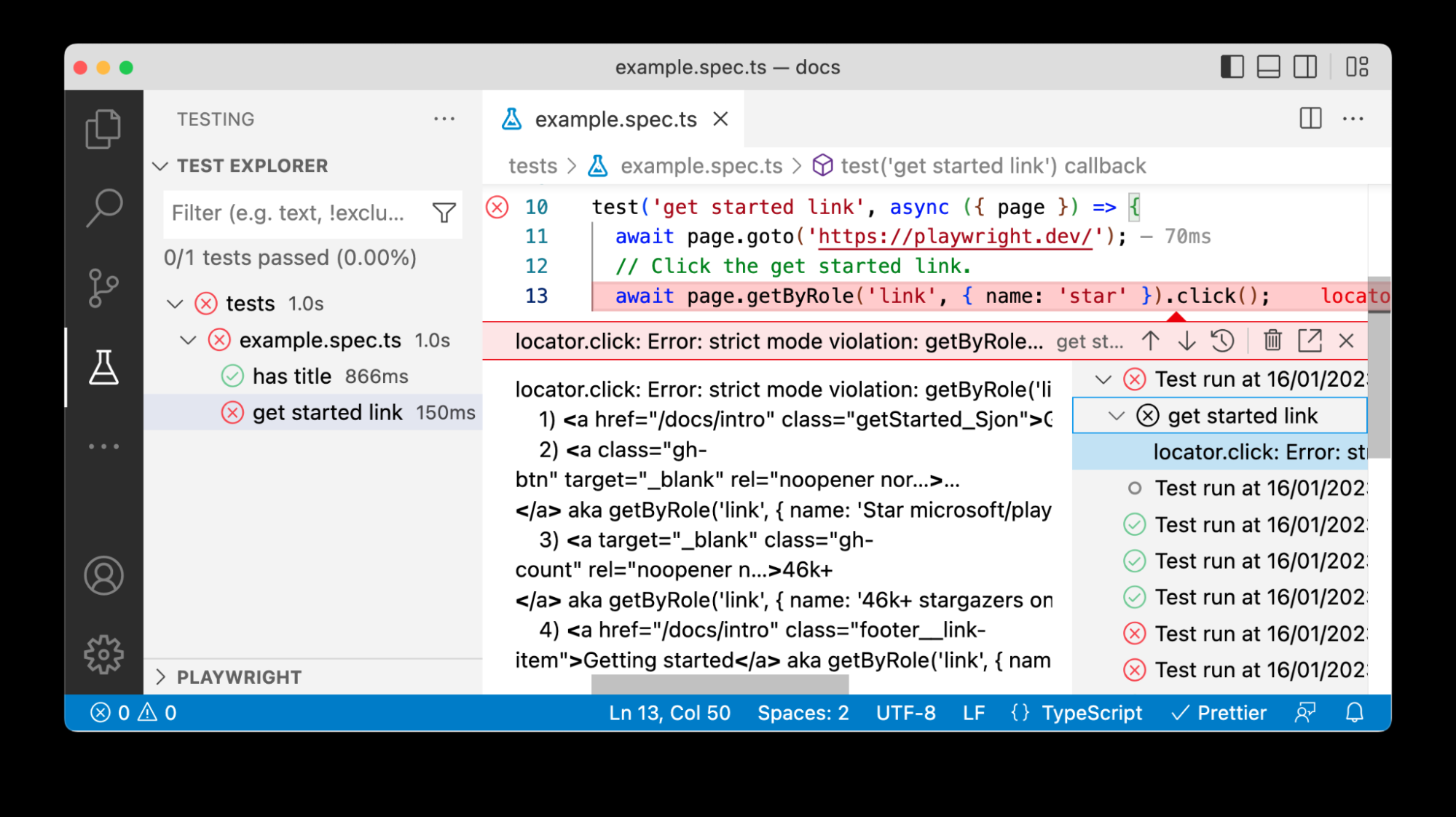

In this example, Playwright was used with TypeScript to automate browser actions and test a web application. The script launched the browser, navigated to the Playwright documentation site, and attempted to click a specific link using the getByRole locator strategy. The test file, example.spec.ts, contained two test cases: one verifying the page title and another interacting with the “Get Started” link.

The code snippet shown in the editor demonstrates the second test case, where the script navigated to https://playwright.dev/ and attempted to locate and click a link using page.getByRole('link', { name: 'star' }).click(). However, during execution, the test failed due to a strict mode violation caused by multiple matching elements. Playwright reported several potential matches for the given role and name, making it ambiguous which element to interact with.

The Visual Studio Code Test Explorer displayed the results, showing one passed test (has title) and one failed test (get started link). The detailed error output in the terminal highlighted the locator issue and listed the matching elements, helping to debug and refine the selector strategy.

In this scenario, the test execution validated Playwright’s locator behavior and error reporting. By adjusting the selector, for example, using a more specific locator like page.locator('a[href="/docs/intro"]'), the issue can be resolved, ensuring stable and accurate test automation for interactive web elements.
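For readers working in Python rather than TypeScript, roughly the same step can be written with Playwright's synchronous Python API. This is a sketch under assumptions: the link's accessible name is taken to be "Get started", and `exact=True` is one way (alongside the href locator above) to avoid matching several elements. `dry_run()` swaps in a mock page so the call sequence can be checked without launching a browser.

```python
from unittest.mock import MagicMock


def open_intro_page(page):
    """Navigate to the Playwright site and click a single, unambiguous link."""
    page.goto("https://playwright.dev/")
    # exact=True matches the full accessible name instead of a substring,
    # which narrows the locator to one element (assumed link name).
    page.get_by_role("link", name="Get started", exact=True).click()


def run_in_browser():
    """Run the step in a real Chromium instance (requires Playwright installed)."""
    # Imported lazily so dry_run() works without Playwright or browsers.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as playwright:
        browser = playwright.chromium.launch()
        page = browser.new_page()
        open_intro_page(page)
        title = page.title()
        browser.close()
        return title


def dry_run():
    """Exercise open_intro_page against a mock page and return the mock."""
    page = MagicMock()
    open_intro_page(page)
    return page
```

Passing the page in as a parameter keeps the step reusable across browsers and makes it easy to check in isolation.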

Pricing

Playwright is an open-source tool available for free use.

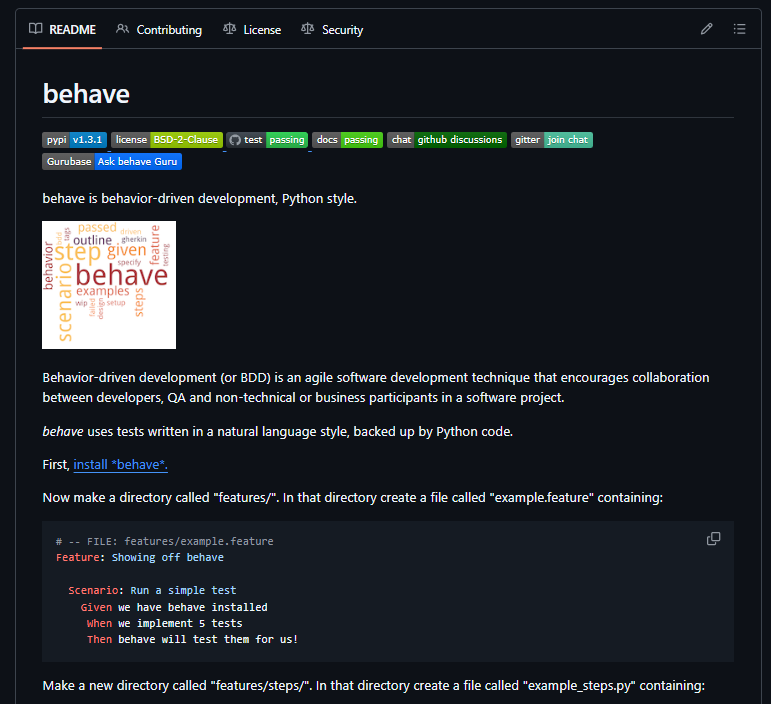

6. Behave

Behave is a behavior-driven development (BDD) framework for Python that allows developers to write tests in a human-readable format using Gherkin syntax, making them accessible to non-technical stakeholders. By focusing on user behavior and business requirements, Behave fosters clearer communication and ensures that software development remains aligned with business objectives.

The best choice between Behave and Robot Framework depends on your specific needs, preferences, and the context of your project. You may want to try out both frameworks and evaluate which one better fits your requirements and workflow.

Pros

- Clarity and transparency: Gherkin syntax promotes clear communication and ensures that test cases remain aligned with business objectives.

- Reusability: Encourages the reuse of step definitions across different scenarios, reducing duplication and improving test maintainability.

- Integration with Python: A Python-based framework allows developers to leverage existing Python libraries and frameworks.

Cons

- Learning curve: BDD approach may require a paradigm shift for developers accustomed to traditional testing frameworks.

- Limited community support: Has a dedicated user base, but its community is not as extensive as those of other testing frameworks like Pytest or Selenium.

- Overhead in writing step definitions: Writing and maintaining step definitions can be time-consuming, especially for large and complex test suites.

Hands-on:

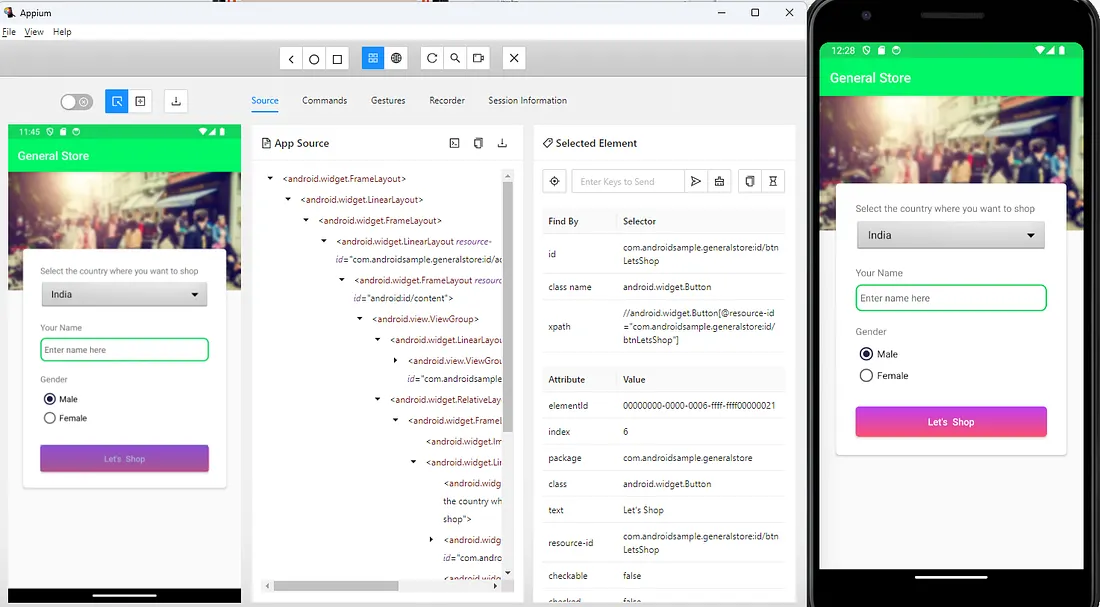

In this example, Appium was used to automate the onboarding flow of the General Store sample mobile application, as shown in the attached screenshot. The Appium Inspector was used to identify UI elements and their attributes, including the country dropdown, name input field, gender selection radio buttons, and the Let’s Shop button. As shown in the snapshot below, the inspector displayed the complete app hierarchy on the left panel and detailed attributes for the selected element on the right panel, such as resource-id, class name, and XPath, making it easier to locate and interact with these elements during automation.

The process involved launching the General Store app, selecting a country from the dropdown, entering a name, choosing a gender, and clicking the Let’s Shop button. Once the button was clicked, Appium triggered the navigation to the product listing screen, which could then be validated to confirm the successful flow.

The terminal output displayed the execution steps of the automation process, including the creation of the session, identification of elements based on the provided locators, interaction logs, and confirmation of a successful test execution.

Pricing

Behave is an open-source automation tool that is free to use.

7. TestComplete

TestComplete is a comprehensive automated testing tool that supports automating functional, regression, and GUI testing of desktop, web, and mobile applications. It offers a record-and-playback feature for visually creating tests, simplifying test creation for beginners, and providing advanced scripting capabilities for experienced testers. It has a robust object recognition engine, which ensures reliable test execution across different platforms and environments.

Pros

- Multi-platform support: Supports testing on various platforms, including Windows, macOS, iOS, and Android, making it suitable for testing diverse applications.

- Rich feature set: Offers a wide range of features, including object recognition, data-driven testing, and cross-browser testing, empowering testers to handle complex testing scenarios.

- Record and Replay: Offers a record-and-replay feature that allows testers to record user interactions with an application and then replay those interactions as automated tests. This makes it easy to create test scripts without requiring extensive programming knowledge.

Cons

- Cost: As a commercial tool, its pricing may be prohibitive for small teams or individual developers.

- Learning curve: Mastering TestComplete’s advanced features and scripting capabilities may require significant time and effort.

- Dependency on proprietary technology: Relies on proprietary technologies for object recognition and automation, which may limit flexibility and portability.

Hands-on:

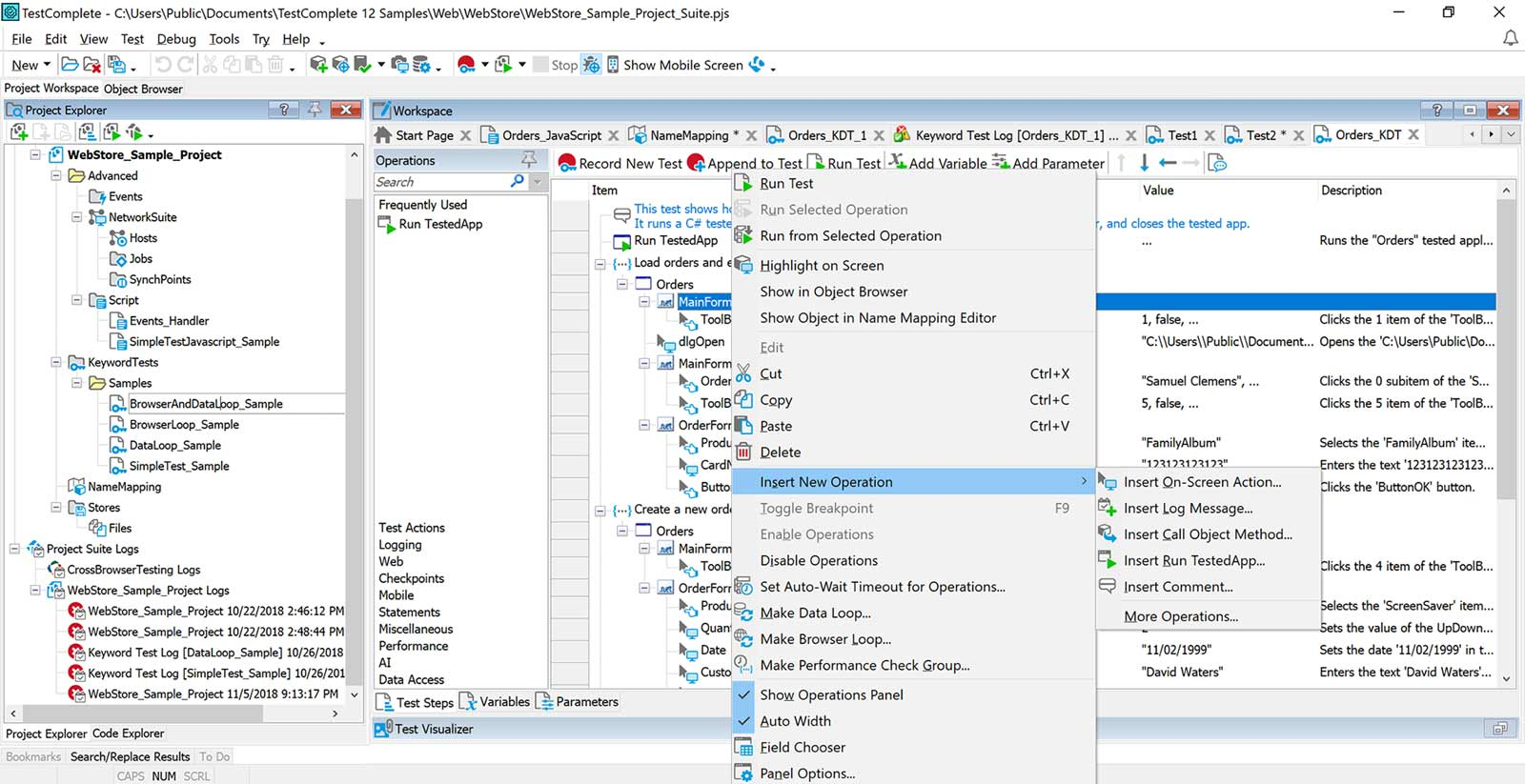

In this example, TestComplete was used to automate the functional testing of a sample WebStore application, as shown in the attached screenshot. The interface demonstrates how testers can visually create and manage automated test scripts using the Keyword Tests feature without writing code. The Project Explorer on the left displays the test suite structure, including scripts, keyword tests, and data-driven test samples. The workspace on the right shows the currently selected test steps with details such as the object being interacted with, the performed operation, and corresponding parameter values.

The above screenshot highlights the “Insert New Operation” option, which allows testers to add new actions to an existing keyword test. Using this, testers can insert various operations such as on-screen actions, log messages, method calls, running additional tested applications, or even adding custom comments. This enables building reusable, modular, and highly maintainable test cases.

During hands-on testing, a typical flow involves recording user actions on the application, reviewing the recorded steps in the Test Visualizer, inserting or editing specific operations where required, and running the complete automated test suite. The terminal/log output generated by TestComplete displays execution details, including test step results, passed or failed validations, and captured screenshots for failed checkpoints.

Pricing

TestComplete pricing starts from $4108 per year.

8. PyAutoGUI

PyAutoGUI is a cross-platform Python testing library for automating GUI interactions, such as controlling the mouse and keyboard, capturing screenshots, and simulating user inputs. It is ideal for automating desktop applications and performing repetitive tasks.

Pros

- Simplicity: Straightforward API makes it easy to automate GUI interactions without the need for complex setup or configuration.

- Cross-platform compatibility: Works on Windows, macOS, and Linux, allowing developers to write platform-independent automation scripts.

- Accessibility: Simplicity and ease of use make it accessible to developers of all skill levels, from beginners to seasoned professionals.

- Image recognition: Provides functions for image recognition, allowing you to locate and interact with GUI elements based on their appearance on the screen. This can be useful for automating the testing of applications that do not expose a standard API or GUI toolkit for automation.

Cons

- Lack of advanced features: May not offer the same level of functionality and customization as other GUI automation tools like TestComplete or Sikuli.

- Reliance on screen coordinates: Relies on screen coordinates to interact with GUI elements, which may lead to issues on systems with different screen resolutions or configurations.

- Performance limitations: May not be suitable for high-performance or time-critical automation tasks due to its reliance on simulating user inputs.

Hands-on:

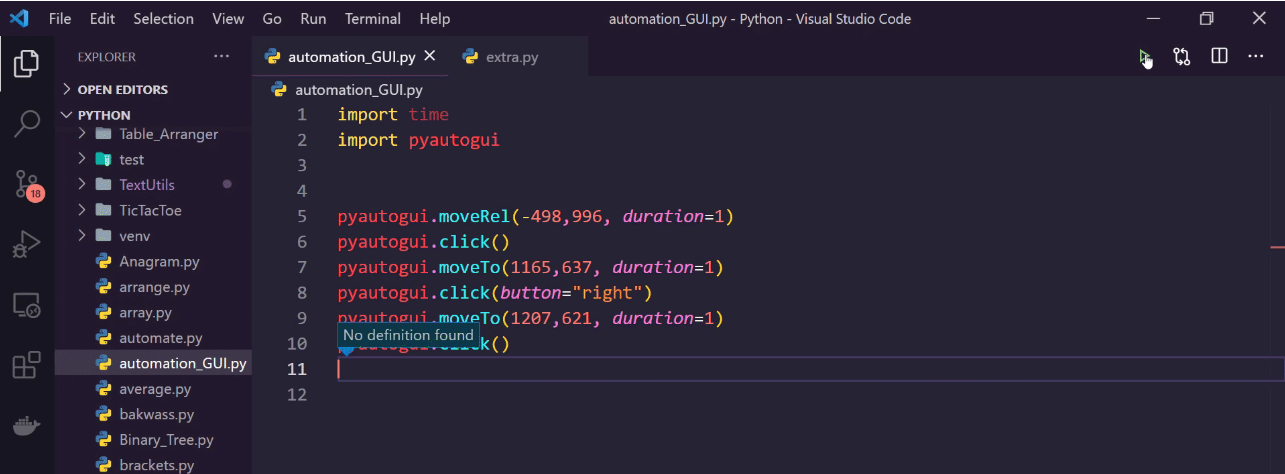

In this example, PyAutoGUI is demonstrated for automating GUI-based tasks using Python, as shown in the attached screenshot. The code snippet automates mouse movements and clicks on specific screen coordinates, simulating real user actions. PyAutoGUI is commonly used for desktop application automation, GUI testing, and performing repetitive tasks efficiently.

The highlighted script in the above snapshot imports the time and pyautogui modules to interact with the screen. It performs tasks such as moving the mouse to specific coordinates, performing single and right clicks, and navigating between different areas on the screen. These operations help automate sequences like opening applications, selecting options, or interacting with software interfaces without manual input.
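A script along these lines might look like the sketch below. It is not the script from the screenshot: the coordinates, the helper name run_click_sequence, and the bounds check are assumptions added for illustration. The helper takes the GUI module as an argument so the logic can be exercised without a display, and it checks each point against the screen size first, since hard-coded coordinates break on different resolutions.

```python
import time


def run_click_sequence(gui, points, pause=0.5):
    """Left-click each (x, y) point in order, then right-click at the last one.

    `gui` is expected to be the pyautogui module (or any object exposing
    size, moveTo, click and rightClick with the same signatures).
    """
    width, height = gui.size()
    # Validate every point before moving, so a bad coordinate
    # does not leave the sequence half finished.
    for x, y in points:
        if not (0 <= x < width and 0 <= y < height):
            raise ValueError(f"point ({x}, {y}) is outside the {width}x{height} screen")

    for x, y in points:
        gui.moveTo(x, y, duration=0.25)  # glide to the target instead of jumping
        gui.click()                      # left click at the current position
        time.sleep(pause)                # give the UI time to react
    gui.rightClick()                     # open a context menu at the last point


def main():
    # Imported here so the helper above can be tested on machines without a display.
    import pyautogui

    pyautogui.FAILSAFE = True  # moving the mouse to a screen corner aborts the script
    run_click_sequence(pyautogui, [(100, 200), (400, 300)])  # hypothetical coordinates
```

Calling main() on a machine with a desktop session runs the sequence against the real screen.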

Pricing

PyAutoGUI is open source (BSD-licensed) and free to use.

9. Locust

Locust is an open-source load-testing tool that lets developers simulate thousands of concurrent users against a web application and measure how it performs and scales under load. It is designed to be highly scalable and easy to use.

Locust makes it easy to define user behavior and generate realistic load scenarios. Its distributed nature enables horizontal scaling, allowing testers to simulate large-scale traffic without the need for complex infrastructure. By identifying performance bottlenecks early in the development cycle, Locust empowers teams to deliver performant applications with confidence.

Pros

- Scalability: Distributed architecture enables horizontal scaling, allowing testers to simulate large-scale traffic without the need for complex infrastructure.

- Python-based scripting: Uses Python to define user behavior and scenarios, making it familiar and accessible to Python developers.

- Real-time reporting: Provides real-time insights into test results, including response times, throughput, and error rates, allowing testers to identify performance bottlenecks quickly.

Cons

- Limited built-in reporting: Built-in reporting capabilities may be limited compared to commercial load testing tools, although they can be extended using plugins or integrations.

Hands-on:

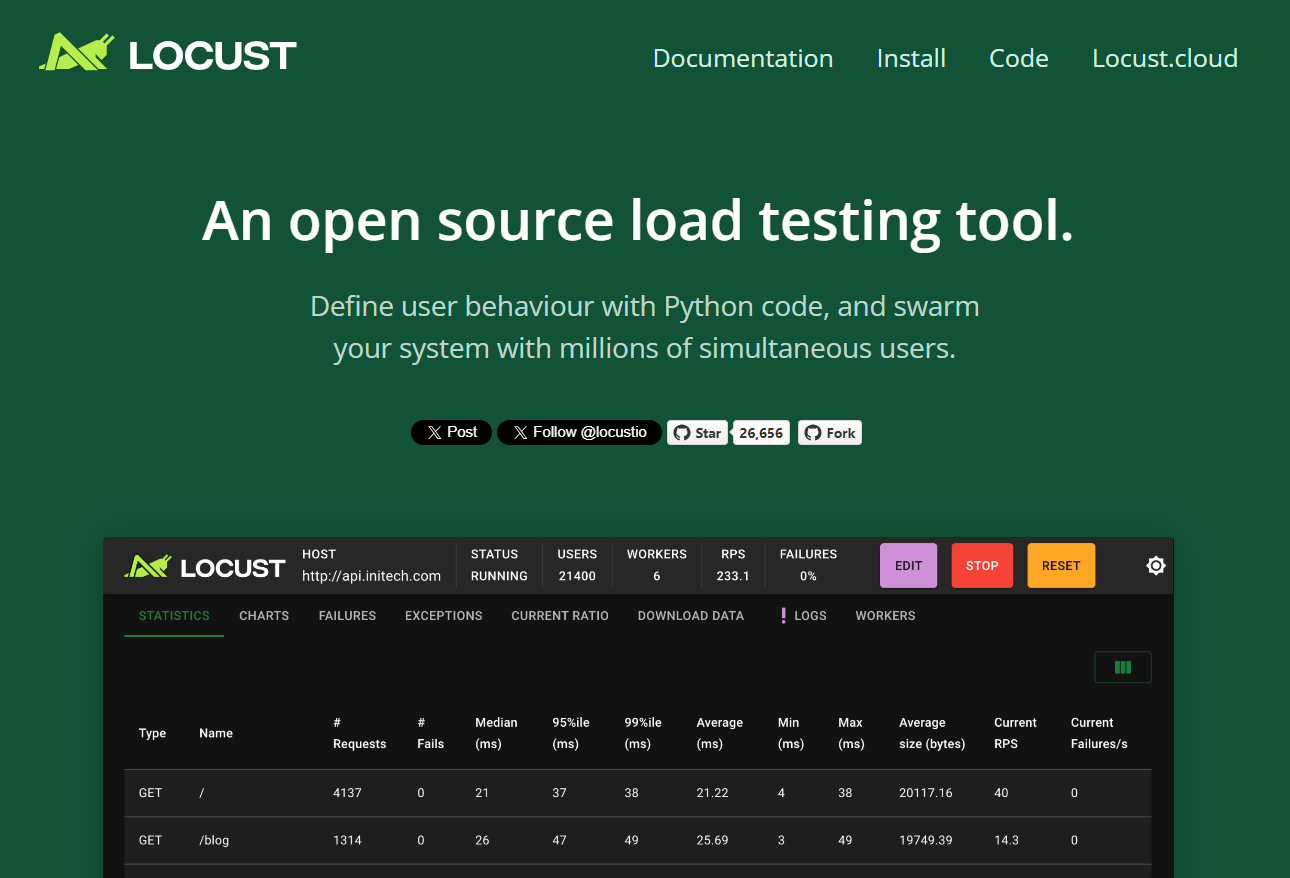

In this hands-on exercise, Locust was used to perform performance testing on a local API by simulating user behavior through a custom Python script. The locust file (locust-file.py) defines a class UserFlow that mimics real user interactions such as logging in, fetching user profiles, and updating user details. The script uses the HttpUser class to simulate authenticated requests by first performing a POST login and retrieving an access token for subsequent GET and PUT operations. A random user ID is chosen during each run to ensure varied testing coverage, and the wait_time parameter introduces delays between requests to simulate realistic user activity.

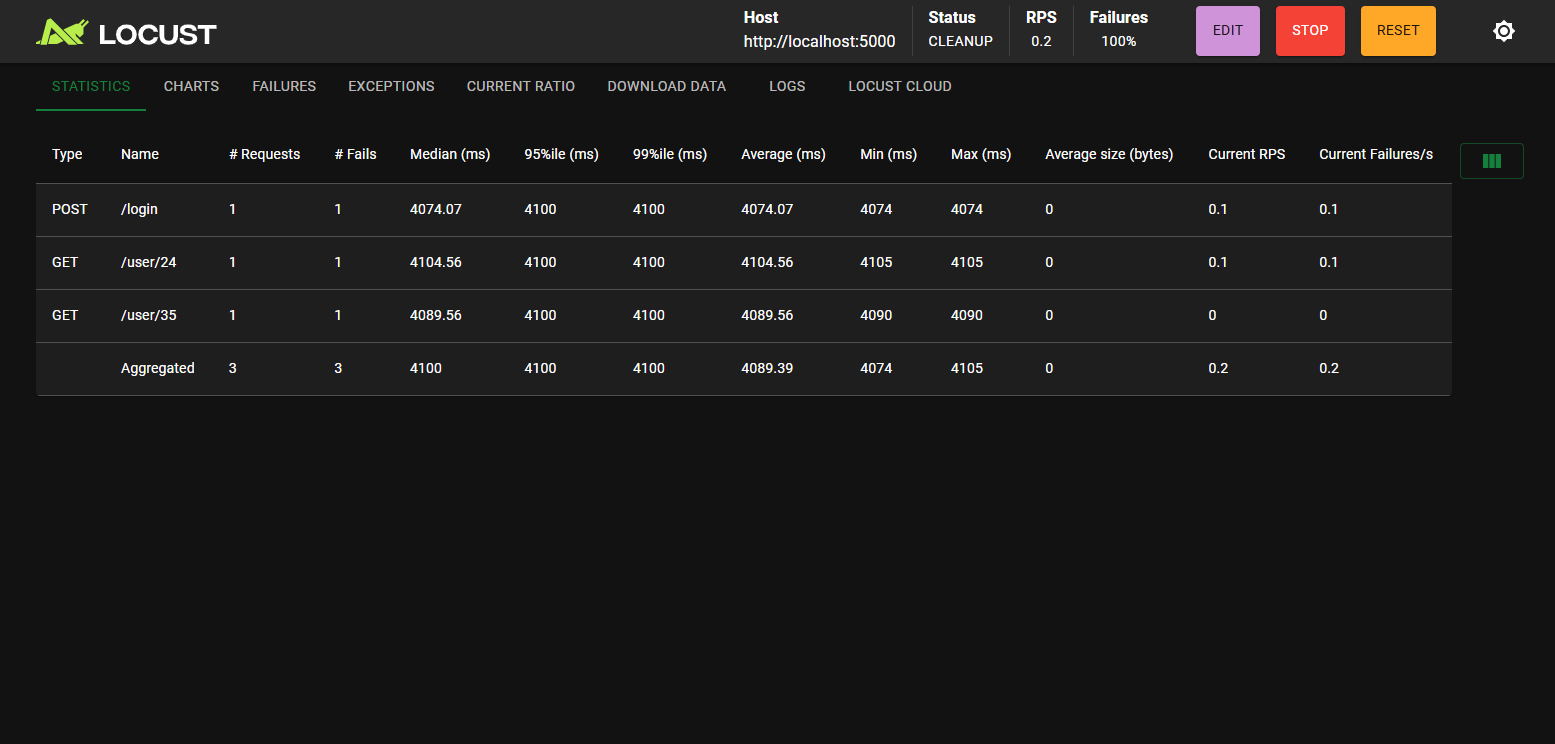

When Locust was started using the command locust, it initialized version 2.38.1 and launched a web interface at http://localhost:8089. From there, the test was executed with one simulated user ramping up at a rate of one user per second. The Statistics Dashboard displayed key performance metrics for the different endpoints, including /login (POST) and /user/{id} (GET): request counts, failure rates, response times, and throughput.

From the results, three requests were made in total:

- POST /login: Median response time was ~4074 ms.

- GET /user/24 and GET /user/35: Median times were around 4104 ms and 4089 ms, respectively.

- The aggregated data indicated a 100% failure rate, suggesting possible authentication issues or server-side errors.

The Locust UI provides valuable insights such as 95th and 99th percentile latencies, minimum and maximum response times, and request success rates. These metrics are useful for identifying bottlenecks, unstable endpoints, and performance issues under load.
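To show why the high percentiles matter alongside the median, the sketch below computes the same kind of summary from a list of response times. The numbers are hypothetical and not taken from the run above, and the nearest-rank method used here is a common textbook definition rather than Locust's internal calculation.

```python
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = -(-pct * len(ordered) // 100)  # ceiling division without floats
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in milliseconds, with one slow outlier.
response_times_ms = [120, 135, 150, 180, 210, 250, 300, 450, 900, 4100]
failed_requests = 2  # hypothetical count of failed requests

summary = {
    "requests": len(response_times_ms),
    "failure_rate": failed_requests / len(response_times_ms),
    "min_ms": min(response_times_ms),
    "median_ms": statistics.median(response_times_ms),
    "p95_ms": percentile(response_times_ms, 95),
    "p99_ms": percentile(response_times_ms, 99),
    "max_ms": max(response_times_ms),
}

for key, value in summary.items():
    print(f"{key}: {value}")
```

In this sample the median stays in the low hundreds of milliseconds while the 95th percentile lands on the 4100 ms outlier, which is the kind of gap that points to an unstable endpoint.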

Pricing

Being an open-source tool, Locust is available for free.

10. Faker

Faker is a library that generates fake data for testing and other purposes. Faker can be used to generate random and realistic test data for populating databases, forms, and other parts of your application.

Pros

- Efficient test data generation: Provides a convenient way to generate a large variety of fake data, including names, addresses, phone numbers, email addresses, dates, and more. This makes it easy to create diverse and realistic test data for your automated tests.

- Time-saving: Manually creating test data for automated tests can be time-consuming and error-prone. Faker helps save time by automating the generation of test data, allowing testers to focus on writing and executing test cases.

- Repeatability: Generates pseudo-random data from predefined rules and algorithms. When the generator is seeded (for example with Faker.seed() or seed_instance()), the same data is produced on every test run, making it easier to reproduce and debug issues. Without a seed, each run produces different values.

Cons

- Limited Realism: Generates realistic-looking data but may not always accurately represent real-world scenarios. For example, the generated names, addresses, or email addresses may not correspond to actual individuals or locations.

- Limited Validation: Does not provide built-in validation mechanisms to ensure the correctness or integrity of the generated data. It’s essential to validate the generated data against the expected data formats and constraints within your test cases.

Hands-on:

In this hands-on, the Faker library in Python was used to generate realistic fake data for testing and development purposes. The script, generate-fake-data.py, initializes a Faker instance and creates two sets of data.

First, it generates a single fake data entry, which includes a randomly generated name, address, email, and phone number. These values are created dynamically, ensuring that every execution of the script produces fresh and unique data.

Second, the script demonstrates the generation of a bulk dataset by creating a list of five fake user records. Each user record consists of a random name, email, and properly formatted address. This approach is useful for scenarios such as seeding test databases, API testing, or UI prototyping, where dummy user data is required.

After running the command:

python generate-fake-data.py

The terminal displays the dynamically generated dataset, showcasing both single and bulk user details.

The screenshot above illustrates the successful execution of the script, where unique user details are displayed. The first section presents individual fake information, while the bulk section displays a structured list of five fake users, each with unique names, emails, and addresses.

This makes the Faker library extremely valuable for developers and testers who need realistic datasets without exposing actual user information.

Pricing

Faker is an open-source Python library released under the MIT license, so it is free to use for generating fake data in your code.

Why Qodo is Ideal for Enterprise Python Automation Testing

Having explored various Python testing tools, it’s clear that while they provide reliable solutions for different aspects of automation testing, the real challenge lies in integrating and scaling them effectively in large enterprises.

In enterprise-level environments, Python automation testing involves more than just writing individual tests. It’s about building scalable, reusable frameworks, integrating them into CI/CD pipelines, and maintaining consistency across large teams and complex systems. Senior engineers lead this effort, focusing on designing frameworks that are modular, adaptable, and capable of handling a variety of testing requirements, from functional testing to performance and security testing.

Qodo offers a powerful solution for addressing these enterprise-specific challenges in Python automation testing. Here’s how:

AI-Powered Test Automation

Qodo leverages AI-driven agents to automatically generate and maintain tests based on codebase changes. These agents function as task-specific assistants, allowing teams to define testing behaviors and automatically generate tests without manual intervention. This drastically reduces the overhead of writing and maintaining tests, making it especially useful in large, evolving codebases.

Customization and Scalability

Qodo provides highly customizable agents tailored to specific tasks, workflows, or testing needs. By defining agents in TOML configuration files, senior engineers can ensure testing is aligned with their enterprise systems. Whether it’s for UI testing with Selenium, API testing with requests, or performance testing with Locust, Qodo’s agents integrate with various tools and environments, offering a scalable solution for enterprises.

Seamless Integration with CI/CD

In modern enterprises, automated tests must be tightly integrated into the CI/CD pipeline for continuous validation. Qodo seamlessly fits into this workflow, automatically generating and executing test cases as part of the CI process. This ensures tests are always up-to-date, providing fast feedback on code changes and reducing the likelihood of introducing bugs into production.

Collaboration and Consistency

Qodo’s agent-based approach enhances team collaboration. Agents can be shared, versioned, and built upon, allowing teams to collaborate on testing strategies while maintaining consistency across projects. This collaboration is crucial in enterprise environments where multiple teams work on different parts of the system but still need to adhere to the same testing standards.

Maintaining Test Stability and Reducing Flaky Tests

Qodo’s AI-driven agents reduce the risk of flaky tests by automatically adapting to code changes. This ensures that tests remain stable and relevant, even as the system evolves. Qodo is especially valuable in enterprises, where test maintenance can become a bottleneck, particularly when working with large, complex systems.

Conclusion

Python automation tools play a pivotal role in streamlining the testing process and ensuring the delivery of high-quality software solutions. Whether you’re automating web application testing, performing load testing, or simulating user interactions, there’s an automation tool to suit your needs. You may combine several of them to create a comprehensive testing solution or select a single one that best fits your requirements.

FAQs

Is Python Good for Automation Testing?

Python is excellent for automation testing due to its simplicity, readability, and extensive testing framework ecosystem. Its large community provides robust support and resources, while tools like Selenium, Pytest, and Robot Framework offer powerful testing capabilities. Python’s gentle learning curve also makes it accessible for testers transitioning from manual to automated testing.

How do Python Automation Tools Improve Software Testing Efficiency?

Python automation tools enhance testing efficiency by enabling rapid test script creation, parallel test execution, and comprehensive reporting. They facilitate test reusability through modular frameworks, support continuous integration/deployment pipelines, and provide detailed debugging capabilities. This automation reduces manual effort, minimizes human error, and accelerates the testing cycle significantly.

What are the Key Features to Look for in a Python Automation Testing Tool?

Essential features include robust cross-browser support, detailed reporting capabilities, clear documentation, and active community support. The tool should offer easy test case management, support for different testing types (functional, integration, UI), and integration with CI/CD pipelines. Additionally, look for features like parallel execution, screenshots on failure, and custom command support.

How can Teams Choose the Right Python Automation Tool for their Testing Needs?

Teams should evaluate tools based on their specific requirements, including application type (web, mobile, API), team expertise, and project scale. Consider factors like learning curve, integration capabilities with existing tools, maintenance costs, and community support. Start with a proof of concept using different tools to assess their practical effectiveness in your environment.