Code Quality in 2025: Metrics, Tools, and AI-Driven Practices That Actually Work

TL;DR

- In 2025, AI copilots make it easy to generate working code quickly, but speed often hides deeper flaws. I’ve seen AI output functions that compile and pass unit tests yet introduce redundancy, fragile logic, or compliance risks. The challenge is no longer writing code but validating if it’s production-ready.

- Code quality in 2025 is a workflow, not a checkpoint. Static analysis tools like PMD are valuable for catching surface-level issues such as indentation, but they fall short on deeper risks like architectural duplication. Real quality comes from reviews that enforce architectural fit, maintainability, and long-term context across the system.

- Effective code review forces teams to ask deeper questions: does the code fit future system evolution, support maintainability under pressure, and integrate cleanly with existing architecture? These checks prevent repeated rework and long-term failures.

- AI-generated code often looks correct but can contain subtle flaws. Context-aware review tools like Qodo identify high-risk areas, enforce organizational standards, and catch issues such as unsafe caching, schema mismatches, or outdated helper functions before they reach production.

- Qodo enhances the entire SDLC with shift-left detection in the IDE, context-driven PR review across repositories, and one-click remediation that turns insights into immediate fixes, saving hours of manual correction and preventing critical failures.

- Combining traditional metrics (defect density, test coverage, and churn) with AI-driven, contextual code review creates a feedback loop that continuously improves code quality, making the review process a strategic advantage rather than a bottleneck.

As a technical lead, I have seen developers lean heavily on LLMs to write code over the past few months. This shift has changed how teams think about code quality; it’s no longer just about writing clean functions, avoiding duplication, and hitting coverage targets. Those factors still apply, but the focus has moved to context, review, and validation. With AI coding agents and copilots, generating a working implementation is no longer the challenge; ensuring that the implementation is production-ready is.

While AI can generate working implementations quickly, speed without context often leads to low-quality output: code that looks correct at first but introduces redundancy, inconsistency, or hidden flaws. The real challenge, and equally the real opportunity, lies in the review process: ensuring that the code aligns with organizational standards, meets compliance requirements, and supports long-term maintainability.

AI-generated code needs review and adaptation before it is production-ready, and developers across many communities report the same experience. In a Reddit discussion on maintaining code quality with widespread use of AI coding tools, one engineer summarized the challenges clearly:

That is why code quality in 2025 is defined less by speed of generation and more by context, review, and architectural fit. With most teams now using AI copilots, quality means ensuring the code not only runs correctly but also remains understandable, consistent, and adaptable over time.

In this blog, I will discuss why AI-generated code often falls short of being production-ready and how tools like Qodo’s deeper code understanding can help bridge that gap.

Code Quality as a Workflow

Static analysis tools like PMD, SonarQube, and ESLint have long helped teams by catching unused imports, inconsistent naming, and overly long methods. While these checks reduce noise, they don’t guarantee real maintainability. Code quality becomes meaningful only when it’s understood as a workflow that connects every new change to the broader system.

For example, on a Java project I was working on, PMD (a static code analysis tool that detects coding style issues) flagged indentation issues, but missed that a method was duplicating pricing logic already implemented elsewhere. When a bug appeared months later, fixes had to be made in two different services. The real failure wasn’t formatting; it was the lack of architectural awareness.

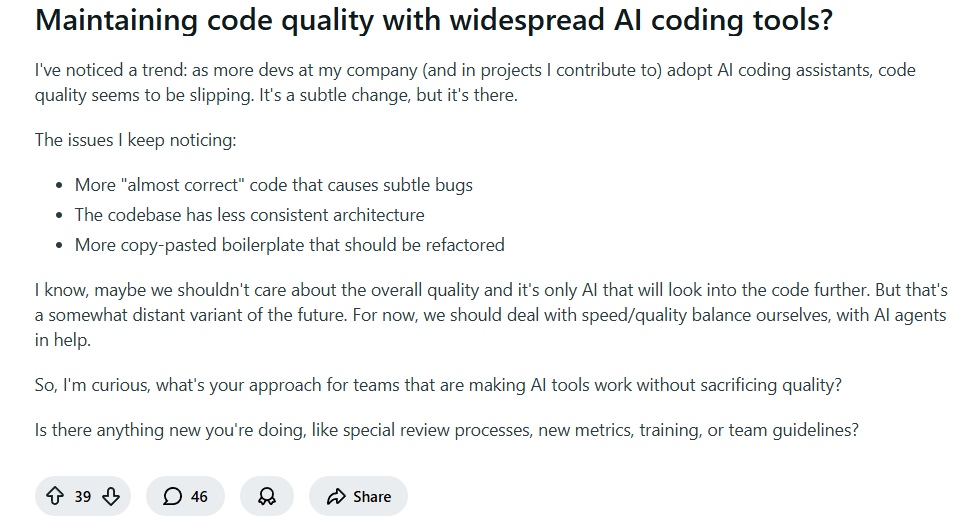

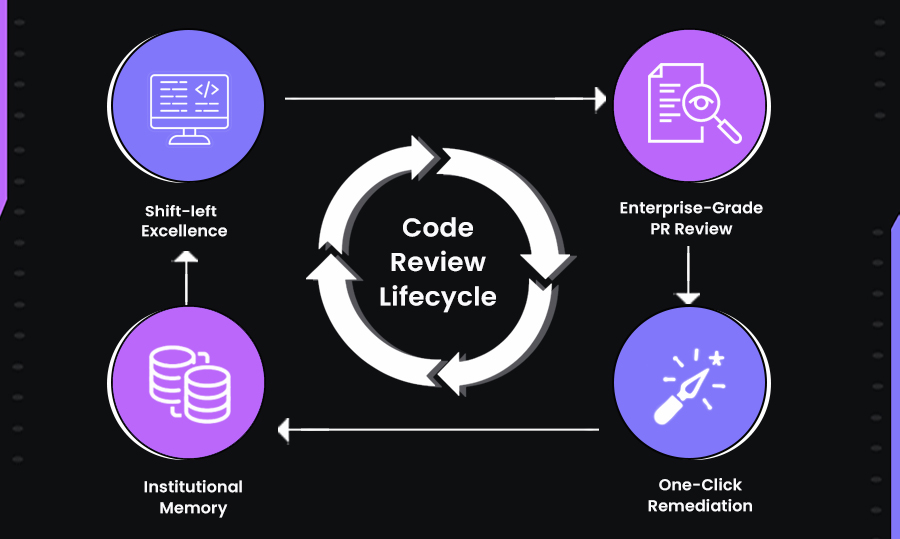

Code review is no longer confined to a single stage; it lives across the entire software development lifecycle. It starts in the IDE or CLI, where intelligent analysis and instant fixes prevent issues before they ever reach a pull request. Here’s a snapshot of a workflow diagram:

This is where the idea of a code quality workflow comes in. Code quality is not a single step; it unfolds as a sequence. At the start, static analysis tools help clear out low-value noise, but they are only the entry point. From there, code review adds domain knowledge and catches logic issues that tools miss. The next stage, architectural alignment, ensures the change fits within the broader system.

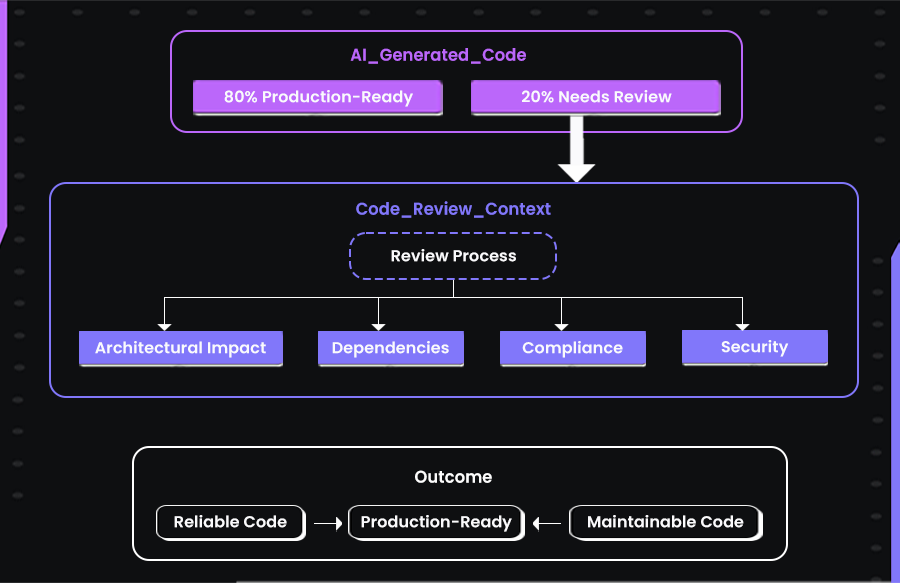

Refer to this diagram:

Inside the PR, reviews become context-aware, enforcing standards and enabling true collaboration around logic and design. After the merge, agentic remediation and learning ensure that any gaps are addressed in production and that those insights feed back into future work.

This shift-left, in-PR, and shift-right approach makes quality a continuous journey rather than a checkpoint. From the first line of code to safe integration in production, every stage contributes to long-term stability and maintainability.

Why are Code Reviews the New Gatekeeper?

AI-assisted development accelerates coding by generating solutions that often run and pass initial checks. However, the final stage of development, known as the “last mile,” is where subtle flaws can appear. The last mile refers to the portion of code that determines whether changes are fully production-ready. It includes catching issues like race conditions, resource leaks, undocumented assumptions, or minor compliance gaps that automated tests or static analysis might miss.

These issues compound as the application scales. More developers are leaning on AI to write code, which means more changes and more pull requests entering the pipeline. Review queues are growing, and with higher volume, the risk of missing important issues increases. Code reviews have become the gatekeeper not just because they catch what tools miss, but because they are the last safeguard in a workflow increasingly driven by AI-generated contributions.

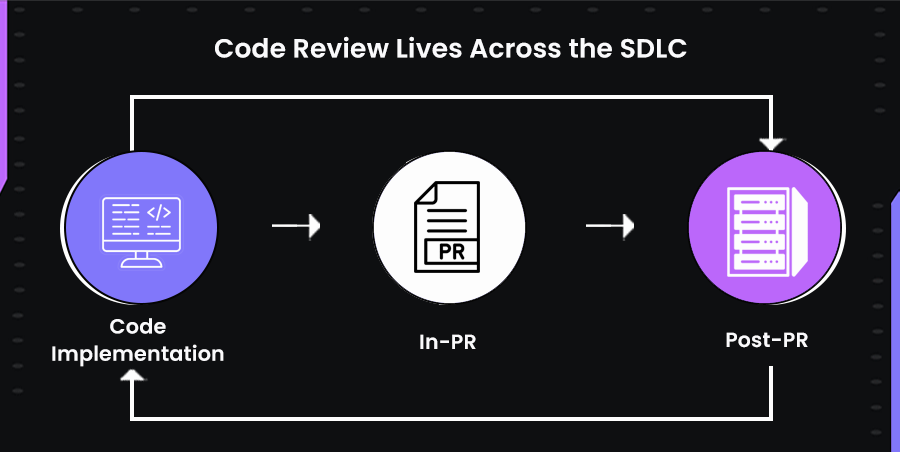

The diagram above illustrates how leaving the last mile of code unreviewed can degrade the code quality of your project.

The image above shows how code quality is defined by context. It shows that while the majority of AI-generated code can be used directly, the last mile requires a structured review process. Reviews examine architectural impact, dependency management, compliance, and security, factors that automated checks cannot fully capture. The outcome is code that is not only functional but also reliable, maintainable, and truly production-ready.

For example, an AI generates a function to process and cache user session data in a high-concurrency environment. The function passes all linting and unit tests, but it uses a naive in-memory cache without eviction policies.

In a live system, this can cause memory spikes, inconsistent session states, and subtle race conditions. A context-aware review identifies these risks, flags the unsafe caching strategy, and suggests using the team’s established distributed cache pattern, ensuring reliability under production load.
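To make the eviction problem concrete, here is a minimal Python sketch of an in-process cache that at least bounds its own growth. It is an illustration only: `BoundedSessionCache`, `max_entries`, and `ttl_seconds` are hypothetical names, and a single-process cache like this is not a substitute for the distributed cache pattern mentioned above. It only shows the safeguards the naive version lacks: a size cap with least-recently-used eviction, an expiry time, and a lock around shared state.

```python
import threading
import time
from collections import OrderedDict


class BoundedSessionCache:
    """Thread-safe in-memory cache with a size cap (LRU eviction) and a TTL."""

    def __init__(self, max_entries=1000, ttl_seconds=300.0, clock=time.monotonic):
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested deterministically
        self._lock = threading.Lock()
        self._entries = OrderedDict()  # session_id -> (expires_at, data)

    def put(self, session_id, data):
        with self._lock:
            self._entries[session_id] = (self._clock() + self._ttl, data)
            self._entries.move_to_end(session_id)
            # Evict least recently used entries once the cap is exceeded.
            while len(self._entries) > self._max_entries:
                self._entries.popitem(last=False)

    def get(self, session_id):
        with self._lock:
            entry = self._entries.get(session_id)
            if entry is None:
                return None
            expires_at, data = entry
            if self._clock() >= expires_at:
                # Expired entries are dropped instead of being served stale.
                del self._entries[session_id]
                return None
            self._entries.move_to_end(session_id)  # mark as recently used
            return data

    def __len__(self):
        with self._lock:
            return len(self._entries)
```

A naive version would be a plain dictionary with no cap, no expiry, and no lock. A reviewer who knows the service runs under high concurrency would ask about exactly those three things.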

Code quality depends on understanding how changes interact with the system, dependencies, and organizational standards. Metrics indicate areas that might need attention, but contextual review determines whether the code is truly production-ready.

The AI Development Shift: More Code, More Risk

AI-driven tools have changed how teams write and ship software. Developers can now generate and review code at a pace that was unthinkable a few years ago. But with more code entering production, the risks scale alongside the productivity gains.

Real-World Incidents Highlighting the Risks

Even with careful guidance, AI-generated code can introduce unintended behavior if safeguards aren’t in place:

- Replit reported that its AI coding tool produced unexpected outputs during testing, including erroneous data and test results. While no malicious intent was involved, the incident underscores the importance of thorough review, validation, and safeguards when integrating AI-generated code into production systems.

- Amazon’s AI Coding Agent Breach: In July 2025, Amazon’s Q Developer Extension for Visual Studio Code was compromised by a hacker who introduced malicious code designed to wipe data both locally and in the cloud.

Although Amazon claimed the code was malformed and non-executable, some researchers reported that it had, in fact, executed without causing damage. This incident raised concerns about the security of AI-powered tools and the need for stringent oversight.

These real-world examples highlight the importance of using AI tools that are deeply integrated with your development context. When AI understands your codebase, dependencies, and team standards, and includes built-in guardrails for security and compliance, it can safely accelerate development.

Implementing automated security scanning, AI governance policies, and continuous developer training further ensures that AI-generated code aligns with your system architecture and organizational requirements, reducing the risk of vulnerabilities and operational issues.

How Enterprises Should Measure Code Quality in 2025

Code quality has always been tied to metrics, but the way enterprises measure it in 2025 has shifted. Traditional metrics like defect density, test coverage, and churn still play a role, but they are no longer enough when most code is generated or assisted by AI. The challenge is not just correctness at the unit level but whether changes fit the larger system context and remain maintainable over time.

In my experience, an enterprise approach to measuring code quality should combine quantitative indicators with context-aware validation:

Defect Density in Production

Defect density measures the number of confirmed bugs per unit of code (usually per KLOC). It highlights whether a codebase that “looks clean” actually performs reliably in production. A low defect density shows stability and reliability. High density suggests fragile code that slips past reviews and automated checks.

For example, in a financial services backend, defect density exposed recurring transaction rollback failures even though the repo passed static analysis. This gap showed why measuring production outcomes is as important as measuring pre-release checks.
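The per-KLOC calculation described above is simple enough to sketch in a few lines of Python. The function name and its inputs are assumptions for illustration; in practice the defect count would come from an issue tracker and the line count from a tool of your choice.

```python
def defect_density(confirmed_defects: int, lines_of_code: int) -> float:
    """Return confirmed defects per 1,000 lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    if confirmed_defects < 0:
        raise ValueError("confirmed_defects cannot be negative")
    return confirmed_defects / (lines_of_code / 1000)
```

For instance, 18 confirmed production defects in a 45,000-line service works out to 0.4 defects per KLOC. The number is most useful as a trend per module over time, not as an absolute target.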

Code Churn and Stability

Code churn is the rate at which code changes over time, often measured as lines added/removed per module. High churn signals instability in design or unclear ownership. Stable modules that rarely change are usually well-designed. If churn is high, it often means technical debt or unclear system boundaries.

For example, an authentication service in a microservices project had ~40% monthly churn. Qodo flagged it as a hotspot, and we discovered duplicated token validation logic. Consolidating it reduced churn and simplified future changes.
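One way to approximate churn per module is to aggregate the added and removed line counts that `git log --numstat` reports. The sketch below is a hedged illustration, not how Qodo computes hotspots: `churn_by_module` is a hypothetical helper, it treats the first path segment as the module, and it does not try to resolve renamed paths.

```python
from collections import defaultdict


def churn_by_module(numstat_text: str, depth: int = 1) -> dict:
    """Sum lines added + deleted per module from `git log --numstat` output.

    Each numstat line has the form "<added>\t<deleted>\t<path>".
    The module is taken to be the first `depth` segments of the path.
    """
    churn = defaultdict(int)
    for line in numstat_text.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank lines and anything that is not a numstat row
        added, deleted, path = parts
        if not (added.isdigit() and deleted.isdigit()):
            continue  # binary files are reported as "-" and carry no line counts
        module = "/".join(path.split("/")[:depth])
        churn[module] += int(added) + int(deleted)
    return dict(churn)
```

Feeding it the output of something like `git log --since="1 month ago" --numstat --format=` gives a rough monthly total per top-level directory, which can then be compared against each module's size to spot outliers.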

Contextual Review Coverage

Contextual review coverage measures whether code reviews go beyond syntax/style to catch deeper issues such as architectural misalignment, compliance risks, or missing edge cases. Static analysis won’t catch context-specific violations like ignoring API versioning or bypassing internal frameworks. Reviews ensure the code aligns with the system’s realities.

For example, in a retail API, AI-generated endpoints ignored the team’s versioning strategy. Contextual review caught this before release, preventing downstream client breakages.

Architectural Alignment

Architectural alignment ensures that new code fits within the established system design, patterns, and domain boundaries. It’s not enough for AI-generated code to compile or even pass unit tests; if it bypasses domain rules or reinvents existing components, it introduces hidden risks.

For example, I’ve seen AI suggest custom caching logic that worked locally but ignored the enterprise-standard distributed cache, leading to memory spikes once deployed. These misalignments often go unnoticed in static checks but resurface later as duplicated logic, performance bottlenecks, or fragile integrations.

For enterprises, this balance is especially important in the age of AI-generated code. Copilots can quickly produce functional code, but without guardrails, that code may introduce duplication, security gaps, or performance regressions. Code quality reviews, backed by metrics like test coverage, linting, dependency freshness, and architectural alignment, ensure that AI-generated contributions are production-ready.

Qodo’s Vision: Intelligent, Context-Aware Code Quality

In AI-assisted development, running code is no longer enough; code must be production-ready, compliant, and maintainable. Qodo tackles this by providing deep, context-aware analysis across the entire SDLC. It validates code against organizational standards, enforces architectural and compliance rules, identifies potential bugs, and ensures test coverage is meaningful.

Qodo is built entirely around ensuring code quality. Every line of code is reviewed with context before it reaches production, continuously validated against organizational standards, checked against specifications and compliance requirements, and assessed for bugs and test coverage.

With shift-left capabilities, Qodo catches issues early in local development and CI/CD, reducing costly downstream errors. Its intelligent remediation suggests fixes automatically, while continuous monitoring closes the feedback loop, turning insights into actionable improvements and making every line of code safer, more reliable, and production-ready.

For example, an AI-generated API function might pass all linting checks and unit tests but use an in-memory cache in a high-traffic service, introducing potential memory spikes and race conditions.

Qodo identifies this risk through its contextual understanding of the code, cross-repository dependencies, and historical patterns, and it recommends the correct fix, such as replacing it with a distributed cache that aligns with the team’s architecture.

Let’s look at how Qodo transforms the code review lifecycle into a system that’s not only efficient but also context-aware and sustainable. Instead of treating code quality as a post-build checkpoint, Qodo embeds it directly into the development flow.

The Code Review Lifecycle is a continuous process that does more than just catch bugs. It’s about enforcing architectural consistency, maintaining institutional knowledge, and reducing long-term risks. Qodo strengthens this lifecycle through four key pillars:

- Shift-Left Excellence: Detects issues in the IDE or CLI before they reach the PR, preventing expensive late-stage fixes. For example, when adding a new caching layer, Qodo flagged potential race conditions and memory leaks before I even committed the code.

- Enterprise-Grade PR Review: Provides scalable, context-aware pull request analysis, factoring in cross-repo dependencies and historical patterns. I’ve seen it catch subtle issues like a misaligned API contract between services or a function that bypassed internal authentication rules, problems that traditional code metrics or linters would have missed.

- One-Click Remediation: Suggests or applies fixes immediately, turning insights into actionable changes without back-and-forth. In one case, Qodo replaced a deprecated logging library in an AI-generated module with our approved company-standard library, eliminating manual edits and reducing review cycles.

- Institutional Memory: Maps knowledge across repositories, recognizes historical patterns, tracks dependencies, and analyzes contextual relationships to detect high-risk code accurately.

Now, I want to take a hands-on approach to show how Qodo actually helps maintain code quality through context-aware review. I’ll walk through real examples from my workflow to demonstrate how the tool catches problems in the risky last mile of code that AI coding tools often miss.

Comprehensive Code Review Across the SDLC

I’ve been exploring how to maintain high code quality throughout the software development lifecycle using Qodo. The platform is designed to focus on the last mile of code, the highest-risk portion that standard metrics and AI code generators often miss.

In my workflow, Qodo integrates at every stage: from local development to pull requests, providing context-aware insights, historical pattern recognition, and one-click remediation to ensure production-ready code.

For this walkthrough, I used Firefly III, an open-source personal finance manager built on a Laravel backend. It’s a powerful tool for tracking income, expenses, and budgets, and it exposes a REST API for integrations. Given its financial nature, code quality is critical; even small logic flaws or inconsistencies could lead to data corruption or inaccurate reporting.

To see how Qodo helps enforce production-grade quality, I ran a set of prompts directly on the Firefly III codebase.

Local Development / Shift-Left

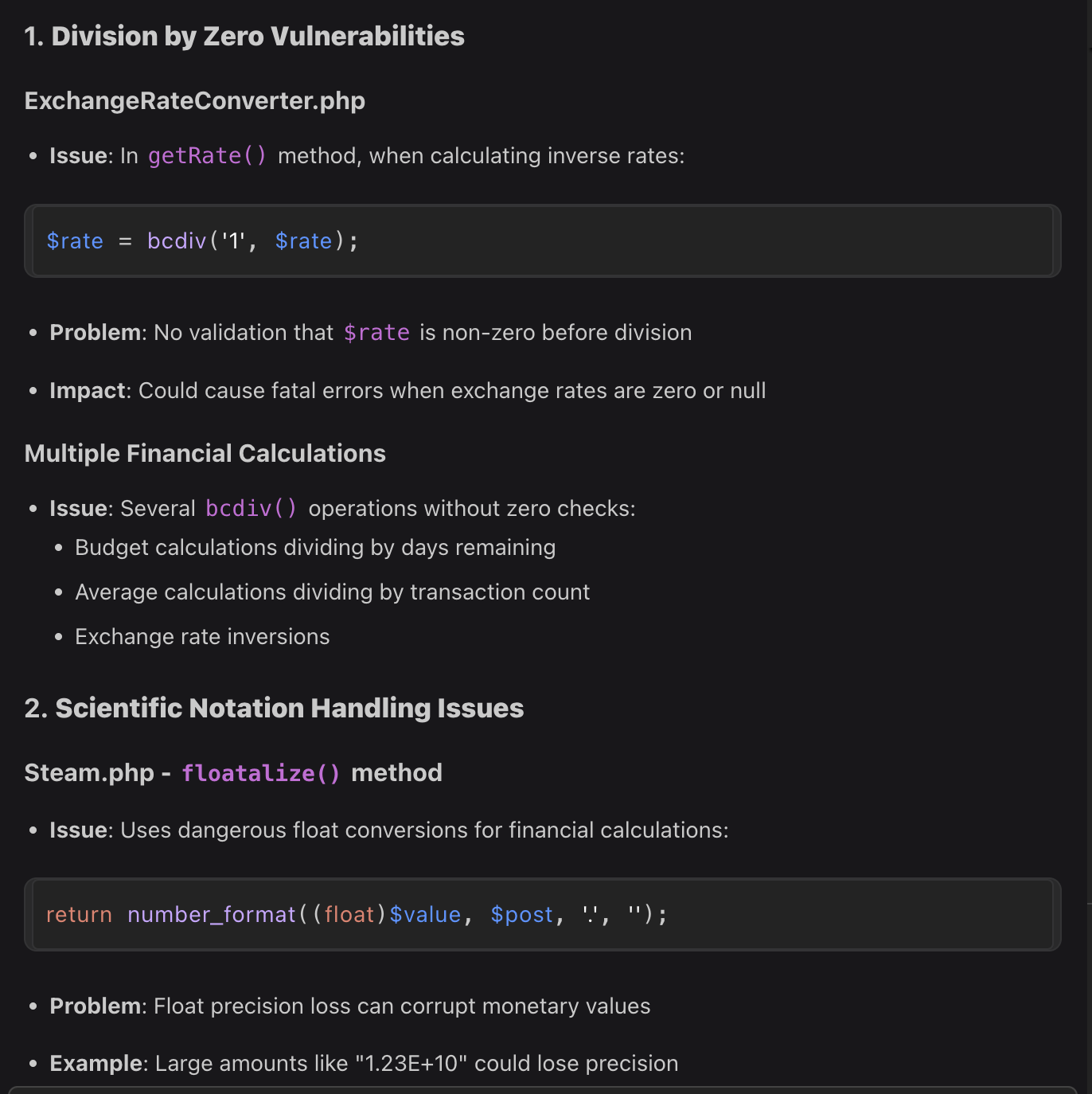

My first prompt was: “Analyze all backend functions for logical errors, edge cases, and incorrect calculations.”

Qodo immediately flagged several hidden risks. It pointed out division-by-zero vulnerabilities in ExchangeRateConverter.php, precision issues in Steam.php when handling scientific notation in amounts, and validation gaps in piggy bank targets where negative values were allowed. Here’s a snapshot:

It also detected type juggling problems in virtual balance arithmetic and subtle date boundary issues in controllers that could miscount transactions around midnight. These weren’t surface-level bugs; they were deeper logical flaws that could distort financial records.
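To show the kind of guard these findings call for, here is a small Python sketch of amount parsing and currency conversion that refuses bad input instead of guessing. Firefly III itself is PHP, so this is not its code: `parse_amount`, `convert`, `convert_back`, and `InvalidRateError` are hypothetical names, and rounding to two decimal places is an assumption made for the example.

```python
from decimal import Decimal, InvalidOperation, ROUND_HALF_EVEN

CENTS = Decimal("0.01")  # assumed precision for the example


class InvalidRateError(ValueError):
    """Raised when an exchange rate is zero, negative, or not a finite number."""


def parse_amount(raw: str) -> Decimal:
    """Parse an amount exactly; scientific notation such as "1.5E+3" is accepted."""
    try:
        amount = Decimal(raw)
    except InvalidOperation as exc:
        raise ValueError(f"not a valid amount: {raw!r}") from exc
    if not amount.is_finite():
        raise ValueError(f"amount must be finite: {raw!r}")
    return amount


def _check_rate(rate: Decimal) -> None:
    # Check finiteness first: ordering comparisons on a NaN Decimal raise.
    if not rate.is_finite() or rate <= 0:
        raise InvalidRateError(f"invalid exchange rate: {rate}")


def convert(amount: Decimal, rate: Decimal) -> Decimal:
    """Convert using amount * rate, failing loudly on an invalid rate."""
    _check_rate(rate)
    return (amount * rate).quantize(CENTS, rounding=ROUND_HALF_EVEN)


def convert_back(amount: Decimal, rate: Decimal) -> Decimal:
    """Inverse conversion; the rate check is what prevents division by zero."""
    _check_rate(rate)
    return (amount / rate).quantize(CENTS, rounding=ROUND_HALF_EVEN)
```

The design choice worth noting is that an invalid rate raises an error that the caller has to handle. A fallback value would keep the request alive but would quietly produce a wrong balance.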

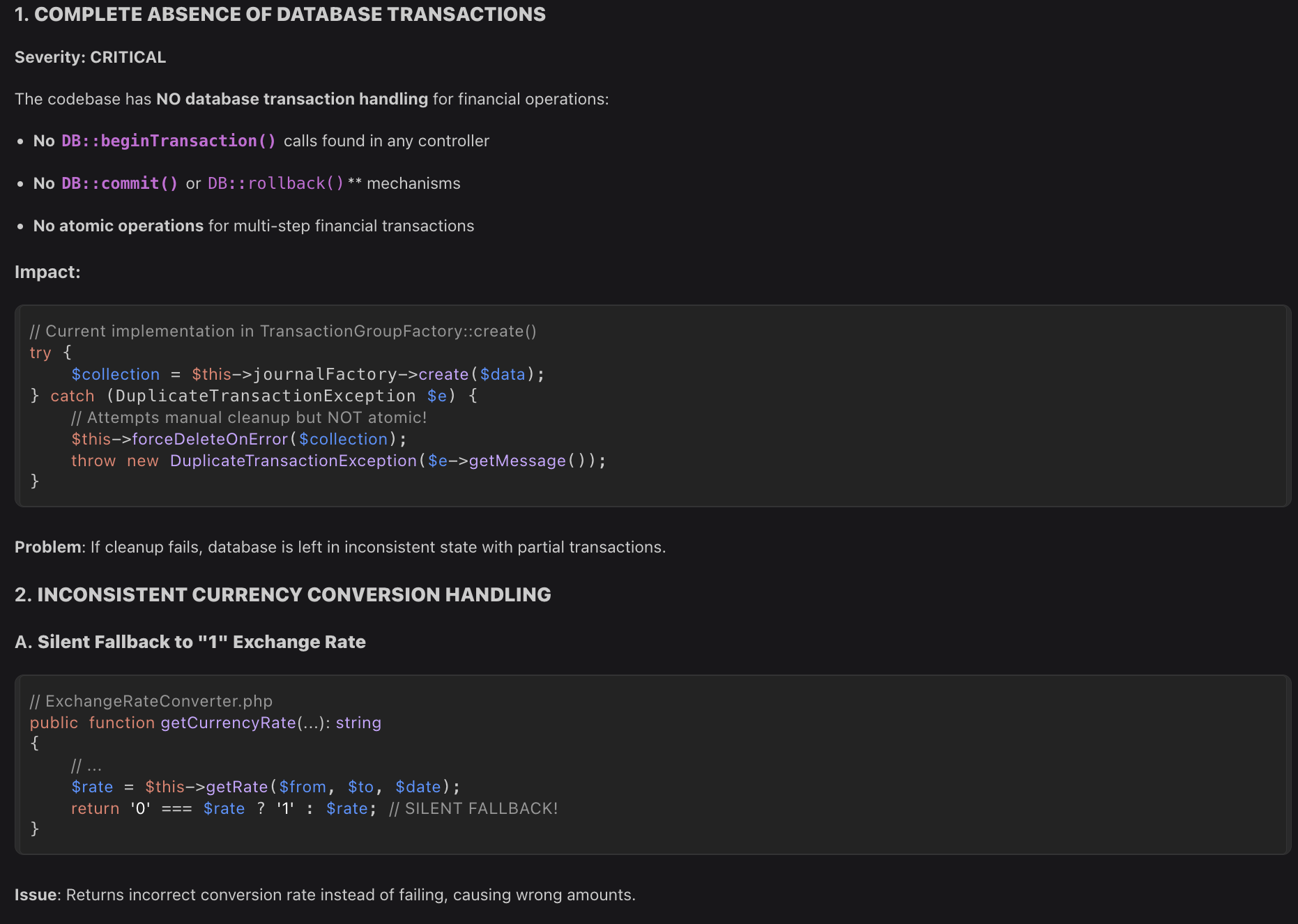

Next, I asked: “Check that transaction and budget endpoints handle rollback correctly on failures and identify inconsistent handling of currency conversions or category assignments.”

Here, Qodo revealed that Firefly III lacked proper database transaction handling altogether: no beginTransaction, commit, or rollback for important financial operations. The accompanying screenshot shows Qodo’s output.

It revealed quality gaps such as currency conversion logic silently defaulting to 1 when exchange rates were invalid, leading to inaccurate results. In category assignments, missing synchronization introduced race conditions that caused inconsistent mappings.

Budget checks also lacked atomic validation, which meant overspending scenarios could slip through undetected. These findings underscore how issues in concurrency handling and error management directly affect the reliability and maintainability of financial code.
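The pattern being asked for here, all-or-nothing writes with the balance check performed inside the same transaction, can be sketched with Python’s built-in `sqlite3` module. This is an illustration under assumptions, not Firefly III’s schema or Qodo’s generated fix: the `accounts` table, the `balance_cents` column, and the `transfer` function are invented for the example.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, source_id: int, dest_id: int, amount_cents: int) -> None:
    """Move funds atomically: both updates commit together or neither does."""
    if amount_cents <= 0:
        raise ValueError("amount_cents must be positive")
    # Used as a context manager, the connection commits on success and
    # rolls back if an exception escapes the block.
    with conn:
        cur = conn.execute(
            "UPDATE accounts SET balance_cents = balance_cents - ? "
            "WHERE id = ? AND balance_cents >= ?",
            (amount_cents, source_id, amount_cents),
        )
        # The balance check lives in the UPDATE itself, so there is no gap
        # between validating the balance and debiting it.
        if cur.rowcount != 1:
            raise ValueError("insufficient funds or unknown source account")
        cur = conn.execute(
            "UPDATE accounts SET balance_cents = balance_cents + ? WHERE id = ?",
            (amount_cents, dest_id),
        )
        if cur.rowcount != 1:
            raise ValueError("unknown destination account")
```

If the second update fails, the debit from the first is rolled back with it, so the ledger never shows money leaving one account without arriving in another.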

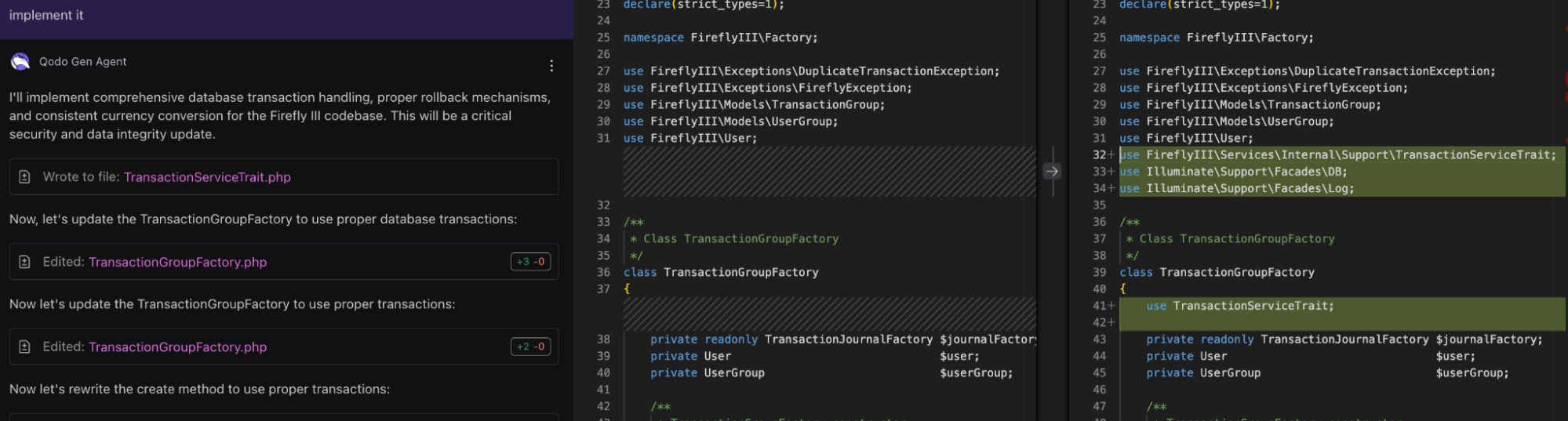

Then I prompted: “Implement comprehensive database transaction handling, rollback mechanisms, and consistent currency conversion for the Firefly III codebase.”

Qodo responded by generating a TransactionServiceTrait.php, updating TransactionGroupFactory.php, and refactoring key methods to include full transaction handling with rollback support. Here’s a snapshot:

It also enforced consistent error handling and logging, so invalid states wouldn’t slip by silently. This step turned abstract risks into concrete fixes that aligned with Laravel best practices.

Pull Request Review

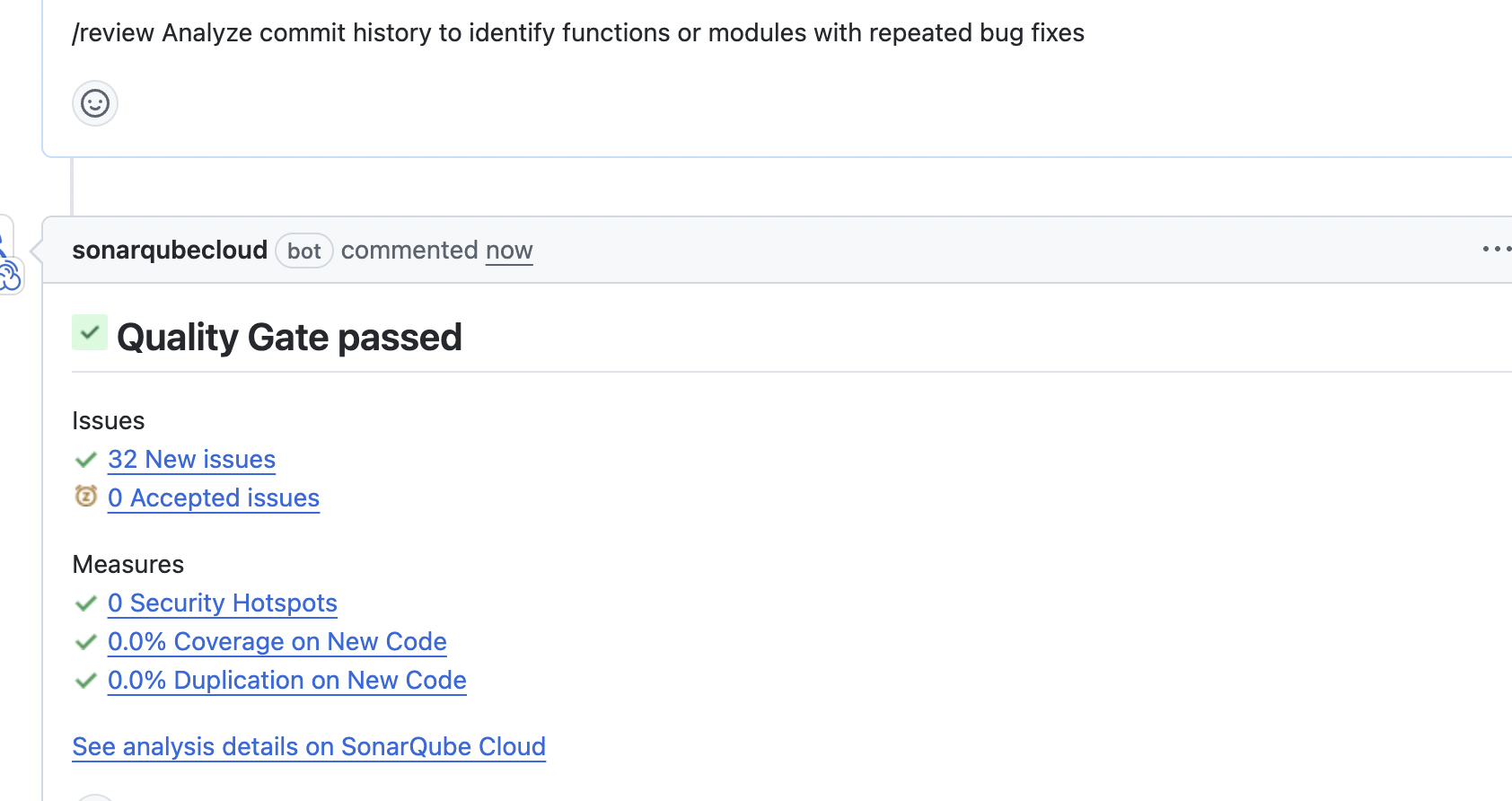

Once I opened a pull request for the Firefly III changes, I used Qodo Merge to validate the code in the context of the entire repository history. Instead of just pointing out surface-level diffs, Qodo Merge brought in architectural and historical context.

It flagged that the updated TransactionGroupFactory.php was still referencing an outdated helper, which had already been replaced in other parts of the codebase. Without this catch, the PR would have reintroduced inconsistencies in how transactions were grouped.

Qodo reported that the Quality Gate passed, but it also flagged 32 issues worth addressing. This is where code quality checks added real value: the gate ensured we weren’t blocked, but the additional insights highlighted how seemingly small problems can undermine long-term maintainability.

For example, trailing whitespace and inconsistent list formatting might appear trivial, yet they directly affect consistency across the codebase. When every developer follows a uniform standard, reviews move faster, diffs stay cleaner, and debugging becomes less error-prone. By surfacing these issues early, Qodo prevented “style drift” that often leads to fragmented conventions and higher onboarding costs for new contributors.

Beyond style, Qodo’s categorization into maintainability and convention risks helped prioritize fixes. A misaligned list wasn’t just about aesthetics; it increased cognitive load for reviewers, making it harder to spot deeper logic flaws in the same function. In that sense, Qodo’s review wasn’t just about passing a quality gate but about ensuring the code was easier to read, safer to modify, and more consistent with team practices.

One-Click Remediation

Qodo didn’t just report problems. With a single click, it applied the correct fixes: updating the helper function across all affected modules, aligning state handling with the latest API schema, and removing sensitive logging globally. What could have taken multiple commits and hours of manual work was done automatically, ensuring the code was production-ready.

By applying Qodo hands-on across the SDLC, I was able to catch high-risk code early, validate it in context, and remediate issues immediately.

Also, I saw a strong emphasis on consistency. Variable naming, error handling, and async patterns were applied uniformly across the backend and frontend, which made reviewing and modifying the code straightforward. The generated functions were small and focused, with clear docstrings and comments, which improved readability and simplified debugging.

Moreover, commands like /generate-best-practices allow teams to generate a best_practices.md file that reflects their standards (naming, modularity, docstrings, etc.). Also, many tools in Qodo are configurable (which repos to include in context, how many suggestions, etc.) so that feedback aligns with team style.

Conclusion

In 2025, I’ve seen firsthand that code quality can’t be treated as an afterthought, especially as AI accelerates how much code teams produce. Generating working code is no longer the challenge; ensuring that it’s readable, maintainable, and production-ready is. Traditional static analysis and unit testing are useful, but they don’t scale to the complexity and velocity of modern development.

The difference now comes from applying AI intelligently within the review cycle. With tools like Qodo, AI moves beyond generating snippets to enforcing team-wide standards, surfacing risks in context, and addressing the hidden code that typically causes production issues.

In my experience as a technical lead, combining metrics like defect density, code churn, and test effectiveness with AI-driven contextual reviews creates a feedback loop that improves code quality over time, rather than just keeping pace with growing workloads. It’s this integration of human insight, organizational context, and intelligent automation that turns code review into a true driver of production-ready quality.

FAQs

What are the key metrics to measure code quality in 2025?

Metrics such as defect density, code churn, cyclomatic complexity, maintainability index, test coverage, and test effectiveness provide actionable insights into code reliability, maintainability, and overall quality.

How can teams ensure AI-generated code maintains high quality?

Teams need structured validation, automated testing, static analysis, and CI/CD integration to catch gaps in coverage, boundary checks, and unintended side effects, ensuring AI-assisted code meets team standards.

Why are readability and maintainability important for modern software development?

Readable and maintainable code allows developers to quickly understand, extend, and debug code months after it was written, reducing long-term technical debt and accelerating development cycles.

How does Qodo help enforce coding standards and reduce review overhead?

Qodo provides AI-driven suggestions within pull requests, highlights potential security issues, enforces team best practices, and uses retrieval-augmented generation to reference prior code patterns for consistency.

What unique features make Qodo effective for AI-assisted code quality?

Features like the /improve tool, contextual PR suggestions, best practices scaffolding, and configurable review guidance allow teams to maintain architectural consistency, improve test coverage, and reduce hidden technical debt.