Best CodeRabbit Alternatives for Developers in 2025

TL;DR

- CodeRabbit is an AI-powered code review tool suited to teams looking to speed up PR reviews. It focuses on the lines changed in a pull request, which helps reduce noise.

- Qodo Merge can look beyond the pull request (PR) under review by using Retrieval-Augmented Generation (RAG). It searches your configured repositories for contextually relevant code segments, enriching PR insights and improving review accuracy.

- Greptile is a context-aware AI code reviewer that supports GitHub and GitHub Enterprise. It provides full-repo insights using a dependency graph and is best suited to teams working across multiple repositories, which can be listed in the patternsRepo field of greptile.json.

- Korbit delivers clear code review feedback designed to support learning and consistent code quality. It offers adaptive review modes, progress tracking, and detailed insights through its dashboard, helping teams visualize issue trends and review metrics and identify focus areas in categories such as performance and readability.

What is CodeRabbit?

CodeRabbit is an AI-powered code review assistant designed to enhance workflows in GitHub and GitLab. It provides relevant feedback on pull requests (PRs), with features like PR summaries and detailed line-by-line comments. This helps developers identify bugs, style issues, and gaps in test coverage. By automating repetitive review tasks, CodeRabbit aims to improve code quality and consistency for teams.

It is beneficial for small to mid-sized teams, fast-moving startups, and open-source projects where review bandwidth may be limited. With features such as automatic PR summaries and a conversational reviewer interface within the PR, CodeRabbit supports onboarding, team collaboration, and early detection of common errors.

While CodeRabbit offers focused PR feedback and a simple setup, teams working with more complex architectures or organization-specific workflows may benefit from exploring additional tools. Alternatives can provide deeper codebase context and suggestions that align closely with team conventions and development practices.

With this in mind, let’s look at some alternatives that could add further capabilities to your review workflows, regardless of your team size or stage of growth.

Why and When to Look for CodeRabbit Alternatives

With AI code review tools, developers can now automate much of the review process while preserving quality and context. CodeRabbit, for instance, works well for straightforward file-by-file reviews and for catching common issues. It integrates smoothly with version control platforms like GitHub, adding AI-generated comments directly to PRs. You can also pair it with linters such as Semgrep by adding a semgrep.yml file and configuring CodeRabbit to use it, which helps spot unsafe patterns or missing standard checks. For small teams or simple codebases, that is usually enough.
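To make "unsafe patterns" concrete, here is a rough Python stand-in for the kind of rule a semgrep.yml file might contain. It is not Semgrep and not CodeRabbit's integration. It walks a file's syntax tree with the standard ast module and flags bare calls to eval or exec. The rule set (UNSAFE_CALLS) and the helper name find_unsafe_calls are assumptions made for illustration.

```python
import ast

# Hypothetical rule set for this sketch: bare calls treated as unsafe.
UNSAFE_CALLS = {"eval", "exec"}


def find_unsafe_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, function name) for each bare eval/exec call."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Match calls like eval(...) where the callee is a plain name.
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in UNSAFE_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    # ast.walk is breadth-first, so sort to report findings in line order.
    return sorted(findings)


snippet = '''
user_expr = input()
result = eval(user_expr)
'''
print(find_unsafe_calls(snippet))  # [(3, 'eval')]
```

A real Semgrep rule can match richer shapes, such as metavariables and specific argument patterns. The principle is the same: match a syntactic pattern and report where it occurs.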

As your codebase evolves, with more services, modules, and contributors, you might find yourself needing a code review tool that is strong at:

- Cutting through the noise and surfacing only high-impact comments without any tuning.

- Learning from your team’s feedback and adapting quickly.

- Giving more context-aware feedback without overwhelming reviewers.

Before jumping into alternatives, let’s take a closer look at what CodeRabbit does well, and where it might need a bit more support.

Pros:

- Easy GitHub Integration: Integrates with GitHub PRs, automatically adding AI-generated comments and summaries.

- Effective for General Code Quality Checks: Capable of identifying issues such as redundant code, style inconsistencies, and unused variables. That said, it may require additional tuning to catch more significant issues.

- Feedback-Driven Learning: Adapts over time based on reviewer corrections, reducing repetitive false positives.

Cons:

- Diff-Only Analysis: Reviews only the lines changed in a PR, so it can miss the impact of changes across services or shared code.

- Summary Quality May Vary: PR summaries can occasionally be lengthy or less precise.

- Tuning Required: Needs fine-tuning to produce better suggestions.

Best CodeRabbit Alternatives

When your codebase spans multiple services, languages, and deployment environments, you need tools that go deeper than line-by-line diff checks. The alternatives I’m about to share offer rich context analysis, contextual retrieval capabilities, strong integrations, and customizable rules to fit a complex, enterprise-grade codebase.

Let’s get started.

1. Qodo Merge

Qodo Merge enhances the code review process by offering rich post-diff interactivity directly within PRs. Developers can apply suggestions inline, trigger re-analysis, or request additional improvements, all without leaving the PR. It supports component-level actions like generating tests or documentation for specific functions and classes, making the review process more focused and actionable.

The /scan_repo_discussions feature further strengthens reviews by analyzing past PR discussions and surfacing team-specific best practices in a best_practices.md file, which keeps feedback relevant and reduces noise. Qodo Merge integrates with IDEs like VS Code and JetBrains and fits into CI pipelines, for example by reviewing PRs through GitHub Actions. Teams can go beyond basic formatting rules by enforcing custom practices, such as requiring safe query builders to prevent security risks.
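As an illustration of the "safe query builder" practice, the sketch below contrasts a string-formatted SQL query with a parameterized one. Python's built-in sqlite3 module stands in for a query builder. The table, data, and function names are hypothetical. The point is the coding pattern such a team rule would push reviewers toward, not Qodo's rule syntax.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # String formatting splices user input into the SQL text: injectable.
    query = f"SELECT id FROM users WHERE name = '{username}' ORDER BY id"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Placeholder binding: the driver passes username as data, never as SQL.
    query = "SELECT id FROM users WHERE name = ? ORDER BY id"
    return conn.execute(query, (username,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # [(1,), (2,)]  every row leaks
print(find_user_safe(conn, payload))    # []
```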

When I tested it on a utility module, Qodo Merge’s PR agent for automated code reviews suggested cleaner and more maintainable code. For example, you can see below that it improved a nested list flattening function by replacing manual indexing with more Pythonic constructs:

Original code:

```python
def flattenNestedList(input):
    flat_list = []
    for i in range(len(input)):
        if input[i] != []:
            for j in range(len(input[i])):
                flat_list.append(input[i][j])
    return flat_list
```

Qodo’s suggestion:

```python
def flattenNestedList(input):
    flat_list = []
    for sublist in input:
        if sublist:  # This checks if sublist is not empty
            flat_list.extend(sublist)
    return flat_list
```

The revision above eliminated redundant range() calls and enhanced readability by using extend() and meaningful variable names. It’s a subtle but impactful change, especially important when similar patterns repeat across services.
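For comparison, the standard library can do the same one-level flattening without an explicit loop. This is my own variant, not Qodo's output, and flatten_nested_list is a hypothetical name. It also renames the parameter so it no longer shadows the built-in input().

```python
from itertools import chain


def flatten_nested_list(nested):
    """Flatten one level of nesting; empty sublists contribute nothing."""
    return list(chain.from_iterable(nested))


print(flatten_nested_list([[1, 2], [], [3]]))  # [1, 2, 3]
```

chain.from_iterable yields items lazily from each sublist in turn. Empty sublists simply produce no items, so the explicit emptiness check is unnecessary. Like the original, it flattens only one level.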

Pros:

- Context-Aware Feedback: Analyzes entire repositories, not just diffs, to deliver deeper, more relevant suggestions.

- Customizable Best Practices: Learns from team-approved patterns and lets organizations define coding standards at scale.

- Enterprise-Ready: Offers RBAC, SAML SSO, audit logs, and on-premise or air-gapped deployment options for secure environments.

Cons:

- Its suggestions can be quite descriptive and sometimes run long, even for small changes.

- It may be overkill for solo developers working on small codebases, where the volume of suggestions can feel overwhelming.

Pricing: Free for individuals and open-source projects, with 250 messages per user. The Pro plan is $30/user/month and includes advanced features like pre-PR reviews and full access to the Qodo platform. For enterprise plans, contact Qodo.

2. Greptile

Greptile is an AI-powered code review tool that analyzes pull requests with a broader understanding of your codebase. It builds an internal graph of functions, classes, and file relationships to provide context-aware suggestions during reviews.
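To show what such a graph captures, here is a minimal sketch. It maps each function in a single Python module to the plain function names it calls, then inverts that map to find callers. This is a toy built on stated assumptions, not Greptile's implementation. It ignores method calls, imports, classes, and cross-file resolution, all of which a real code graph would need. build_call_graph and callers_of are hypothetical names.

```python
import ast


def build_call_graph(source: str) -> dict[str, set[str]]:
    """Map each function defined in `source` to the plain names it calls."""
    graph: dict[str, set[str]] = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Collect callees written as bare names, e.g. parse(...);
            # attribute calls such as raw.split(...) are skipped here.
            graph[node.name] = {
                call.func.id
                for call in ast.walk(node)
                if isinstance(call, ast.Call) and isinstance(call.func, ast.Name)
            }
    return graph


def callers_of(graph: dict[str, set[str]], target: str) -> set[str]:
    """Invert the graph: which functions call `target`?"""
    return {fn for fn, callees in graph.items() if target in callees}


module = '''
def parse(raw):
    return raw.split(",")

def load(path):
    return parse(open(path).read())

def report(path):
    rows = load(path)
    return len(rows)
'''
graph = build_call_graph(module)
print(sorted(graph["load"]))       # ['open', 'parse']
print(callers_of(graph, "parse"))  # {'load'}
```

If a PR changes parse, a diff-only reviewer sees just that function. A graph lookup also surfaces load as an affected caller.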

It supports Pattern Repos, which let you include related repositories in its analysis. For example, when reviewing a backend service, you can connect the frontend repository so the reviewer can reference frontend code while reviewing backend changes.

Teams can rate Greptile's suggestions with thumbs-up or thumbs-down reactions. Greptile uses this feedback in a reinforcement-learning style to learn over time and focus on the types of feedback that matter most.
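A much simpler mechanism than reinforcement learning can illustrate this feedback loop: count reactions per comment category and stop posting categories that reviewers keep rejecting. The sketch below is that counting heuristic, not Greptile's algorithm. The FeedbackFilter class, the category labels, and the min_votes and threshold defaults are all assumptions.

```python
from collections import defaultdict


class FeedbackFilter:
    """Suppress comment categories that reviewers consistently downvote.

    A counting heuristic for illustration only; min_votes and threshold
    are arbitrary assumptions.
    """

    def __init__(self, min_votes: int = 5, threshold: float = 0.3):
        self.min_votes = min_votes
        self.threshold = threshold
        self.up = defaultdict(int)
        self.down = defaultdict(int)

    def record(self, category: str, thumbs_up: bool) -> None:
        # Tally one reaction for the given comment category.
        if thumbs_up:
            self.up[category] += 1
        else:
            self.down[category] += 1

    def should_post(self, category: str) -> bool:
        total = self.up[category] + self.down[category]
        if total < self.min_votes:
            return True  # Not enough signal yet; keep posting.
        return self.up[category] / total >= self.threshold


feedback = FeedbackFilter()
for _ in range(6):
    feedback.record("naming-style", thumbs_up=False)
feedback.record("null-check", thumbs_up=True)
print(feedback.should_post("naming-style"))  # False
print(feedback.should_post("null-check"))    # True
```

The min_votes guard keeps the filter from silencing a category based on only one or two reactions.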

Pros:

- Full-codebase context: Evaluates code changes against the whole codebase, not just the diff.

- Reinforcement learning: Learns from your thumbs-up and thumbs-down feedback.

- Broad language and platform coverage: Supports 30+ languages; GitHub & GitLab (cloud and enterprise).

Cons:

- Cost scales with headcount: Per-developer pricing can add up for very large teams.

- Initial setup and indexing time: Big monorepos require first-time indexing and greptile.json tuning.

- Limited stylistic linting: Offers only basic style checks.

Pricing: A free trial is available. The paid plan is $30 per active developer per month with unlimited repos and PR reviews, and custom pricing is available for enterprises.

3. Korbit

Korbit offers mentorship-style AI feedback that helps developers identify issues in areas such as readability, security, performance, and functionality. Its GitHub integration is simple, and it provides detailed explanations that teach best practices. However, it doesn't scale well for complex systems and doesn't offer deep CI/CD or compliance features.

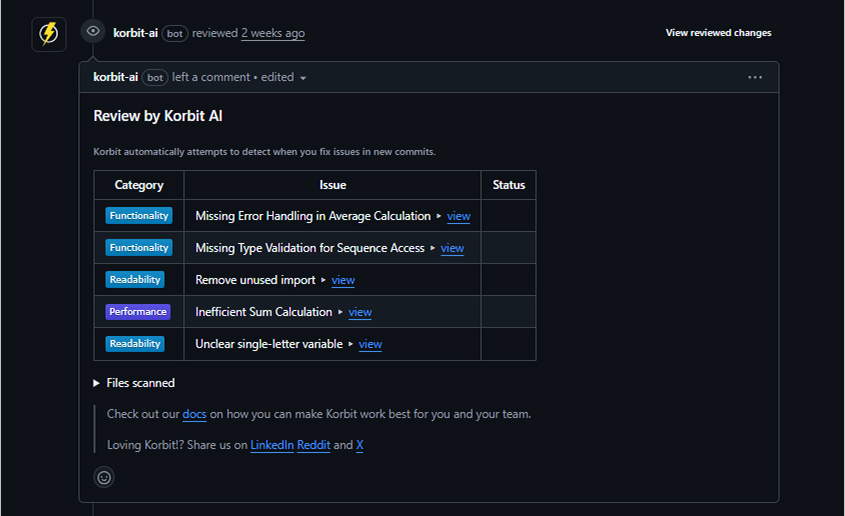

While reviewing a Python utility module, I integrated Korbit AI into my GitHub repo. Upon pushing the code, Korbit began by summarizing its findings in a structured format, categorizing them across functionality, readability, and performance. Here’s the interface it provided:

One example was this code block below, which was flagged by Korbit’s PR review agent.

```python
def calcAverage(l):
    total = 0
    for x in l:
        total += x
    return total / len(l)  # no zero division check
```

Korbit AI flagged the lack of error handling for empty lists and non-numeric values. Here’s the suggestion it left:

"This will raise a ZeroDivisionError for empty lists and possibly a TypeError for non-numeric elements."

It recommended this fix:

```python
def calcAverage(l):
    if not l:
        raise ValueError("Cannot calculate average of empty list")
    if not all(isinstance(x, (int, float)) for x in l):
        raise TypeError("All elements must be numeric")
    return sum(l) / len(l)
```

The bot didn’t just highlight the issue; it explained why it needs to be fixed.
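Python's standard library already covers much of this validation. As an alternative to the hand-written checks (my own swap, not Korbit's suggestion), statistics.mean raises statistics.StatisticsError for empty input and TypeError for non-numeric elements such as strings. StatisticsError is a subclass of ValueError. calc_average is a hypothetical name.

```python
import statistics


def calc_average(values):
    """Mean of a non-empty sequence of numbers.

    statistics.mean raises statistics.StatisticsError (a ValueError
    subclass) on empty input and TypeError on non-numeric elements.
    """
    return statistics.mean(values)


print(calc_average([2, 4, 9]))  # 5
```

One design difference is that statistics.mean also accepts Fraction and Decimal values, which Korbit's isinstance check would reject. Choose based on which inputs the module should allow.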

Pros:

- GitHub Integration: Integrates smoothly with GitHub pull request workflows.

- Dashboards: Provides visual reports on issue types, review trends, and code quality metrics to help teams monitor progress and identify areas for improvement.

- Team Activity Tracking: Enables filtering by developer, repository, or time range to support performance insights.

Cons:

- Visual dashboards and metrics might feel unnecessary for smaller teams focused solely on fast code reviews.

- May miss deeper logic issues or flag minor ones unnecessarily, increasing noise.

Pricing: Free starter tier; Pro for $18/user/year, and Enterprise pricing available on request.

Why Choose Qodo Merge?

Qodo cuts through the noise by surfacing the most relevant context for each PR. Developers can apply suggestions inline, re-analyze code after updates, or request additional improvements within the PR itself. It also enables component-level actions, like generating tests or docs for specific classes or functions, helping teams move faster and with more context.

The feature that I liked the most is Qodo’s ability to analyze historical PR discussions. Using the /scan_repo_discussions command, it surfaces team-specific best practices in the best_practices.md file, ensuring suggestions align with how your team actually works, not generic linting rules.

Here’s a comparison table of four AI code review tools I’ve evaluated with multiple teams and contexts:

| Features | CodeRabbit | Qodo Merge | Greptile | Korbit |
| --- | --- | --- | --- | --- |
| Context Analysis | PR diffs only | Full repo & multi-service | Full repo + dependency graph | Full repo |
| Supported Git Platforms | GitHub Cloud, GitHub Enterprise, GitLab Cloud, GitLab Enterprise, Bitbucket Cloud, Bitbucket DC | GitHub Cloud, GitHub Enterprise, GitLab Cloud, GitLab Enterprise, Bitbucket Cloud, Bitbucket DC, Azure DevOps, and more | GitHub Cloud, GitHub Enterprise | GitHub Cloud, GitLab Cloud, Bitbucket Cloud, self-managed Git servers |
| Integrations | GitHub, Jira, Linear | GitHub, Jira, Linear | Conversations in PR | None |
| Compliance | Audit logs; no SAML; no on-prem | Audit logs, SAML SSO, on-prem | No on-prem; basic audit logs | Audit logs, SAML SSO, on-prem |
| Best Fit | PR-level automation | Code review with full-context suggestions | Large, multi-service codebases | Mid-size teams and full-repo analysis |
| Pricing | Free tier available; Pro: $24/user/month; Enterprise: custom pricing | Developer free tier: up to 250 messages/tools/month; Teams: $30/user/month; Enterprise: $45/user/month | Free trial available; Pro: $30/user/month; Enterprise: custom pricing | Free tier available; Pro: $9/user/year; Enterprise: custom pricing |
| Enterprise Deployment (On-Prem / Air-Gapped) | None | Fully supported | None | None |
| Latency Score | 8/10 | 9/10 | 6/10 | 7/10 |

If you're serious about shipping faster without compromising reliability or oversight, it's time to move beyond patchwork code reviews, and I strongly recommend adopting the Qodo platform for your enterprise.

Conclusion

In this article, we explored three CodeRabbit alternatives. Ultimately, the choice of code review tool should be based on your team's size, the complexity of your codebase, and the speed at which you are building and shipping features.

For large enterprises seeking a robust and scalable solution for code quality, Qodo Merge stands out as the best option.

FAQs

What is the best CodeRabbit alternative?

The best alternative depends on your needs. Qodo Merge is ideal for complex, multi-service codebases with enterprise requirements. Greptile suits teams that want full-codebase context across multiple repositories, and Korbit excels at mentoring new developers.

What is the best CodeRabbit alternative for an enterprise?

Qodo Merge is the top choice for enterprises. Its full-repo analysis and deep integrations with CI workflows and version control systems make it well-suited for large engineering teams working with complex codebases.