Best Cursor Alternatives For Engineers in 2025

TLDR

- Cursor can be used for both small and large projects, but working across multi-repo or deeply interconnected systems, like microservices architectures or monorepos, may require manual effort to maintain context, such as adjusting focus settings or opening relevant files.

- For large-scale projects, some teams prefer tools with multi-repo support, semantic search, and built-in review automation for CI workflows.

- The three Cursor alternatives covered here are Qodo, Tabnine, and OpenAI Codex. Tabnine focuses on privacy-first, fast code completions, making it suitable for individual developers and small teams. OpenAI Codex is often used to build custom AI-powered development tools, especially for internal automation. Qodo, on the other hand, is designed around enterprise-scale workflows.

- Qodo, an AI code assistant, is a strong Cursor alternative for teams working in distributed systems. It supports Git-aware indexing, integrates with CI/CD pipelines, and provides traceable AI-generated code reviews, making it suitable for structured, multi-repo workflows.

Cursor is an AI-first IDE known for its code completions, GitHub Copilot integration (via plugin), and responsive interface. It’s lightweight, requires minimal setup, and includes a built-in assistant that handles local development tasks well.

As a Senior Technical Lead, I used Cursor extensively in a side project built around a microservice-based billing engine. For smaller, isolated repos, where each service lives in its own codebase with limited cross-dependencies, Cursor performed well.

However, in a client project involving a large monorepo with shared libraries and service dependencies, it was challenging to surface context beyond the currently open file (referred to as the “active file”). Project-specific patterns distributed across folders were harder to track, and support for private models or deeper CI/CD integration was limited.

These challenges are common across many AI development tools.

In a 2025 survey by Qodo, 65% of developers who use AI for refactoring, and approximately 60% of those who use it for testing, writing, or reviewing code, reported that their AI assistant misses relevant context. Even among those who feel AI improves code quality, 53% still want better contextual understanding.

Although Cursor automatically retrieves relevant context, enterprise teams often need deeper configurability and consistency in how context is structured across large-scale codebases and review pipelines.

This pushed me to explore other tools that could operate across complex repo structures, preserve context in multi-file operations, and integrate into our internal dev workflows. A breakdown of the best Cursor AI alternatives I’ve personally tested in high-scale, production-facing environments follows.

Why Look for Cursor AI Alternatives?

I’ve used Cursor in a few real-world setups over the past year. One was a fast MVP for a billing API in Node.js, where it helped scaffold routes, handle basic error cases, and speed up initial code generation. Another was during an enterprise integration project where we had to wire multiple internal services for auth, logging, and background jobs.

While it performs well in isolated, fast-moving environments, in my experience, several limitations consistently surfaced once the engineering complexity increased. Here are some reasons why I’m looking for alternatives to Cursor:

Single-File Focus for Context

Cursor primarily generates responses based on the currently open file (the “active file”). For tasks that require broader context, like multi-file refactors, architectural reasoning, or tracing logic across modules, developers often need to manually open additional files or adjust scope, which can slow down workflows.

Integration Fatigue and Rising Usage-Based Costs

Cursor supports efficient local development and works well within its native environment. For teams that rely on custom CI/CD workflows, API-based automation, or tight integration with tools like Renovate or Danger, alternative editors may offer more built-in flexibility for pipeline-level customization.

As one Reddit user pointed out: “I’ve been using Cursor as my AI-powered IDE, and while I really like its features, the cost is starting to add up, especially with usage-based pricing for premium models like Claude 3.5 Sonnet.”

That reflects my own experience when model usage spiked during code review cycles.

Cursor currently supports both Claude 3.5 Sonnet and GPT-4, which gives developers access to top-tier LLMs, though the cost structure remains usage-based. This dual-model support is an advantage over tools that lock users into a single provider.

AI Suggestions Can Be Repetitive or Sticky

Cursor’s built-in AI assistant sometimes reuses outdated context or repeats fix patterns from earlier suggestions. For example, when correcting a language error, it may keep suggesting fixes based on an older version of the code, even after the issue has changed. This can lead to redundant or stale completions unless the context is manually refreshed.

Debugging Across Distributed Systems Lacked Depth

In our payments pipeline, where a Kafka topic triggered chained events across three services, debugging needed a high-level understanding of event schemas, correlation IDs, and async error handling.

Cursor only handled local stack traces and didn’t recognize async flows across services or help reconstruct the event path. IDEs like JetBrains Gateway or VS Code with custom debugging adapters gave us far better control when stepping through cross-service events.
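To make "reconstructing the event path" concrete, here is a minimal sketch of the idea, assuming every service logs events that carry a shared correlation ID and a timestamp. The `Event` fields, service names, and sample data are hypothetical and are not taken from the project described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    correlation_id: str  # ID propagated across services (assumed field name)
    service: str
    name: str
    timestamp_ms: int


def reconstruct_paths(events):
    """Group events by correlation ID and order each group by timestamp."""
    paths = defaultdict(list)
    for event in events:
        paths[event.correlation_id].append(event)
    return {
        cid: sorted(group, key=lambda e: e.timestamp_ms)
        for cid, group in paths.items()
    }


# Hypothetical events, arriving out of order from three services.
events = [
    Event("txn-42", "ledger", "EntryPosted", 1030),
    Event("txn-42", "payments", "PaymentCreated", 1000),
    Event("txn-7", "payments", "PaymentCreated", 1005),
    Event("txn-42", "notifications", "ReceiptQueued", 1060),
]

paths = reconstruct_paths(events)
for event in paths["txn-42"]:
    print(f"{event.timestamp_ms} {event.service}: {event.name}")
```

Ordering by timestamp is a simplification: clocks on different hosts can drift, so a real setup would more likely rely on trace and span IDs from a tracing system than on wall-clock time alone.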

Detailed Tool Reviews

In my experience, Cursor works well for tasks within a single file or focused context. But when I’m working in environments with multiple repos and services, like Kotlin for core logic, Go for microservices, and GitHub Actions for CI, I often need extra tools (like IDE search or Sourcegraph) to handle shared types or trace protocol buffer references.

Since our team uses PR-based automation and tools like ArgoCD and Renovate, I’ve found that integrating those workflows requires additional setup outside of Cursor. For projects with complex pipelines, I tend to rely on other solutions that offer more built-in support.

To solve these gaps, I evaluated three alternatives to Cursor AI under the same engineering constraints. Each was tested for how well it handles multi-repo code understanding, cross-service debugging, integration with Git-based workflows, and team-level usage at scale.

Note: While this guide focuses on Qodo, Tabnine, and GPT-4 APIs, other AI code assistants like Amazon CodeWhisperer, Replit’s Ghostwriter, and Codeium may also be part of your evaluation. These tools vary in scope, from in-browser prototyping to private LLM deployment, and may suit different developer workflows depending on your scale, ecosystem, and security requirements.

Comparison Table: Cursor AI Alternatives at a Glance

| Feature | Qodo (Top Pick) | Tabnine | OpenAI Codex | Cursor |
| --- | --- | --- | --- | --- |
| Multi-Repo Context Resolution | Deep Git-aware indexing | Single-repo only | Depends on implementation | No cross-repo support |
| Context-Aware Code Review | PR assistant with traceable AI | Basic suggestions | Custom build required | Shallow suggestions |
| CI/CD + DevOps Integration | Hooks for GitHub Actions, etc. | Manual setup | Via API | Not supported |
| Async/Distributed Debugging | Event-aware trace reconstruction | Local-only | Needs custom tooling | Stack trace only |
| Private/On-Prem Model Support | Available | Self-hosted available | Limited control | No on-prem support yet; cloud only |
| Pricing for Teams | Fixed plans available | Team plans, offline models | Usage-based | Unpredictable billing |

Qodo (Top Pick)

Qodo is one of the best AI code generators I have personally used for enterprise-grade projects, and it is designed specifically for scale. Qodo uses semantic indexing and retrieval-augmented generation (RAG) to enrich its understanding of the codebase. Rather than relying solely on open buffers, it retrieves relevant code segments, commit history, and documentation to support accurate output.
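The retrieval step in RAG can be illustrated with a toy example. This is not Qodo's implementation: production systems typically use learned embeddings and a vector index, while this sketch swaps in bag-of-words cosine similarity to stay dependency-free. The file paths and chunk text are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens, breaking snake_case apart."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=2):
    """Return up to k chunk paths ranked by similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), path) for path, text in chunks.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [path for score, path in scored[:k] if score > 0]


# Hypothetical indexed chunks from different parts of a codebase.
chunks = {
    "payments/handler.go": "func CreateTransaction posts a ledger entry and retries on failure",
    "ledger/post.go": "func PostEntry writes a double entry ledger record",
    "docs/onboarding.md": "how to set up your laptop and request access",
}

query = "where is the ledger entry posted with retries"
print(retrieve(query, chunks))
```

The retrieved chunks would then be added to the model's prompt, which is what lets the assistant answer from code that is not open in the editor.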

CI/CD awareness means Qodo understands how code changes flow through build pipelines, test stages, and deployment gates. For example, Qodo allows PR metadata and RAG insights to be integrated into CI workflows. It supports labeling PRs based on compliance (e.g., ticket links) and can prevent merges through GitHub Actions or other CI tools if conditions aren’t met.
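As a rough picture of what a ticket-link gate does, the sketch below shows a standalone check that a CI job could run. It is an illustration of the general pattern, not Qodo's mechanism; the ticket format, the label names, and the `check_pr` helper are all assumptions.

```python
import re

# Assumed ticket format such as "BILL-123"; adjust the pattern to your tracker.
TICKET_PATTERN = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def find_ticket_ids(pr_title, pr_body):
    """Return the sorted, de-duplicated ticket IDs found in a PR's text."""
    return sorted(set(TICKET_PATTERN.findall(f"{pr_title}\n{pr_body}")))


def check_pr(pr_title, pr_body):
    """Return (label, exit_code) for a PR; the label names are hypothetical."""
    if find_ticket_ids(pr_title, pr_body):
        return "ticket-linked", 0
    # A non-zero exit code fails the CI job, and a required status check
    # on that job is what keeps the PR from being merged.
    return "missing-ticket", 1


print(check_pr("BILL-123: fix invoice rounding", "Rounds totals to two decimals."))
print(check_pr("fix invoice rounding", "Rounds totals to two decimals."))
```

In GitHub Actions, a step that exits non-zero fails its job, so marking that job as a required check in branch protection turns this kind of script into a merge gate.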

Best For

Teams working in large, service-based architectures with shared modules and cross-language code. It’s suited for senior developers who need AI agents that understand Git diffs, cross-service impact, and system-wide patterns, like enforcing layering rules or updating shared logic across Go, TS, and YAML configs.

Key Features

- RAG-powered code generation and unified search

Pulls relevant logic, patterns, and documentation from across the org’s entire codebase or Confluence-like knowledge store to provide grounded outputs.

- Agentic Code Review

Pre-built agents (like the review agent) analyze PRs for test coverage gaps, missing story references, and code quality issues. You can define custom review criteria via configuration.

- Flexible Agent Design via CLI

Developers can define agents using TOML configuration files that include trigger conditions, input sources, tools allowed, and result formatting. No need to build custom infrastructure.

- Pull Request Automation with Suggest/Review Commands

Developers can trigger AI suggestions and review workflows using simple commands like /review, /suggest, or /implement within PR threads. This allows fast, consistent feedback on architectural or test-related concerns without manually combing through diffs.

- Native integrations

Integrates with JetBrains IDEs and VS Code (depending on the agent or CLI usage).

First-Hand Example

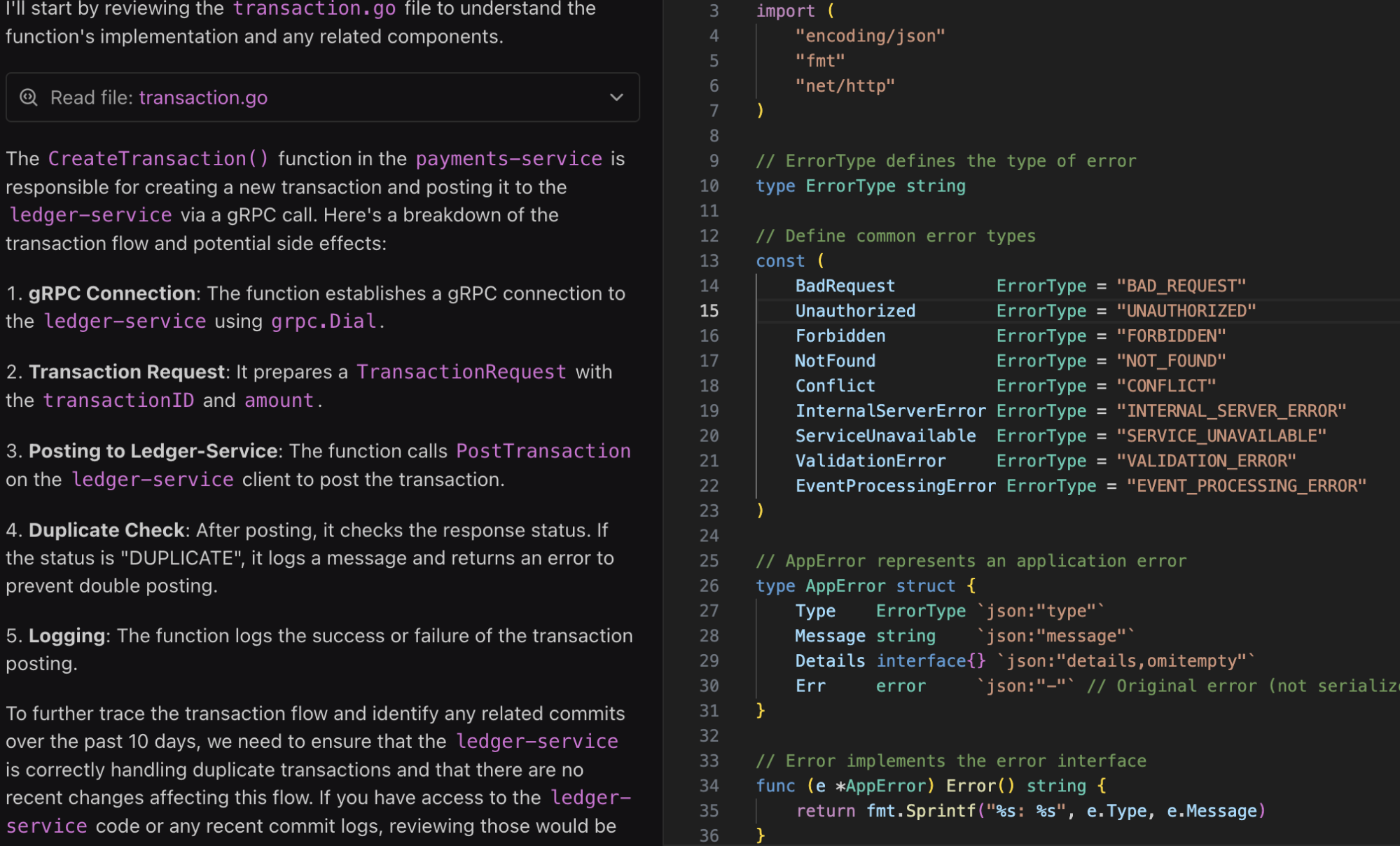

In a transaction processing backend for a fintech client, we managed five Go microservices communicating with a shared ledger system. We hit a debugging bottleneck around a double-entry posting issue that surfaced intermittently during concurrent balance updates.

I used Qodo’s prompt-in-PR feature with the following input:

Prompt:

“Trace the transaction flow for CreateTransaction() in payments-service, including any side effects or downstream gRPC calls that could trigger a double post to ledger-service. Check related commits over the past 10 days.”

Output:

Qodo AI Code Editor returned a full call graph, identified a recently merged commit that introduced an idempotency bug, and flagged a missing lock around a gRPC retry block. It also cross-referenced a previous fix in recon-service that handled a similar issue and suggested aligning the retry pattern.
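For readers unfamiliar with this class of bug, the sketch below shows the general shape of the fix: an idempotency key plus a lock around the check-and-insert, so a retried request cannot post twice. It is written in Python for brevity (the services in question were Go), and the `Ledger` class is a hypothetical in-memory stand-in, not the client's code.

```python
import threading


class Ledger:
    """In-memory stand-in for a ledger service (hypothetical)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._seen_keys = set()
        self.entries = []

    def post(self, idempotency_key, amount):
        """Record the entry once per key; repeat calls are no-ops."""
        # The lock makes the check-and-insert atomic, so two concurrent
        # retries with the same key cannot both pass the membership check.
        with self._lock:
            if idempotency_key in self._seen_keys:
                return False
            self._seen_keys.add(idempotency_key)
            self.entries.append((idempotency_key, amount))
            return True


ledger = Ledger()
# Simulate a client retrying the same request from several threads.
threads = [threading.Thread(target=ledger.post, args=("txn-42", 100)) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(ledger.entries))  # prints 1
```

Without the lock, two retries could both see the key as unseen and both append, which is the double-post pattern described above. Across multiple service instances, the same guarantee would need a shared store (for example, a unique constraint in the database) rather than an in-process lock.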


While I don’t have internal metrics on specific bug prevention or time-to-resolution, Qodo Merge is documented to provide early context-aware feedback through tools like /review, which can flag high-risk areas in pull requests and integrate with CI pipelines to enforce review gates.

Its combination of static analysis, semantic retrieval, and self-reflection helps focus attention on potential issues before code reaches staging. The exact time and impact of detection will vary depending on the project and configuration, but Qodo’s tooling is deliberately designed to support earlier defect identification and smoother handoffs in the development lifecycle.

Pros

- Handles full-system reasoning, not just local context

- Plug-and-play support for multi-repo environments

- Model grounded in your codebase and docs via RAG

- Flexible deployment: self-hosted or managed

- Works with enterprise access controls and policies

Cons

- Requires initial onboarding to sync repo metadata

- Better suited for teams already using GitHub or JetBrains-based workflows

Pricing

Starts at $30/user/month for small teams with basic features. For enterprise plans (with private model support, compliance configurations, and full RAG workflows), speak to Qodo for custom pricing based on seats and infrastructure.

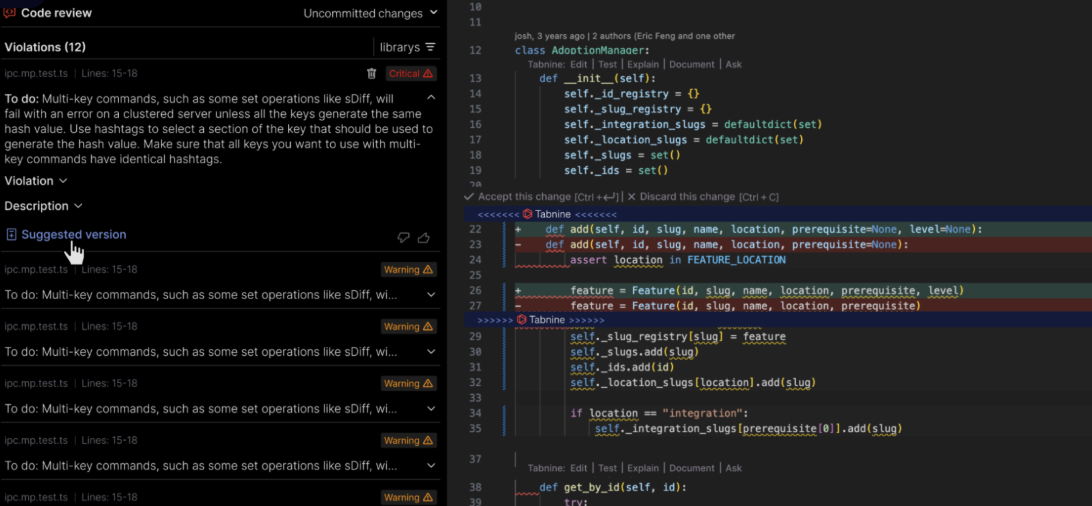

Tabnine

Tabnine is an AI-powered code completion tool focused on speed, privacy, and local performance. It supports private model deployment, customizable AI behavior, and offline usage, making it suitable for teams that prioritize low-latency code suggestions and data privacy over full-stack AI assistance.


Best For

Freelance developers, small teams, or companies with strict privacy policies who want fast, lightweight completions and control over model hosting.

Key Features

- In-editor suggestions with VS Code, JetBrains

Instant completions across popular IDEs with minimal configuration.

- Supports private/self-hosted models

Deploy your own LLM locally or on internal servers to keep code in-house.

- Works offline or via cloud

Toggle between cloud-backed or offline models depending on project sensitivity.

- Customizable completion models for team policies

Fine-tune AI behavior based on preferred coding patterns, banned functions, or compliance rules.

First-Hand Example

I used Tabnine in a contract project involving on-premise infrastructure and internal APIs for a cybersecurity client. Due to compliance rules, we couldn’t use cloud-based tools or send any code externally. We set up a self-hosted Tabnine model inside a VPN-restricted environment.

During development, Tabnine offered consistent, low-latency completions even while navigating an older Java codebase with custom annotations and DSLs. We fine-tuned it using internal style guides to avoid deprecated methods and surface safer alternatives. While it focused primarily on autocomplete, it worked efficiently within that scope, without offering advanced reasoning or debugging support.

Pros

- Works without sending code to external servers

- Fast and responsive in large local projects

- Easy to configure with private infrastructure

- Suitable for secure and regulated environments

Cons

- Focused on code suggestions, without built-in review or debugging features

- Context is generally limited to the active file or buffer

- May require manual setup or guidance to tailor suggestions to specific codebases

Pricing

Free for individuals for 30 days. Pro plans start at $39/month per user. Enterprise pricing is available for private model deployment and SAML-based authentication.

OpenAI GPT-4o via API (Formerly Codex)

OpenAI deprecated the original Codex API models in March 2023 and now recommends using GPT‑4.1, GPT‑4o, or the Assistants API for programmatic code generation. These newer APIs provide more accurate, multi-modal completions and support advanced developer workflows, including function calling, multi-turn reasoning, tool use, and long-context handling.

Teams building custom AI-powered developer tools now rely on GPT‑4.1 or GPT‑4o, depending on workload type. These models are accessible via the OpenAI API and integrate well into internal CLIs, dev platforms, CI pipelines, and review automation systems.

Best For

Teams building internal dev tools or automations that need programmatic code generation, refactoring, or task-specific agents with API access.

Key Features

- API-based code generation with GPT-4o

Supports natural language prompts and structured inputs, with improved function calling and multi-turn context management.

- Multi-language support

Handles code generation across Python, TypeScript, SQL, Bash, and more, with strong reasoning on system-level logic.

- Integrates with CI/CD and static analysis tools

Can be called during pipeline steps or reviews to generate test cases, suggest refactors, or validate code against policy.

- Agent-style workflows via Assistants API

Developers can build multi-step agents with tools, memory, and file handling, ideal for internal migration, testing, and documentation tasks.

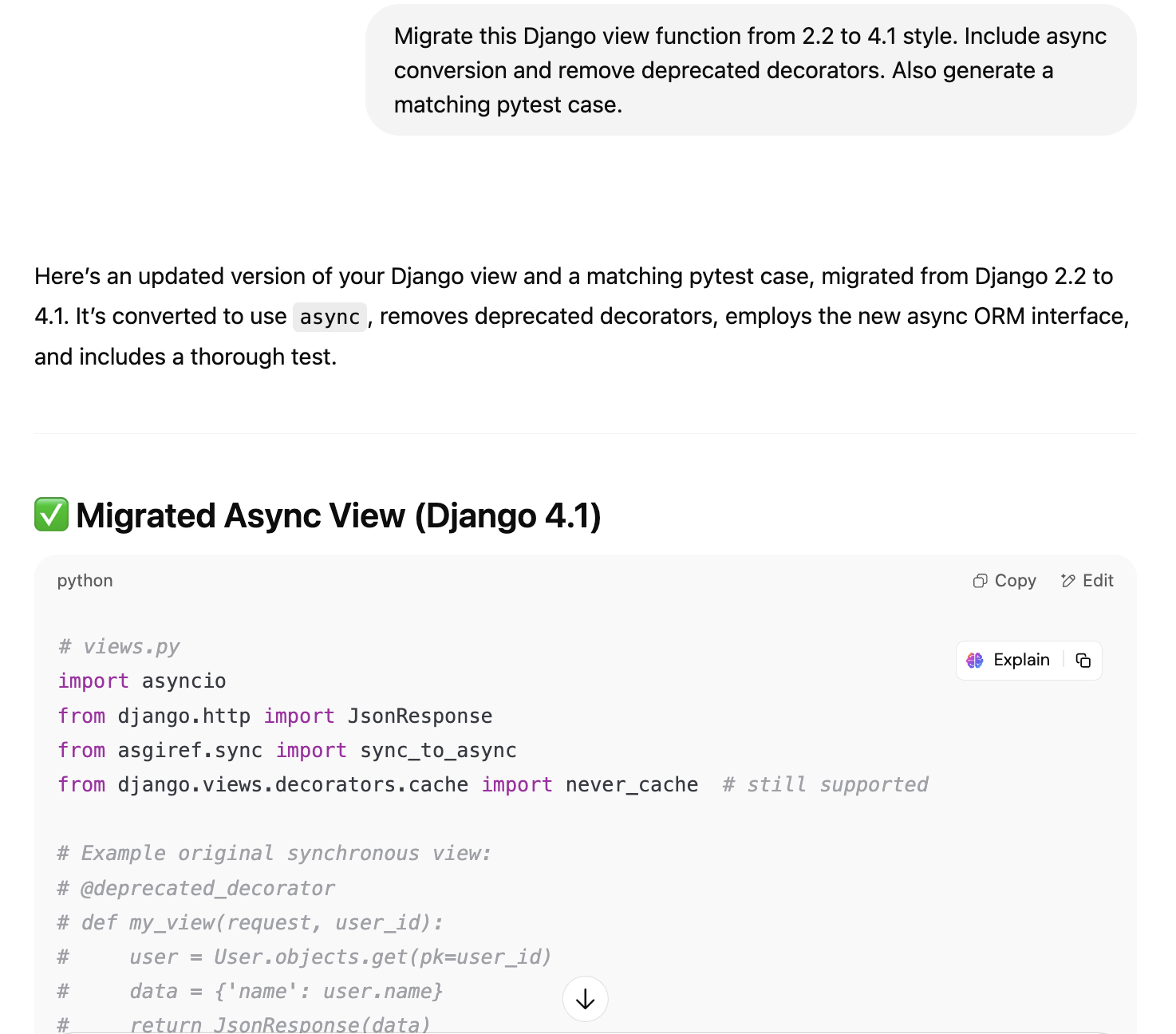

First-Hand Example

I created an internal “migration assistant” tool at a SaaS company during a framework upgrade from Django 2.2 to 4.1. The assistant took in endpoint definitions and returned updated code blocks, along with tests and migration notes. I prompted Codex with structured templates like this:

Prompt:

“Migrate this Django view function from 2.2 to 4.1 style. Include async conversion and remove deprecated decorators. Also generate a matching pytest case.”

Input:

    @api_view(['GET'])
    def fetch_data(request): …

The output required manual validation, but it reduced the repetitive effort involved in migrating over 90 endpoints. Its flexibility was useful, as the prompt pipeline could be adjusted to suit our specific use case.
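A prompt pipeline like this can be as simple as a function that wraps each endpoint's source in the same instruction template. The sketch below is a reconstruction of the idea, not the original tool: `build_messages`, the system prompt, and the sample `health_check` view are hypothetical, and the list it returns uses the common role/content chat-message shape without actually calling any API.

```python
MIGRATION_INSTRUCTIONS = (
    "Migrate this Django view function from 2.2 to 4.1 style. "
    "Include async conversion and remove deprecated decorators. "
    "Also generate a matching pytest case."
)


def build_messages(view_source, notes=""):
    """Build chat-style messages for one endpoint migration request."""
    user_parts = [MIGRATION_INSTRUCTIONS, "", "Input:", view_source.strip()]
    if notes:
        # Optional per-endpoint hints, e.g. known deprecated helpers.
        user_parts += ["", "Notes:", notes.strip()]
    return [
        # Hypothetical system prompt; tune the wording for your own use case.
        {"role": "system", "content": "You are a careful Django migration assistant."},
        {"role": "user", "content": "\n".join(user_parts)},
    ]


# Hypothetical view used only to exercise the template.
example_view = '''
@api_view(["GET"])
def health_check(request):
    return Response({"status": "ok"})
'''

messages = build_messages(example_view)
print(messages[1]["content"])
```

Keeping the template in one place is what made it easy to adjust: changing the instructions or adding a notes field updates every request in the batch.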

Pros

- Supports complex, multi-turn developer workflows

- Highly accurate across programming languages

- Embeds easily into backend tools, pipelines, and dev portals

- Capable of reasoning over structured inputs, repos, and configs

Cons

- Context must be explicitly passed (e.g., repo structure, file history)

- Requires prompt engineering for high-accuracy completions

Pricing

Billed per token via OpenAI’s API platform. GPT-4o offers significantly improved price-performance over earlier models. Usage is metered by input/output tokens, with additional charges for tools or Assistants API stateful sessions.
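Per-token billing is easy to estimate before you commit to a batch job. The sketch below shows the arithmetic only: the prices and token counts are placeholders, not OpenAI's actual rates, so substitute current figures from the pricing page. The 90-endpoint count comes from the migration example above.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_million, output_price_per_million):
    """Estimate the dollar cost of one request under per-token pricing."""
    return (
        input_tokens / 1_000_000 * input_price_per_million
        + output_tokens / 1_000_000 * output_price_per_million
    )


# Placeholder prices in dollars per million tokens (NOT real rates).
INPUT_PRICE = 2.00
OUTPUT_PRICE = 8.00

# Hypothetical token counts for migrating a single endpoint.
per_endpoint = estimate_cost(3_000, 1_500, INPUT_PRICE, OUTPUT_PRICE)
total = per_endpoint * 90  # one request per endpoint, no retries

print(f"per endpoint: ${per_endpoint:.4f}, total: ${total:.2f}")
```

Retries, longer prompts with repo context, and multi-turn sessions multiply these numbers, which is why usage tends to spike during review-heavy cycles.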

Why Qodo is the Top Cursor AI Alternative for Enterprises

Qodo is designed for engineering teams working across multi-language, multi-service environments. It supports intelligent code generation, debugging, and refactoring by leveraging real-time context through a retrieval-augmented generation (RAG) system.

Qodo’s Git-native architecture enables pull request analysis that highlights architectural issues, test coverage gaps, and duplicated logic. It integrates with workflows across VS Code, JetBrains, GitHub, Bitbucket, and GitLab, while also offering enterprise-grade features such as role-based access control, audit logs, and private model deployments suited for regulated domains.

And when it comes to AI code reviews, Qodo is unmatched. Every AI-generated suggestion includes linked commit history, relevant documentation, and matching test cases, making the review not just fast but also reliable. The traceability built into every review cycle makes Qodo the only AI code assistant I’ve trusted to automate reviews at scale without compromising quality.

Conclusion

While Cursor delivers speed and simplicity for individual developers, it falls short in complex, enterprise-grade environments. For teams working across large codebases, regulated workflows, and multi-repo architectures, alternatives like Qodo, Tabnine, and OpenAI Codex offer more control, scalability, and real-world value.

Qodo leads the pack with its deep contextual understanding, robust Git integration, and enterprise-ready features like RAG-based code generation. Tabnine fits teams that need privacy and speed without cloud dependencies, while OpenAI Codex gives builders the flexibility to embed code intelligence wherever they need it.

Choosing the right tool depends on your workflow maturity and scale, but for high-performance teams, Qodo consistently delivers where others taper off.

FAQs

1. What is the best Cursor AI alternative for an enterprise?

Qodo is one of the best Cursor AI alternatives. It supports multi-repo systems, CI/CD integration, and debugging, and it offers explainable AI with enterprise-grade security and compliance options.

2. What are the limitations of Cursor?

Cursor is designed for responsiveness and quick iterations in focused development tasks. While it handles local file contexts efficiently, working across large or multi-repo codebases, debugging across distributed services, or integrating with CI/CD workflows may require additional tools or configurations depending on team setup.

3. Can I use Cursor for enterprise code?

Yes, Cursor can be used in enterprise environments, especially for rapid development and prototyping. However, teams with compliance-driven workflows or larger architectural requirements may also consider tools that offer native support for access control, policy enforcement, and deeper integration across services and repositories.

4. What is Cursor best for, and what is not?

Cursor is well-suited for individual developers and smaller projects where fast feedback and ease of use are priorities. For larger systems involving shared components, regulated environments, or CI-aware code collaboration, other tools like Qodo may align better with those workflows.

5. Is Cursor secure and SOC2 compliant?

Cursor follows standard security practices like HTTPS and file-scoped permissions. As of now, it hasn’t made public claims about SOC 2 or similar compliance certifications. For enterprises requiring documented security frameworks or on-premise options, it’s advisable to review vendor documentation or consider solutions offering enterprise-specific assurances.