Cursor vs GitHub Copilot: Which AI IDE Tool Is Better?

TL;DR:

- GitHub Copilot is effective for smaller, file-level tasks, providing fast autocomplete and code suggestions. However, it struggles with larger codebases and multi-file changes.

- Cursor is better suited for large codebases, multi-file edits, and cross-file context, but it has some performance issues, especially in larger projects, and requires switching to its own IDE.

- Another IDE plugin, Qodo, offers structured code understanding, safe code changes, and compliance features, making it a good fit for teams in regulated environments.

- Each tool has strengths depending on your workflow: Copilot is quick for smaller tasks, Cursor is better for codebase-wide changes, and Qodo is built for teams needing reliable, compliant code generation.

Over the past year, AI-powered coding assistants have become a regular part of development workflows. As a senior front-end engineer, I’ve used them for tasks like generating responsive grid variants in Tailwind, converting React class components to functional ones with hooks, and updating form validation rules to reflect new schema definitions.

GitHub Copilot is useful for providing contextual suggestions within individual files. It works well with React components and can assist with tasks like setting up useEffect hooks or defining Zod schemas.

Cursor, a fork of VS Code, integrates AI directly into the editor. It supports multi-file edits and can help with tasks such as extracting logic into custom hooks. It includes built-in AI features without requiring separate extensions, while still allowing the use of standard VS Code extensions.

In this post, I’ll compare Copilot and Cursor based on their performance in real-world workflows, focusing on how each handles large, typed codebases, how it manages context for suggestions, and how it fits into typical front-end development tasks.

Quick Overview of the Tools

Before getting into real-world comparisons, here’s a quick overview of how Copilot and Cursor differ in design, scope, and developer experience.

GitHub Copilot

GitHub Copilot doesn’t need a prompt for code completion. It works passively as you write, suggesting completions based on the surrounding context in your current file. You can also engage it through Copilot Chat for prompt-driven queries, but most of the time it just runs in the background.

It’s especially handy for smaller, scoped tasks. For example:

- When setting up a Zod schema inside a useForm() call, Copilot auto-completes full object definitions, including string validators and refine() conditions, based on nearby form field names.

- When creating an effect hook to sync localStorage to component state, it generates the correct dependency array and even suggests the JSON.parse() logic you’d expect.

- In test files, it pre-fills assertions like expect(screen.getByText(...)).toBeInTheDocument() after seeing the JSX rendered above, which is helpful when writing component tests quickly.
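The JSON.parse() step in that localStorage effect is easy to get subtly wrong: a missing key comes back as null, and a corrupted value makes JSON.parse() throw. Here’s a minimal, framework-free sketch of the read/write logic. The helper names (readStoredState, writeStoredState, createMemoryStorage) are hypothetical. The small KeyValueStorage interface stands in for window.localStorage so the code runs outside a browser. In a component, you would call readStoredState from a useState initializer and writeStoredState from the effect.

```typescript
// Minimal subset of the Web Storage API, so the sketch runs outside a browser.
interface KeyValueStorage {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Hypothetical helper: read a JSON value, falling back when the key is
// missing or the stored string is not valid JSON.
function readStoredState<T>(storage: KeyValueStorage, key: string, fallback: T): T {
  const raw = storage.getItem(key);
  if (raw === null) return fallback;
  try {
    return JSON.parse(raw) as T;
  } catch {
    // Corrupted or hand-edited value: ignore it instead of crashing the component.
    return fallback;
  }
}

// Hypothetical companion: serialize state back to storage.
function writeStoredState<T>(storage: KeyValueStorage, key: string, value: T): void {
  storage.setItem(key, JSON.stringify(value));
}

// In-memory stand-in for window.localStorage.
function createMemoryStorage(): KeyValueStorage {
  const data = new Map<string, string>();
  return {
    getItem: (key) => data.get(key) ?? null,
    setItem: (key, value) => {
      data.set(key, value);
    },
  };
}

const storage = createMemoryStorage();
writeStoredState(storage, "sidebar", { collapsed: true });
console.log(readStoredState(storage, "sidebar", { collapsed: false })); // { collapsed: true }
storage.setItem("sidebar", "{not json");
console.log(readStoredState(storage, "sidebar", { collapsed: false })); // { collapsed: false }
```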

That said, it works purely at the file level. It didn’t recognize that useForm() in our repo is a wrapper around react-hook-form with custom defaults, or that useAnalytics() injects extra context around trackClick(). So if I’m working inside a component that pulls in utilities from three layers deep in @shared, I still have to manually:

- Import the correct hook from the right package

- Check TypeScript definitions to pass the right props

- Wire up behavior consistent with our conventions
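To show why a file-level completion can’t infer this, here’s a minimal sketch of the kind of wrapper described above. createTracker, AnalyticsContext, and the event shape are hypothetical stand-ins, not our actual useAnalytics() code. The caller passes only an event name, and the wrapper merges in context that lives in another module. Copilot never sees that information from the current file.

```typescript
// Hypothetical shape of the context a hook like useAnalytics() might inject.
interface AnalyticsContext {
  userId: string;
  page: string;
}

interface TrackedEvent {
  type: "click";
  name: string;
  properties: Record<string, unknown>;
}

// Hypothetical factory: returns a trackClick() that enriches every event with
// shared context before handing it to the transport function.
function createTracker(context: AnalyticsContext, send: (event: TrackedEvent) => void) {
  return {
    trackClick(name: string, properties: Record<string, unknown> = {}): void {
      send({
        type: "click",
        name,
        // Caller-supplied properties override injected context on key collisions.
        properties: { ...context, ...properties },
      });
    },
  };
}

// Usage: the call site only knows the event name; userId and page come from elsewhere.
const sent: TrackedEvent[] = [];
const tracker = createTracker({ userId: "u_42", page: "/settings" }, (event) => sent.push(event));
tracker.trackClick("save_button", { variant: "primary" });
console.log(sent[0].properties); // { userId: 'u_42', page: '/settings', variant: 'primary' }
```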

Copilot works well for small tasks in a single file, even when the code itself is complex. But for changes that touch many parts of the codebase, such as design, state, or tracking, it needs more help from the developer to get things right.

Cursor

Cursor, on the other hand, is an AI-based IDE, a fork of VS Code built specifically for code navigation, refactoring, and Q&A using LLMs. You can give it prompts like “extract this to a reusable hook,” “migrate this to the new useTracking() API,” or “where is this prop used across the repo?” and it will try to act across your entire codebase.

In practice, Cursor performs well in cases where you’d normally reach for grep, cmd+click, or multi-file refactors:

- I used it to migrate Dialog components from the legacy isOpen prop to a new open/close controller pattern. Cursor updated prop usage across apps/web and packages/ui.

- When removing our old withAuthRedirect HOC, I highlighted one usage and asked Cursor to replace all instances with a useRedirectOnAuth() hook. It found usages buried in legacy routes and test helpers.

- It also helped refactor our internal useFeatureFlag() calls to a stricter useFlags() API, updating imports, adjusting destructuring, and catching spots where flags were used inside useMemo().
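The Dialog migration in the first bullet mentions an “open/close controller pattern” without spelling it out. What follows is only a minimal sketch of what such a controller can look like, using hypothetical names (DialogController, createDialogController, subscribe). Instead of threading a boolean isOpen prop through every caller, the dialog receives one object that owns its visibility state and the transitions between states. In React, subscribe() could be connected to a component through a hook such as useSyncExternalStore.

```typescript
// Hypothetical controller API: one object owns the dialog's visibility state
// and its transitions, replacing a boolean isOpen prop.
interface DialogController {
  readonly isOpen: boolean;
  open(): void;
  close(): void;
  // Returns an unsubscribe function, like most store subscription APIs.
  subscribe(listener: (isOpen: boolean) => void): () => void;
}

function createDialogController(initiallyOpen = false): DialogController {
  let isOpen = initiallyOpen;
  const listeners = new Set<(isOpen: boolean) => void>();

  const setOpen = (next: boolean): void => {
    if (next === isOpen) return; // Skip redundant notifications.
    isOpen = next;
    listeners.forEach((listener) => listener(isOpen));
  };

  return {
    get isOpen() {
      return isOpen;
    },
    open: () => setOpen(true),
    close: () => setOpen(false),
    subscribe(listener) {
      listeners.add(listener);
      return () => {
        listeners.delete(listener);
      };
    },
  };
}

// Usage: callers talk to the controller instead of toggling a prop.
const confirmDialog = createDialogController();
const unsubscribe = confirmDialog.subscribe((isOpen) => console.log(`dialog open: ${isOpen}`));
confirmDialog.open(); // logs "dialog open: true"
confirmDialog.open(); // no log: state is unchanged
confirmDialog.close(); // logs "dialog open: false"
unsubscribe();
```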

But I did hit some issues worth knowing:

- Initial setup takes time. When I first installed Cursor and imported my full extension set (ESLint, Prettier, Tailwind IntelliSense, GitLens), it slowed down. It took ~15 minutes to index a 70K-line TypeScript repo, and some extensions had to be manually reconfigured or behaved differently than in stock VS Code.

- Large repo performance dips. In mid-sized repos (60–70 packages, 80+ TS/TSX files), Cursor, being a heavy tool, slows down and lags a bit.

Feature-by-Feature Comparison of Copilot vs Cursor vs Qodo (based on real-world testing)

| Feature | GitHub Copilot | Cursor | Qodo (formerly Codium) |
| --- | --- | --- | --- |
| Integration | VS Code, JetBrains, Neovim, Visual Studio | Standalone IDE (VS Code fork) | VS Code, JetBrains, GitHub |
| Code generation | Contextual in-file suggestions and autocomplete | Multi-file refactoring, smart rewrites, and autocomplete | Structured code generation with a compliance focus and relevant code suggestions |
| Codebase understanding | Limited to open-file context | Full project indexing and querying | Deep codebase awareness with audit trails |
| Refactoring | Basic in-file edits | Multi-file edits with contextual awareness | Enterprise-grade refactoring with safety checks |
| Test generation | AI-assisted code reviews and test suggestions | Test generation with context | Comprehensive test generation with coverage analysis |
| Performance | Lightweight, fast | Can be slower with large codebases | Optimized for enterprise environments |
| Privacy & compliance | Cloud-based; data usage policies apply | Offers privacy mode, SOC 2 certified | SOC 2 compliant, supports |
| Enterprise support | Dedicated account management, SLAs | Priority support with dedicated response times | Priority support with dedicated account managers and custom SLAs |
| Pricing | $39/user/month | $40/user/month | $30/user/month |

Let’s break down where Copilot and Cursor stand on the core tasks front-end engineers deal with day to day: code generation, codebase understanding, refactoring, autocomplete, testing, and workflow integration. I’ll also bring in Qodo, another IDE plugin, to see how its structured approach to code generation, compliance, and refactoring compares with the more task-specific capabilities of the other two tools.

a. Code Generation

To compare Copilot, Cursor, and Qodo fairly, I used the exact same task:

“Implement the handleSubmit function in CheckoutForm.tsx using Zod validation, POST to /api/checkout, show a toast on success, and reset the form.”

This isolates how well each tool handles requirements like schema validation, internal utilities, error handling, and form state.

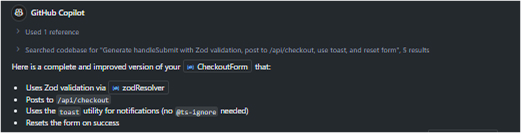

GitHub Copilot: Quick Code Generation

Inside CheckoutForm.tsx, I started writing the onSubmit handler, and Copilot kicked in with an autocomplete suggestion. It pieced together the fetch logic, added the right headers, and handled serialization with JSON.stringify(). It even called toast() correctly and added a reset() to clear the form after submission. At first glance, it felt complete.

But when I looked closer, there were a few things missing. It assumed the API would always return a valid JSON body and immediately called response.json() without any fallback. If the backend ever returned a non-JSON error or an empty response, this would throw. It also assumed the error response would include a message field, which isn’t guaranteed. The logic worked, but it wasn’t resilient.

Here’s the inline suggestion it gave:

```ts
const onSubmit = async (data: CheckoutData) => {
  try {
    const response = await fetch('/api/checkout', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
      body: JSON.stringify(data),
    });

    if (!response.ok) {
      const errorData = await response.json();
      toast(errorData.message || 'Checkout failed', 'error');
      return;
    }

    toast('Checkout successful!', 'success');
    reset();
  } catch (error) {
    toast('An error occurred during checkout', 'error');
  }
};
```

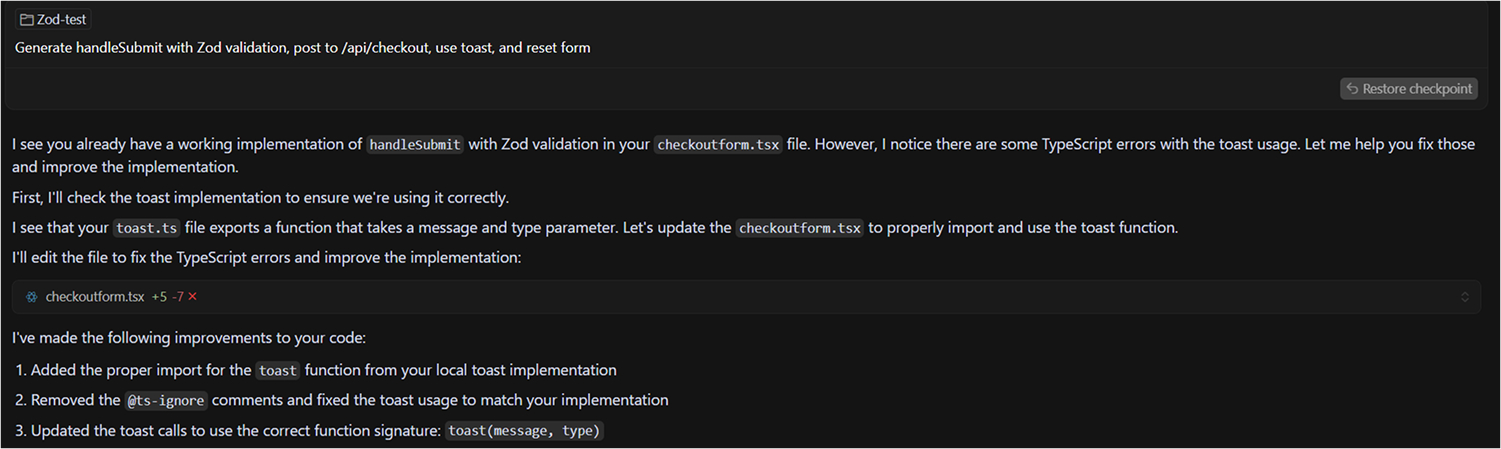

Cursor: Generates Clean Code but Needs Prompting for Edge Cases

When I prompted Cursor with the same task, it generated nearly the same structure: fetch call, toast handling, and reset(), all in place. It even added the React import automatically. The response parsing looked familiar: it called response.json(), extracted the message, and piped it into a toast.

Cursor kept the output clean and structured. It followed import paths correctly, and it respected the way we typically organize form handlers. But like Copilot, it didn’t account for broken JSON responses or null values in the API payload. It handled the happy path well but didn’t try to protect against edge cases unless explicitly prompted.

Qodo: Provides Safe Code Generation with Built-In Error Handling

Qodo went a step further. When I asked it to implement the same onSubmit handler, it returned familiar code, but with a few critical upgrades. It used the same fetch() and toast() pattern, but it wrapped the response.json() call with .catch(() => ({})). That one line meant no runtime crash if the backend returned a non-JSON error.

It also handled the error messaging more defensively: instead of assuming the presence of a message key, it checked for it with optional chaining and defaulted to a safe fallback. It didn’t just work, it worked safely. Everything else stayed clean and consistent, and the output came with a commit-ready diff in the CLI. Here’s the snapshot of the diff output showing .catch() on response.json() and the safe fallback for toast().
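Here’s a framework-free sketch of that defensive pattern. It isn’t Qodo’s verbatim output. fetchImpl, notify, and reset are hypothetical injected dependencies standing in for fetch(), our toast(), and the form’s reset(). ResponseLike models only the two Response members the handler uses. Injecting these dependencies lets the edge cases (non-JSON body, null body, missing message, network failure) be exercised without a browser or a server.

```typescript
type ToastKind = "success" | "error";

// Minimal subset of the Fetch API Response that the handler relies on.
interface ResponseLike {
  ok: boolean;
  json(): Promise<unknown>;
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<ResponseLike>;

// Hypothetical injected dependencies standing in for fetch(), toast(), and reset().
interface SubmitDeps {
  fetchImpl: FetchLike;
  notify: (message: string, kind: ToastKind) => void;
  reset: () => void;
}

function createCheckoutSubmit<T>(deps: SubmitDeps): (data: T) => Promise<void> {
  return async (data: T) => {
    try {
      const response = await deps.fetchImpl("/api/checkout", {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify(data),
      });

      if (!response.ok) {
        // An empty or non-JSON error body resolves to {} instead of throwing.
        const errorData = (await response.json().catch(() => ({}))) as
          | { message?: unknown }
          | null;
        // Use the server's message only if it is actually a string.
        const maybeMessage = errorData?.message;
        const message = typeof maybeMessage === "string" ? maybeMessage : "Checkout failed";
        deps.notify(message, "error");
        return;
      }

      deps.notify("Checkout successful!", "success");
      deps.reset();
    } catch {
      // Network failures (the request itself rejecting) end up here.
      deps.notify("An error occurred during checkout", "error");
    }
  };
}
```

In the component itself, you would pass the real fetch, toast, and reset() when creating the handler.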

b. Codebase Understanding

To compare Copilot, Cursor, and Qodo’s ability to understand the codebase, I tested how each tool handles prop renaming and updates across multiple files. This highlights each tool’s ability to trace and modify references in the entire repo.

GitHub Copilot: Line by Line

Copilot doesn’t understand the structure of your codebase beyond what’s in the open file. I’ve had cases where I’m editing TeamInviteForm.tsx, and I start typing generateToken(...). Copilot will suggest a function, but not the actual one from @shared/auth. It just guesses based on name similarity.

If I change a prop name in one component, say, buttonText to label, Copilot won’t update its usage across the rest of the app. It doesn’t index the repo, so it can’t trace or follow how props or functions flow through other modules.

Cursor: Repo-Wide Codebase Awareness

Cursor excels at this. When I renamed userRole to accessLevel in UserBadge.tsx, I asked Cursor to find all references across the repo and update them. It correctly traced through feature folders, test files, and even updated Storybook stories without breaking types.

It also helps when exploring unfamiliar code. I once asked, “Where is the useFeatureFlag() hook implemented?” and Cursor took me directly to its declaration inside packages/flags/src/flags.ts, including the internal client logic.

Qodo: Codebase Awareness with Commit-Ready Diff Generation

Qodo’s codebase understanding goes deeper than autocomplete or filename scanning; it operates with full context at the time of prompting. When I renamed a prop from userRole to accessLevel inside UserBadge.tsx, I asked Qodo to “find and update all references to userRole in the repo.” It surfaced every usage, including test files, Storybook snapshots, and prop passthroughs inside nested components. The output wasn’t just a list; it generated a commit-ready diff I could review and apply directly.

What made it stand out was how it handled edge cases. In one instance, it skipped an unrelated userRole string inside a comment but correctly updated the value when it was passed into a render prop. It also preserved surrounding formatting and kept types intact. Compared with Cursor’s output, Qodo’s was not only accurate but also felt reviewable and CI-safe from the start, and it came with a clear explanation of the changes across the codebase.
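A plain find-and-replace can’t make that distinction: it rewrites every userRole, including mentions in comments and strings. The sketch below illustrates the difference with a hypothetical renameIdentifier(). It copies comments and quoted strings through untouched and renames only whole-word matches elsewhere. It is deliberately simplified (no handling of template-literal interpolation, regex literals, or JSX text) and is not how Qodo or Cursor work internally. For production renames, the TypeScript language service’s symbol-aware rename is the reliable tool.

```typescript
// Hypothetical helper: rename whole-word occurrences of an identifier while
// copying line comments, block comments, and quoted strings through unchanged.
// Simplified: ignores template-literal ${...} interpolation, regex literals, and JSX text.
function renameIdentifier(source: string, from: string, to: string): string {
  const isIdentChar = (ch: string | undefined): boolean =>
    ch !== undefined && /[A-Za-z0-9_$]/.test(ch);
  let out = "";
  let i = 0;

  while (i < source.length) {
    const ch = source[i];
    const next = source[i + 1];
    let stop: number;

    if (ch === "/" && next === "/") {
      // Line comment: copy up to (not including) the newline.
      const end = source.indexOf("\n", i);
      stop = end === -1 ? source.length : end;
    } else if (ch === "/" && next === "*") {
      // Block comment: copy through the closing */.
      const end = source.indexOf("*/", i + 2);
      stop = end === -1 ? source.length : end + 2;
    } else if (ch === '"' || ch === "'" || ch === "`") {
      // String literal: copy through the matching quote, skipping escaped characters.
      let j = i + 1;
      while (j < source.length && source[j] !== ch) {
        j += source[j] === "\\" ? 2 : 1;
      }
      stop = Math.min(j + 1, source.length);
    } else if (
      source.startsWith(from, i) &&
      !isIdentChar(source[i - 1]) &&
      !isIdentChar(source[i + from.length])
    ) {
      // Whole-word match in code: emit the new name.
      out += to;
      i += from.length;
      continue;
    } else {
      stop = i + 1;
    }

    out += source.slice(i, stop);
    i = stop;
  }
  return out;
}

const snippet = [
  "// TODO: remove userRole after the migration",
  "const fallbackLabel = 'userRole';",
  "<UserBadge userRole={user.userRole} renderRole={(userRole) => userRole} />",
].join("\n");

console.log(renameIdentifier(snippet, "userRole", "accessLevel"));
// // TODO: remove userRole after the migration
// const fallbackLabel = 'userRole';
// <UserBadge accessLevel={user.accessLevel} renderRole={(accessLevel) => accessLevel} />
```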

c. Refactoring & Multi-file Changes

I compared how Copilot, Cursor, and Qodo handle multi-file refactoring by updating a shared component’s API across several files. This isolates each tool’s efficiency in making large-scale changes and updating dependencies across the codebase.

GitHub Copilot: Assists with In-File Refactoring

Copilot can assist with small refactors: renaming a variable, inlining a function, or converting simple callbacks to arrow functions. For example, when I replaced a forEach() loop with a .map() chain inside a render method, Copilot adjusted the return syntax correctly.
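For reference, here’s the shape of that refactor on plain data rather than JSX, so it runs standalone. NavItem and both function names are hypothetical. The forEach() version pushes into an accumulator. The chained version returns each result from its callback, which is why the return syntax has to change.

```typescript
interface NavItem {
  id: string;
  label: string;
  hidden?: boolean;
}

// Before: imperative loop pushing into an accumulator.
function visibleLabelsWithForEach(items: NavItem[]): string[] {
  const labels: string[] = [];
  items.forEach((item) => {
    if (!item.hidden) {
      labels.push(item.label.toUpperCase());
    }
  });
  return labels;
}

// After: filter() + map() chain; each callback returns its result.
function visibleLabelsWithMap(items: NavItem[]): string[] {
  return items
    .filter((item) => !item.hidden)
    .map((item) => item.label.toUpperCase());
}

const navItems: NavItem[] = [
  { id: "home", label: "Home" },
  { id: "admin", label: "Admin", hidden: true },
  { id: "billing", label: "Billing" },
];
console.log(visibleLabelsWithMap(navItems)); // [ 'HOME', 'BILLING' ]
```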

But it won’t touch multiple files. If I’m updating a shared Modal API across multiple components, changing isVisible to open, Copilot offers no help. You’ll still need to manually search for all usages, update them, and verify type compatibility yourself.

Cursor: Multi-File Refactoring

This is where Cursor pulls ahead. When I migrated from a legacy Tooltip to a new Popover component, I asked Cursor to replace Tooltip imports across the entire repo, rename props, and insert fallbacks where missing. It generated a diff I could review and commit, saving me 30–40 minutes of manual search and replace.

It also helped when deprecating a prop in Header.tsx. I prompted it to “remove showCTA prop and update all usage,” and it correctly modified props in feature modules, removed dead logic, and even adjusted snapshot tests that depended on it.

Qodo: Structured Refactoring with Safety Checks

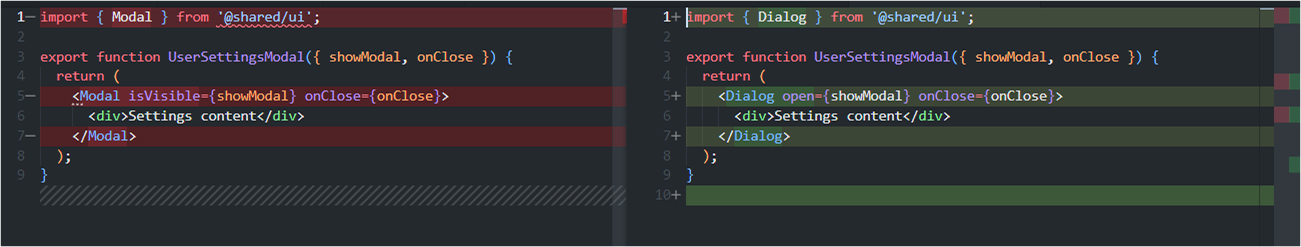

Qodo is built for structural, repo-wide refactors, and it shows. When I needed to migrate from a legacy Modal component to a new Dialog API, I asked Qodo to update the entire repo: replace Modal with Dialog and rename the isVisible prop to open. Instead of a vague search-and-replace, Qodo generated a complete multi-file diff I could review line by line. It correctly updated imports, props, and even JSX formatting.

The above snapshot shows the diff Qodo Gen Chat generated for UserSettingsModal.tsx. It replaced the Modal import with Dialog from @shared/ui, renamed the isVisible prop to open, and cleanly updated the JSX without touching any unrelated logic.
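When a migration like this can’t land in a single commit, one common stopgap is a small adapter. It accepts the legacy prop and maps it onto the new API, so untouched call sites keep working until the full diff is merged. The sketch below assumes hypothetical DialogProps and LegacyModalProps shapes; it is not the actual @shared/ui API.

```typescript
// Hypothetical prop shapes for the new Dialog and the legacy Modal.
interface DialogProps {
  open: boolean;
  title: string;
  onClose?: () => void;
}

type LegacyModalProps = Omit<DialogProps, "open"> & { isVisible: boolean };

// Accepts either shape and normalizes it to the new Dialog API.
function toDialogProps(props: DialogProps | LegacyModalProps): DialogProps {
  if ("isVisible" in props) {
    // Legacy call site: drop isVisible and map it onto open.
    const { isVisible, ...rest } = props;
    return { ...rest, open: isVisible };
  }
  return props;
}

console.log(toDialogProps({ isVisible: true, title: "Settings" })); // { title: 'Settings', open: true }
```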

d. Autocomplete & Context

To evaluate the autocomplete capabilities, I tested how Copilot, Cursor, and Qodo assist with generating a feature flag toggle handler. This reveals how well each tool handles context, naming conventions, and cross-file imports.

GitHub Copilot: Fast, Context-Aware Autocomplete

Copilot excels at fast, context-aware autocomplete, as long as you’re working within a single file. For example, inside NotificationBanner.tsx, if I define a status state with useState<'success' | 'error'>, Copilot will suggest the right conditional rendering for success and error states when I start writing JSX below.

It also picks up on naming conventions. If I start a handleInputChange function, it often completes the event handler with proper destructuring and setState calls, especially in controlled input components.
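Both patterns are easy to show without React. In this hypothetical sketch, bannerMessage() is the kind of exhaustive branch Copilot fills in for a 'success' | 'error' union. applyInputChange() is the pure core of a controlled-input handleInputChange: it destructures name and value from the event target and returns the next state. The minimal event type stands in for React’s ChangeEvent, and all names are illustrative.

```typescript
type BannerStatus = "success" | "error";

// Exhaustive switch: adding a third status without handling it becomes a type error.
function bannerMessage(bannerStatus: BannerStatus): string {
  switch (bannerStatus) {
    case "success":
      return "Your changes were saved.";
    case "error":
      return "Something went wrong. Please try again.";
    default: {
      const unreachable: never = bannerStatus;
      return unreachable;
    }
  }
}

// Minimal stand-in for React's ChangeEvent<HTMLInputElement>.
interface InputChangeEventLike {
  target: { name: string; value: string };
}

type FormValues = Record<string, string>;

// Pure version of a handleInputChange body: destructure the target, return new state.
function applyInputChange(values: FormValues, event: InputChangeEventLike): FormValues {
  const { name, value } = event.target;
  return { ...values, [name]: value };
}

const nextValues = applyInputChange({ email: "" }, { target: { name: "email", value: "a@b.co" } });
console.log(nextValues.email); // a@b.co
console.log(bannerMessage("success")); // Your changes were saved.
```

In a component, the handler would reduce to something like setValues((prev) => applyInputChange(prev, event)).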

Where it struggles is the cross-file context. If I’m inside a component that depends on a helper function from another package, say getFeatureNameById() from @shared/features, Copilot won’t know that function exists unless it’s already imported. It might try to recreate a similar one instead.

Cursor: Context Awareness Across Files

Cursor understands broader context across files, folders, and even different packages in a monorepo. If I start typing getFeature..., Cursor will recommend the right import path and the actual function from @shared/features based on how it’s used elsewhere in the codebase.

Autocomplete inside Cursor isn’t as instant as Copilot’s, but it’s often more accurate. When working inside BillingSettings.tsx, I asked Cursor to help generate a handler for toggling feature flags. It inferred the right flag keys from the API schema and even picked the correct boolean setter logic tied to our internal useBilling() hook.

Qodo: Prompt-Driven Code Generation

Qodo doesn’t offer inline autocomplete the way Copilot or Cursor do. Instead, its strength lies in structured, prompt-driven code generation that pulls context from your repo. What’s interesting is how it handles incomplete or missing context gracefully.

When I asked Qodo Gen Chat to generate a feature flag toggle handler using the useBilling() hook and a billing API schema, it didn’t hallucinate or guess. Instead, it scanned my filesystem, searched the /schemas and /hooks directories, and when it couldn’t find the actual files, it fell back to a well-documented, extensible code template. It clearly stated its assumptions, outlined how the real code should fit, and offered suggestions I could drop into a real component. Here’s the code it generated:

```ts
export type BillingFeatureFlagKey = 'advancedReports' | 'prioritySupport' | 'enterpriseAPI';

import { useBilling } from '../hooks/useBilling';

export function useFeatureFlagToggleHandler() {
  const { featureFlags, setFeatureFlag } = useBilling();

  function toggleFeatureFlag(key: BillingFeatureFlagKey) {
    const currentValue = featureFlags[key];
    setFeatureFlag(key, !currentValue);
  }

  return {
    toggleFeatureFlag,
    featureFlags,
  };
}
```

This wasn’t a random code dump. The structure was idiomatic, names were inferred reasonably (setFeatureFlag, featureFlags), and the logic was scoped properly with comments and usage guidance. If I had pointed it to the actual schema or hook file, Qodo would have fully integrated the real types and paths.

Qodo immediately attempted to locate the actual schema and hook files in the project.
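Because the generated hook only reads featureFlags and calls setFeatureFlag, its toggle logic can be exercised without React. The sketch below replaces useBilling() with a hypothetical in-memory createBillingStore(). That substitution is an assumption, since Qodo never located the real hook or schema; only the read-then-flip behavior mirrors the generated code.

```typescript
type BillingFeatureFlagKey = "advancedReports" | "prioritySupport" | "enterpriseAPI";
type FeatureFlags = Record<BillingFeatureFlagKey, boolean>;

// Hypothetical stand-in for whatever state useBilling() exposes.
function createBillingStore(initial: FeatureFlags) {
  let featureFlags: FeatureFlags = { ...initial };
  return {
    getFlags: (): FeatureFlags => featureFlags,
    setFeatureFlag(key: BillingFeatureFlagKey, value: boolean): void {
      // Replace rather than mutate, matching how React state updates work.
      featureFlags = { ...featureFlags, [key]: value };
    },
  };
}

// Same logic as the generated toggleFeatureFlag(): read the current value, flip it.
function toggleFeatureFlag(
  store: ReturnType<typeof createBillingStore>,
  key: BillingFeatureFlagKey,
): void {
  const currentValue = store.getFlags()[key];
  store.setFeatureFlag(key, !currentValue);
}

const billing = createBillingStore({
  advancedReports: false,
  prioritySupport: true,
  enterpriseAPI: false,
});
toggleFeatureFlag(billing, "advancedReports");
console.log(billing.getFlags().advancedReports); // true
```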

e. Testing & Coverage

I asked Copilot, Cursor, and Qodo to generate tests for a form validation scenario, ensuring they covered edge cases and integrated with custom test setups. This comparison highlights the tools’ effectiveness in generating relevant, comprehensive test cases.


GitHub Copilot: Limited Setup Awareness

Copilot can generate tests, especially if you follow standard naming conventions. If I create a new file called LoginPage.test.tsx, it will pre-fill a basic test suite using @testing-library/react, including render() and a placeholder assertion like expect(getByText(...)).toBeInTheDocument().

But it doesn’t know your test setup. For example, our components are wrapped in a custom AppProvider that injects routing, theme, and state context. Copilot doesn’t account for that, so its tests won’t run unless I manually wrap everything with renderWithProviders() from our test utils.

It’s also not great at generating edge-case tests. If a component has conditional logic based on user roles, Copilot rarely covers both branches unless you explicitly prompt for it.
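Covering both sides of a role check is mostly a matter of enumerating the cases. As a hypothetical illustration, consider canManageMembers below. The function is made up, though the admin/editor/viewer roles match the ones in the InviteUserModal tests in this post. A table-driven check makes a missing branch obvious:

```typescript
type Role = "admin" | "editor" | "viewer";

// Hypothetical permission check with a role-dependent branch.
function canManageMembers(role: Role): boolean {
  return role === "admin";
}

// Table-driven cases: one row per role, so both outcomes of the branch are covered.
const cases: Array<{ role: Role; expected: boolean }> = [
  { role: "admin", expected: true },
  { role: "editor", expected: false },
  { role: "viewer", expected: false },
];

for (const { role, expected } of cases) {
  const actual = canManageMembers(role);
  console.log(`${role}: ${actual === expected ? "pass" : "FAIL"}`);
}
```

In Jest, the same table maps directly onto test.each.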

Cursor: Enhances Test Generation with Contextual Updates

Cursor handles tests a bit better, especially when generating or updating them based on code changes. When I refactored InviteUserModal.tsx to support multiple roles, I asked Cursor to update the test cases. It not only added a second test to cover the new role flow, but also updated the mock API call and label expectations accordingly.

That said, Cursor doesn’t have built-in coverage tools or test intelligence. You still need Jest, React Testing Library, and a separate coverage reporter. But in terms of test code generation, it tends to produce more complete output, especially from a prompt like “write a test that checks form validation and error message display.”

Qodo: Method-Wise Coverage

When I used Qodo Gen Chat to write tests for InviteUserModal.tsx, covering validation errors and multiple user roles, it generated a complete test suite that reflected how the component actually works. The tests checked form rendering, input validation, role selection, and the submit flow, and it mocked the inviteUser API properly. One gap: it rendered with React Testing Library’s plain render() rather than our renderWithProviders() helper, so the suite assumes the modal doesn’t need app-level providers. The coverage wasn’t surface-level; it handled validation failures, API errors, and success flows, making the tests feel real and useful rather than placeholders. Here’s the test suite Qodo Gen generated in InviteUserModal.test.tsx:

```tsx
import React from 'react';
import { render, screen, fireEvent, waitFor } from '@testing-library/react';
import InviteUserModal from '../InviteUserModal';

// Mock the inviteUser API
jest.mock('../../api/inviteUser', () => ({
  inviteUser: jest.fn(),
}));

import { inviteUser } from '../../api/inviteUser';

describe('InviteUserModal', () => {
  const onClose = jest.fn();

  beforeEach(() => {
    jest.clearAllMocks();
    onClose.mockClear();
  });

  function openModal() {
    render(<InviteUserModal isOpen={true} onClose={onClose} />);
  }

  it('renders nothing when isOpen is false', () => {
    const { container } = render(<InviteUserModal isOpen={false} onClose={onClose} />);
    expect(container).toBeEmptyDOMElement();
  });

  it('renders form fields and buttons', () => {
    openModal();
    expect(screen.getByLabelText(/email/i)).toBeInTheDocument();
    expect(screen.getByLabelText(/role/i)).toBeInTheDocument();
    expect(screen.getByText(/invite/i)).toBeInTheDocument();
    expect(screen.getByText(/cancel/i)).toBeInTheDocument();
  });

  it('validates email and shows error for invalid email', async () => {
    openModal();
    fireEvent.change(screen.getByLabelText(/email/i), { target: { value: 'invalidemail' } });
    fireEvent.click(screen.getByText(/invite/i));
    expect(await screen.findByText(/invalid email/i)).toBeInTheDocument();
    expect(inviteUser).not.toHaveBeenCalled();
  });

  it('allows role selection', () => {
    openModal();
    const select = screen.getByLabelText(/role/i) as HTMLSelectElement;
    fireEvent.change(select, { target: { value: 'admin' } });
    expect(select.value).toBe('admin');
    fireEvent.change(select, { target: { value: 'editor' } });
    expect(select.value).toBe('editor');
    fireEvent.change(select, { target: { value: 'viewer' } });
    expect(select.value).toBe('viewer');
  });

  it('calls inviteUser API with correct data and shows success message', async () => {
    (inviteUser as jest.Mock).mockResolvedValueOnce({});
    openModal();
    fireEvent.change(screen.getByLabelText(/email/i), { target: { value: 'user@example.com' } });
    fireEvent.change(screen.getByLabelText(/role/i), { target: { value: 'editor' } });
    fireEvent.click(screen.getByText(/invite/i));
    await waitFor(() =>
      expect(inviteUser).toHaveBeenCalledWith({ email: 'user@example.com', role: 'editor' })
    );
    expect(await screen.findByText(/user invited successfully/i)).toBeInTheDocument();
    expect((screen.getByLabelText(/email/i) as HTMLInputElement).value).toBe('');
    expect((screen.getByLabelText(/role/i) as HTMLSelectElement).value).toBe('viewer');
  });

  it('shows error message if API call fails', async () => {
    (inviteUser as jest.Mock).mockRejectedValueOnce(new Error('fail'));
    openModal();
    fireEvent.change(screen.getByLabelText(/email/i), { target: { value: 'user@example.com' } });
    fireEvent.click(screen.getByText(/invite/i));
    expect(await screen.findByText(/failed to invite user/i)).toBeInTheDocument();
  });

  it('calls onClose when Cancel button is clicked', () => {
    openModal();
    fireEvent.click(screen.getByText(/cancel/i));
    expect(onClose).toHaveBeenCalled();
  });
});
```

As the code shows, it mocked the inviteUser API with jest.mock(), used render() and screen from React Testing Library, and organized the tests into a describe block with a beforeEach() that resets mocks between tests.

f. IDE & Workflow Integration

I assessed how Copilot, Cursor, and Qodo integrate into existing development workflows by testing their ability to assist with code generation and CI/CD compatibility. This comparison focuses on how each tool fits into the broader development process.

GitHub Copilot: Simple Integration within IDEs

Copilot integrates cleanly into VS Code, JetBrains, and Neovim. It respects your existing extensions, works in terminal-based editors like Neovim, and doesn’t conflict with linters or formatters. It’s fast and unobtrusive, which is why it’s easy to keep using it long term.

That said, its core experience lives inside your editor. Apart from GitHub’s separate Copilot CLI tooling, there’s no repo-wide automation and no integration into your CI/CD pipeline. It helps you write code faster, but it doesn’t plug into the broader development lifecycle.

Cursor: IDE with Built-in AI Tools

Cursor runs as a custom IDE, a forked VS Code build, which brings both advantages and friction. On the plus side, it has built-in tools for prompting AI actions, querying your repo, and managing AI history per file. It’s easy to ask things like “what changed in this PR?” or “refactor all components in this folder to use the new API.”

However, the downside is setup and compatibility. Some VS Code extensions (like Tailwind IntelliSense or GitHub Copilot itself) behave inconsistently. Also, Cursor can’t be used from the terminal or integrated into a CI pipeline. So while it’s a stronger AI IDE than Copilot, it’s not as flexible for teams that use different environments or need tooling consistency across dev, test, and review stages.

Qodo: IDE Plugin Enhancing Development Workflow

Qodo Gen integrates into Visual Studio Code and JetBrains IDEs as a plugin. It provides AI-powered code generation, test creation, and real-time code assistance inside your existing editor, so you can write code, generate tests, and review changes without switching contexts or adding tools. The goal is to improve code quality and developer productivity by delivering suggestions and automations right where you write code.

Conclusion

Cursor offers more advanced capabilities than Copilot, particularly for navigating and working across complex codebases, which makes it a potentially better fit for enterprise workflows. However, as a standalone IDE, it can run into performance issues such as lag or freezes. Its language support may not be as extensive as standard VS Code’s, and additional extensions are sometimes needed to reach parity with tools like Copilot or Qodo.

FAQs

What is the best Copilot alternative?

For teams working in complex codebases or regulated industries, Qodo is the best alternative to Copilot. It’s built for reliability, compliance, and auditability, not just code suggestions.

What are the limitations of Cursor?

Being a heavy IDE, it can become laggy, especially in larger projects. Its language support is also not as wide as VS Code’s.

What is the best Cursor alternative for an enterprise?

Qodo is the top alternative for enterprise use cases. It supports compliance needs, provides audit trails, and works inside GitHub pull requests, making it a better fit for regulated industries.

What is Copilot best for and what is not?

Copilot is best for speeding up small, focused tasks inside a single file. It’s not great at understanding large codebases, managing multi-file changes, or integrating into CI pipelines.

Can I use Cursor for enterprise code?

You can, but there are trade-offs. Cursor offers a privacy mode and is SOC 2 certified, but it doesn’t provide audit trails or compliance controls like RBAC, so it’s riskier for regulated environments.

Is Copilot secure and SOC2 compliant?

Partly. GitHub includes Copilot Business in its SOC 2 reporting, but Copilot is built for developer productivity first, not for enterprise compliance workflows.

Which AI coding agent is better: Roo Code or Cline?

Roo Code is great if you want more autonomous task execution, while Cline is better for developers who prefer a guided, step-by-step workflow. Each works well for different styles of coding.

Teams often pair Roo Code or Cline with Qodo, which focuses on the code-review side: checking architecture, quality, security, and cross-repo impact so the code produced by either agent is correct, consistent, and ready to merge.

Which AI coding agent is better: Cline or Windsurf?

Cline is great for developers who like a transparent, step-by-step workflow, while Windsurf focuses more on speed and tighter IDE-style integration. Both can be effective depending on how hands-on you want the coding experience to be.

Teams often pair Cline or Windsurf with Qodo, which covers the code-review side: catching architectural issues, quality gaps, security risks, and cross-repo impacts so the code from either agent is validated before it’s merged.