Sanity Testing That Saves You Hours: A Modern Guide for Senior Engineers

TL;DR

- Sanity testing is a quick, focused validation after bug fixes or small updates, confirming that critical user paths still work. For example, after fixing an authentication bug in a microservices platform, I ran sanity checks on login, token generation, and session handling, while skipping unrelated modules, and avoided a production outage.

- Unlike regression testing, which is broad and time-consuming, sanity testing is narrow in scope and optimized for urgent changes like hotfixes. It prevents unstable builds from slipping into production, saving teams from wasted QA cycles, costly rollbacks, and frustrated users.

- Traditional sanity testing worked for smaller apps with slow releases, but became impractical with today’s frequent deployments. Manual checks were repeated for every build, delayed hotfixes, introduced inconsistencies, and often missed edge cases.

- Automating sanity testing with AI code review tools like Qodo integrates sanity checks earlier into pull requests with features like /review and static analysis. Instead of waiting for manual sign-off, developers get immediate feedback on risky changes such as unstable login flows, missing edge-case tests, or potential SQL injection risks.

- Sanity testing is still very important, but its effectiveness now depends on automation integrated into the developer workflow. Running targeted checks during pull requests or as part of CI ensures hotfixes are validated instantly, unstable builds are caught before merging, and deployment pipelines stay reliable even under rapid release cycles.

In modern software delivery, production deployments have become far more frequent: 32.5% of development teams deploy weekly, and an additional 27.3% deploy multiple times a day. At that pace, a minor overlooked issue in a hotfix can derail entire workflows, frustrate users, and in some cases lead to costly rollbacks. This is where sanity testing becomes invaluable. It acts as a checkpoint to ensure that critical functionalities still work as expected after a new change or patch.

From my own experience leading release cycles in distributed systems like a microservices-based payment platform, I have often relied on sanity testing as a safeguard before merging urgent bug fixes. On one occasion, a seemingly harmless API update caused cascading failures in authentication services.

To avoid such incidents, I implemented a lightweight sanity test suite. The process was simple: I identified a minimal set of high-priority test cases tied directly to user-critical paths, such as login, transaction initiation, and session refresh. Instead of running the entire regression suite, I performed these targeted tests immediately after applying the hotfix in a staging environment.
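A minimal sketch of what such a suite can look like is shown below. It assumes a hypothetical `FakePaymentPlatform` class standing in for calls against a staging environment; the class, its methods, and the check names are illustrative only and do not come from the real platform.

```python
"""Sketch of a lightweight sanity suite over three user-critical paths."""
import secrets
from typing import Callable, Dict, List


class FakePaymentPlatform:
    """Hypothetical in-memory stand-in for a staging environment."""

    def __init__(self) -> None:
        self._passwords = {"alice": "s3cret"}
        self._sessions: Dict[str, str] = {}

    def login(self, user: str, password: str) -> str:
        if self._passwords.get(user) != password:
            raise PermissionError("invalid credentials")
        token = secrets.token_hex(8)
        self._sessions[token] = user
        return token

    def initiate_transaction(self, token: str, amount: int) -> dict:
        if token not in self._sessions:
            raise PermissionError("unknown session")
        return {"status": "pending", "amount": amount}

    def refresh_session(self, token: str) -> str:
        user = self._sessions.pop(token)  # the old token stops working
        new_token = secrets.token_hex(8)
        self._sessions[new_token] = user
        return new_token


def check_login(platform: FakePaymentPlatform) -> None:
    assert platform.login("alice", "s3cret")


def check_transaction(platform: FakePaymentPlatform) -> None:
    token = platform.login("alice", "s3cret")
    assert platform.initiate_transaction(token, 100)["status"] == "pending"


def check_session_refresh(platform: FakePaymentPlatform) -> None:
    old = platform.login("alice", "s3cret")
    new = platform.refresh_session(old)
    assert new != old
    # The refreshed token must work for a follow-up call.
    assert platform.initiate_transaction(new, 1)["status"] == "pending"


SANITY_CHECKS: List[Callable[[FakePaymentPlatform], None]] = [
    check_login,
    check_transaction,
    check_session_refresh,
]


def run_sanity_suite(platform: FakePaymentPlatform) -> Dict[str, bool]:
    """Run every check and record pass/fail instead of stopping early."""
    results: Dict[str, bool] = {}
    for check in SANITY_CHECKS:
        try:
            check(platform)
            results[check.__name__] = True
        except Exception:
            results[check.__name__] = False
    return results
```

Calling `run_sanity_suite(FakePaymentPlatform())` returns one pass/fail entry per check, which keeps the result easy to scan when a hotfix is waiting on it.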

Running a quick sanity test suite saved me from shipping that broken code to production, sparing both the engineering team and end-users from unnecessary downtime. It is this balance of speed and reliability that makes sanity testing a discipline every senior engineer should treat seriously.

Interestingly, this practice is frequently debated in developer communities. In one Reddit discussion on r/softwaretesting, a user explained the distinction clearly: regression testing ensures the product as a whole works as expected, while sanity testing is a subset that focuses on a smaller, core set of regression tests.

They added that regression might be run broadly before a release or to validate a refactor, whereas sanity tests are often used to quickly verify that a build passes essential functions or that a bug fix has not broken anything else when time does not allow for a full regression run. This perspective highlights why some teams still view sanity checks as indispensable, especially under tight delivery schedules.

In this blog, I’ll walk through what sanity testing really means, how it differs from regression and smoke testing, and why I see it as a key step in release cycles. I’ll also share how I approach sanity checks in CI/CD pipelines and show where tools like Qodo can help automate and simplify the process.

What is Sanity Testing?

Sanity testing is a focused, high-level check performed after introducing new changes or bug fixes. Its goal is simple: to quickly validate that the change works as intended and hasn’t broken core functionality.

For example, imagine a banking application where a bug fix was applied to correct an issue in the fund transfer module. After the fix, sanity testing would involve quickly checking whether a transfer between two accounts now works as expected. The QA engineer would also verify that essential linked functions, such as updating the balance and generating a transaction receipt, are intact.

They would not go through unrelated areas like loan applications or credit card requests, since those are outside the scope of the recent change. This way, sanity testing in software testing ensures that the specific change is stable and hasn’t disrupted the primary workflow before the application moves into full regression testing.
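The scoping rule in this banking example can be expressed as a small lookup. The sketch below assumes a hypothetical mapping from modules to check names; the module and check names are made up for illustration.

```python
from typing import Dict, List, Set

# Hypothetical mapping from a module to the sanity checks that cover it
# and the functions directly linked to it.
SANITY_SCOPE: Dict[str, List[str]] = {
    "fund_transfer": [
        "transfer_between_accounts",
        "balance_update",
        "transaction_receipt",
    ],
    "loans": ["loan_application_submit"],
    "cards": ["credit_card_request"],
}


def select_sanity_checks(changed_modules: Set[str]) -> List[str]:
    """Return only the checks tied to the modules touched by the change."""
    selected: List[str] = []
    for module in sorted(changed_modules):
        for check in SANITY_SCOPE.get(module, []):
            if check not in selected:
                selected.append(check)
    return selected
```

For a fix that only touches `fund_transfer`, the selection contains the transfer, balance, and receipt checks and leaves the loan and credit card checks out.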

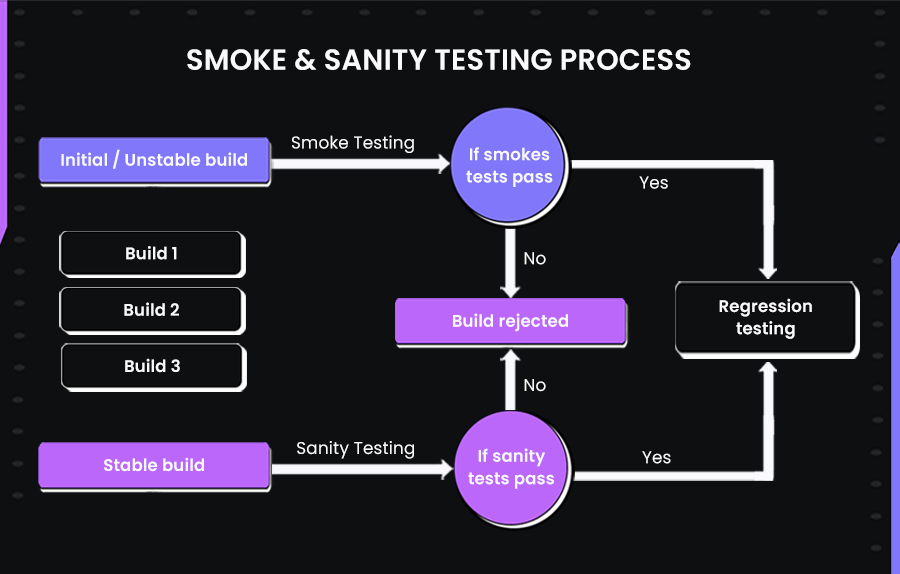

Sanity Testing vs Smoke Testing

As the above infographic shows, smoke testing is broad but shallow: it covers a wide range of core features, but only at a surface level. For example, in an e-commerce app, a smoke test might confirm that users can log in, search for products, add an item to the cart, and proceed to checkout, but it will not dive into edge cases like invalid promo codes or multi-currency transactions.

It happens right after a new build to confirm that the most essential features are working. Think of it as a gatekeeper: if the smoke test fails, the build isn’t even worth testing further.

Sanity testing comes later and is narrower in scope but deeper in focus because it drills into the specific change rather than touching everything. For instance, if a bug fix is applied to the payment gateway of an e-commerce site, the sanity test would go beyond simply checking if the “Pay Now” button works.

It would validate whether the transaction is processed correctly, receipts are generated, and balances are updated, while ignoring unrelated modules like product search or wishlist management. This makes it ideal for validating hotfixes such as a patch to fix a failed transaction flow or a broken login endpoint, without the overhead of running the full regression cycle.

Regression testing, by contrast, is the most comprehensive because it runs through the full suite of existing features, not just the latest change. For example, after introducing a new payment method in an e-commerce application, regression testing would not stop at validating checkout. It would also confirm that user authentication, product search, order history, refund processing, and even unrelated modules like wishlist or notification services still behave correctly. While essential before major releases, regression cycles can be resource-intensive and time-consuming.

Taken together, smoke testing, sanity testing, and regression testing form a layered safety net. Smoke tests establish whether a build is stable enough to test further, sanity tests validate the correctness of specific changes, and regression testing ensures overall system stability.
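One way to picture the three layers is as tags on the same pool of checks. The sketch below uses a plain decorator registry so it runs without extra dependencies; in practice a test runner's own tagging mechanism (for example, pytest markers) would usually play this role. The `SHOP` state and the check names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

# Hypothetical in-memory state standing in for a deployed e-commerce build.
SHOP = {
    "logged_in": True,
    "cart": ["sku-1"],
    "last_payment": {"status": "processed", "receipt_id": "r-100"},
    "wishlist": ["sku-9"],
}

_LAYERS: DefaultDict[str, List[Callable[[], bool]]] = defaultdict(list)


def layer(*names: str):
    """Register a check under one or more test layers."""
    def decorator(fn: Callable[[], bool]) -> Callable[[], bool]:
        for name in names:
            _LAYERS[name].append(fn)
        return fn
    return decorator


@layer("smoke", "regression")
def user_can_log_in() -> bool:
    return SHOP["logged_in"]


@layer("smoke", "regression")
def cart_accepts_items() -> bool:
    return len(SHOP["cart"]) > 0


@layer("sanity", "regression")
def payment_is_processed_with_receipt() -> bool:
    # Deeper check on the changed area: status and receipt together.
    payment = SHOP["last_payment"]
    return payment["status"] == "processed" and bool(payment["receipt_id"])


@layer("regression")
def wishlist_still_works() -> bool:
    return "sku-9" in SHOP["wishlist"]


def run_layer(name: str) -> Dict[str, bool]:
    """Run every check registered under the given layer."""
    return {fn.__name__: fn() for fn in _LAYERS[name]}
```

Here `run_layer("smoke")` touches the broad surface, `run_layer("sanity")` runs only the payment check tied to the change, and `run_layer("regression")` runs everything.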

Let me explain with a real-world example from one of my projects. I once had to merge a critical bug fix in the authentication module of a production system. Running the entire regression suite would have taken hours, which was not feasible given the urgency of the fix.

I ran targeted sanity tests on the login flow, token generation, and session handling. These checks confirmed the fix was effective and that core authentication remained stable. With that assurance, we deployed the patch immediately while the broader regression suite continued in parallel.

Traditional Approach to Sanity Testing

Sanity testing in its traditional form has largely been a manual and time-consuming activity. After a build was deployed, QA engineers or developers would validate key areas such as login, authentication, and core business actions to confirm the system was stable enough for further testing.

This meant walking through workflows step by step. For example, a login check went beyond clicking the sign-in button; it involved confirming that valid credentials generated a session token, that the token was stored correctly, and that restricted pages became accessible. Negative cases were also verified to ensure error messages displayed properly without exposing sensitive details.
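Those manual steps translate fairly directly into an automated check. The following is a sketch under stated assumptions: `AuthService` is a hypothetical in-memory service, and the credentials and messages are invented for the example.

```python
import secrets
from typing import Dict, List


class AuthService:
    """Hypothetical in-memory auth service used only for illustration."""

    GENERIC_ERROR = "Invalid username or password"

    def __init__(self) -> None:
        self._passwords = {"alice": "correct-horse"}
        self.sessions: Dict[str, str] = {}

    def login(self, username: str, password: str) -> str:
        # Same message for an unknown user and a bad password, so the
        # response does not reveal which accounts exist.
        if self._passwords.get(username) != password:
            raise PermissionError(self.GENERIC_ERROR)
        token = secrets.token_urlsafe(16)
        self.sessions[token] = username
        return token

    def get_restricted_page(self, token: str) -> str:
        if token not in self.sessions:
            raise PermissionError("Authentication required")
        return f"dashboard for {self.sessions[token]}"


def sanity_check_login(auth: AuthService) -> List[str]:
    """Walk the login flow and return a list of failure descriptions."""
    failures: List[str] = []

    # Positive path: token issued, stored, and accepted by a restricted page.
    token = auth.login("alice", "correct-horse")
    if not token:
        failures.append("no session token issued")
    if auth.sessions.get(token) != "alice":
        failures.append("token not stored against the user")
    if "alice" not in auth.get_restricted_page(token):
        failures.append("restricted page not accessible")

    # Negative path: bad credentials are rejected without echoing input.
    for user, password in [("alice", "wrong"), ("nobody", "wrong")]:
        try:
            auth.login(user, password)
            failures.append(f"bad credentials accepted for {user}")
        except PermissionError as exc:
            message = str(exc)
            if user in message or password in message:
                failures.append("error message echoes user input")
    return failures
```

An empty list from `sanity_check_login(AuthService())` means every step of the walkthrough passed.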

This approach worked reasonably well for smaller applications with limited features and slower release cycles, but it became impractical once systems grew more complex, with frequent builds and interconnected modules.

In agile and DevOps environments with frequent builds, repeating these checks by hand quickly became a bottleneck. Different teams often had their own ad-hoc scripts, results varied, and developers faced delays waiting for manual validation before merging or releasing fixes.

Limitations of the Traditional Approach

The traditional manual style of sanity testing worked reasonably well for smaller applications with limited features and slower release cycles. For example, in a simple HR portal with only a few modules like employee login, leave requests, and payslip generation, testers could manually validate each flow in under an hour. Similarly, in an e-commerce MVP with a handful of core actions such as product search, cart, and checkout, it was manageable to walk through the workflows after every build.

In those cases, testers or developers could afford to manually validate authentication, navigation, or key transactions without it becoming a significant overhead.

However, this method struggles to keep pace with modern software development practices. In agile and DevOps environments where builds are frequent and continuous integration is the norm, manual sanity testing quickly becomes a bottleneck. This is because each new build may affect different areas of the application, requiring multiple checks across functionalities specific to that build. Repeating these validations by hand slows down the release cycle and introduces inconsistency.

Moreover, time constraints can make the situation more complex. Sanity tests are often performed under pressure to validate a hotfix or small patch, leaving little room for thorough verification. In these cases, testers may rush through steps, skip edge cases, or focus narrowly on the intended fix, which increases the risk of missing defects.

Another limitation is the gap between what sanity testing validates and what developers need to debug effectively. Sanity tests typically confirm whether a feature appears to work, but do not provide insights into deeper structural issues.

Why There Is a Need for a Better Approach or Tool:

- Frequent Builds Create Bottlenecks: In a team shipping multiple builds a day, manually walking through login, checkout, or API workflows after every change becomes impossible without slowing down releases.

- Inconsistent Results Across Testers: One tester might validate token storage thoroughly, while another only clicks through the login page. This lack of consistency makes it harder to trust the results.

- Delays in Hotfix Deployments: When a production bug needs a quick fix, waiting hours for manual sanity checks blocks delivery and frustrates developers relying on rapid feedback.

- Missed Edge Cases: Manual scripts often cover only the happy paths. Subtle cases like expired sessions, failed payment retries, or API timeout handling are frequently skipped, letting defects slip through.
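The edge cases named in the last point are cheap to automate once they are written down. The sketch below assumes a hypothetical `SessionStore` with an injectable clock and a simple `retry` helper; neither comes from a specific library.

```python
import time
from typing import Callable, Dict, Optional, TypeVar

T = TypeVar("T")


class SessionStore:
    """Hypothetical session store with an injectable clock for testing."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._issued: Dict[str, float] = {}

    def issue(self, token: str) -> None:
        self._issued[token] = self._clock()

    def is_valid(self, token: str) -> bool:
        issued = self._issued.get(token)
        return issued is not None and self._clock() - issued < self._ttl


def retry(operation: Callable[[], T], attempts: int) -> T:
    """Call operation up to `attempts` times, retrying only on timeouts."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[TimeoutError] = None
    for _ in range(attempts):
        try:
            return operation()
        except TimeoutError as exc:
            last_error = exc
    assert last_error is not None
    raise last_error


def check_expired_session_rejected() -> bool:
    now = [0.0]
    store = SessionStore(ttl_seconds=60, clock=lambda: now[0])
    store.issue("t1")
    now[0] = 61.0  # move the fake clock past the TTL
    return not store.is_valid("t1")


def check_payment_retry_recovers() -> bool:
    calls = {"count": 0}

    def flaky_payment() -> str:
        calls["count"] += 1
        if calls["count"] < 3:
            raise TimeoutError("gateway timed out")
        return "captured"

    return retry(flaky_payment, attempts=3) == "captured" and calls["count"] == 3


def check_timeout_surfaces_after_retries() -> bool:
    def always_times_out() -> str:
        raise TimeoutError("gateway timed out")

    try:
        retry(always_times_out, attempts=2)
    except TimeoutError:
        return True
    return False
```

Injecting the clock keeps the expiry check instant and deterministic, which matters when the suite has to run on every build.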

Modern development pipelines require tools that can automate sanity testing, integrate with CI/CD systems, and provide fast, reliable feedback without relying solely on manual intervention. A good approach or tool not only accelerates validation but also reduces risk, ensuring stability even in high-velocity release cycles.

Why Do I Prefer Qodo For Sanity Checks?

Being an AI coding assistant, Qodo brings automation and intelligence into sanity testing by embedding directly into development workflows. Instead of relying on manual checks after a build, Qodo integrates at the pull request and code review stage, where issues can be identified before they reach deployment. This shift not only reduces the overhead of repetitive sanity checks but also aligns the process with modern CI/CD practices.

One of the key advantages of Qodo is its AI-driven code understanding, which allows sanity checks to move beyond surface-level validation. Developers can catch potential risks at the code level, ensuring that fixes or new features do not disrupt existing functionality. By combining static analysis, AI suggestions, and contextual review, Qodo provides a faster and more reliable way to verify build stability.

Capabilities Relevant to Sanity Testing

- RAG-driven code review: Qodo simplifies code review by using Retrieval-Augmented Generation for contextual understanding, validating whether a fix or feature aligns with project-specific best practices.

- Self-review and /review commands: Developers can quickly surface whether new changes break assumptions or introduce side effects, reducing reliance on manual inspection.

- Static analysis with AI suggestions: Unsafe or non-functional code changes are flagged immediately, ensuring that stability checks happen early in the pipeline.

- Dual publishing and suggestion tracking: Sanity checks are documented across pull requests, creating a reproducible trail of validation and improving collaboration among teams.

First-Hand Example: Sanity Testing with Qodo

For this exercise, I wanted to simulate adding a new feature to our FastAPI Log Ingestion Microservice and see how Qodo Gen and Qodo Merge can help with sanity testing. I decided to implement a source_type filter for incoming logs, which allows the service to process only logs from a specific source before normalizing them.

The feature required updating the log ingestion endpoint to accept a source_type query parameter, integrating this filter with the existing normalization logic, adding unit tests for various edge cases, and ensuring that OpenAPI documentation reflected the change.
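The service's code is not shown here, so the following is only a sketch of the filtering step in plain Python. The function names `normalize_log` and `ingest_logs` and the log field names are assumptions; in the FastAPI endpoint, `source_type` would arrive as an optional query parameter and be passed through to logic like this.

```python
from typing import Iterable, List, Optional


def normalize_log(raw: dict) -> dict:
    """Hypothetical normalization: lower-case the level, trim the message."""
    return {
        "source_type": raw.get("source_type", "unknown"),
        "level": str(raw.get("level", "info")).lower(),
        "message": str(raw.get("message", "")).strip(),
    }


def ingest_logs(logs: Iterable[dict],
                source_type: Optional[str] = None) -> List[dict]:
    """Filter by source_type (when given), then normalize what remains."""
    if source_type is not None:
        logs = [log for log in logs if log.get("source_type") == source_type]
    return [normalize_log(log) for log in logs]
```

The edge cases worth covering in unit tests follow from the signature: no filter at all, a filter that matches nothing, and incoming logs that lack a `source_type` field.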

Sanity Test with Qodo Gen: In-Editor

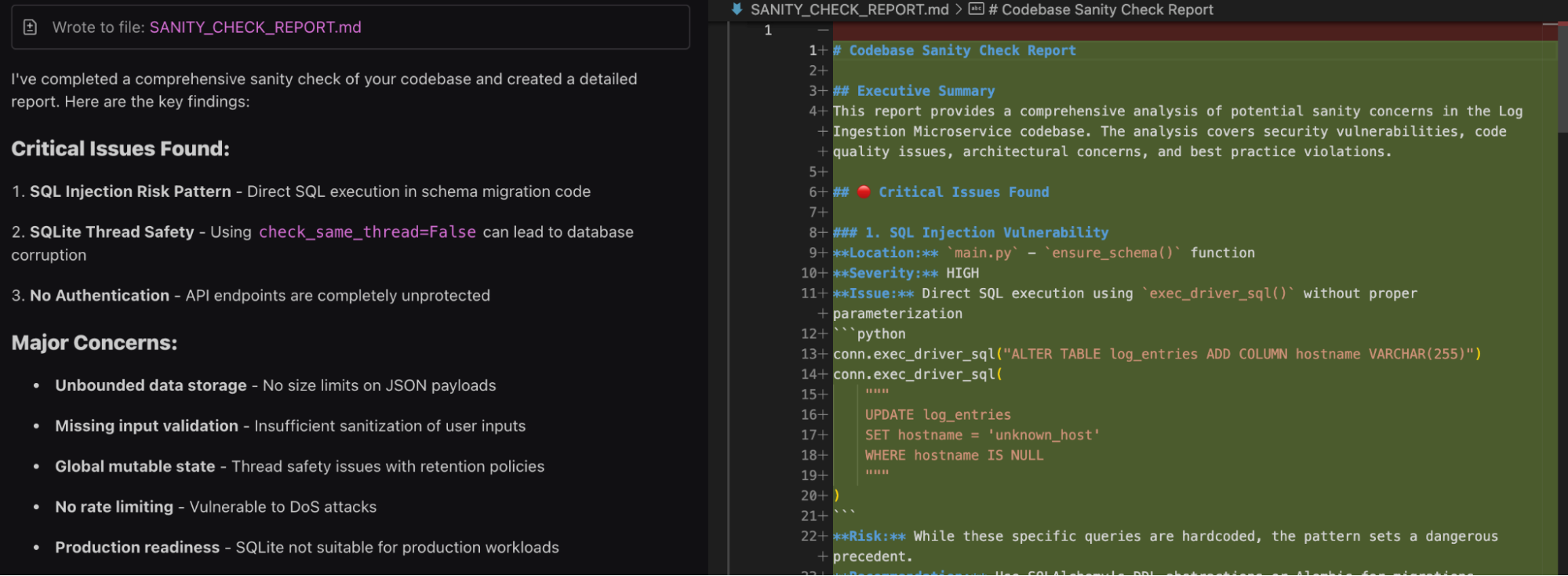

Before opening a pull request, I wanted to see how Qodo Gen surfaces sanity concerns in the editor itself, so I asked it to check my current codebase for them.

Qodo began by scanning the codebase for sensitive information or risky patterns, such as hardcoded credentials, direct exec or eval usage, and SQL injection risks. It then ran all existing unit tests to confirm runtime stability.

Afterward, Qodo performed a deeper static analysis for security and code quality issues. It flagged common concerns like SQLite thread safety (check_same_thread=False) and potential SQL injection patterns in schema migration code. It also checked for missing authentication and unbounded data handling.

Qodo then compiled the findings into a detailed SANITY_CHECK_REPORT.md file for my codebase, which made each concern easier to read and understand.

Here’s what Qodo answered:

- Critical Issues: SQL injection risk due to direct SQL execution, unsafe SQLite thread handling, and missing authentication on API endpoints.

- Major Concerns: No input validation, lack of rate limiting, reliance on global mutable state, and unbounded JSON payloads.

- Risk Level: Overall rated as MEDIUM-HIGH, acceptable for development use, but requiring substantial fixes before production deployment.

Qodo even included specific code snippets and recommendations for fixing each issue, such as parameterizing SQL queries, enforcing input validation, and adding authentication middleware.
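Qodo's actual snippets are not reproduced here. As a general illustration of two of those recommendations, the sketch below shows a parameterized query and a simple input check using Python's standard `sqlite3` module; the `logs` table schema and the allowed `source_type` pattern are assumptions made for the example.

```python
import re
import sqlite3
from typing import List, Tuple

# Assumed rule: short lower-case identifiers only.
_SOURCE_TYPE = re.compile(r"[a-z0-9_-]{1,32}")


def validate_source_type(value: str) -> str:
    """Reject values that do not look like a source identifier."""
    if not _SOURCE_TYPE.fullmatch(value):
        raise ValueError("invalid source_type")
    return value


def find_logs_by_source(conn: sqlite3.Connection,
                        source_type: str) -> List[Tuple[int, str]]:
    # The ? placeholder makes sqlite3 bind the value as data, so user
    # input is never interpolated into the SQL text.
    cursor = conn.execute(
        "SELECT id, message FROM logs WHERE source_type = ?",
        (source_type,),
    )
    return cursor.fetchall()


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical logs table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE logs ("
        "id INTEGER PRIMARY KEY, source_type TEXT, message TEXT)"
    )
    conn.executemany(
        "INSERT INTO logs (source_type, message) VALUES (?, ?)",
        [("nginx", "GET /"), ("app", "started")],
    )
    return conn
```

With binding in place, an input such as `nginx' OR '1'='1` is compared as a literal string and matches no rows, and the validation step rejects it before the query runs at all.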

I really liked how Qodo Gen acts as an automated gatekeeper, combining functional checks with static analysis and security validation, providing developers with a practical risk assessment before code review or release.

Sanity Test with Qodo Merge

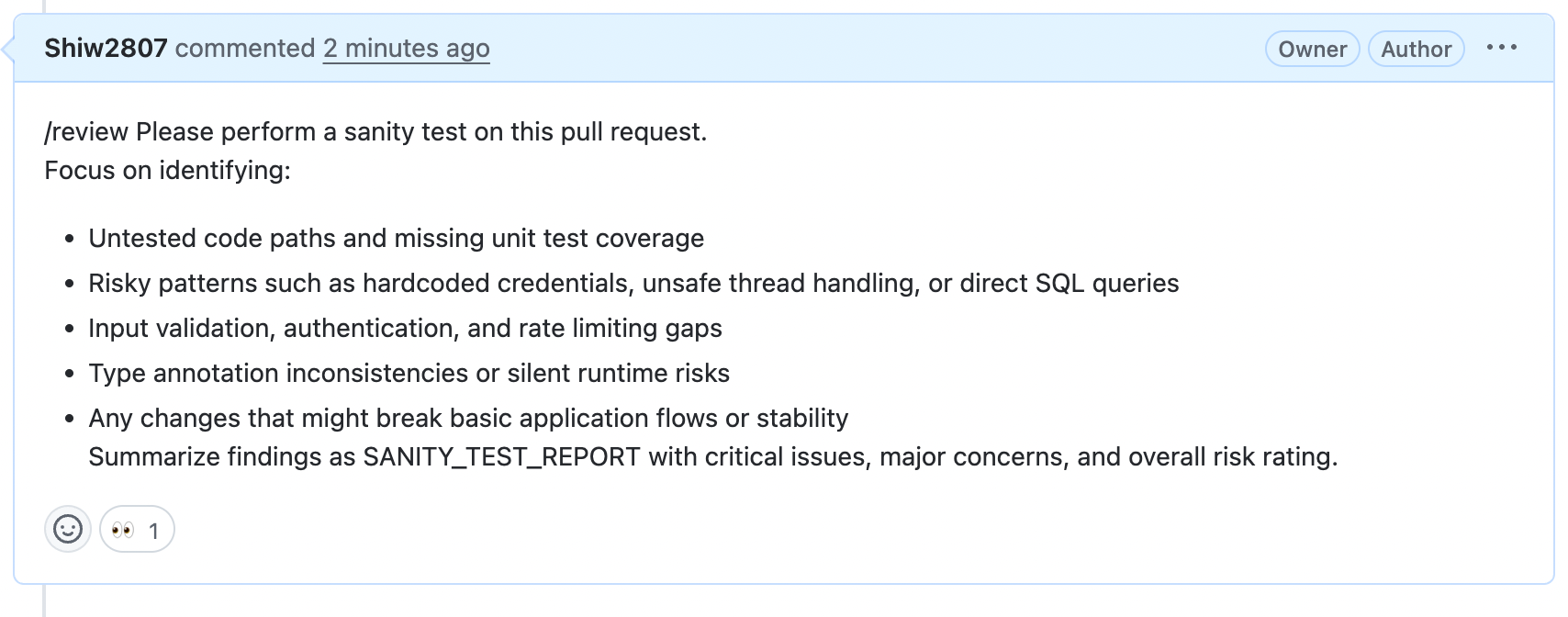

With the PR ready, I prompted Qodo Merge using the /review command to perform an automated sanity check. Here’s a snapshot of the prompt I gave:

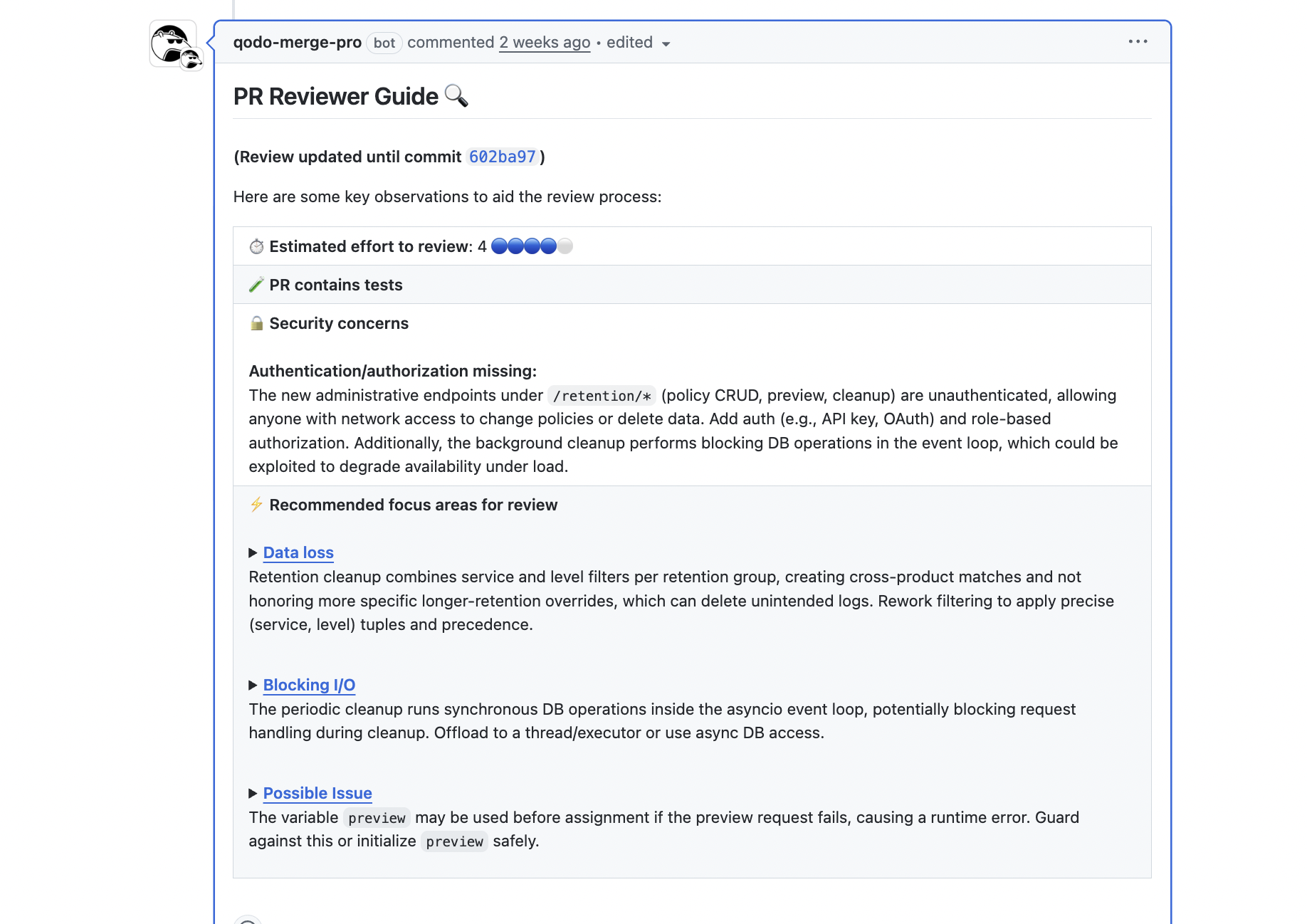

Qodo scanned the new changes for alignment with our coding practices, identified untested code paths, and flagged potential issues before running a full regression. Here’s a snapshot of the review:

In my case, Qodo highlighted that some edge cases for the source_type filter were missing in the unit tests and pointed out minor inconsistencies in type annotations. I applied the suggested fixes, added the missing test cases, and re-triggered /review. Qodo confirmed that all coding practices were followed, and the code passed sanity validation.

Using Qodo in this way significantly accelerated the sanity testing process. Instead of manually checking the new feature and hunting for untested paths, I relied on Qodo to catch potential issues early.

This workflow gave me confidence that the feature was robust and ready for full regression testing, while also reducing the risk of introducing regressions into the existing log ingestion functionality.

Best Practices: Using Qodo in Sanity Testing

Incorporating Qodo into your sanity testing workflow can significantly enhance code quality and streamline the development process. Here are some best practices to ensure effective utilization:

Automate Sanity Checks with /review Command

Leverage Qodo’s /review command to automatically assess pull requests for adherence to coding standards, test coverage, and potential security vulnerabilities. This automation ensures that each code change is evaluated consistently and promptly, reducing the manual effort required during code reviews.

Customize Review Instructions

Tailor the review process by providing specific instructions through the extra_instructions configuration. This customization allows you to focus Qodo’s analysis on particular areas of the codebase, such as new features or critical bug fixes, ensuring that the review aligns with your team’s priorities.

Integrate Auto Best Practices

Enable the Auto Best Practices feature to allow Qodo to learn from accepted code suggestions and adapt to your team’s coding patterns. This dynamic learning process helps maintain consistent code quality and reduces the likelihood of recurring issues.

Maintain a best_practices.md File

Document your team’s coding standards and guidelines in a best_practices.md file located in the repository’s root directory. This file serves as a reference for Qodo, ensuring that the suggestions align with your team’s established practices.

Regularly Update Best Practices

Periodically review and update the best_practices.md file to reflect evolving coding standards and practices. Keeping this document current ensures that Qodo’s suggestions remain relevant and beneficial to the development process.

Conclusion

Sanity testing may feel lightweight compared to full regression cycles, but its impact in modern software development is undeniable. In high-velocity environments where teams push frequent updates, sanity checks act as the safety net that balances speed with stability. By quickly validating critical flows after bug fixes or hotfixes, senior engineers ensure that unstable builds don’t waste QA cycles or reach production.

Traditional sanity testing has clear limitations: it is manual, inconsistent, and slow. With automation and AI-driven tools like Qodo, however, sanity testing can shift left into the development workflow. This not only accelerates validation but also strengthens confidence in rapid releases. For senior engineers, embracing sanity testing as a discipline and enhancing it with automation is key to building resilient, scalable release pipelines.

FAQs

How does Qodo Support Sanity Testing Compared to Traditional Methods?

Traditional sanity testing is often manual, repetitive, and inconsistent across teams. Qodo automates this process by embedding sanity checks directly into pull requests, using AI-driven static analysis and contextual code review. This shifts validation left in the pipeline, reducing the need for manual effort while catching issues earlier and more reliably.

Can Qodo be Integrated into Existing CI/CD Workflows for Sanity Testing?

Yes. Qodo is designed to fit seamlessly into modern CI/CD pipelines. It can run automated sanity checks at the pull request stage, during code review, or as part of build validation. This ensures that issues are identified before reaching deployment without adding extra overhead to existing workflows.

How does Qodo Ensure Consistency in Sanity Testing Across Teams?

Qodo enforces consistency by standardizing sanity checks through features like /review commands, AI-driven suggestions, and integration with a best_practices.md file in the repository. This eliminates the variability of manual checks and ensures that every code change is validated against the same agreed-upon standards across all teams.

Can Senior Developers use Qodo for Personal Sanity Checks Before Submitting a PR?

Absolutely. Developers can run Qodo’s /review command locally or on draft pull requests to validate their own changes before requesting a formal review. This allows senior engineers to self-check their code, catch potential issues early, and reduce back-and-forth during code reviews.

Does Qodo Replace Regression Testing or QA Processes?

No. Qodo does not replace regression testing or QA; it complements them. Sanity testing focuses on quick, high-level validation of recent changes, while regression testing ensures system-wide stability. Qodo strengthens sanity checks by automating and standardizing them, ensuring builds are stable enough before investing in full QA cycles or regression suites.

How does Sanity Testing Differ from Running Automated Unit Tests?

Unit tests focus on isolated functions or components, while sanity tests validate end-to-end flows tied to recent fixes or updates. Automated unit test improvement using large language models can make unit coverage smarter, but sanity testing still serves as a quick validation step to confirm that critical workflows remain stable.

Can Large Language Models Improve Sanity Testing Itself?

Yes, LLMs can improve sanity testing itself. While sanity testing traditionally targets workflows, large language models can generate or refine automated unit tests that cover edge cases often missed by humans. These improved tests can be run as part of a lightweight sanity suite, making the process faster and more reliable.

Where does Automated Unit Test Improvement Using Large Language Models Fit into CI/CD Sanity Checks?

In modern pipelines, LLMs can scan code changes and suggest additional unit tests before merging. These AI-suggested tests complement sanity testing by ensuring low-level stability, while the sanity checks confirm that high-priority user paths remain intact.