Top 7 Code Review Best Practices For Developers in 2025

TL;DR

- Effective code reviews catch bugs early, reduce tech debt, and improve architecture, but they also provide mentorship, share domain knowledge, and improve team velocity.

- Good code solves the right problem. Reviewers must assess whether the change aligns with real business requirements, not just whether it “works.”

- Code reviews are more effective when you track inspection rate, defect density, and conformance, and checklists help make the process repeatable and reliable.

- Give extra attention to critical code paths like auth, APIs, and shared components, because they affect more than the immediate change.

- Code review tools with contextual suggestions (like Qodo Merge) reduce manual effort on syntax and style, enabling reviewers to focus on deeper concerns like architectural boundaries, module cohesion, and adherence to layering principles.

Top 7 Code Review Best Practices

- Understand the Business Context

- Introduce Code Review Metrics

- Assess Architectural Impact

- Evaluate Test Coverage and Quality

- Balance Review Depth with Time Constraints

- Provide Constructive and Respectful Feedback

- Use Code Review Tools Effectively

Over the years, I’ve seen code reviews as one of the most high-leverage activities in the entire software development lifecycle. Not just for catching bugs or cleaning up syntax (those are table stakes), but for shaping how teams think, communicate, and scale software quality across the board.

As a Lead Developer, I’ve seen code reviews serve as a quiet force multiplier in every high-performing team I’ve worked with. They surface architectural flaws, like poor module separation, unclear data flow, or inefficient API design, before they become costly rewrites. Most importantly, they foster a culture of technical dialogue, where the context behind design decisions, edge cases, and historical trade-offs is shared and documented, and where mentorship happens organically through pull requests, reinforcing practical code documentation best practices in day-to-day work.

I’ve conducted code reviews on everything from microservices at scale to machine learning pipelines, and the impact is consistent: teams that take code reviews seriously build better software faster, with fewer problems in production.

That’s why code review best practices matter for developers. In this post, I’m sharing the seven code review practices that consistently deliver the most value. These aren’t theoretical checklists; they’re lessons refined through real-world pressure, tight deadlines, and a relentless focus on code quality and team velocity.

Why Are Code Reviews Important?

Before moving to code review best practices, let’s see why code reviews are important in every development cycle. According to a TrustRadius survey, 36% of companies consider code reviews the most helpful way to improve code quality. Improving quality starts with collaborative story writing and continues with peer reviews that help identify problems before they reach production.

Prevent costly issues before they ship.

In my experience, code reviews aren’t just for catching small bugs. They help us find logic errors, such as the API being called several times instead of once, which can be a real problem in production, resulting in higher costs. Fixing these early can prevent such hassles.

Improve code quality through peer feedback.

No matter how seasoned the developer is, peer feedback often uncovers better ways to structure, simplify, or optimize code. I’ve seen even the most elegant implementations become more robust with a second pair of eyes challenging assumptions or offering alternatives.

Share knowledge across the team.

Code reviews offer a chance for knowledge transfer. Whether it’s understanding why a particular design pattern was chosen or surfacing lesser-known parts of the codebase, like utility layers, internal APIs, feature flags, legacy workarounds, or custom hooks, every review is a lightweight mentoring opportunity, especially for junior or new team members.

Maintain consistency in coding standards.

Consistency across modules isn’t just aesthetic; it directly affects maintainability. Reviews enforce coding conventions like using camelCase for variables, consistent prop ordering in React components, or following the project’s preferred folder structure (e.g., components/, services/, hooks/). This ensures the codebase feels cohesive, regardless of who wrote what.

Avoid technical debt by recognizing bad patterns early

I treat every review as a chance to prevent future headaches. For example, watch for repeated fetch logic that should live in a shared hook, tightly coupled logic, or over-engineered abstractions. Catching these early avoids quick fixes that often need to be reworked later.

Build accountability and promote cleaner coding habits

Knowing that code will be reviewed encourages developers to write more deliberate, self-explanatory code. For example, instead of naming a button click handler handleClick(), reviewers might suggest names like submitOrder() to clarify the intent. Over time, this raises the overall code quality and instils habits that benefit the entire team.

Top 7 Code Review Best Practices

Now that we have seen why code reviews are important, it’s time to look at each practice individually. Over the years, I’ve come to see code reviews as more than just a technical formality. They’re a tactical process that balances deep technical intelligence with a strong understanding of the product and user goals. The following code review best practices are ones I rely on consistently to deliver higher-quality, scalable, and maintainable code.

Understand the Business Context

Every line of code should serve a purpose that ties back to a product requirement or business outcome. When reviewing code, I always ask: Is this solving the actual problem? It’s easy to get lost in technical implementation and lose sight of the feature’s intent. If a proposed change doesn’t clearly map to a real user or business need, that’s a red flag.

Seek Clarification When Needed

If the rationale behind a code change isn’t obvious, I don’t guess; I ask. Commenting with a simple “What use case does this solve?” helps me understand what the code focuses on.

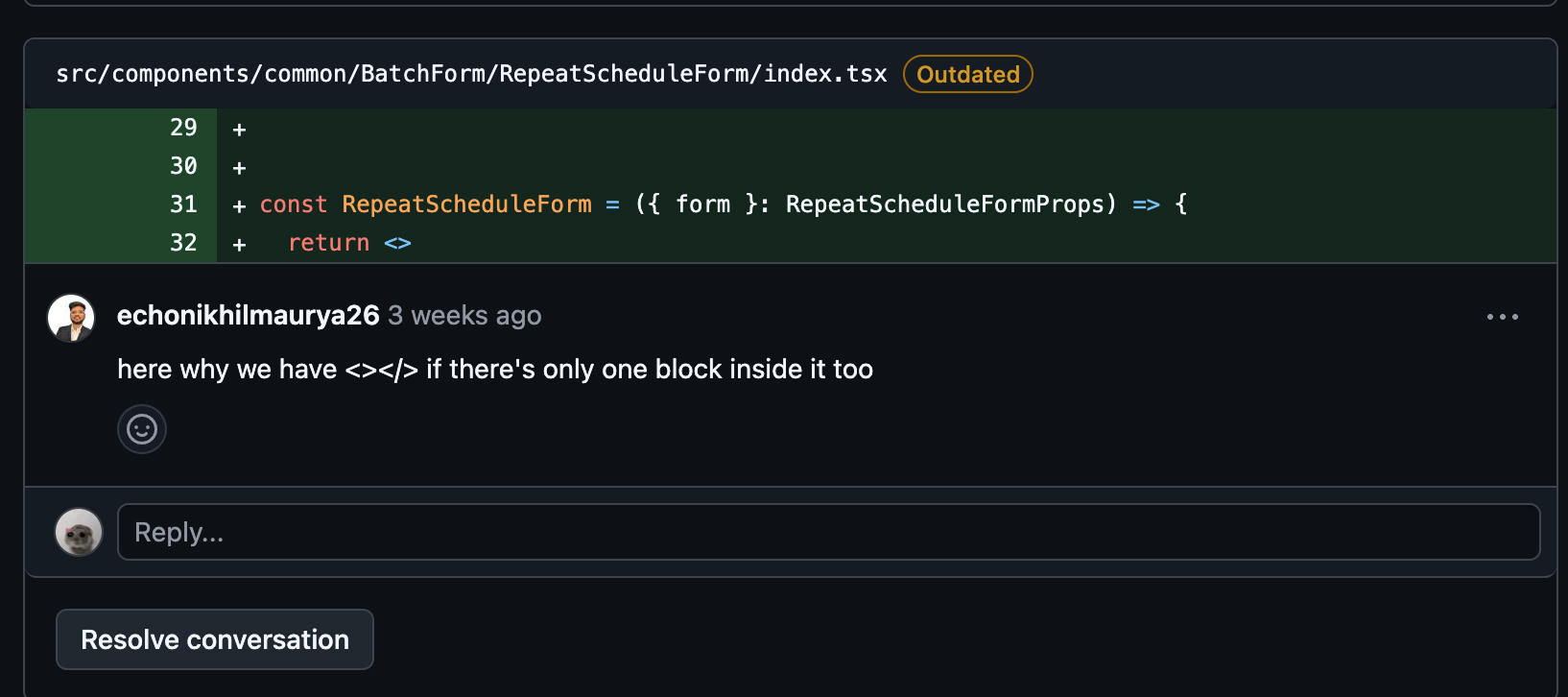

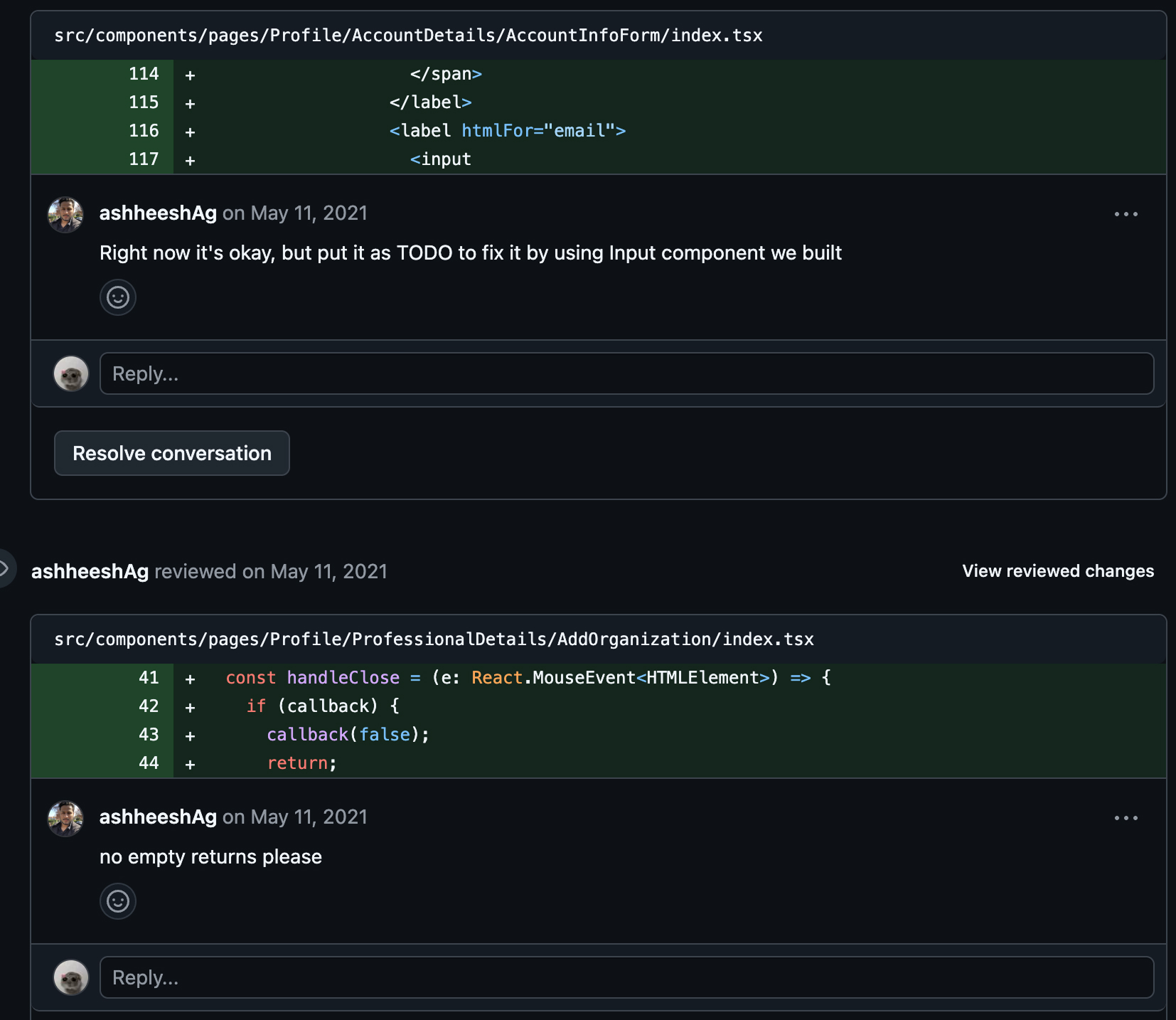

Look at the image above. It’s a small fix: the developer wraps the return value in a fragment (<></>) even though it contains only a single block element. This doesn’t cause any issues, but when we are aiming for clean and maintainable code, small details like this matter. Code is written for the teammates who read, review, and build on it. Consistent refactoring and formatting help developers understand the intent faster and reduce friction across the team.

Real-World Example

In one of our data-heavy projects, a developer submitted a PR optimizing a set of PostgreSQL queries in the backend code. On the surface, it looked like a win: reduced response time and fewer joins. After reviewing, I found that the new queries relied on cached data, introducing delayed updates.

It turned out that the product requirement explicitly demanded real-time analytics for critical dashboards, and the caching layer introduced delays of several seconds in reflecting the new data. By catching that during the review, we prevented stale data from surfacing to users. Understanding the why behind the code is what separates a good review from a great one.

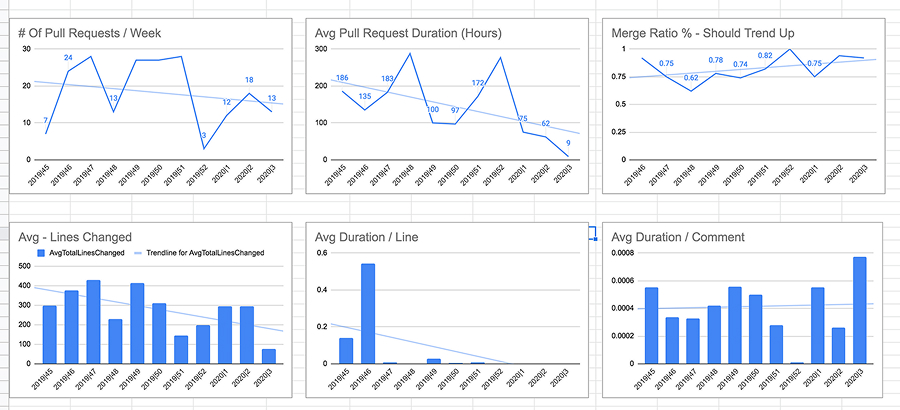
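To make the stale-data risk concrete, here is a minimal sketch of a time-based cache. It is not the project’s actual caching layer: the `TTLCache` class, the 5-second TTL, and the `orders_today` key are all hypothetical, and the clock is faked so the example runs instantly.

```python
from typing import Callable, Dict, Tuple


class TTLCache:
    """Hypothetical time-based cache; the clock is injectable for the demo."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float]):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, int]] = {}

    def get(self, key: str, load: Callable[[], int]) -> int:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # served from cache; may lag behind the source
        value = load()
        self._store[key] = (now, value)
        return value


fake_time = [0.0]                      # fake clock, advanced by hand below
source = {"orders_today": 100}         # stands in for the database
cache = TTLCache(ttl_seconds=5.0, clock=lambda: fake_time[0])


def load_orders() -> int:
    return source["orders_today"]


first = cache.get("orders_today", load_orders)   # loaded from the source
source["orders_today"] = 101                     # new data arrives
fake_time[0] = 2.0
stale = cache.get("orders_today", load_orders)   # cache hit: old value
fake_time[0] = 6.0
fresh = cache.get("orders_today", load_orders)   # entry expired: reloaded
print(first, stale, fresh)                       # 100 100 101
```

The middle read is the one a reviewer needs to spot: the query is faster, but for the lifetime of the cache entry the dashboard shows a number that is no longer true.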

Introduce Code Review Metrics

From experience, I’ve learned that you can’t improve what you don’t measure. Code reviews often feel subjective, but introducing a few targeted metrics can transform them into a more disciplined and insightful process.

Code review guidelines and metrics also point to risky areas and may aid in detecting security flaws, performance issues, or potential scalability problems. Plus, they can highlight areas where the team might not be fully aligned.

Below are some common metrics for a PR raised for review:

Metrics give us a feedback loop. They help identify bottlenecks in the review cycle, such as delays in feedback, long approval times, or rework due to missed issues. They also point out areas with frequent defects, like specific modules or features, and help track if the review process is improving over time.

Key Code Review Metrics to Track

- Inspection Rate

Formula: Lines of Code ÷ Review Hours

This tells us how quickly reviews are being completed. If the inspection rate is much lower than the 150‑500 LOC/hr range, check whether the change set is huge or the code is especially tricky. In those cases, speeding up reviews isn’t the goal. Helping the author break down logic into smaller, well-named units is.

- Defect Discovery Rate

Formula: Defects ÷ Review Hours

A surprisingly low defect discovery rate isn’t always a win; it could mean reviewers aren’t digging deep enough or that pre-review test coverage is lacking. I’ve used this metric to spot blind spots in test cases and push for more rigorous review checklists.

- Defect Density

Formula: Defects ÷ kLOC (thousand lines of code)

This helps identify modules or teams that might need extra attention to code, either due to recurring bugs or structural complexity. I typically use this metric to prioritize reviews from junior devs or flag areas of the codebase that require deeper architectural oversight from senior engineers.
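As a rough illustration, the three formulas above can be computed from basic review data. The `ReviewStats` class and its field names are hypothetical and not tied to any tool mentioned here, and the numbers are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class ReviewStats:
    """Hypothetical record of a single review; field names are illustrative."""
    lines_of_code: int
    review_hours: float
    defects_found: int

    def inspection_rate(self) -> float:
        # Lines of Code / Review Hours
        return self.lines_of_code / self.review_hours

    def defect_discovery_rate(self) -> float:
        # Defects / Review Hours
        return self.defects_found / self.review_hours

    def defect_density(self) -> float:
        # Defects / kLOC, written as defects * 1000 / LOC
        return self.defects_found * 1000 / self.lines_of_code


stats = ReviewStats(lines_of_code=600, review_hours=2.0, defects_found=3)
print(stats.inspection_rate())        # 300.0 LOC per hour
print(stats.defect_discovery_rate())  # 1.5 defects per hour
print(stats.defect_density())         # 5.0 defects per kLOC
```

In this made-up case the inspection rate of 300 LOC/hr sits inside the 150-500 range discussed above, so the review pace itself would not be a concern.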

Assess Architectural Impact

The next PR review best practice is assessing the architectural impact of the code: not just what the code does, but how it fits into the bigger system. I’ve seen code that passes all unit tests and follows style guides but still causes long-term damage, because no one evaluated its architectural impact.

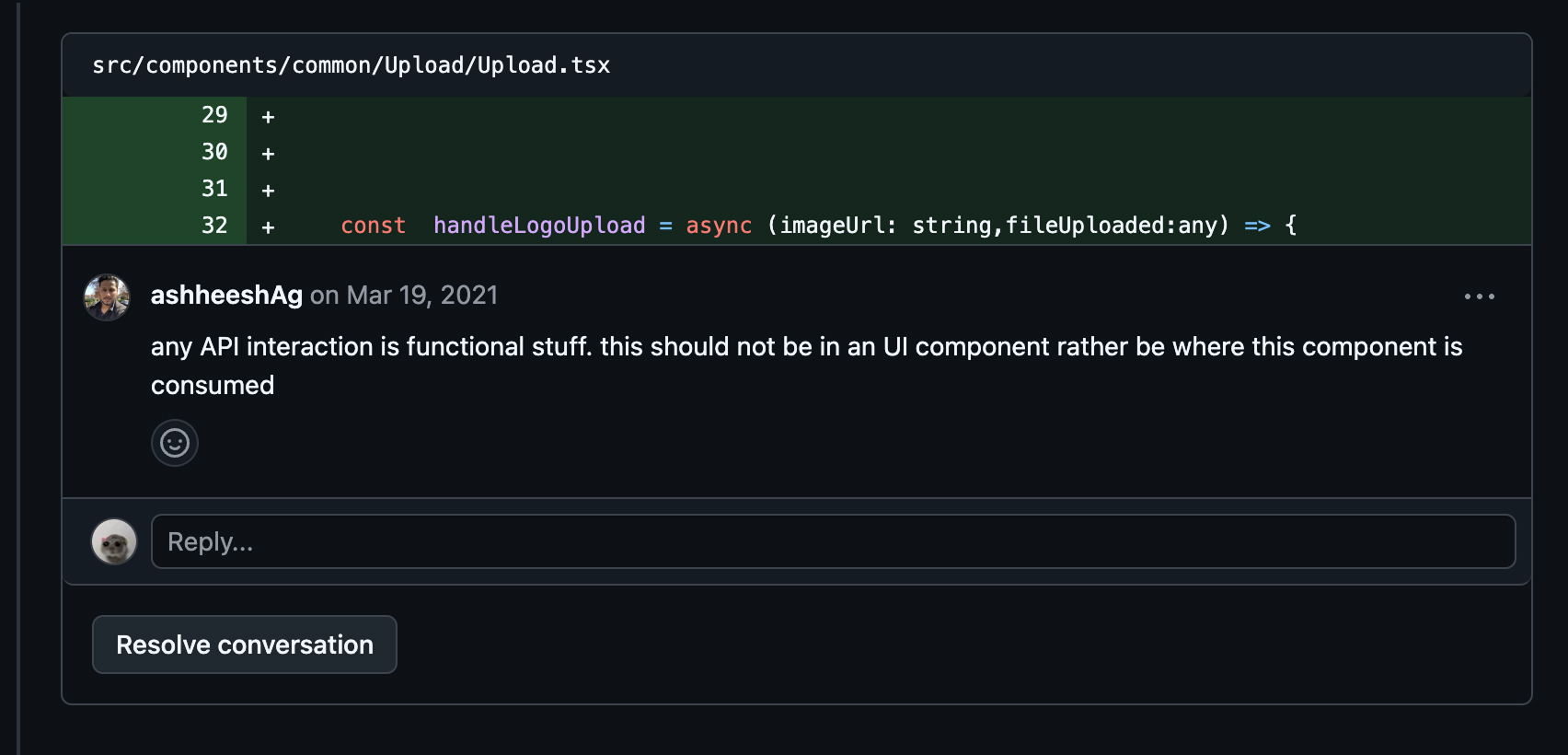

In the image above, I have added a comment that asks the developer to separate the functional stuff from the UI component. This improves readability, makes the component easier to test, and aligns with the separation of concerns principle, keeping the UI focused on rendering and the logic reusable elsewhere.

Whenever I review a PR, I always ensure that I use a checklist. Does the code change play nicely with the rest of the architecture? Even something as simple as adding an API endpoint or tweaking a shared component can have unexpected effects across a distributed system.

That’s why I always consider scalability, maintainability, and fault tolerance. It’s not just about what works now, but what will keep working as the system grows.

I really found this Reddit conversation to be a great explanation of how code reviewers become as accountable as the person who writes the code.

This conversation highlights a key mindset shift in code reviews: approval means shared responsibility. It’s not just about spotting issues, but owning the outcome. As engineers grow, they learn to review with accountability, not just curiosity.

Evaluate Test Coverage and Quality

One of the most overlooked yet high-impact code review best practices is assessing the depth and relevance of the tests: not just whether they exist, but whether they truly validate the behavior and edge cases of the code. Test quality shapes how the code is structured and how failures surface in production. That’s why I treat test review with the same rigor as the implementation.

Assess Test Effectiveness

Testing isn’t just about coverage metrics; it’s about coverage relevance. I don’t just check if tests exist; I look at what they assert. Weak tests that merely mock behavior or test implementation details instead of outcomes are easy to write and just as easy to break.

I flag tests that don’t truly validate business logic or that could pass even when the code is faulty. If a test doesn’t fail when it should, it’s worse than having no test at all.
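To make the distinction concrete, here is a minimal sketch. The `apply_discount` function and both tests are hypothetical; the first test would still pass if the discount math were wrong, while the second asserts on the actual outcome and on a rejected input.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_weak() -> None:
    # Weak: only checks that a float comes back, so it would still pass
    # if the function returned the original price unchanged.
    result = apply_discount(200.0, 25)
    assert isinstance(result, float)


def test_strong() -> None:
    # Stronger: asserts the business outcome and an out-of-range input.
    assert apply_discount(200.0, 25) == 150.0
    try:
        apply_discount(200.0, 150)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")


test_weak()
test_strong()
```

Both tests are green today, but only the second one would turn red if someone broke the calculation.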

Real-World Example

In a previous project, a developer pushed a PR that included tests for a file-processing module. All tests passed, but they only verified successful uploads due to empty returns. There were no assertions for corrupt file formats or permission failures. When these untested scenarios happened in production, the system silently failed. That incident reinforced my habit of asking a question during every review: What happens when this breaks?
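A sketch of what the missing assertions could have looked like is shown below. It is not the project’s code: `process_upload`, the `MAGIC` header, and `CorruptFileError` are hypothetical stand-ins, and the permission failure is simulated with `unittest.mock` rather than real file permissions.

```python
import os
import tempfile
from unittest import mock

MAGIC = b"DATA"  # hypothetical file signature for this example


class CorruptFileError(Exception):
    """Raised when a file does not start with the expected header."""


def process_upload(path: str) -> int:
    """Hypothetical processor: returns payload size, raises on bad input."""
    with open(path, "rb") as handle:
        content = handle.read()
    if not content.startswith(MAGIC):
        raise CorruptFileError(f"unexpected header in {path}")
    return len(content) - len(MAGIC)


def expect_raises(exc_type, func, *args) -> bool:
    """Tiny helper so the example runs without a test framework."""
    try:
        func(*args)
    except exc_type:
        return True
    return False


with tempfile.TemporaryDirectory() as tmp:
    good = os.path.join(tmp, "good.bin")
    bad = os.path.join(tmp, "bad.bin")
    with open(good, "wb") as f:
        f.write(MAGIC + b"hello")
    with open(bad, "wb") as f:
        f.write(b"garbage")

    # Happy path: the kind of case the original tests covered.
    assert process_upload(good) == 5

    # Failure paths: corrupt content and a simulated permission error.
    assert expect_raises(CorruptFileError, process_upload, bad)
    with mock.patch("builtins.open", side_effect=PermissionError("denied")):
        assert expect_raises(PermissionError, process_upload, good)
```

The point of the failure-path assertions is that errors surface loudly as exceptions instead of being swallowed, which is exactly what “What happens when this breaks?” is meant to probe.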

Senior developers must treat test evaluation as a first-class priority. Good tests aren’t just a safety net. They’re a design signal, a documentation tool, and the foundation of reliable releases.

Balance Review Depth with Time Constraints

Code reviews don’t always get the attention they deserve. Just because something works locally doesn’t mean it’s production-ready. Senior developers have a bigger responsibility not just to check if the code runs, but to ensure it aligns with project standards, uses the right libraries, and avoids shortcuts that might cause trouble later.

Balancing clean, thoughtful code with tight deadlines is never easy, but it’s part of building software that lasts.

Over-reviewing can block progress, while under-reviewing risks letting critical flaws slip through. As a senior developer, I’ve learned to optimize this balance by being strategic about where to go deep and where to skim.

Prioritize Critical Sections

Not all code deserves the same level of review. I prioritise security-sensitive areas, concurrency logic, data integrity flows, and any code that touches shared infrastructure.

Real-World Example

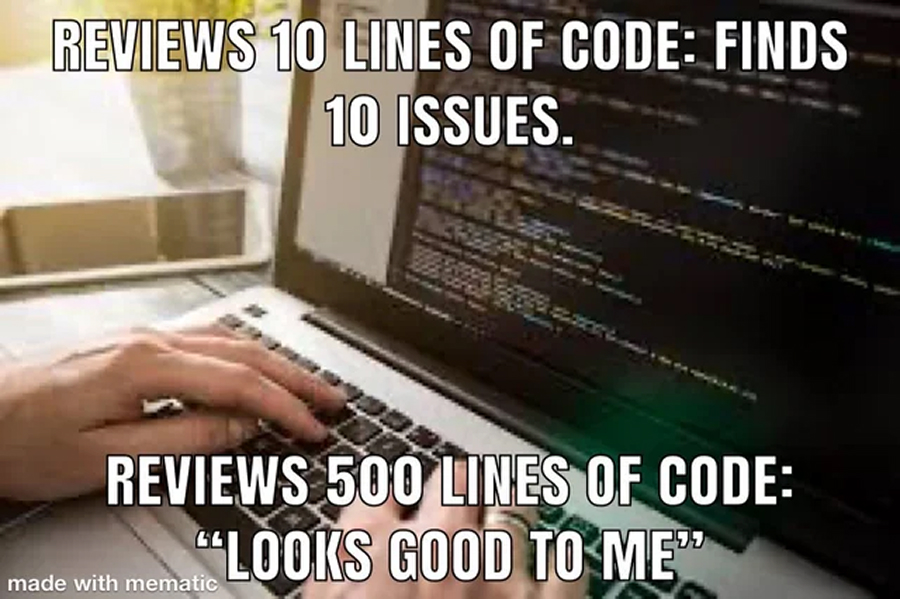

On one project, a colleague submitted a major PR introducing a new authentication module. Given how critical the change was, I set aside time for a thorough review, even with several other tickets in the queue. It’s easy to fall into the habit of skimming through and dropping a quick “LGTM” rubber stamp, especially when things seem fine on the surface.

However, skipping the deeper look can lead to overlooked issues and costly rework. “We’ll fix it later” often becomes a burden no one wants to carry. That’s why this meme felt so relatable:

As a senior architect, I’m responsible for catching even small defects, and the decision to review thoroughly paid off. During the review, I identified a subtle flaw in the token validation logic that could have enabled session hijacking under specific edge conditions. Catching it early saved us from a potentially serious data breach and a wave of downstream hotfixes.

Implement Review Checklists

To maintain consistency across reviews, I rely on lightweight code review checklists. These aren’t bureaucratic forms; they’re fast, high-signal prompts like:

- Does this affect security or data privacy?

- Are error conditions handled gracefully?

- Does the code degrade safely under load?

These checklists help me stay efficient, especially when reviewing under pressure. They also serve as a shared quality baseline for the team, so reviews don’t depend solely on individual judgment.

Provide Constructive and Respectful Feedback

I’ve seen that the tone and clarity of feedback can directly impact team morale, developer growth, and ultimately, the quality of our codebase. That’s why I treat every comment as an opportunity to mentor, not criticize.

Foster a Collaborative Environment

I make it a rule to keep my feedback specific, actionable, and tied to clear outcomes. Vague comments like “fix this” or “not clean” are unhelpful and frustrating. Instead, I aim for feedback that explains the “why” behind the suggestion. For example:

“Can we consider validating input early and returning fast to prevent null errors and simplify the logic?”

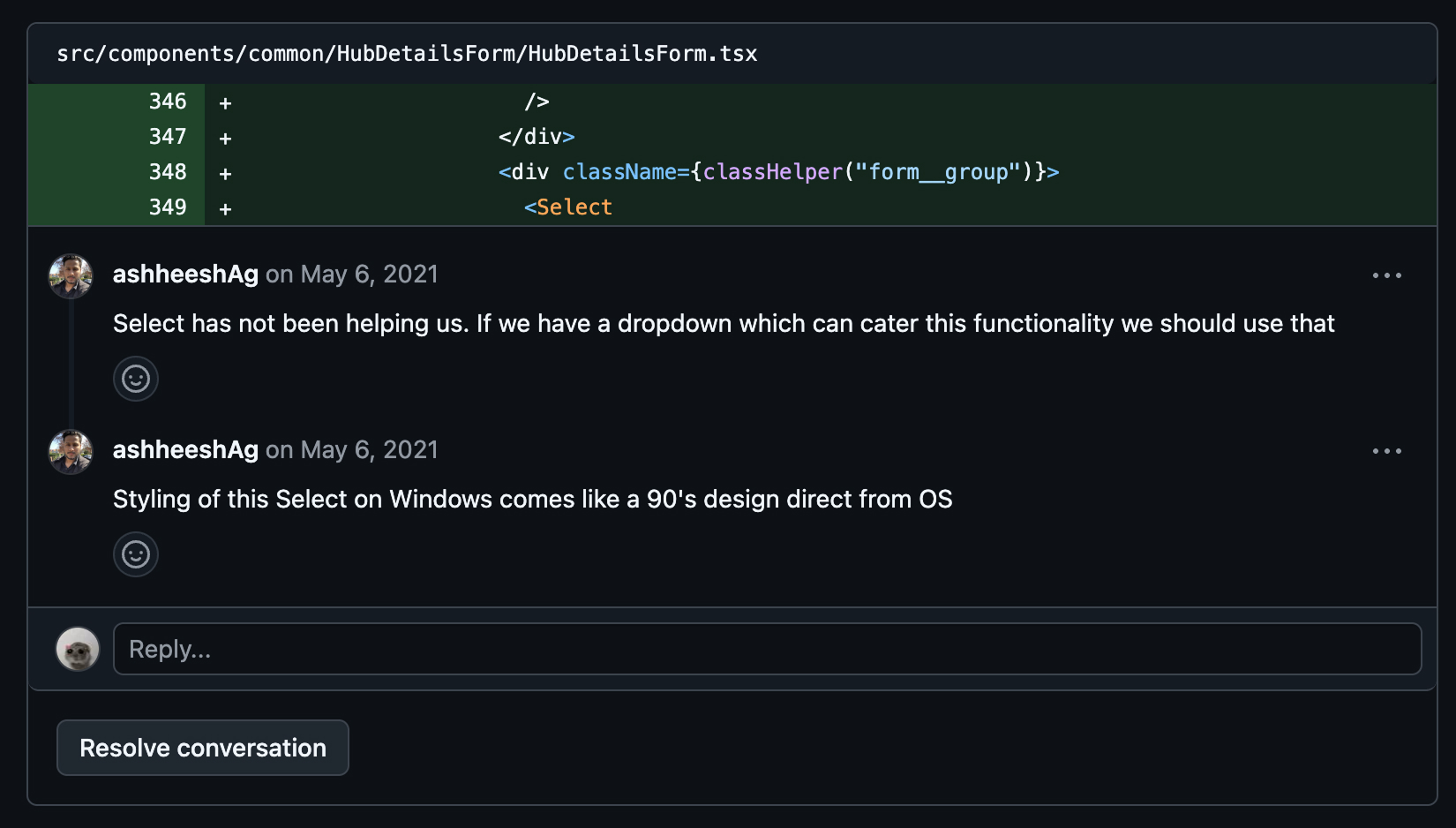

See the comment in the image above. Here, I phrase the feedback with ‘we’ so that the fix reads as a team effort. This frames it as a shared goal to improve the system, not just the code, and encourages collaboration rather than defensiveness.
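For readers unfamiliar with the “validate early and return fast” suggestion quoted above, a minimal sketch looks like this. The `format_username` function and the `"anonymous"` fallback are hypothetical and only illustrate the shape of the change.

```python
from typing import Optional


def format_username(raw: Optional[str]) -> str:
    """Hypothetical helper: validate first, then do the real work."""
    # Guard clauses: handle missing or empty input and return early.
    if raw is None:
        return "anonymous"
    cleaned = raw.strip()
    if not cleaned:
        return "anonymous"

    # Past this point `cleaned` is a non-empty string, so the main logic
    # needs no further None checks or nested conditionals.
    return cleaned.lower()


print(format_username(None))        # anonymous
print(format_username("  Alice "))  # alice
```

Pointing at a concrete shape like this in a review comment tends to be easier to act on than a bare “handle nulls”.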

Avoid Personal Critiques

One of the most important lessons I’ve learned is always to separate the code from the coder. It’s easy to use judgmental language, especially when the pressure is high. But statements like “this is wrong” or “you didn’t do this right” can alienate even the most experienced engineers. Instead, I reframe suggestions around outcomes or alternatives.

“Would refactoring this block using a strategy pattern improve readability and maintainability?”

It keeps the conversation constructive and focused on solutions.

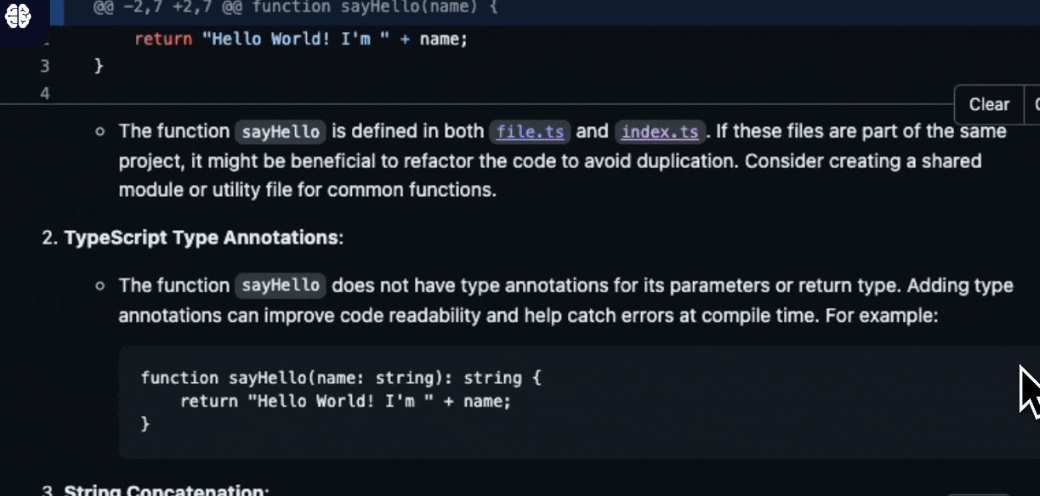
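As an illustration of what a strategy-pattern suggestion like the one quoted above might lead to, here is a minimal sketch. The shipping methods and rates are invented for the example; in Python, plain functions in a dictionary are often enough to express the pattern.

```python
from typing import Callable, Dict


def standard_shipping(weight_kg: float) -> float:
    # Invented rate: flat fee plus a per-kilogram charge.
    return 5.0 + 1.0 * weight_kg


def express_shipping(weight_kg: float) -> float:
    # Invented rate: higher flat fee and per-kilogram charge.
    return 10.0 + 2.0 * weight_kg


# Each strategy is a plain function; adding a new one does not require
# touching the calling code below.
SHIPPING_STRATEGIES: Dict[str, Callable[[float], float]] = {
    "standard": standard_shipping,
    "express": express_shipping,
}


def shipping_cost(method: str, weight_kg: float) -> float:
    try:
        strategy = SHIPPING_STRATEGIES[method]
    except KeyError:
        raise ValueError(f"unknown shipping method: {method}") from None
    return strategy(weight_kg)


print(shipping_cost("standard", 2.0))  # 7.0
print(shipping_cost("express", 2.0))   # 14.0
```

Compared with a growing if/elif chain, each rule lives in one small function, which is usually what a reviewer means by “readability and maintainability” here.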

Use Code Review Tools Effectively

I’ve found that smart use of code review tools doesn’t just save time; it elevates the quality of human reviews by letting us focus on what matters: architecture, design decisions, and edge case handling.

Before code hits my review queue, static analysis, linters, and type checkers (e.g., SonarQube, ESLint, mypy) should catch basic issues. This lets me focus on business logic, design, and failure modes, not stylistic details.

Real-World Example

One of the most impactful decisions I made in a previous project was integrating Qodo Merge directly into our CI pipeline.

It surfaced a critical function with missing tests, which would’ve likely been missed in a manual review due to time constraints. This insight led the developer to add valuable tests, which later caught a regression in staging before it reached production.

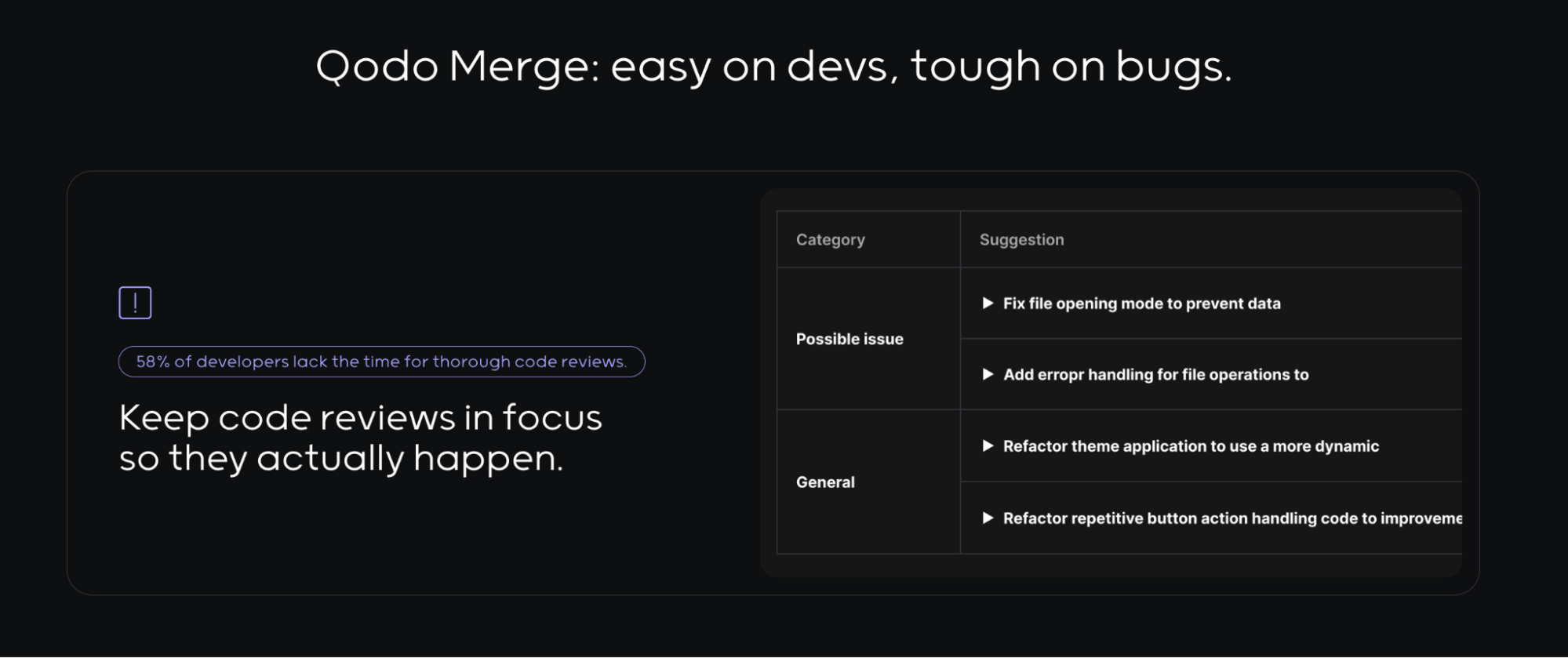

Tools like Qodo Merge aren’t about replacing developers; they’re about enabling us to focus our cognitive energy on the high-leverage tasks. They make automated code reviews faster and more consistent, and they free developers to focus on deeper decisions. When used effectively, they transform code reviews from a checklist chore into a strategic engineering activity.

What Should a Code Reviewer Focus On?

We have gone through the best practices for code review, but it’s still important to understand what a review should focus on. As a code reviewer myself, I focus on areas that have the biggest impact on security, performance, and reliability. Below are some important highlights that developers should keep in mind while going through code review best practices:

Sanitize Inputs

Always validate inputs to prevent vulnerabilities like SQL injection and XSS. One missed validation could lead to serious security risks, as I learned from a potential SQL injection vulnerability in an unvalidated query.
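A common defense against SQL injection is a parameterized query, which is what I would look for in a review. The sketch below uses Python’s built-in `sqlite3` module with an in-memory database; the `users` table and the malicious string are made up for the demonstration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

malicious = "alice' OR '1'='1"

# Unsafe: string formatting lets the input rewrite the query itself.
unsafe_sql = f"SELECT name FROM users WHERE name = '{malicious}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safer: a parameterized query treats the input purely as data.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (malicious,)
).fetchall()

print(len(unsafe_rows))  # 2: the injected condition matched every row
print(len(safe_rows))    # 0: no user has that literal name
conn.close()
```

Any query built with string concatenation or f-strings from user input is worth a review comment, even if the current caller happens to pass trusted values.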

Edge Case Coverage

Ensure the code handles rare conditions and boundary values. Anecdotally, I once caught a critical edge case in a microservice that would’ve failed under load, highlighting the importance of edge case testing.

Minimal Resource Usage

Optimize memory, CPU, and storage usage, especially in constrained environments. A recent review involved optimizing a costly operation in a loop, resulting in improved performance.
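One typical instance of a costly operation in a loop is a repeated linear search. The sketch below is a generic illustration, not the code from that review; the function names and data are hypothetical.

```python
from typing import List


def find_blocked_slow(user_ids: List[int], blocked: List[int]) -> List[int]:
    # `in` on a list scans it every time, so the total work grows with
    # len(user_ids) * len(blocked).
    return [uid for uid in user_ids if uid in blocked]


def find_blocked_fast(user_ids: List[int], blocked: List[int]) -> List[int]:
    # Build the set once, outside the loop; membership checks are then
    # constant time on average.
    blocked_set = set(blocked)
    return [uid for uid in user_ids if uid in blocked_set]


user_ids = list(range(10))
blocked = [2, 4, 6]
assert find_blocked_slow(user_ids, blocked) == find_blocked_fast(user_ids, blocked)
print(find_blocked_fast(user_ids, blocked))  # [2, 4, 6]
```

Both versions return the same result, so this is a change a reviewer can suggest with low risk when the lists are large.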

Why Qodo Merge is Best For Code Reviews

I brought Qodo Merge in for a small merge tweak at first, but it’s since become my default tool for full, production‑grade code reviews. The best thing about Qodo Merge is that it elevates code quality and integrity through its RAG-powered context, which quickly fetches relevant code references, eliminating the need for manual searches and speeding up the review process.

This ensures that I can focus on assessing the quality of the code changes rather than gathering context, helping maintain consistency within the larger codebase.

Qodo also provides actionable merge suggestions, highlighting risks and offering specific fixes. This allows me to prioritize important changes, such as performance improvements or security enhancements, ensuring high-quality and maintainable code.

Conclusion

We’ve covered key code review best practices that ensure high-quality, maintainable code. From understanding business context and assessing architectural impact to using code review metrics and ensuring test coverage, each step is crucial for scalable and clean code.

I have also discussed techniques like standardized checklists, prioritizing critical sections, and providing constructive feedback to streamline reviews. These practices help senior developers lead the way in maintaining functional correctness and promoting clean code habits.

Lastly, Qodo Merge is an essential tool for maintaining code integrity. Its RAG-powered context, actionable suggestions, and seamless scalability make it invaluable in driving efficient and consistent code reviews, especially as teams expand.

FAQs

How do you review code effectively?

To review code effectively, focus on business context, coding standards, performance, and test coverage. Provide constructive, actionable feedback and prioritize high-impact areas.

What is the best practice for reviewing code?

To review code effectively, focus on readability, maintainability, and scalability. Use a checklist to stay consistent, look out for unhandled edge cases, and lean on metrics to spot patterns. Clear comments and thoughtful suggestions help guide the author, not just point out flaws.

How to review code faster?

To speed up reviews, automate what doesn’t need human eyes, like linting and formatting, so you can focus on logic. Prioritize high-risk or high-impact areas, and encourage contributors to break down large PRs into smaller, focused changes that are easier to digest.

How do you evaluate a PR?

When evaluating a PR, start by asking: does this change solve the intended problem and fit the architecture? Then, assess design quality, code clarity, test coverage, and adherence to standards. Don’t just approve or reject; ask clarifying questions to ensure alignment with both technical and business goals.

How to speed up a code review?

To streamline the review process, integrate checks into your CI/CD pipeline, so that issues are caught early. Encourage a culture of small, frequent PRs and make reviewing a daily habit to avoid backlogs and reduce context-switching. Focus your attention on complex logic and high-priority components first.