Top 12 Code Review Tools To Ace Code Quality

TL;DR

- Even with automation, code reviews are important for keeping the code structure intact, catching edge-case bugs, ensuring readability, and maintaining architectural consistency.

- Code review tools help speed up workflows, keep things consistent, and improve code quality in fast-paced agile and CI/CD-driven environments.

- The best code review tools scale with your team, integrate into CI/CD, offer deep insights, and provide meaningful feedback for continuous learning.

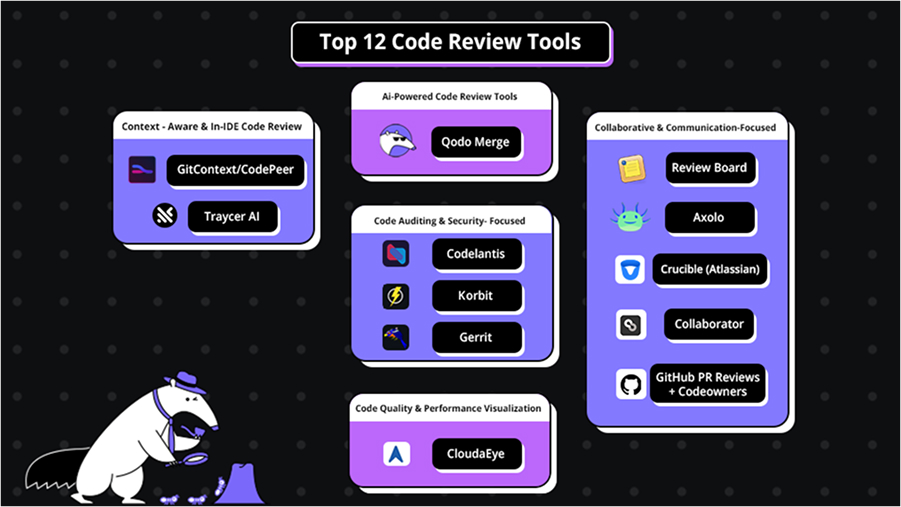

- This blog explains the top 12 code review tools, including Qodo Merge, Axolo, Review Board, Codelantis, Crucible (Atlassian), Collaborator, GitHub PR Reviews + Codeowners, Korbit, CodePeer, Traycer AI, Gerrit, and CloudaEye.

- Modern code review tools go beyond diffs, offering behavioral analysis and real-time suggestions to uphold quality without slowing development.

AI-Powered Code Review Tools

Collaborative & Communication-Focused

Code Auditing & Security-Focused

Context-Aware & In-IDE Code Review

Code Quality & Performance Visualization

What’s the one thing that silently disrupts a codebase’s integrity while teams stay busy shipping features? Let me go first: it’s poor code quality.

As a Lead Developer, I can tell you this: code reviews remain the most scalable way to enforce quality. Not static analyzers. Not post-deploy bug fixes. Just structured, consistent, high-context reviews done right.

Manual code reviews rely heavily on individual attention and can miss subtle issues under time pressure. Code review tools automate repetitive checks, highlight potential bugs instantly, enforce consistent coding standards, and facilitate clearer team communication. That makes it easier to manage large codebases and coordinate across distributed teams.

My journey with code reviews has grown from simple syntax comments on GitHub to robust, automated pipelines. Manual reviews worked early on, but as the team and codebase grew, linting bots handled style issues instantly and CI hooks caught errors before code hit review. That saved time and let us focus on what actually matters: logic and architecture.

In high-throughput engineering orgs, code review tools are no longer optional but essential. They bridge rapid iteration with long-term maintainability, helping developers ramp up quickly, focus on logic, and embed quality checks directly into the workflow.

In this blog, I’ll walk you through 12 of the best source code review tools I’ve used, evaluated, and relied on while working across multiple SDLC projects. I’ve grouped them into use cases, and yes, we’ll get into real examples and understand what makes a tool stand out.

Why Are Code Reviews the Last Line of Defense?

As lead developers, we look beyond whether the code simply works. We must ensure it meets client expectations, complies with internal policies, fits seamlessly into the overall system design, and stays maintainable months down the line.

Your tests might pass, and your linter might be green, but that doesn’t mean the code is maintainable, secure, or respects architectural boundaries like domain-layer separation, or avoids patterns that make future changes harder, like hardcoded configs, deep nesting, or tight coupling. This is exactly why code reviews are still our last and most human line of defense.

Manual reviews and traditional code validation tools like ESLint, Checkstyle, or basic CI linters share one limitation: tunnel vision. They focus heavily on what changed, usually limited to the Git diff. But real-world issues rarely stay confined to a single file or function. Code is interconnected. It lives in a web of dependencies, architectural boundaries, and business logic contracts.

Let me give you an example from a recent review I was involved in. A junior developer raised a PR that included this utility function:

export function isEmpty(value: any): boolean {
  return value === undefined || value === null || value === '';
}

When we first look at this function, nothing may seem wrong, and it indeed passed all unit tests. The tests covered empty strings, null, and undefined. However, during the review, my team realized this function would be used in different contexts, like form validations and backend responses, where other values such as arrays, objects, numbers, or even a whitespace-only string might also need to be treated as “empty.” Nothing in the function or its tests stated how it should behave for those inputs, or for a value like 0.
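One way the review feedback could be turned into code is to make each notion of “empty” explicit instead of stretching a single helper across contexts. The following is a minimal sketch, not the code the team actually shipped; the names `isBlank` and `isEmptyPayload` and the exact rules they apply are assumptions made for illustration.

```typescript
// Original behavior, kept for comparison: only undefined, null, and '' are empty.
function isEmpty(value: unknown): boolean {
  return value === undefined || value === null || value === '';
}

// Hypothetical variant for form input: whitespace-only strings also count as empty.
function isBlank(value: unknown): boolean {
  if (value === undefined || value === null) return true;
  return typeof value === 'string' && value.trim() === '';
}

// Hypothetical variant for backend payloads: empty arrays and objects with no own
// enumerable keys also count as empty. Numbers (including 0) and booleans never do.
// Note: instances such as Date have no own keys, so a real version may special-case them.
function isEmptyPayload(value: unknown): boolean {
  if (isBlank(value)) return true;
  if (Array.isArray(value)) return value.length === 0;
  if (typeof value === 'object' && value !== null) {
    return Object.keys(value).length === 0;
  }
  return false;
}
```

Keeping the three helpers separate means the name at each call site states which rule applies, which is the question the review raised in the first place.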

Unit tests might catch functional errors, but they won’t detect subtle semantic issues in the code. And a linter mostly gives you syntax and style corrections. Neither is enough to suggest design improvements, but detailed code reviews by experienced developers can spot these deeper problems and guide the code toward better structure.

This is a small example, but the same pattern repeats at scale. Across hundreds of PRs, code reviews become the backbone of system stability.

Even senior developers can fall into tunnel vision without structured reviews. But with the right review tools (and we’ll get into those next), you gain leverage: automated guardrails + human insight = resilient code.

How Do I Evaluate a Code Review Tool?

I evaluate automated code review tools beyond just functionality, such as syntax checks and error detection, focusing also on their ability to improve code quality, maintainability, and integration with team workflows. Here’s what I consider:

1. Does it Scale with Team and Complexity?

The tool must handle a growing team and codebases, especially in fast-paced environments like CI/CD-driven product development, frequent releases, or microservices-based architectures. It should work well whether I’m dealing with monorepos or polyrepos, enabling smooth collaboration across team members.

2. Can It Plug into Our Opinionated CI/CD Stack?

Our team uses a specific CI/CD pipeline with tools like Jenkins, GitHub Actions, and CircleCI. I need a code review tool that integrates smoothly into this setup without causing disruptions. The tool must fit into this system without additional overhead, whether for pre-merge checks, automated workflows, or reporting.

3. Does It Help Developers Grow Through Context-Rich Feedback?

Code reviews provide developers with a chance to learn and enhance their skills. I look for tools that provide context-rich feedback, not just simple comments like “line 42 is incorrect,” but deep, insightful suggestions that help developers understand the ‘why’ behind the changes and foster skill growth.

4. Can I Write Custom Rules or Logic That Fit Our Architecture?

Every organization has unique architectural patterns and conventions. I look for tools that allow me to create and enforce custom rules personalized to our specific architecture, ensuring that all code adheres to our unique guidelines, whether naming conventions, design patterns, or best practices.
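To make the idea of a custom architectural rule concrete, here is a minimal sketch of a check that keeps domain-layer files from importing infrastructure code. It is not tied to any tool in this list. The directory names `src/domain/` and `/infrastructure/`, the function name, and the regex-based import scan are all assumptions; a production rule would more likely walk the AST.

```typescript
// A violation found by the hypothetical layering rule.
interface LayerViolation {
  file: string;
  importedModule: string;
}

// Flags imports of infrastructure modules from files under the (assumed) domain directory.
function findLayerViolations(
  file: string,
  source: string,
  domainDir: string = 'src/domain/',
  forbiddenSegment: string = '/infrastructure/',
): LayerViolation[] {
  // Files outside the domain layer are not subject to this rule.
  if (file.indexOf(domainDir) !== 0) return [];

  // Matches `import ... from '...'` and bare `import '...'` statements.
  const importPattern = /import\s+(?:[^'"]*?\s+from\s+)?['"]([^'"]+)['"]/g;
  const violations: LayerViolation[] = [];
  let match: RegExpExecArray | null;
  while ((match = importPattern.exec(source)) !== null) {
    const importedModule = match[1] ?? '';
    if (importedModule.indexOf(forbiddenSegment) !== -1) {
      violations.push({ file, importedModule });
    }
  }
  return violations;
}
```

A check like this could run as a pre-merge step and fail the build whenever the returned list is non-empty.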

Top 12 Code Review Tools to Elevate Code Quality

Now that we’ve covered the requirements a code review tool should meet, let’s go through the top 12 tools one by one:

AI-Powered Code Review & Quality Assurance

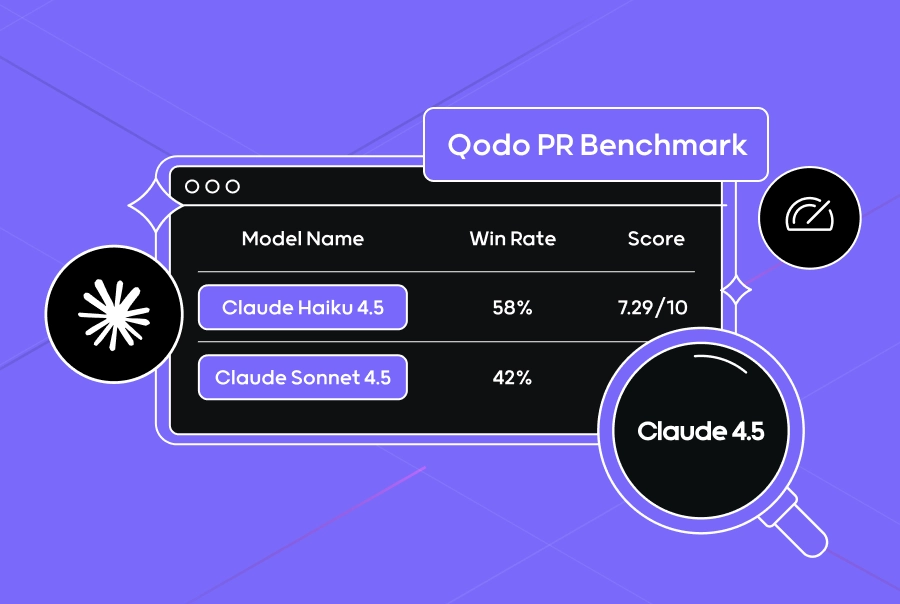

Qodo Merge

Qodo Merge is my top pick when it comes to improving code quality. As a lead developer working on enterprise-grade projects, my expectations from an automated code review tool go beyond “does this compile?” I need something that understands how each change impacts the broader system architecture, logic dependencies, and long-term maintainability. Qodo Merge meets this expectation. It’s an AI code review tool that acts as a graph-aware, contextually intelligent reviewer that aligns code with your architecture, logic flows, and unwritten team conventions.

Pros:

- Graph Awareness: Qodo builds a semantic map of your codebase and flags changes that violate cross-cutting concerns (e.g., tracing, observability, authorization layers).

- Behavioral Drift Detection: It highlights when your logic diverges from the established behavior, even if tests pass.

- Contextual Suggestions: AI code generation and real-time insights based on how similar problems were solved previously across your repo.

For example, I added a caching layer to an existing data retrieval function. The diff looked harmless:

function getUserData(id: string): Promise<User> {
  const cached = cache.get(id);
  if (cached) return Promise.resolve(cached);
  return db.findById(id);
}

Qodo Merge caught a regression that no test had:

“You’re bypassing the audit trail that’s logged inside getAndAudit() used previously in this endpoint.”
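A fix along the lines of that comment might keep the cache but make sure every read still lands in the audit trail. The sketch below is an illustration only: `getAndAudit` is named in the review comment, but its signature, the in-memory `userTable`, `userCache`, and `auditLog` stand-ins, and the `User` shape are assumptions added to keep the example self-contained.

```typescript
interface User {
  id: string;
  name: string;
}

// In-memory stand-ins (assumptions) for the real cache, database, and audit log.
const userCache = new Map<string, User>();
const userTable = new Map<string, User>([['u1', { id: 'u1', name: 'Ada' }]]);
const auditLog: string[] = [];

// Assumed shape of the pre-existing helper: record the access, then read the database.
async function getAndAudit(id: string): Promise<User | undefined> {
  auditLog.push(`read:${id}`);
  return userTable.get(id);
}

// Cached read that records every access, so a cache hit no longer skips the audit trail.
async function getUserData(id: string): Promise<User | undefined> {
  const cached = userCache.get(id);
  if (cached) {
    auditLog.push(`read:${id}`); // audit cache hits as well
    return cached;
  }
  const user = await getAndAudit(id); // cache miss goes through the audited path
  if (user) userCache.set(id, user);
  return user;
}
```

The point is that both branches produce an audit entry: the cache hit writes one directly, and the cache miss goes through the audited helper instead of calling the database on its own.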

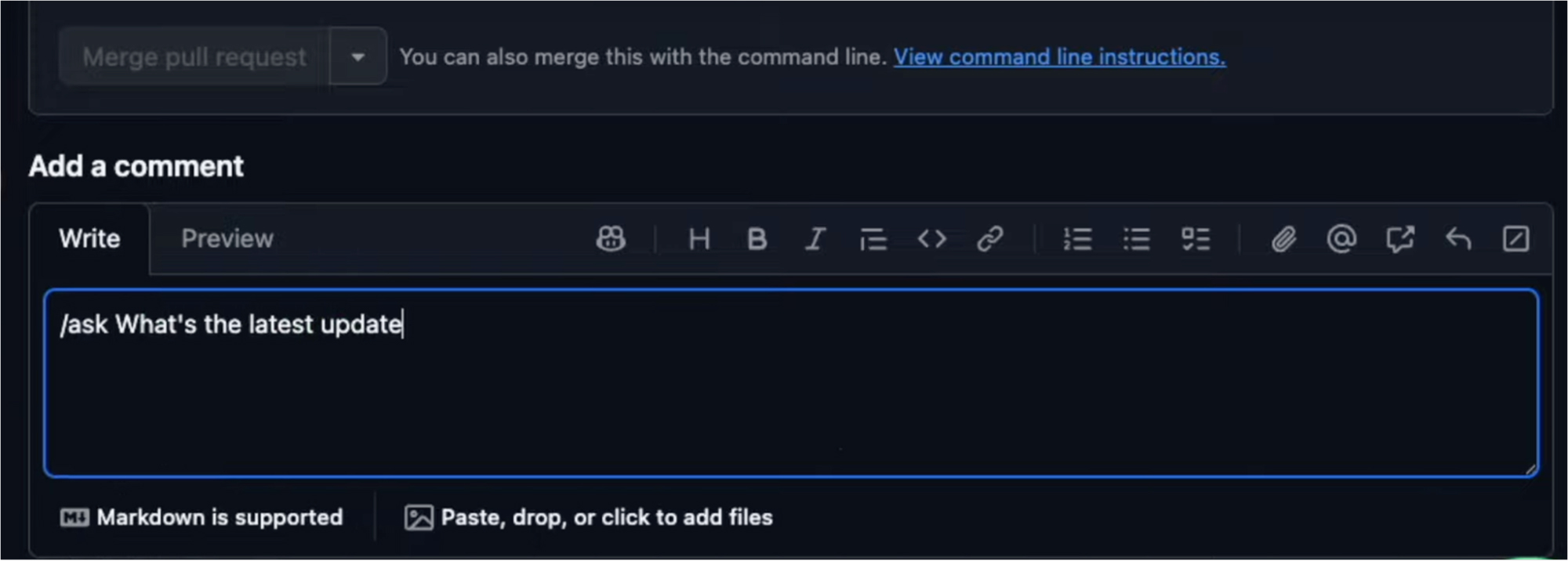

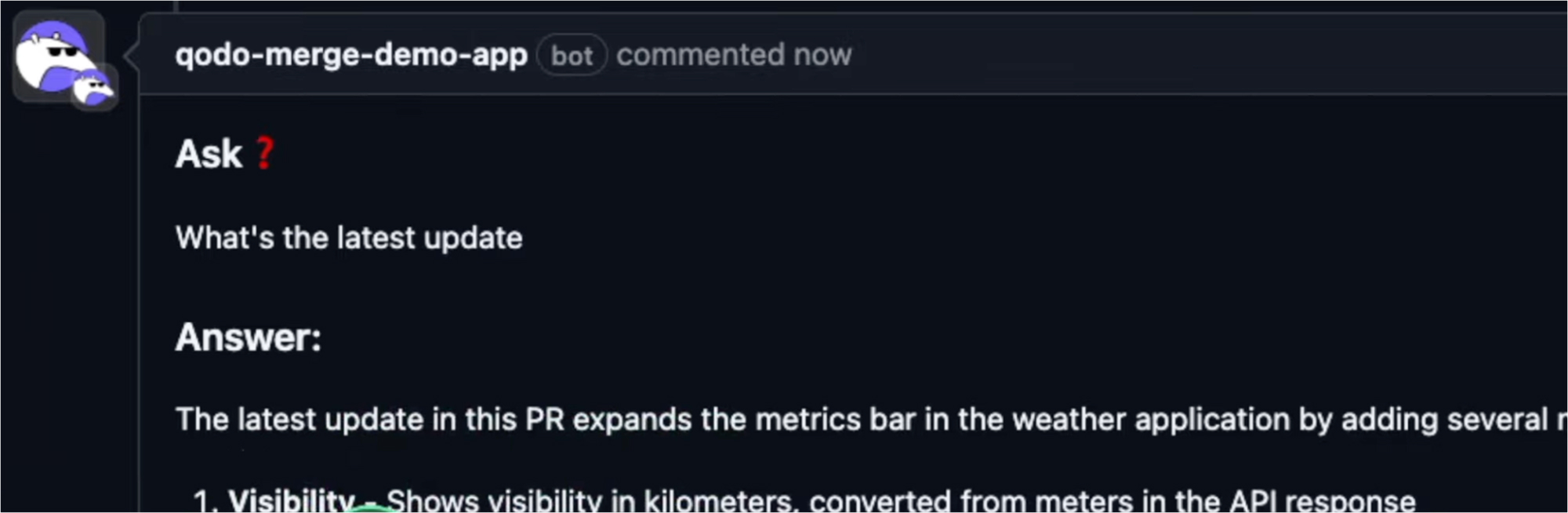

It knew our behavioral contract for fetching user data, and it called me out. Also, as a technical lead, I have to keep up with updates from my team members. That became much easier, because I can simply ask Qodo Merge on the PR itself, like this:

Within seconds, it replied with a summary of the updates in the PR, which saved me a lot of time.

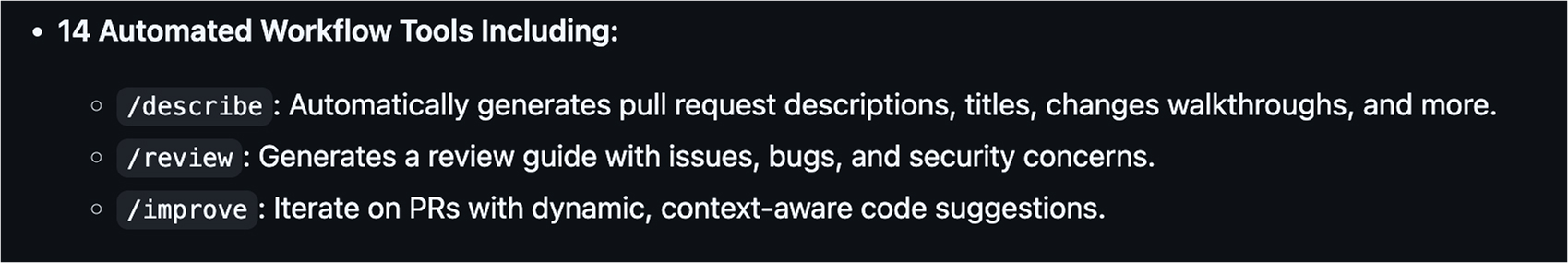

Being an open-source code review tool, Qodo Merge has different commands that I can use depending on my needs. Some of the most useful ones are given in the image below:

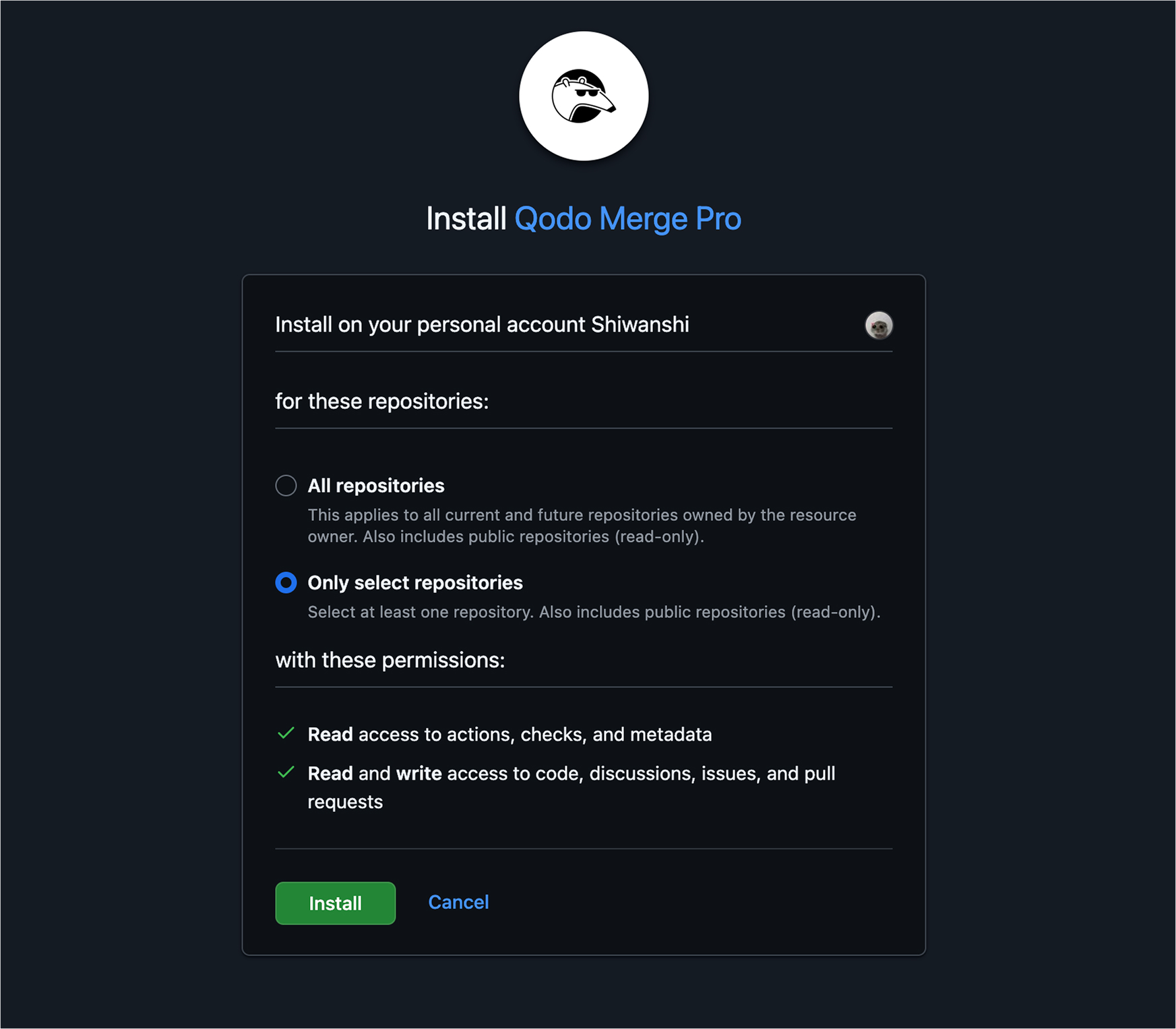

Moreover, I liked that you can select which repos Qodo Merge gets access to, and you can change that selection even after installing it.

Cons:

- Deep analysis can occasionally slow down CI if not scoped properly.

- New users might find its warnings overwhelming until they tune the ruleset.

Pricing:

Available for free for individual users and open-source projects. For teams, a paid plan starts at $15 per user per month, providing enhanced review features and integrations ideal for handling frequent code updates.

Collaborative & Communication-Focused

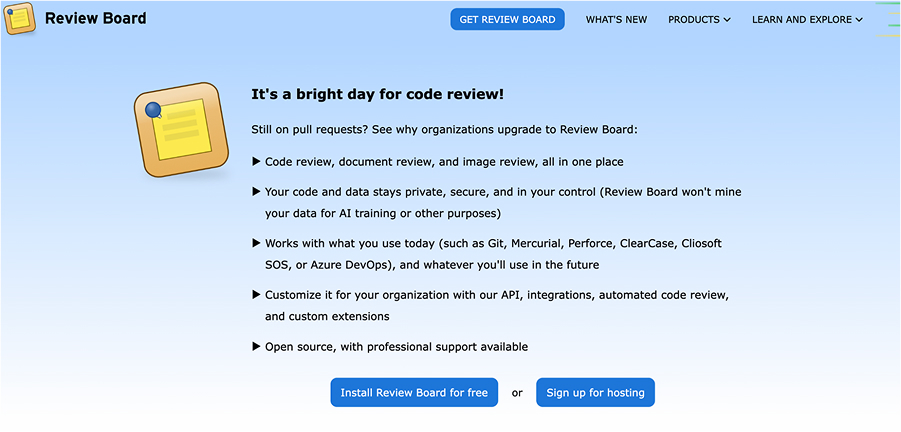

Review Board

Review Board is an online tool that helps developers collaborate by reviewing code before it’s merged. I used Review Board extensively at a fintech where Gerrit, Mercurial, Perforce, and ClearCase still ruled the backend. It is helpful in environments that rely on custom workflows and legacy VCS tools.

Pros:

- Provides smooth integration with SVN, Gerrit, ClearCase, and Perforce.

- Deep customization: You can wire in your review rules, dashboards, and even trigger external tools like Jenkins pipelines or Slack updates post-review.

Let’s see an example here. I created a custom dashboard that visualized the team’s review throughput. This was important in surfacing bottlenecks, such as when one component team blocked deployments due to delayed approvals.

I even wrote a plugin to track reviewer fatigue, looking at metrics like:

- Review response time

- Depth of comments

- Number of reviews per person per sprint
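The three metrics above can be computed from fairly simple review records. The sketch below shows the aggregation only and does not use Review Board’s extension API. The `ReviewRecord` shape is hypothetical, “depth of comments” is approximated as average words per comment (an assumption), and the caller is expected to pass in the records for a single sprint.

```typescript
// Hypothetical record of one completed review; field names are assumptions.
interface ReviewRecord {
  reviewer: string;
  requestedAt: number;         // epoch milliseconds when the review was requested
  respondedAt: number;         // epoch milliseconds of the reviewer's first response
  commentWordCounts: number[]; // word count of each comment the reviewer left
}

interface ReviewerStats {
  reviews: number;             // number of reviews in the given records
  avgResponseHours: number;    // mean time from request to first response
  avgWordsPerComment: number;  // rough proxy for comment depth
}

// Aggregates per-reviewer stats for the records passed in (for example, one sprint).
function summarizeReviewers(records: ReviewRecord[]): Map<string, ReviewerStats> {
  const totals = new Map<string, { reviews: number; responseMs: number; words: number; comments: number }>();
  for (const r of records) {
    const t = totals.get(r.reviewer) ?? { reviews: 0, responseMs: 0, words: 0, comments: 0 };
    t.reviews += 1;
    t.responseMs += r.respondedAt - r.requestedAt;
    t.words += r.commentWordCounts.reduce((sum, n) => sum + n, 0);
    t.comments += r.commentWordCounts.length;
    totals.set(r.reviewer, t);
  }

  const stats = new Map<string, ReviewerStats>();
  totals.forEach((t, reviewer) => {
    stats.set(reviewer, {
      reviews: t.reviews,
      avgResponseHours: t.responseMs / t.reviews / 3600000,
      avgWordsPerComment: t.comments === 0 ? 0 : t.words / t.comments,
    });
  });
  return stats;
}
```

A rising review count combined with falling words per comment is the kind of signal a fatigue dashboard could surface.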

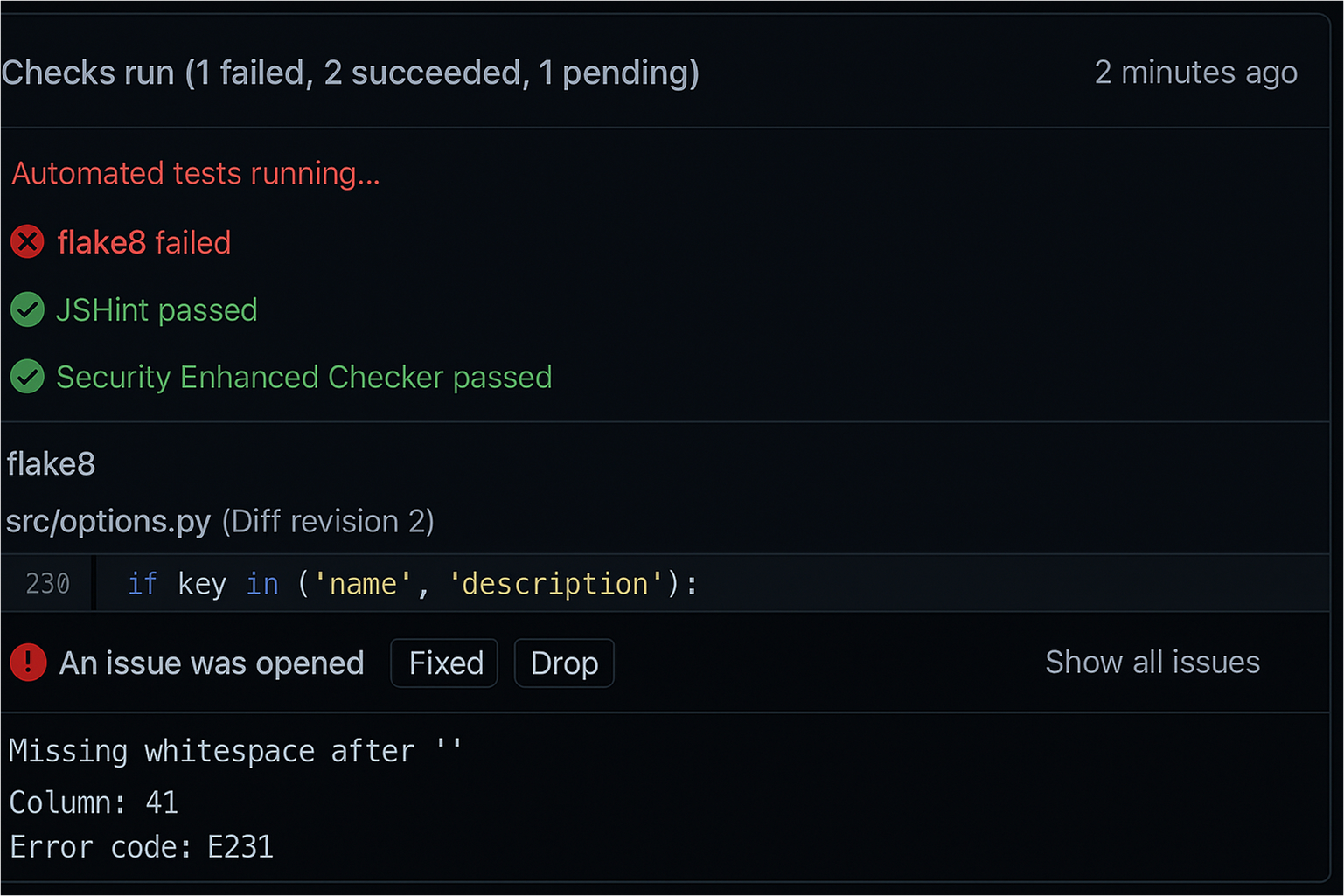

Review Board also helped me see where a change might be going wrong. For example, as shown in the image, JSHint passed but flake8 failed. That matters because, when you’re managing a team, you can’t check every aspect of everyone’s PR yourself.

Cons:

- The UI feels outdated by modern standards.

- Not ideal for cloud-native or microservices-first teams.

- Requires effort to maintain custom scripts/plugins.

Pricing:

Free to use for unlimited users, even in commercial settings. For teams seeking advanced functionality, the optional Power Pack, priced at $10 per user per month, unlocks premium features.

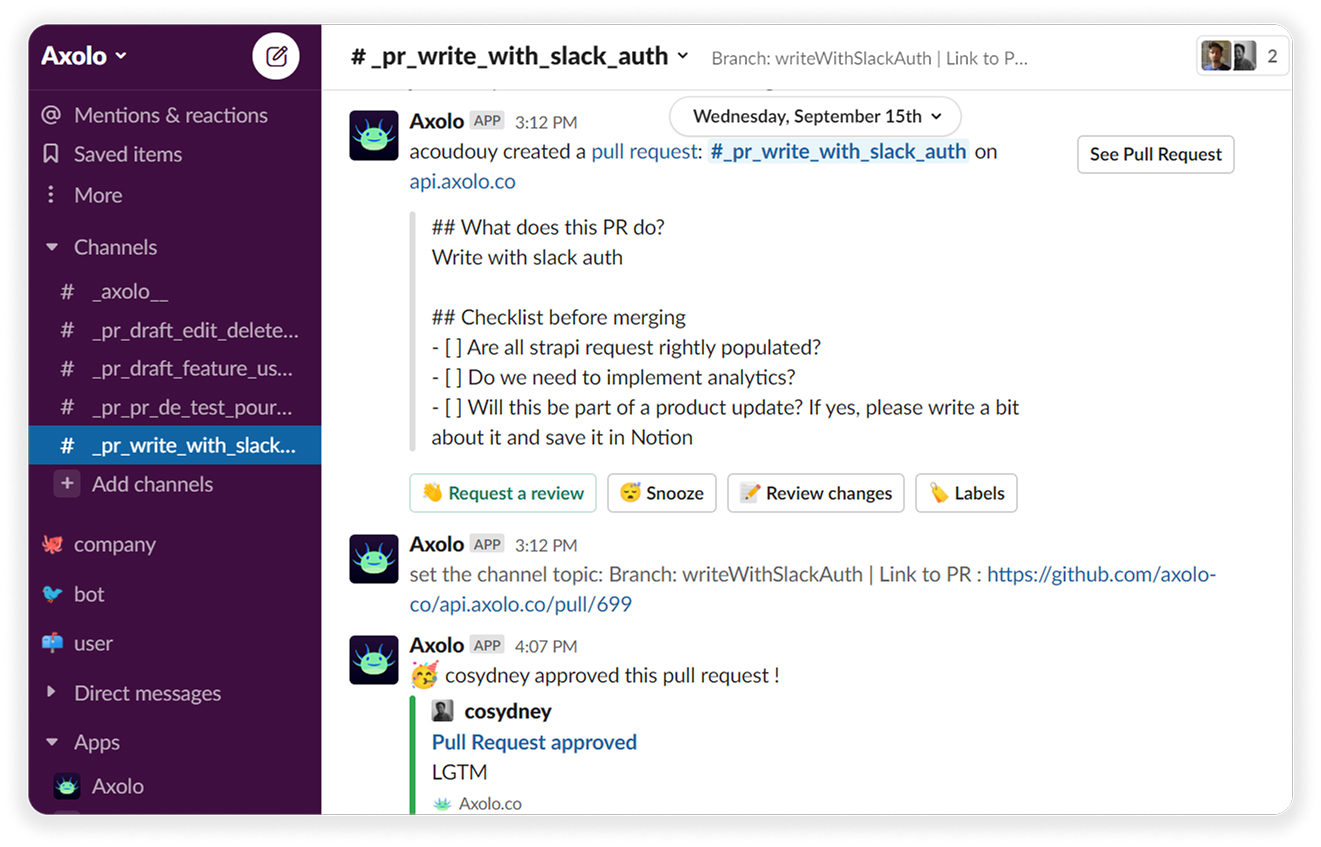

Axolo

Axolo is a code review tool integrated with Slack that links GitHub pull requests to team discussions. It directly brings PR discussions, assignments, and updates into Slack, making code collaboration faster and more efficient.

Pros:

- Provides real-time Slack alerts for pull requests and code-related discussions.

- PR reviewers are automatically assigned according to team rules.

- Promotes active involvement in code reviews, helping to minimize delays.

I added Axolo to my Slack workspace to improve visibility and accountability during our development cycle. Axolo instantly created a dedicated Slack thread, tagging relevant reviewers whenever a new pull request was opened.

For example, I pushed a new feature update at midnight during our last sprint. Within minutes, reviewers in different time zones were notified and left feedback in Slack, with no need to switch tabs or chase updates. The next day, Axolo reminded us of pending reviews, helping us stay on track without constant follow-ups.

Cons:

- It can get noisy in Slack if not properly configured.

- Pricing may not be ideal for smaller or early-stage teams.

Pricing:

The Free plan is best for small teams or individual use. The Standard plan costs $8.3 per seat per month and is aimed at growing teams that want to track progress effectively.

Crucible (Atlassian)

Crucible is designed for organizations that follow structured project management workflows, where code reviews are directly linked to Jira issues, assigned to responsible team members, and tracked as part of sprint deliverables.

Pros:

- Deep integration with Jira, Confluence, and Bitbucket.

- Threaded comments and task assignments for better reviews.

- Unresolved feedback can be synced to Jira issues.

- Helps track and triage review debt effectively.

For example, I wrote a script that pulled unresolved Crucible comments and synced them into the Jira backlog. This created clear visibility for developers and PMs, ensuring that review feedback wasn’t forgotten amid sprint priorities.

Cons:

- Performance can lag on very large repositories or high-volume projects.

- Licensing costs add up quickly as your team grows.

- Requires other Atlassian tools (Jira, Confluence) to unlock full potential.

Pricing:

Crucible is a paid tool, starting at around $10/user/month (depending on the plan), with discounts for larger teams.

Collaborator

When software quality must be audited, Collaborator is a good option. It’s built for finance, healthcare, and aerospace teams, where traceability, compliance, and structured peer reviews aren’t optional.

Pros:

- Formal workflows are ideal for regulated industries.

- Detailed reporting and compliance-ready audit trails.

- Supports not just code, but also design docs, requirements, and test plans.

To give you an idea, my team had to meet strict ISO and HIPAA compliance standards at one point. We used Collaborator to enforce multi-stage reviews, starting with peer review, then a security review, and ending with a manager or lead sign-off.

Each stage required explicit approval before moving to the next, and we linked these reviews to specific ticket IDs and requirement documents to maintain traceability. It helped us pass both internal audits and external regulatory reviews without scrambling at the last minute.
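The gating logic behind that workflow is simple enough to sketch. The code below models the three stages and their ordering as plain data. It is not Collaborator’s API or configuration format, and the `StagedReview` shape and function names are hypothetical.

```typescript
// The three stages described above, in the order they must be approved.
const STAGES = ['peer', 'security', 'lead'] as const;
type Stage = (typeof STAGES)[number];

interface StagedReview {
  ticketId: string;  // hypothetical link to the ticket the review traces back to
  approved: Stage[]; // stages approved so far, in order
}

// Returns the stage currently awaiting approval, or null when every stage is signed off.
function nextStage(review: StagedReview): Stage | null {
  return STAGES[review.approved.length] ?? null;
}

// Records an approval only if it is for the stage currently awaiting sign-off.
function approve(review: StagedReview, stage: Stage): StagedReview {
  const expected = nextStage(review);
  if (expected === null) {
    throw new Error(`${review.ticketId}: review is already complete`);
  }
  if (stage !== expected) {
    throw new Error(`${review.ticketId}: expected ${expected} approval, got ${stage}`);
  }
  return { ...review, approved: [...review.approved, stage] };
}
```

Because `approve` returns a new object instead of mutating the old one, each intermediate state can be stored as-is, which fits the audit-trail requirement.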

Cons:

- Setup and onboarding can feel heavy compared to lighter tools.

- Best suited for slower, more deliberate development cycles, not rapid prototyping environments.

Pricing:

Collaborator offers a per-user license model. It’s on the higher end compared to basic code review tools, but justified for compliance-driven teams.

GitHub PR Reviews + Codeowners

GitHub remains the default platform for most development teams, but having pull request reviews in place isn’t enough. Effective code reviews on GitHub require structure, discipline, and the right automation to truly scale quality across teams.

One important feature is the CODEOWNERS file, which assigns responsibility for reviewing changes to specific paths. It ensures that the right people are automatically pulled into discussions.

Pros:

- Native integration with the GitHub ecosystem.

- Highly customizable through GitHub Actions and bots.

- Simple to set up initially.

For example, on one project we built a custom bot that flagged PR reviews with too few words per comment. Quick approvals like “Looks good” or “LGTM” without meaningful feedback were auto-rejected, forcing deeper engagement. Over time, this drastically improved review quality without adding unnecessary friction.
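The core check in a bot like that can be very small. The sketch below is not the bot we ran. The phrase list, the five-word threshold, and the function name are assumptions, and the normalization only handles English ASCII text.

```typescript
// Phrases treated as rubber-stamp approvals; the list is an assumption.
const RUBBER_STAMPS = ['lgtm', 'looks good', 'looks good to me', 'ship it'];

// Returns true when an approval comment is too thin to count as a real review.
function isLowEffortApproval(body: string, minWords: number = 5): boolean {
  // Lowercase, strip punctuation, and trim so "LGTM!" and "lgtm" compare equal.
  const normalized = body.toLowerCase().replace(/[^a-z0-9\s]/g, '').trim();
  if (normalized === '') return true;
  if (RUBBER_STAMPS.indexOf(normalized) !== -1) return true;
  const wordCount = normalized.split(/\s+/).length;
  return wordCount < minWords;
}
```

In practice the threshold needs tuning per team, since a short comment that names a concrete bug is still more useful than a long one that says nothing.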

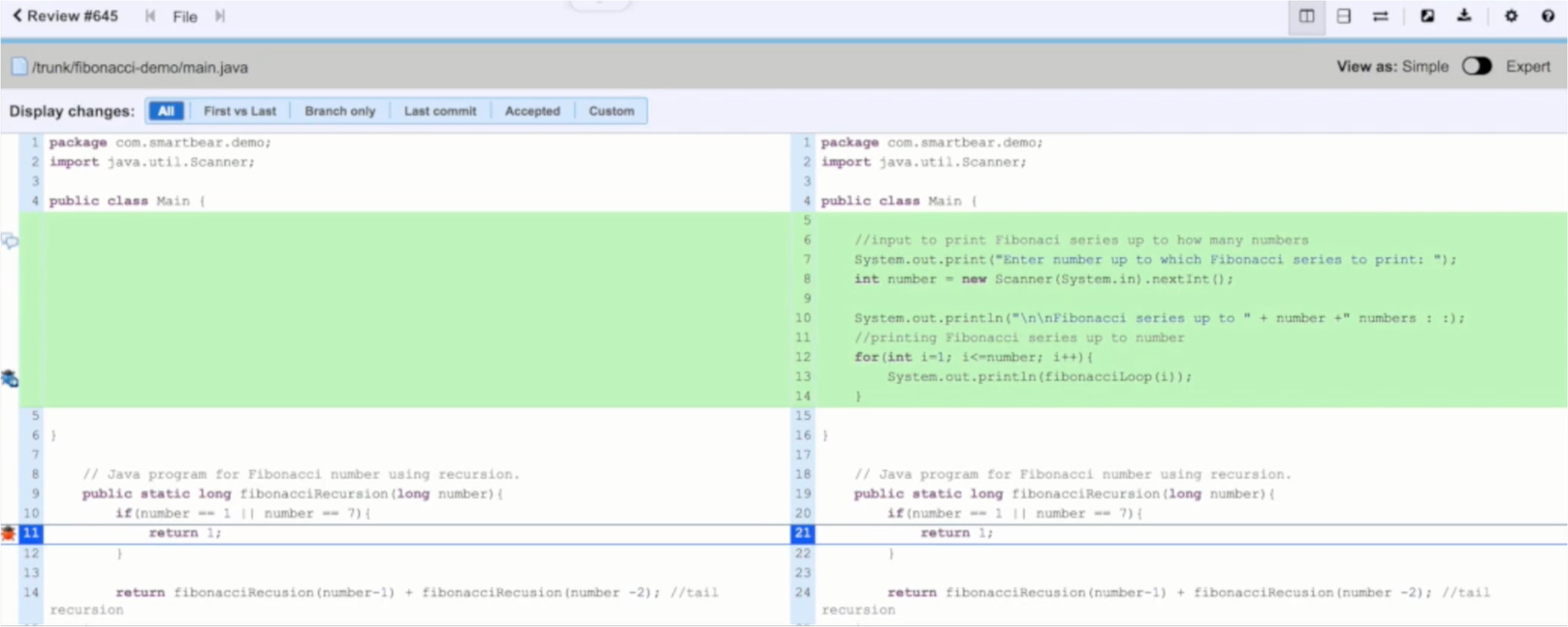

In the image, the red lines are the ones that were removed, and the green ones were added. I find GitHub PR reviews easy and simple; I’m sure everyone has used them at least once.

We also implemented review rotations using GitHub Actions combined with metadata tagging. This helped spread the review load evenly across the team, preventing any single developer from being overwhelmed and causing delays in the review process.
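The rotation itself comes down to a round-robin pick that skips the PR author. Here is a minimal sketch of that selection step, leaving out the GitHub Actions wiring and metadata tagging. The function name and the idea of persisting the last index between runs are assumptions.

```typescript
// Picks the next reviewer in a fixed rotation, skipping the PR author.
// `lastIndex` is the position of the previously assigned reviewer (-1 if none yet).
function pickReviewer(
  team: string[],
  author: string,
  lastIndex: number,
): { reviewer: string; index: number } | null {
  for (let step = 1; step <= team.length; step++) {
    const index = (lastIndex + step) % team.length;
    const candidate = team[index];
    if (candidate !== undefined && candidate !== author) {
      return { reviewer: candidate, index };
    }
  }
  // No eligible reviewer: the team is empty or the author is its only member.
  return null;
}
```

The returned `index` would be stored and passed back in as `lastIndex` on the next PR so that assignments keep cycling through the team.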

Cons:

- Without cultural discipline, Codeowners alone can become a box-checking exercise.

- Complex workflows (like monorepo setups) require third-party tools or heavy GitHub Action scripting.

Pricing:

Available with GitHub Free and Pro. Advanced automation and security features may require GitHub Enterprise plans.

Code Auditing & Security-Focused

Codelantis

Codelantis is an AI-powered code review tool that helps identify security vulnerabilities, performance issues, and code quality concerns before they reach production. It’s designed to act like a virtual code reviewer, offering real-time actionable insights.

Pros:

- Detects security vulnerabilities using AI-driven pattern recognition

- Highlights performance bottlenecks and inefficient code blocks

- Generates detailed reports on code quality, maintainability, and complexity

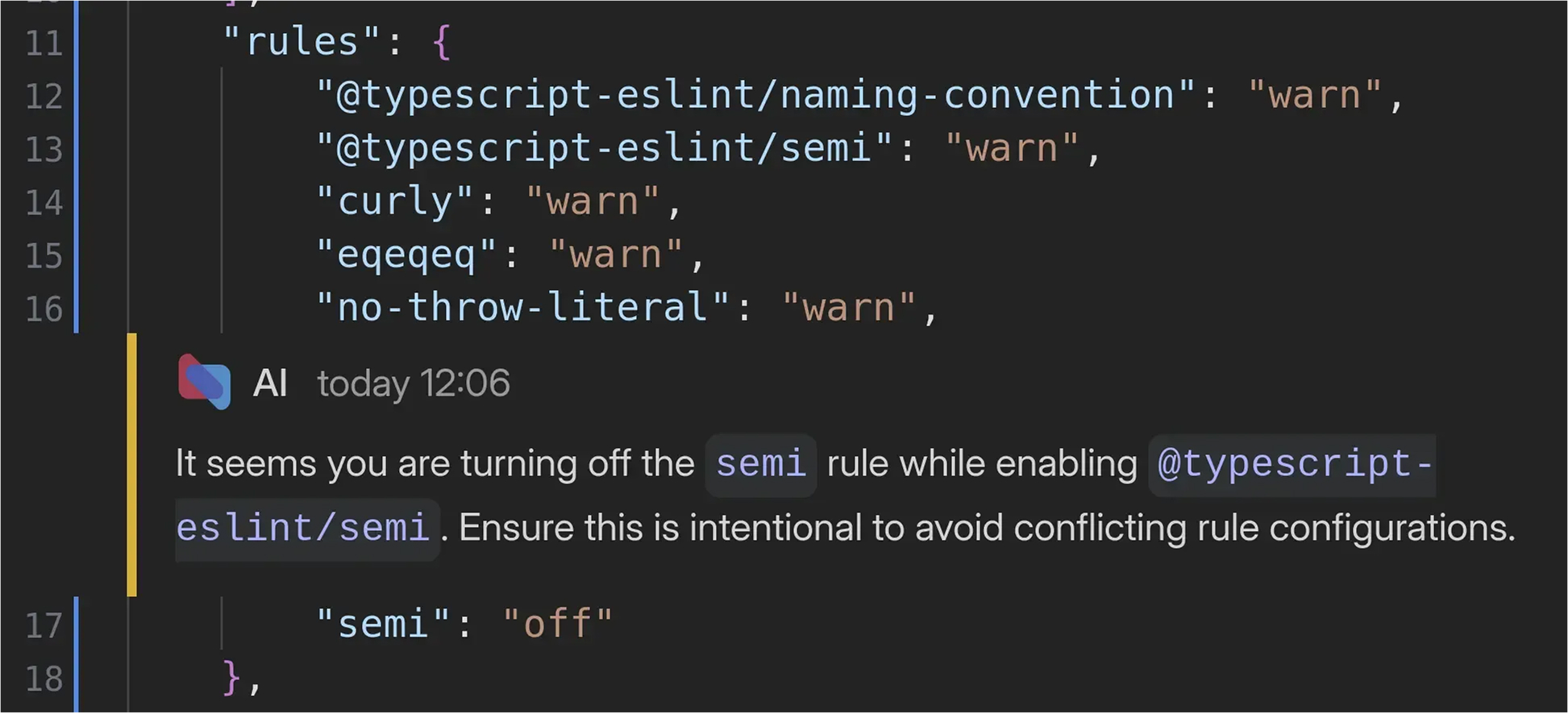

I’ll give you an example of how Codelantis helped me. As part of the final QA phase, I integrated Codelantis into a large-scale financial application. With thousands of lines of critical logic, manually reviewing everything would’ve taken days. Codelantis scanned the entire codebase and flagged a few TypeScript ESLint errors that would have been easy to overlook.

I like how it broke down each issue with context and suggested fixes, making it easier to explain to the team and implement quickly. Still, it doesn’t guarantee accurate results every time.

Cons:

- Occasional false positives that need human judgment

- Some advanced reports are locked behind higher-tier plans

Pricing:

Codelantis follows a subscription model based on project size and features. A limited free tier is available for smaller teams or individual developers.

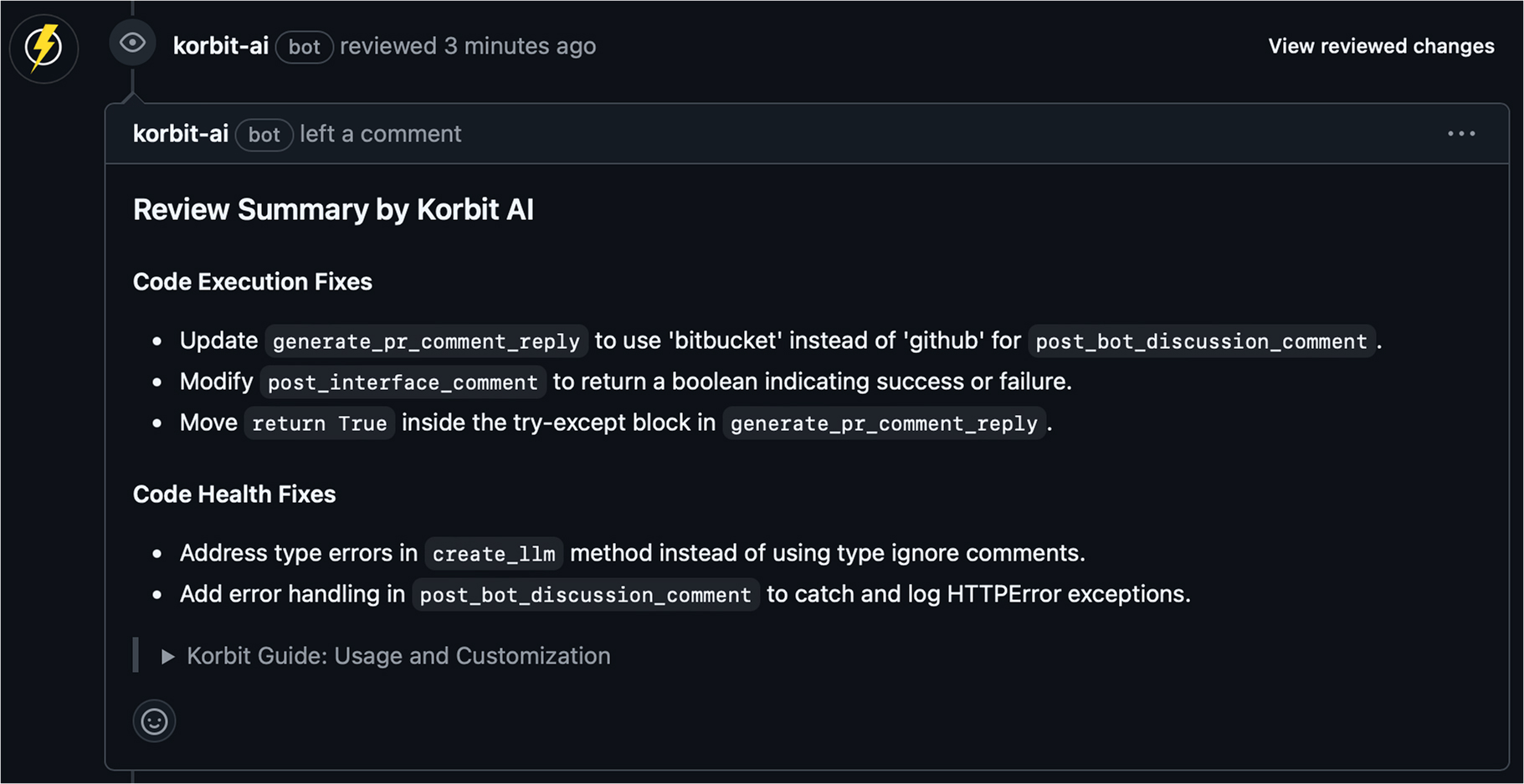

Korbit.ai

Korbit.ai is an AI-powered code review assistant that offers actionable insights into performance bottlenecks, security vulnerabilities, and code style issues. It integrates directly into your CI/CD pipeline, helping teams catch problems early and ship cleaner code faster.

Pros:

- Real-time detection of performance, security, and style issues

- Seamless integration with CI/CD workflows

- Prioritizes issues based on severity and impact

As a lead developer, I was keen to understand how Korbit works with CI processes. To find out, I used Korbit to streamline our CI process and reduce last-minute surprises before deployment. During one release cycle, Korbit flagged a risk in a recently merged module.

I appreciated how it didn’t just point out the problem; it explained the risk and suggested a fix in the pipeline. It’s useful when a team reviewer misses subtle red flags in the code. However, it does come with some concerns.

Cons:

- Might generate too many alerts initially until properly configured

- Customization options can feel limited for edge-case workflows

Pricing:

Korbit.ai provides a free trial to explore its capabilities, but full access, including the Mentor dashboard and advanced analytics, comes with the Pro plan at $9 per user.

Gerrit

Gerrit is built for teams managing massive, fast-moving codebases like Android, Chromium, or large-scale enterprise systems. It’s designed to control change without slowing velocity, a tough balance in environments where hundreds of contributors push code daily.

Pros:

- Immutable patch sets for a tamper-proof history

- Fine-grained access control for approvals and merges

- Supports strict governance and audit needs

Let’s take an example to understand better. In a fintech project, I designed a workflow where +2 approvals were reserved only for software architects, while tech leads gave +1 approvals. We enforced this rule using REST API hooks, ensuring process discipline even under high deployment pressure. This approach scaled surprisingly well across distributed teams.
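The rule described above can be expressed as a small predicate. This sketch captures only the decision logic, assuming Gerrit’s default Code-Review behavior, where a change needs at least one +2 and no -2. It is not Gerrit’s REST API or its access configuration, and the `Role` names and the limit for regular developers are assumptions.

```typescript
type Role = 'architect' | 'techLead' | 'developer';

interface Vote {
  reviewer: string;
  role: Role;
  value: -2 | -1 | 0 | 1 | 2; // Code-Review label values
}

// Highest positive score each role may give under the workflow described above.
// The 'developer' entry is an assumption; the text only covers architects and tech leads.
const MAX_SCORE: Record<Role, number> = { architect: 2, techLead: 1, developer: 1 };

// A vote is allowed when it does not exceed the reviewer's role limit.
function isVoteAllowed(vote: Vote): boolean {
  return vote.value <= MAX_SCORE[vote.role];
}

// A change is submittable when every vote respects its role limit,
// at least one +2 exists, and nobody has voted -2.
function canSubmit(votes: Vote[]): boolean {
  return (
    votes.every(isVoteAllowed) &&
    votes.some((v) => v.value === 2) &&
    !votes.some((v) => v.value === -2)
  );
}
```

Treating an out-of-limit vote as blocking, not silently ignoring it, is a design choice made here for clarity; it makes a misconfigured permission visible immediately.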

Cons:

- Steeper learning curve for developers unfamiliar with Gerrit workflows.

- UI feels outdated compared to newer review tools.

- Heavy initial setup and maintenance effort, especially for custom integrations.

Pricing:

Gerrit itself is open-source and free. However, hosting, setting up, and maintaining custom integrations can add hidden costs, especially for enterprises.

Context-Aware & In-IDE Code Review

CodePeer/GitContext

GitContext, now rebranded as CodePeer, is a developer tool that adds historical and contextual insights to every code change. It helps reviewers understand what changed and why it changed by surfacing commit history, discussions, and related pull requests.

Pros:

- Displays historical context and rationale behind code changes.

- Links related commits, PRs, and discussions for deeper understanding.

- Speeds up code review by reducing back-and-forth questions.

For example, I used CodePeer while working on a microservices-based distributed system where multiple teams pushed changes to shared modules. During one review, I noticed a function refactor that seemed unnecessary until CodePeer pulled up the original reasoning behind the change from a six-month-old PR.

It included comments from the lead architect explaining the performance trade-offs. That context not only helped me approve the PR with confidence, but also saved us from repeating past mistakes.

Cons:

- Can feel overwhelming for simple or one-off projects.

- Some context links can be noisy if not well-documented.

Pricing:

CodePeer is completely free for individual developers. The Team plan is priced at $9 per user/month and offers advanced code review features suitable for growing teams, including unlimited public and private repositories.

Traycer AI

Traycer AI is an AI-powered assistant built into your IDE that offers real-time suggestions, code corrections, and bug detection as you write. It’s designed to help developers improve code quality without interrupting their workflow.

Pros:

- Instantly identifies bugs and code style issues

- Suggests optimizations in real-time

- Integrates smoothly with IDEs like VS Code and IntelliJ

After learning about it, I tested the tool inside VS Code during a fast-paced project with tight deadlines. It provided real-time suggestions as I typed, highlighting redundant lines, pointing out style inconsistencies, and flagging potential logic issues before they could turn into bugs.

One specific moment that stood out was when I was updating a payment handler. Traycer flagged a silent failure in the try-catch block that would’ve gone unnoticed until production. Fixing it early saved a lot of future debugging.
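For readers who haven’t run into this pattern, here is an illustration of what a silent failure in a try-catch block can look like and one way to fix it. This is not the actual payment handler. `chargeCard`, `ChargeResult`, and both handler functions are hypothetical stand-ins.

```typescript
interface ChargeResult {
  ok: boolean;
  error?: string;
}

// Hypothetical gateway call that rejects non-positive amounts.
function chargeCard(amountCents: number): void {
  if (amountCents <= 0) throw new Error('invalid amount');
}

// Anti-pattern: the catch block swallows the error, so callers always see success.
function handlePaymentSilently(amountCents: number): ChargeResult {
  try {
    chargeCard(amountCents);
  } catch {
    // the error is dropped here, which is the silent failure
  }
  return { ok: true };
}

// Fix: report the failure to the caller instead of hiding it.
function handlePayment(amountCents: number): ChargeResult {
  try {
    chargeCard(amountCents);
    return { ok: true };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { ok: false, error: message };
  }
}
```

With the first version, a failed charge is indistinguishable from a successful one, which is why this kind of bug tends to surface only in production.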

Cons:

- Sometimes too aggressive with suggestions, especially in early drafts.

- Can slightly slow down performance on large projects.

Pricing:

Traycer AI offers a free basic version. The Lite plan is priced at $8/month, and advanced features and team collaboration tools are available on the paid plans.

Code Quality & Performance Visualization

CloudaEye

CloudaEye is a visual analytics tool that helps developers and architects monitor code quality, performance metrics, and overall system architecture. It suits teams that need visibility into code health as their product and codebase scale.

Pros:

- Offers intuitive dashboards for architecture, performance, and code health

- Helps detect technical debt and performance issues early

- Scales well for large, enterprise-grade codebases.

To check CloudaEye’s performance visualization, I integrated it into one of our growing SaaS products with a complex microservices architecture. The visual dashboards gave me a quick overview of how the various modules were performing.

One major win was spotting a recurring performance lag in one of the services. The metrics highlighted that the issue stemmed from a bloated data pipeline. This visibility helped us realign our development priorities and reduce technical debt systematically.

So, CloudaEye made it easier to explain architectural concerns to stakeholders without diving deep into code; everything was right in the visuals.

Cons:

- Can be overwhelming when the data volume is large.

- Requires time to set up properly for complex environments.

Pricing:

CloudaEye offers a free plan that includes 5 code reviews and 8 test RCA reports. The Team plan is priced at $19.99 per developer per month and provides unlimited code reviews, test RCA reports, and enhanced collaboration features.

Conclusion

We explored the critical role of code reviews in maintaining high-quality code for senior development teams. We covered the importance of tools that scale with complexity, integrate with CI/CD pipelines, and provide context-rich feedback to help developers grow.

We highlighted Qodo Merge as a powerful tool that offers advanced features like customizable rules and contextual feedback, which are essential for maintaining code integrity and boosting team productivity. We also discussed other code review tools for different use cases, such as Codelantis, CodePeer, CloudaEye, and Review Board.

Ultimately, code reviews are more than a task; they are a cornerstone of a strong development culture, ensuring long-term success and continuous improvement.

FAQs

What are code review tools?

Code review tools help automate and streamline the process of reviewing code, ensuring consistency, quality, and collaboration among developers.

Is code review part of DevOps?

Yes, code review is essential to the DevOps process, ensuring quality code is integrated into continuous delivery pipelines.

Is code review the same as QA?

No, code review is not the same as QA. It focuses on reviewing the code for errors and improvements, while QA tests the functionality.

How to do a code review?

To do a code review, thoroughly check the code for bugs, logic flaws, adherence to best practices, and ensure it meets the project’s coding standards.

How to maintain code quality while vibe coding?

Maintain code quality in vibe coding by setting clear standards, using code review tools, and promoting collaboration and continuous learning within the team.

What is important for enterprise code review?

For enterprise code review, scalability, automation, adherence to coding standards, security checks, and detailed feedback are key to maintaining high-quality code.