Top 7 Python Code Review Tools For Developers

TL;DR

- Code reviews can slow down as team size grows, especially when edge cases, test coverage, and security get overlooked.

- Python teams need tools that go beyond formatting and offer context-aware insight into code quality, architecture, logic, and hidden edge cases that could cause problems later.

- AI-powered code review tools like Qodo Merge help dev teams with PR summarization, test suggestions, and deep code understanding.

- Tools that integrate smoothly with GitHub/GitLab and CI/CD pipelines reduce review delays and keep your existing workflows intact.

- The top Python code review tools covered in this guide include Qodo Merge, DeepSource, Codacy, Codeac, SonarQube, CodeScene, and Review Board.

As a senior DevOps engineer, I’ve reviewed thousands of lines of Python code across automation scripts, deployment pipelines, and backend services. I’ve tested dozens of Python code review tools that claim to improve code quality, but only a few actually stood out. If you’re just starting out or want to brush up on fundamentals, this code review guide covers the basics clearly.

Python is beginner-friendly, with clean and readable syntax, which is one reason it is so widely adopted. In a recent Reddit thread, developers argued that Python isn’t necessarily simple, but it is expressive, which is why it feels easy to use. Its readable format is one of its main advantages, backed by a flexible set of rules, as detailed in Python’s official grammar.

This makes Python a good fit for everything from quick automation scripts, such as scraping websites or processing CSVs, to complex systems such as Django web apps or TensorFlow machine learning models. But that flexibility also makes it harder to keep code clean and maintainable across teams. That’s where code reviews and the right Python code quality tools come in.

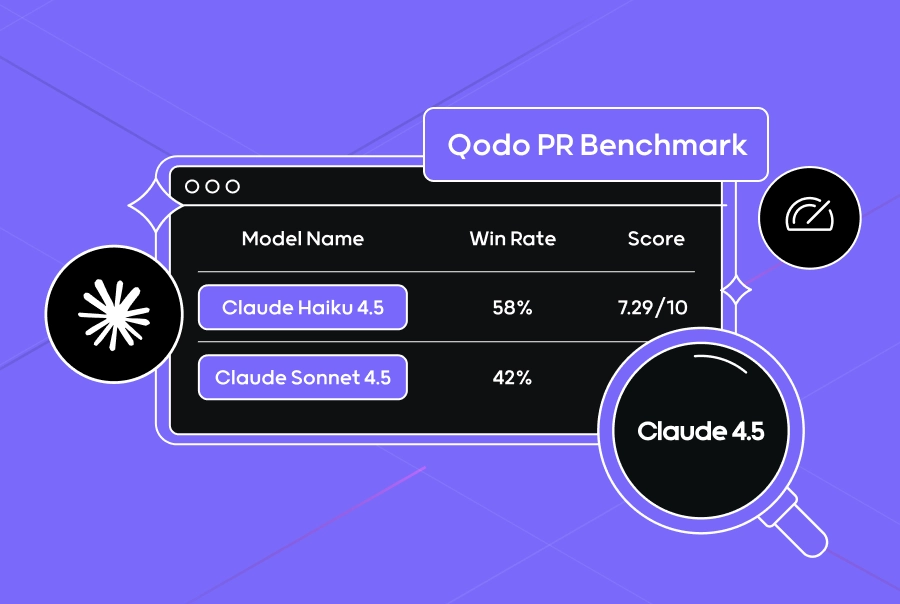

For me, the most important aspect is how thoroughly the code is tested, especially with specific edge cases for its functions. Qodo Merge has been particularly helpful in this regard; it not only performs basic checks but also suggests potential test cases that we might overlook. I have compiled a list of the best Python code review tools for maintaining Python code quality and categorized them based on their usage.

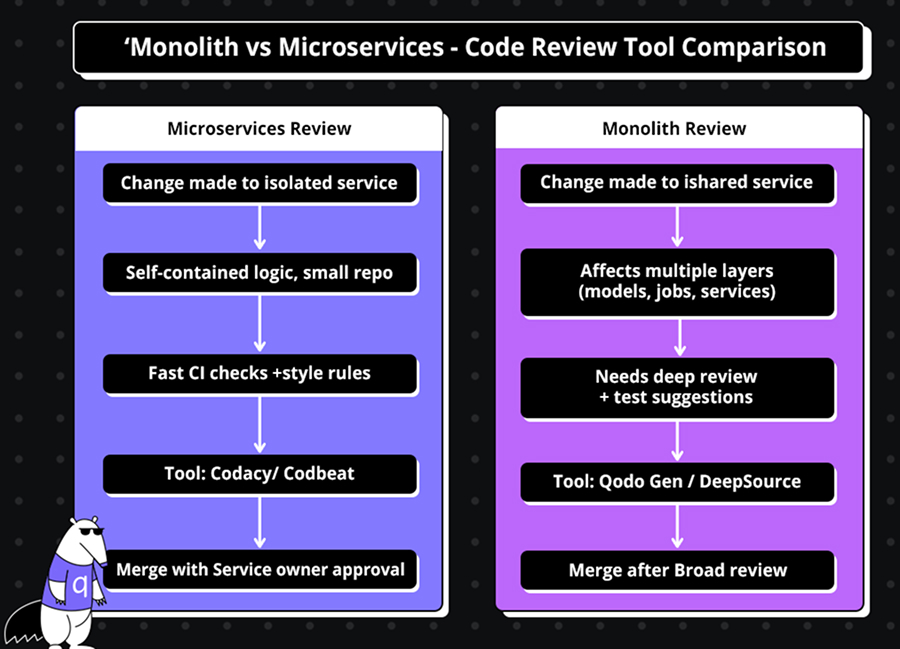

In Python, we build everything from large monoliths, such as Django-based web platforms or all-in-one ERP systems, to lightweight microservices, like a Flask API that handles payments or a FastAPI service for image processing. Before diving into the tools, let’s look at what kind of review support fits each type.

Best-Fit Review Tools for Monoliths vs. Microservices

Not all Python codebases need the same kind of code quality tool. I’ve worked on both large monoliths and split microservices, and which tools help most depends on the setup. In a large monolithic application, such as a Django-based platform, modules are interconnected, so a change in one file can affect other modules. Microservices, such as separate Flask APIs, mean managing many small projects, which makes it easy to lose track of changes or drift into inconsistent coding styles.

Monoliths Need Deep Context and Smarter Test Suggestions

For monolithic applications, I look for Python code quality tools that can track context across a large codebase and help make sense of deeper logic. These kinds of systems usually have shared models, large utility modules, and multiple layers where one change can impact another. In these cases, I’ve found tools like Qodo Merge and DeepSource especially useful, mainly because they don’t just perform basic linting. They explain what the code is doing, suggest tests, and give you a solid high-level view of how things fit together.

For example, in a Django-based monolith, Qodo Gen suggested writing a unit test for a model method that was indirectly used by several view functions, something a traditional linter would never catch. That kind of insight is hard to get in a big monolith unless your tool understands the full picture.

Microservices Need Fast, Isolated, Per-Service Config

On the flip side, microservice architectures benefit from code quality tools that are easier to configure per service and don’t slow down the pipeline. In one project, we had over a dozen Python services handling different events in a Kubernetes setup. Each had its own repo and CI pipeline, so we couldn’t afford a bloated review process. Tools like Codacy and Codeac worked well here as Python code quality tools because they were fast, lightweight, and allowed per-service rule tuning. Slack integration also helped keep the team in the loop without adding more manual checks.

To better understand how review needs differ between microservices and monoliths, here’s a quick visual comparison of how each architecture impacts the code review process.

Using Qodo Gen Across 12+ Python Microservices

In one enterprise project, we used Qodo Gen, one of the most advanced Python code quality tools, to manage reviews across a Kubernetes-based, event-driven system. It had 12+ Python microservices, each handling its own set of events, such as user signups or payment status updates. The services communicated over Kafka and ran on Kubernetes.

Qodo helped us generate quick summaries of what changed in each PR, flagged logic shifts early, and even suggested missing test cases in some of the more complex services. That alone saved hours of review time, especially when changes spanned multiple services.

What Makes a Code Review Tool Great (For Senior Teams)

After working on large Python projects, from tightly coupled monoliths to distributed microservices, I’ve learned that a good Python code review tool needs to do more than catch formatting issues. It needs to work across different repo setups, support how your team writes code, and fit into your CI/CD pipeline without major changes.

It Has to Work Across Monorepos and Polyrepos

The tool should work well whether your code lives in one big repo (monorepo) or is split across many small ones (polyrepo). In a monorepo, it also needs to understand how one change can affect several parts of the system, especially when shared modules are involved.

For example, in a monorepo, if I change a shared function, I want to know whether it breaks something in another part of the code. That’s exactly what a good Python code quality tool should do: catch those hidden impacts before they become real problems.
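To make the idea concrete, here is a minimal, purely syntactic sketch of that kind of impact check: it scans module sources with Python’s built-in ast module and lists every call site of a given function name. The module paths and the normalize_amount function are hypothetical, and real review tools go much further (resolving imports, types, and indirect calls); this only illustrates the basic idea.

```python
import ast

def find_callers(sources: dict[str, str], func_name: str) -> dict[str, list[int]]:
    """Map each module name to the line numbers where `func_name` is called."""
    hits: dict[str, list[int]] = {}
    for module, code in sources.items():
        for node in ast.walk(ast.parse(code)):
            if not isinstance(node, ast.Call):
                continue
            target = node.func
            # Match bare calls (normalize_amount(x)) and attribute calls (utils.normalize_amount(x)).
            if isinstance(target, ast.Name):
                name = target.id
            elif isinstance(target, ast.Attribute):
                name = target.attr
            else:
                name = None
            if name == func_name:
                hits.setdefault(module, []).append(node.lineno)
    return {module: sorted(lines) for module, lines in hits.items()}

# Hypothetical monorepo modules, inlined as strings for the example (they are parsed, not run).
sources = {
    "billing/invoice.py": "from shared.utils import normalize_amount\n\ntotal = normalize_amount(raw)\n",
    "payments/api.py": "import shared.utils as utils\n\ndef charge(x):\n    return utils.normalize_amount(x)\n",
    "reports/summary.py": "def build():\n    return 42\n",
}
print(find_callers(sources, "normalize_amount"))
```

On the sample, the result points at line 3 of billing/invoice.py and line 4 of payments/api.py, which is the list a reviewer would want to check before changing that shared function.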

It Should Be Language-Specific, But Context-Aware

I don’t care if the tool supports 50 languages; I care whether it understands the one I’m using. For Python, it should follow the actual control flow, catch problems like unhandled exceptions or mutable default arguments, and support deeper Python code quality checks instead of just complaining about unused imports or uncommented lines.
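Mutable default arguments are a good example of a Python-specific bug that a purely cosmetic check can miss, although some linters (such as pylint’s dangerous-default-value check) do flag it. A minimal illustration, with hypothetical function names:

```python
def add_tag_buggy(tag, tags=[]):
    # The default list is created once, when the function is defined,
    # so every call that omits `tags` appends to the same shared list.
    tags.append(tag)
    return tags

def add_tag(tag, tags=None):
    # Use None as a sentinel and create a fresh list on each call.
    if tags is None:
        tags = []
    tags.append(tag)
    return tags

first = add_tag_buggy("urgent")
second = add_tag_buggy("backend")
print(first is second, second)  # True ['urgent', 'backend']

print(add_tag("urgent"), add_tag("backend"))  # ['urgent'] ['backend']
```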

Some tools claim to be language-specific but fall short when it comes to context. The good ones give feedback based on how the code behaves, not just how it looks.

Enforce Conventions Without Getting in the Way

Senior teams usually have their own coding patterns. I’ve worked on projects where snake_case was used in some services and camelCase in others, and that was intentional. The review tool shouldn’t fail a review just because the code doesn’t follow a textbook style guide or a predefined pattern. I want the tool to enforce the team’s standards, not just its presets.

The same goes for suggestions. Tools should highlight what matters, such as inconsistent exception handling, and keep formatting nitpicks out of the way unless they’re critical.

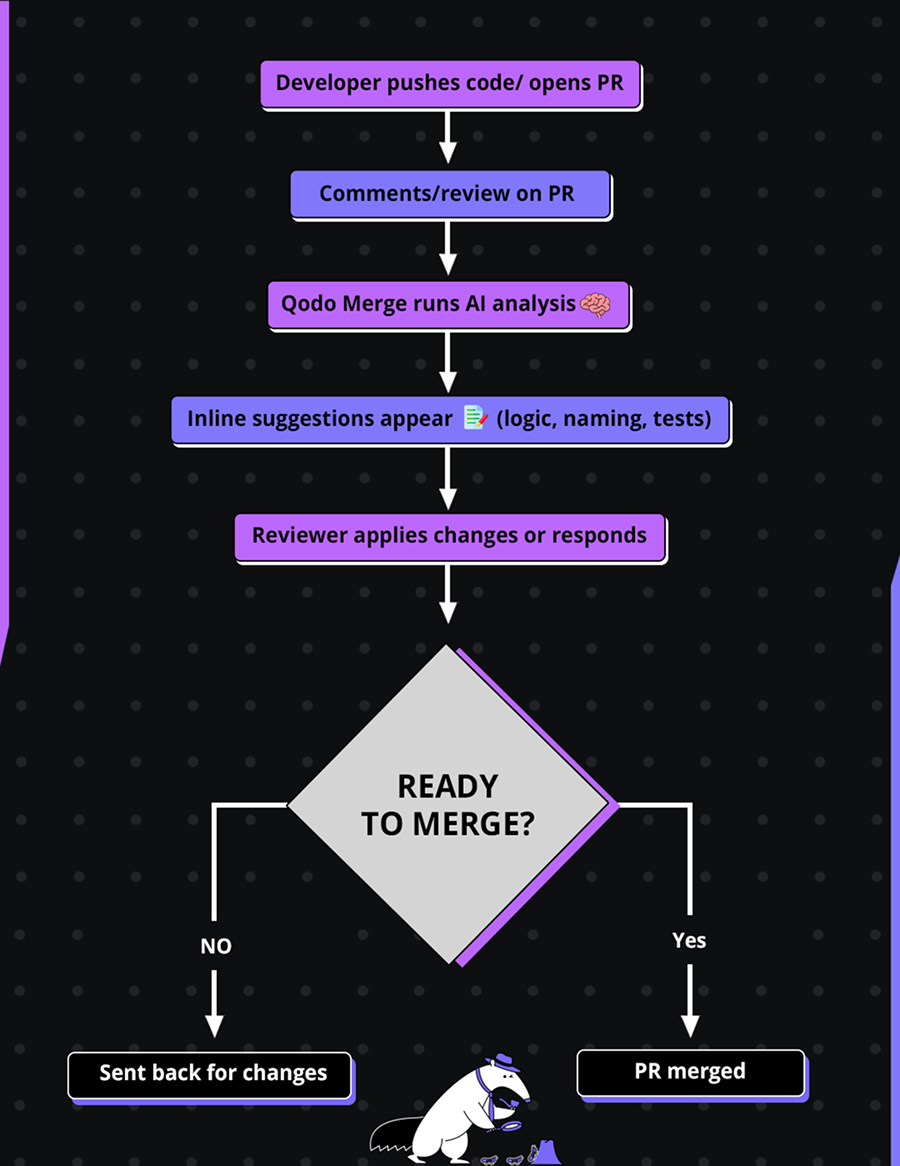

It Needs to Fit the Workflow, Not Fight It

A lot of tools come with strong opinions, especially the ones that try to enforce their own process. But in real teams, workflows vary. Some teams squash commits, others don’t. Some rely on GitHub Actions, some on Jenkins or GitLab CI. The code quality tools Python teams use should plug into whatever’s already working, not ask you to redesign your pipeline around it.

Here’s a quick visual of how a code review tool should fit into a standard Python CI/CD pipeline:

Metrics To Measure Code Review Efficiency

Code reviews aren’t just about catching bugs; they’re part of how a team works and a key factor in keeping Python code quality consistent across the codebase. I’ve seen teams with a clean process on paper that still struggle with slow merges, unclear feedback from reviewers, and repeated bugs. Usually that’s because they aren’t tracking the right metrics.

Here are the ones that have actually helped me measure how well the review process is working:

Review Turnaround Time (PR Open to Merge)

How long does it take for a pull request to go from “opened” to “merged”? If PRs sit for days waiting for review, something is wrong. I track this to spot delays and see where things get stuck. Sometimes the reviewer is juggling too many pending reviews; sometimes the code itself is unclear and needs rework.
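If your Git host’s API or an export gives you opened and merged timestamps for each PR, turnaround time is simple to compute. The sketch below assumes a hypothetical list of dictionaries with opened and merged fields; the sample values are made up for illustration. It uses the median rather than the mean so that one PR stuck for a week doesn’t dominate the number.

```python
from datetime import datetime
from statistics import median

def turnaround_hours(prs):
    """Hours from 'opened' to 'merged' for each merged PR; unmerged PRs are skipped."""
    return [
        (pr["merged"] - pr["opened"]).total_seconds() / 3600
        for pr in prs
        if pr.get("merged") is not None
    ]

# Made-up sample data for illustration.
prs = [
    {"id": 101, "opened": datetime(2024, 5, 1, 9), "merged": datetime(2024, 5, 1, 15)},
    {"id": 102, "opened": datetime(2024, 5, 1, 10), "merged": datetime(2024, 5, 3, 10)},
    {"id": 103, "opened": datetime(2024, 5, 2, 11), "merged": None},  # still open
]
hours = turnaround_hours(prs)
print(f"median turnaround: {median(hours):.1f} h")  # median turnaround: 27.0 h
```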

Comments Per Review

If a review has barely any comments, something may be off: either the code is perfect, which is rare, or the reviewer isn’t reviewing carefully. If there are too many comments, the code usually wasn’t ready for review. I track this to improve both code quality and review habits.
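A simple way to act on this metric is to flag reviews at either extreme. In this sketch the comment counts are hypothetical inputs (for example, pulled from your Git host’s API), and the low and high thresholds are arbitrary placeholders you would tune against your team’s history.

```python
def flag_reviews(comment_counts, low=1, high=15):
    """Label each PR as 'too quiet', 'too noisy', or 'ok' by its review-comment count.

    The default thresholds are placeholders, not a standard.
    """
    labels = {}
    for pr_id, count in comment_counts.items():
        if count < low:
            labels[pr_id] = "too quiet"   # possibly a rubber-stamp review
        elif count > high:
            labels[pr_id] = "too noisy"   # possibly not ready for review
        else:
            labels[pr_id] = "ok"
    return labels

print(flag_reviews({201: 0, 202: 6, 203: 27}))
# {201: 'too quiet', 202: 'ok', 203: 'too noisy'}
```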

Bug Count Post-Merge

The real test of a review process comes when the code goes live. If bugs keep showing up after changes are merged, the review process is missing something important. Tracking these bugs helps us refine what we check during reviews, especially logic, edge cases, and test coverage.

Lines Reviewed per Reviewer per Sprint

This helps me see if the team is overloaded. If one reviewer is stuck reviewing 5x more code than everyone else, quality drops fast. Tracking review load helps me distribute work better and avoid overloading a reviewer.
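Review load can be checked the same way. This sketch sums the lines each reviewer handled in a sprint and flags anyone above a multiple of the team median. The reviewer names and line counts are made-up sample data, and the 3x factor is an arbitrary choice, not a standard.

```python
from collections import Counter
from statistics import median

def overloaded_reviewers(reviews, factor=3.0):
    """Return reviewers whose reviewed-line total exceeds `factor` x the team median.

    `reviews` is an iterable of (reviewer, lines_reviewed) pairs for one sprint.
    """
    totals = Counter()
    for reviewer, lines in reviews:
        totals[reviewer] += lines
    typical = median(totals.values())
    return sorted(r for r, n in totals.items() if n > factor * typical)

# Made-up sprint data: ana 700, ben 350, chen 2900, dev 500 lines in total.
sprint = [("ana", 400), ("ben", 350), ("ana", 300), ("chen", 2900), ("dev", 500)]
print(overloaded_reviewers(sprint))  # ['chen']
```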

How Qodo Tools Help Track These Metrics

Qodo Gen and Qodo Merge are Python code quality tools that make code reviews easier and more consistent, especially in large codebases with many contributors. I’ve used Qodo Gen to quickly understand large Python pull requests, spot hidden logic issues, and find places where more tests are needed. It also explained the role of specific functions, which helped me get a better understanding of the codebase. It saves time by giving a clear overview, so reviewers don’t have to read every line or guess what the code is doing.

Qodo Merge is great for more organized and systematic reviews. It gives smart, context-aware suggestions and even checks for security issues, all built into the review flow. Both tools help reviewers focus on what actually matters during code reviews. Now let’s go through the code review checklist for Python.

Python Code Review Checklist

Here’s a simple checklist for maintaining Python code quality during reviews, whether you check each item by hand or with a code review tool:

1. Code Style and Formatting

Start by checking that the code uses consistent naming, such as snake_case for variables and functions as recommended by PEP 8. Make sure indentation is clean, spacing is consistent, and everything is easy to read. Also verify that the code follows your team’s style guide, whether that’s PEP 8 or a custom set of rules.
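Linters such as pylint (its invalid-name check) or the pep8-naming plugin for flake8 already enforce this, but the core of a naming check is small. This sketch uses the ast module to list function and method names that aren’t snake_case; the regular expression is a simplified assumption that allows up to two leading underscores, which also covers dunder methods like __init__.

```python
import ast
import re

# Simplified snake_case rule: optional leading underscores, then lowercase letters, digits, underscores.
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")

def non_snake_case_functions(source: str) -> list[str]:
    """Return names of functions and methods in `source` that are not snake_case."""
    tree = ast.parse(source)
    return [
        node.name
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
        and not SNAKE_CASE.match(node.name)
    ]

code = """
def getLastElement(items):
    return items[-1]

def calc_average(values):
    return sum(values) / len(values)
"""
print(non_snake_case_functions(code))  # ['getLastElement']
```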

2. Function and Class Design

When reviewing functions and classes, check that each function focuses on a single task instead of doing too much. Class methods should follow clear, logical steps that are easy to understand. If you see repeated logic, check whether it should be moved into a reusable function or class.

3. Error Handling and Edge Cases

Look at how the code handles errors. Are exceptions being caught in the right places, and is the response to those errors clear and helpful? Also, check if the code accounts for edge cases, like empty lists, missing values, or incorrect input types.
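Here is a small sketch of what reviewers can look for: a hypothetical parse_port helper that handles missing values, wrong types, and out-of-range input explicitly, and re-raises parsing failures with a clear message while keeping the original exception chained.

```python
def parse_port(raw):
    """Convert a raw config value (str or int) to a TCP port, rejecting bad input early."""
    # Missing values: None or a blank string.
    if raw is None or (isinstance(raw, str) and not raw.strip()):
        raise ValueError("port is missing")
    # Wrong types: bool is a subclass of int, so exclude it explicitly.
    if isinstance(raw, bool) or not isinstance(raw, (str, int)):
        raise TypeError(f"port must be str or int, got {type(raw).__name__}")
    try:
        port = int(raw)
    except ValueError as exc:
        # Clear message for the caller, original error kept as __cause__ for debugging.
        raise ValueError(f"port must be numeric, got {raw!r}") from exc
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port

print(parse_port("8080"))  # 8080
```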

4. Security and Secrets

Check for hardcoded sensitive information such as API keys or passwords; these should be loaded from environment variables or a secrets manager (vault) instead. Make sure all input values are validated to prevent injection and similar security issues. Also check that the code uses safe libraries and practices, such as the secrets module for generating tokens, and that stored passwords are hashed with a slow, salted algorithm rather than kept in plain text or merely encrypted.
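A minimal sketch of those practices using only the standard library: secrets for tokens, hashlib.pbkdf2_hmac with a random salt for password hashing, and hmac.compare_digest for constant-time comparison. The 600,000-iteration default follows OWASP’s Password Storage Cheat Sheet for PBKDF2-HMAC-SHA256 as I understand it, so treat it as an assumption to re-check; dedicated libraries such as argon2-cffi or bcrypt are common alternatives. The DB_PASSWORD environment variable name is hypothetical.

```python
import hashlib
import hmac
import os
import secrets

def new_api_token() -> str:
    # secrets uses the OS's cryptographically secure RNG; the random module is not suitable here.
    return secrets.token_urlsafe(32)

def hash_password(password: str, iterations: int = 600_000) -> tuple[bytes, bytes]:
    """Return (salt, digest) for storage; the salt is random per password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest

def verify_password(password: str, salt: bytes, digest: bytes, iterations: int = 600_000) -> bool:
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, digest)

# Load credentials from the environment (or a vault client) instead of hardcoding them.
db_password = os.environ.get("DB_PASSWORD")  # hypothetical variable name; None if unset
```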

5. Testing and Coverage

Check if the code includes enough tests to make sure it works as expected. There should be unit tests that test each function or method separately, and integration tests that check how different parts of the system work together. For example, how a form submission flows through validation, database saving, and response generation.

Make sure the tests cover both typical cases (what the code is normally expected to do) and edge cases, like empty inputs, invalid values, or unexpected user behavior. Also, confirm that test coverage is being measured using tools like coverage.py or other Python code quality tools integrated into your CI pipeline. This helps ensure that all key parts of the code are tested and no important logic is missed.
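As an illustration, here is a minimal unittest suite for a hypothetical chunk helper, with one test for the typical case and three for edge cases: empty input, a size larger than the input, and an invalid size.

```python
import unittest

def chunk(items, size):
    """Split `items` into consecutive lists of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]

class ChunkTests(unittest.TestCase):
    def test_typical_case(self):
        self.assertEqual(chunk([1, 2, 3, 4, 5], 2), [[1, 2], [3, 4], [5]])

    def test_empty_input(self):
        self.assertEqual(chunk([], 3), [])

    def test_size_larger_than_input(self):
        self.assertEqual(chunk([1, 2], 10), [[1, 2]])

    def test_invalid_size(self):
        with self.assertRaises(ValueError):
            chunk([1, 2, 3], 0)
```

Run it with python -m unittest (pytest also collects unittest.TestCase classes). To measure coverage, coverage.py can wrap the same command: coverage run -m unittest, then coverage report.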

This code review checklist from Qodo also covers these essentials in a handy format.

6. Documentation and Comments

When reviewing documentation and comments, check that each function and class has a clear docstring explaining what it does, what inputs it takes, and what it returns. There should also be comments wherever the logic is not obvious, so others can follow the reasoning behind it.

7. Dependency Management

For dependency management, check that all external libraries and packages used in the code are listed in requirements.txt or pyproject.toml, so others can install the correct dependencies without errors. Look for unused libraries; if something is imported but never used, remove it to keep the code clean and lightweight. Also confirm that the code is compatible with the intended Python version, especially if it uses features or syntax that older versions don’t support.
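In practice, flake8 (through pyflakes’ F401 check) or pylint will report unused imports for you, and the requires-python field in pyproject.toml documents the supported Python versions. To show roughly what the unused-import check does, here is a simplified ast-based sketch; it ignores __all__, star imports, and names used only inside string annotations, so treat it as illustrative rather than a replacement for a real linter.

```python
import ast

def unused_imports(source: str) -> list[str]:
    """Return imported names in `source` that are never referenced as a bare name."""
    tree = ast.parse(source)
    imported: set[str] = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # "import os.path" binds the top-level name "os".
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                if alias.name != "*":  # star imports can't be checked this way
                    imported.add(alias.asname or alias.name)
    # Attribute chains such as os.getcwd() still start with an ast.Name ("os").
    used = {node.id for node in ast.walk(tree) if isinstance(node, ast.Name)}
    return sorted(imported - used)

sample = "import json, os\nimport collections as col\nfrom typing import List\n\nprint(os.getcwd())\n"
print(unused_imports(sample))  # ['List', 'col', 'json']
```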

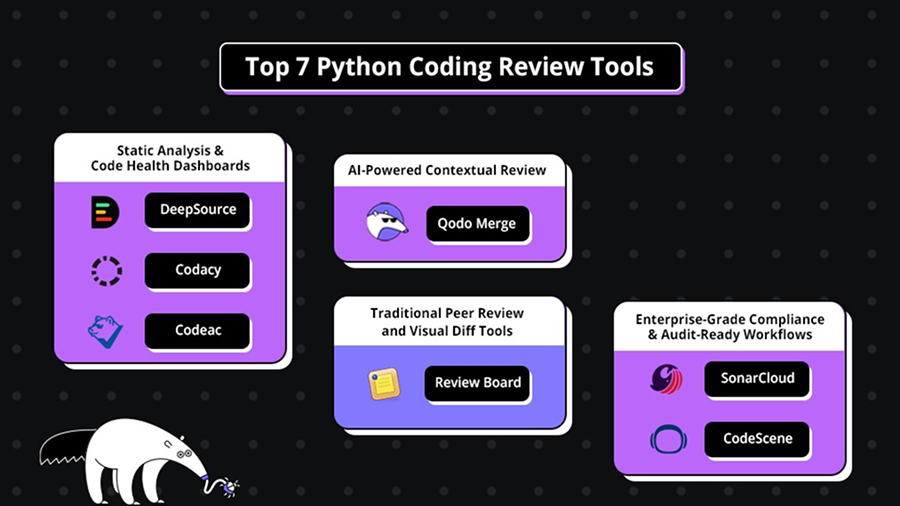

Top 7 Python Code Review Tools

I’ve grouped the tools into four categories:

- AI-Powered Contextual Review

- Static Analysis & Code Health Dashboards

- Enterprise-Grade Compliance & Audit-Ready Workflows

- Traditional Peer Review and Visual Diff Tools

AI-Powered Contextual Review

Qodo Merge

As a senior DevOps engineer working on large-scale Python projects, I need a code review tool that goes beyond catching syntax errors. Among modern Python code review tools, Qodo addresses this need with its AI-powered tool, Qodo Merge. It understands how each code change affects the entire codebase, including architecture, logic, and dependencies, and offers intelligent, context-aware suggestions that improve logic, flag edge cases, and boost overall Python code quality.

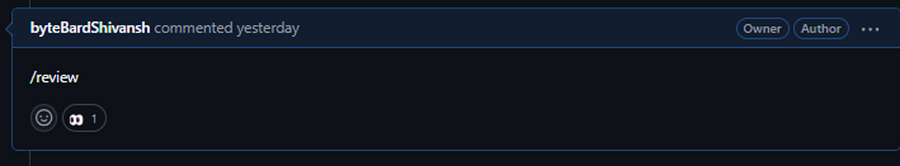

While working on a Python utility module, I pushed a basic implementation with a few rough edges.

For example, I added a simple function to calculate the average of an integer list:

```python
def calcAverage(l):
    total = 0
    for x in l:
        total += x
    return total / len(l)  # no zero division check
```

It looked fine to me until Qodo Merge jumped in and flagged something important:

“There’s no check for an empty list here. This can cause a division by zero error.”

Without Qodo Merge, that issue could easily have slipped into production: if anyone passed an empty list, the function would have raised a ZeroDivisionError at runtime.
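One possible fix, not necessarily the exact change Qodo Merge proposed, is to validate the input up front and raise a clear error. Whether an empty list should raise, return None, or return 0 is a design decision for your codebase; raising keeps the failure explicit. The standard library’s statistics.mean() makes the same choice and raises StatisticsError for empty data.

```python
def calc_average(values):
    """Return the arithmetic mean of `values`.

    Raises ValueError for empty input instead of failing with ZeroDivisionError.
    """
    if not values:
        raise ValueError("calc_average() needs at least one value")
    return sum(values) / len(values)

print(calc_average([2, 4, 9]))  # 5.0
```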

It didn’t stop there. Qodo Merge also noticed that I used “l” as a variable name, which is easy to confuse with the digit 1. Then it pointed out that my flattenNestedList function was overly complicated. Here’s what I had written:

```python
def flattenNestedList(input):
    flat_list = []
    for i in range(len(input)):
        if input[i] != []:
            for j in range(len(input[i])):
                flat_list.append(input[i][j])
    return flat_list
```
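For comparison, here is a simpler equivalent sketch (my own rewrite, not Qodo’s verbatim suggestion). Like the original, it flattens exactly one level of nesting, but it uses itertools.chain.from_iterable instead of index arithmetic, drops the redundant empty-list check, and avoids shadowing the built-in input().

```python
from itertools import chain

def flatten_nested_list(nested):
    """Flatten one level of nesting, e.g. [[1, 2], [], [3]] -> [1, 2, 3]."""
    # Empty sublists contribute no items, so they need no special case.
    return list(chain.from_iterable(nested))

print(flatten_nested_list([[1, 2], [], [3]]))  # [1, 2, 3]
```

A list comprehension, [item for sub in nested for item in sub], is an equally idiomatic alternative.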

What I loved was how easily I could get help. I just typed /review in the PR comments, and within seconds Qodo replied with smart suggestions, including how serious each issue was.

It acted like an experienced developer reviewing my code, and it saved me time by pointing out risky logic, naming problems, and style issues automatically.

You can even control which repos Qodo has access to, which is nice. And if you change your mind later, it’s easy to update.

Pros:

- Catches logic issues like division by zero, not just style.

- Comment /review or /describe and get insights in seconds.

- Understands code behavior, not just syntax.

- Free for individuals & open source.

Cons:

- Contextual analysis may be slower on larger codebases.

Pricing

The free plan gives you access to AI-powered code reviews, bug detection, and code documentation, along with support from the community. If you need more, the Pro Plan costs $15 per user each month and includes extras like code autocomplete, pre-pull request reviews, and standard support.

Static Analysis & Code Health Dashboards

DeepSource

I recently came across DeepSource while working on an ML model. After trying out several Python code review and code quality tools, DeepSource stood out for its quick setup and smart, context-aware feedback. Even in the training code, it caught poorly structured exception handling, something that could have slowed down the entire training process.

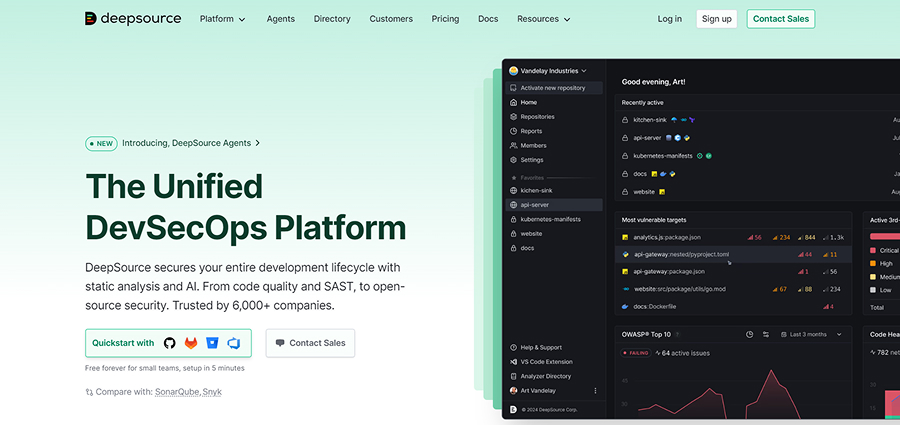

Later, while reviewing a team project that generates Python function variations using OpenAI Evals, I used DeepSource again. It flagged the following line:

```python
base_cases.append(param_default + [set(), {1, 2, 3}, {i for i in range(10)}])
```

It pointed out that the set comprehension {i for i in range(10)} was unnecessary and suggested replacing it with set(range(10)) for better performance and readability.

That level of detail and practical feedback has made DeepSource a go-to tool in my review workflow.

Pros:

- Easy to set up and integrates well with CI

- Catches common Python mistakes (e.g., unused variables, bad patterns)

- Offers auto-fix suggestions

- Fast, modern UI

Cons:

- Limited rule customization

Pricing:

Free for small teams; paid plans start at $10/month.

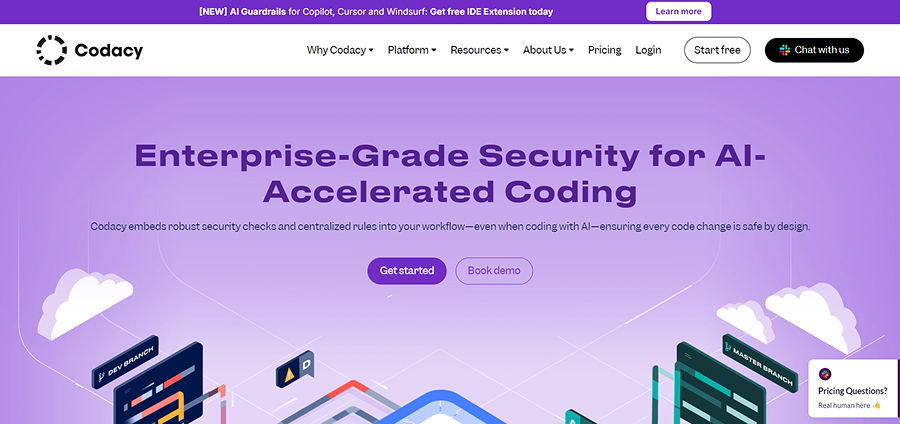

Codacy

Another tool that made it to my list after a recent review was Codacy. I used it while checking a project that builds AI-generated summaries of GitHub repositories. The setup was quick, and the dashboard made it easy to see code quality issues right away.

Codacy flagged this line in one of the files:

```python
return True, eval(output), float(time_taken)
```

It warned that using eval() on dynamic input can be dangerous, especially if there’s even a small chance the data could be manipulated. It suggested switching to json.loads() or another safer method to handle the data.

What I liked most is that Codacy didn’t just point out surface-level stuff; it focused on real issues that could impact security.
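A sketch of the safer approach, assuming the output is either JSON or a plain Python literal; the parse_result name is hypothetical, since the original function isn’t shown. json.loads and ast.literal_eval only build data structures and cannot call functions or import modules, so a payload like __import__('os') is rejected instead of executed. The ast.literal_eval documentation still warns that crafted input can crash the interpreter or exhaust memory, so it’s worth limiting input size.

```python
import ast
import json

def parse_result(output: str):
    """Parse evaluator output as data without executing it."""
    try:
        # Preferred: strict JSON.
        return json.loads(output)
    except json.JSONDecodeError:
        # Fallback: Python literals only (dicts, lists, tuples, numbers, strings, ...).
        # Raises ValueError or SyntaxError for anything that isn't a literal.
        return ast.literal_eval(output)

print(parse_result('{"score": 0.9}'))  # {'score': 0.9}
print(parse_result("(1, 'ok')"))        # (1, 'ok')
```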

Pros:

- Supports multiple languages and repo types

- Offers team-wide dashboards and metrics

- Helps enforce code style and security rules

- Integrates well with GitHub/GitLab

Cons:

- UI can feel a bit cluttered

- Sometimes flags overly minor issues

Pricing:

Free tier available; premium plans start at $15/user/month.

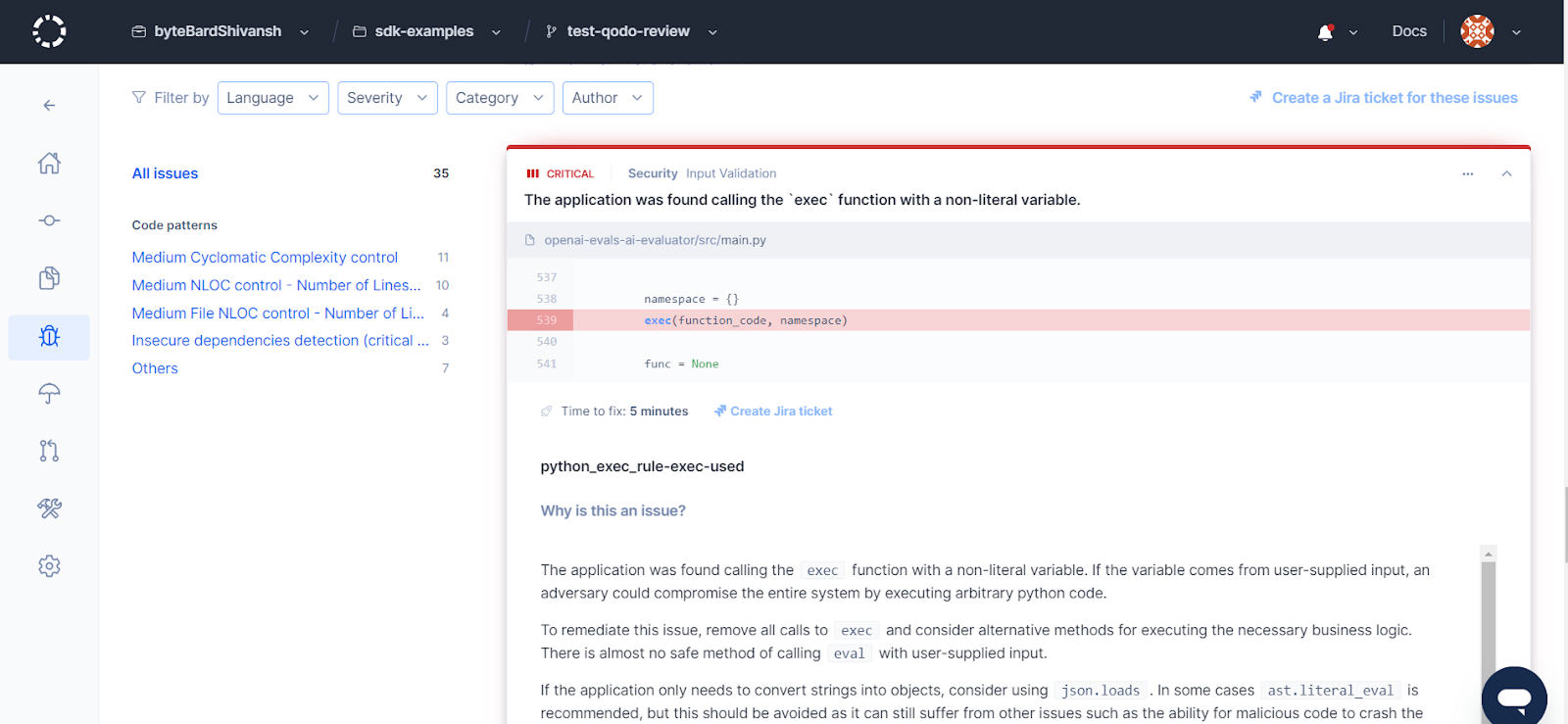

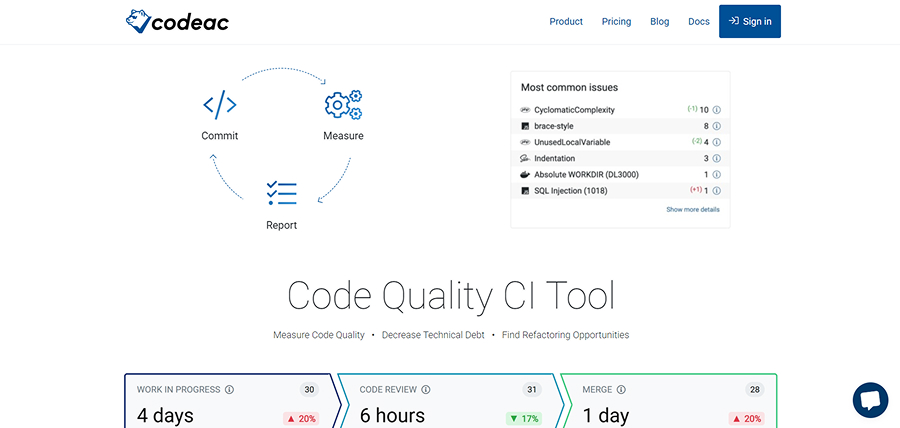

Codeac

In fast-paced projects, balancing speed and code quality can be a real challenge. While exploring new code review tools for my team, I came across Codeac. What stood out to me was that it not only checks for code issues but also tracks how long changes take to move from “in progress” to “merged.”

Codeac’s dashboard gave a quick overview of code health across multiple repos. One of the main issues it flagged was a syntax error in the following line:

```python
logger.info(f"Found {len(results['recent_commits'].split('\n'))} commits")
```

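Codeac explained that this f-string contains a backslash ('\n') inside the replacement-field expression, which is a syntax error on Python versions before 3.12. Python 3.12 (PEP 701) lifted that restriction, so the line only fails on older interpreters.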

What I liked was how Codeac surfaced this issue clearly with a breakdown of issues per repo, making it easier to decide where to focus. Codeac isn’t as AI-heavy, but it earns its place among lightweight Python code quality tools by focusing on delivery speed and syntax.
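A fix that works on every Python 3 version is to move the split out of the f-string so the replacement field contains no backslash. The sample results dictionary below is hypothetical; in the original code it came from elsewhere.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Hypothetical sample data standing in for the real results dict.
results = {"recent_commits": "a1b2c3 fix typo\nd4e5f6 add tests\n0f9e8d bump version"}

# Split outside the f-string: no backslash inside the replacement field.
commit_lines = results["recent_commits"].split("\n")
logger.info(f"Found {len(commit_lines)} commits")
```

str.splitlines() avoids the escape entirely, but it doesn’t return a trailing empty string when the text ends with a newline, so the two counts can differ.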

Pros:

- Tracks both code quality and delivery speed (cycle time)

- Highlights syntax issues, best practices, and naming problems

- Shows issue counts per repo for prioritization

- Simple UI, easy repo setup

Cons:

- Fewer advanced AI/code suggestions

Pricing:

Free plan for open-source projects; Pro plan at $21/user/month.

Enterprise-Grade Compliance & Audit-Ready Workflows

SonarQube

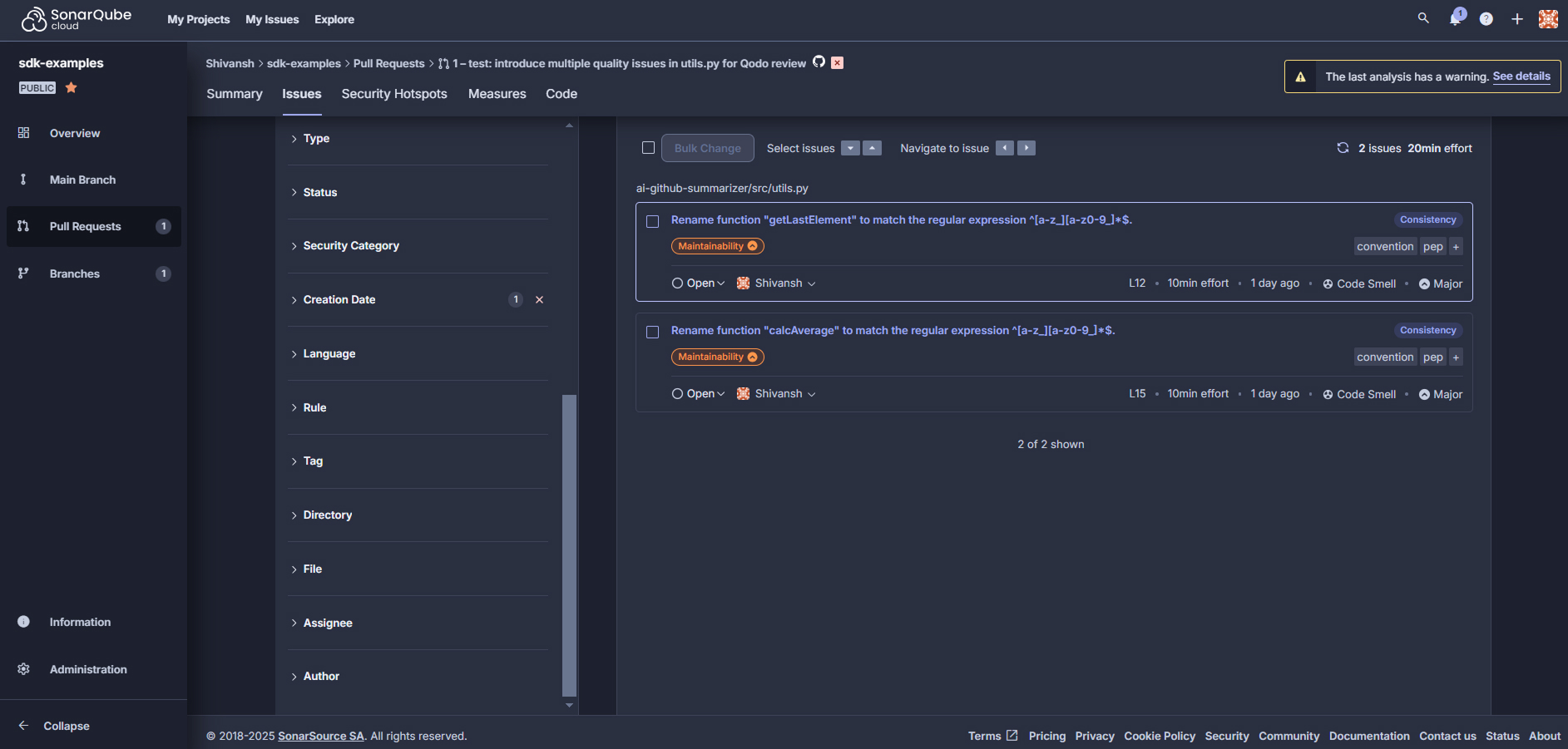

Another tool I explored during code review was SonarQube. Its review panel didn’t just surface bugs; it focused on clean code principles, like naming conventions, maintainability, and code smells. One of the first things it flagged was a naming issue in these two functions:

```python
def getLastElement(list):
    ...

def calcAverage(l):
    ...
```

SonarQube explained that these names don’t follow Python’s PEP 8 naming conventions. It suggested using snake_case, like ‘get_last_element’ and ‘calc_average’, to ensure consistency across the codebase.

It also tagged both findings as “Code Smell” with a maintainability rating and a medium effort estimate (10 minutes each). That kind of feedback is easy to act on, and it scales well when reviewing contributions across a team, where consistent naming, fewer code smells, and overall clarity matter most.

Pros:

- Great for enforcing naming conventions and clean code

- Tracks maintainability, bugs, and code smells

Cons:

- Can feel heavyweight for small projects

- Requires tuning to avoid noisy reports

Pricing:

SonarQube offers a free Community Edition; paid plans start at $160/year.

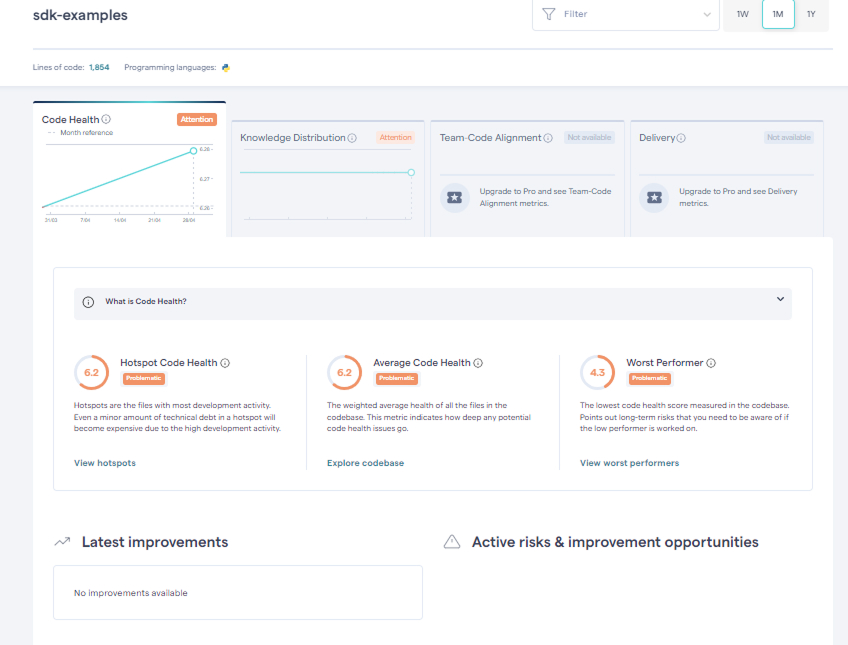

CodeScene

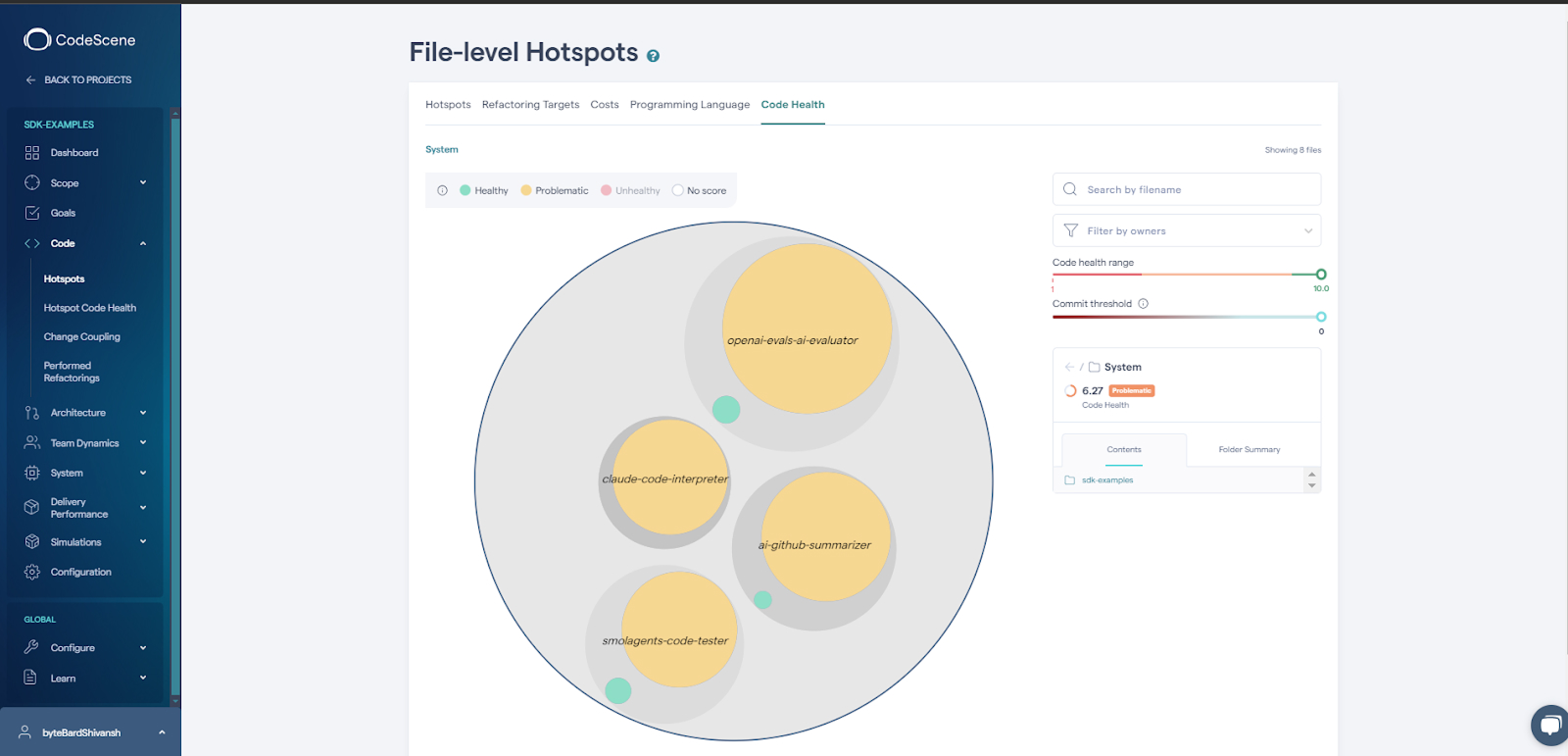

With multiple submodules and contributors involved, I needed a tool that could highlight not just code smells, but architectural and team-level risks too.

That’s where CodeScene stood out. Its “File-level Hotspots” view helped me instantly spot which parts of the codebase were both frequently changed and problematic in quality.

In the visual analysis, modules like openai-evals-ai-evaluator, ai-github-summarizer, and claude-code-interpreter were flagged as problematic, while only a few showed as healthy. The overall system had a code health score of 6.27, which CodeScene labeled as “Problematic.”

CodeScene combines analytics and reviews, making it one of the most insightful code quality tools Python teams can use to track risky areas and plan refactors.

Pros:

- Visualizes hotspots and areas of code that are risky and frequently changed

- Combines code quality with team behavior insights

- Helps prioritize refactoring based on real-world impact

- Detects delivery risks early

Cons:

- Requires commit history access

- UI has a learning curve for first-time users

Pricing:

Custom pricing: free tier for small/open-source projects. Contact for enterprise plans.

Traditional Peer Review and Visual Diff Tools

Review Board

Review Board is a self-hosted tool that’s been around for a long time, and it’s built for doing code reviews in a clear and organized way. I used it a lot for Python projects, especially when working with teams where multiple people were reviewing code across different branches.

What I liked most was how Review Board keeps everything in one place: the description, testing notes, commit ID, and who’s reviewing what. Even for small changes, it’s easy to leave comments, track discussions, and follow up later. It’s a great tool when you want to focus on thoughtful reviews rather than just running automated checks.

Pros:

- Focuses on discussion, not just automation

- Great for detailed reviews and legacy code

Cons:

- Outdated UI compared to modern tools

- No built-in automation or code analysis

Pricing

Offers a Basic plan at $6/user/month and a Business plan at $12/user/month, with more integrations and premium features in the latter.

You can also explore this detailed breakdown of popular code review tools to compare features and use cases.

Python Best Practices for Code Quality

Writing good Python code isn’t just about removing syntax errors; it’s also about maintaining strong Python code quality by making your code easy to read, test, and scale. Here are some simple habits that help me keep my code clean and readable:

1. Write Modular and Reusable Code

Break your code into small, clear functions that each do one job. This makes it easier to reuse, test, and understand.

2. Avoid Global Variables

Global variables can lead to bugs that are hard to track down, because any function can change them. Instead, pass values between functions as arguments, or keep related state on a class instance.
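A small before-and-after sketch with hypothetical names: the first version keeps a retry counter in a module-level global, while the second keeps that state on an object that callers create and pass around explicitly, so two independent jobs can’t interfere with each other.

```python
# Global-state version: every caller shares one counter, so results depend on call order.
retry_count = 0

def record_failure_global():
    global retry_count
    retry_count += 1
    return retry_count

# Explicit-state version: each job owns its own tracker instance.
class RetryTracker:
    def __init__(self, limit: int = 3):
        self.limit = limit
        self.count = 0

    def record_failure(self) -> bool:
        """Record a failure; return True while more retries are allowed."""
        self.count += 1
        return self.count < self.limit
```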

3. Add Comments and Docstrings

Write short comments and docstrings while you code. Explain what each function does and why certain steps are important.

4. Keep an Eye on Code Performance

Use tools like cProfile to find which parts of your code are slow, then focus your effort there; a small change in a hot spot can make your code much faster.
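A minimal profiling sketch using the standard library’s cProfile and pstats modules. The two deduplication functions are hypothetical examples: one does a list membership check inside a loop (quadratic time), the other uses dict.fromkeys (linear time, and it keeps first-seen order because dicts preserve insertion order). The profile output makes the difference easy to see.

```python
import cProfile
import io
import pstats

def slow_unique(items):
    # O(n^2): `x not in seen` scans the whole list on every iteration.
    seen = []
    for x in items:
        if x not in seen:
            seen.append(x)
    return seen

def fast_unique(items):
    # O(n): dict keys are unique and keep insertion order.
    return list(dict.fromkeys(items))

data = list(range(3000)) * 2

profiler = cProfile.Profile()
profiler.enable()
slow_unique(data)
fast_unique(data)
profiler.disable()

# Print the five most expensive entries by cumulative time.
buffer = io.StringIO()
pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(5)
print(buffer.getvalue())
```

For a whole script, python -m cProfile -s cumulative your_script.py produces the same kind of report without code changes.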

Conclusion

Reviewing Python code helps teams write clean, secure, and reliable software. It’s not just about fixing errors; it’s about making sure the code is easy to understand and safe to use.

Tools like Qodo Merge make this process much easier. Qodo Merge gives smart suggestions, highlights important issues, and even explains how a change might affect the rest of the code. Along with tools like DeepSource and Codacy, it helps teams catch problems early and review code faster.

If you want to save time, reduce bugs, and keep your codebase in good shape, adding Python code review tools like Qodo to your workflow is a great way to start. And if you’re exploring more AI tools beyond code reviews, this list of top AI coding assistants is worth a look.