How to Build AI-Powered Pull Request Review That Scales With Development Speed

TL;DR

- AI-powered pull request (PR) review automates PR analysis, but breaks down if it isn’t fully connected to your company’s codebase and CI/CD workflows. Most teams treat it as “smarter comments” when they actually need systematic enforcement.

- PR review should function as a decision layer, not a commenting bot. It needs full codebase context, executable policies, and integration with a CI/CD pipeline to generate allow/warn/block decisions before human review begins.

- This guide shows you how to implement AI-powered PR review properly: the 7 critical capabilities your system needs, a 6-stage workflow that scales, and how AI code review platforms like Qodo handle the parts of PR review that don’t scale manually.

Your team merged 10x more pull requests last year than it did three years ago. AI coding assistants drove much of that velocity. GitHub Copilot, Cursor, and similar tools now generate 20-30% of production code in active projects. The problem isn’t writing code anymore. It’s validating what gets merged.

I’ve worked with engineering teams from 50-person startups to Fortune 100 enterprises on their code review systems. Here’s the pattern I see everywhere: AI dramatically speeds up code generation, but review still depends on senior engineers manually inspecting diffs, one PR at a time. The bottleneck shifted from authoring to approval.

The consequences are predictable and measurable:

- Review queues keep growing: PR volume increases 3-5x while review capacity stays flat

- Quality degrades silently: Broken access control now affects 151,000+ repositories with 172% YoY growth

- Senior engineers become blockers: Your most experienced developers spend 40-60% of their time on manual review work

- AI-generated code escapes validation: Code that passes tests but silently omits authentication, violates API contracts, or breaks downstream services

The cost shows up in production incidents, security vulnerabilities, and engineer burnout. Teams that solve this don’t just add AI comments to PRs. They treat AI-powered PR review as infrastructure that enforces the organization’s coding standards, detects risk, and surfaces context before human reviewers see the change.

Traditional Review vs. AI-powered PR Review: Understanding the Shift

Before implementing AI-powered PR review, you need to understand which model you’re replacing. Most failures come from treating PR review as “faster manual review” instead of a fundamentally different approach.

| What Gets Evaluated | Traditional Manual Review | AI-powered PR Review |
| --- | --- | --- |
| Policy enforcement | Inconsistent (depends on reviewer memory) | Automated, applied to every PR |
| Cross-repo impact | Missed unless the reviewer knows all dependencies | Analyzed automatically via dependency graphs |
| Risk detection | Implicit reviewer judgment | Explicit classification before review |
| Security patterns | Visual inspection of the diff | Context-aware analysis across the codebase |
| Test adequacy | Basic coverage check, no behavior validation | Checks whether tests cover changed behavior paths |
| Review capacity | Scales linearly with reviewers | Scales with automation infrastructure |
| Audit trail | Comment threads and approvals | Structured decisions with policy references |

The primary difference: Traditional review forces senior engineers to manually check coding standards, test coverage, and security rules on every PR. AI-powered PR review handles these repetitive checks automatically, so engineers focus exclusively on design decisions, business logic, and whether the implementation matches what was actually needed.

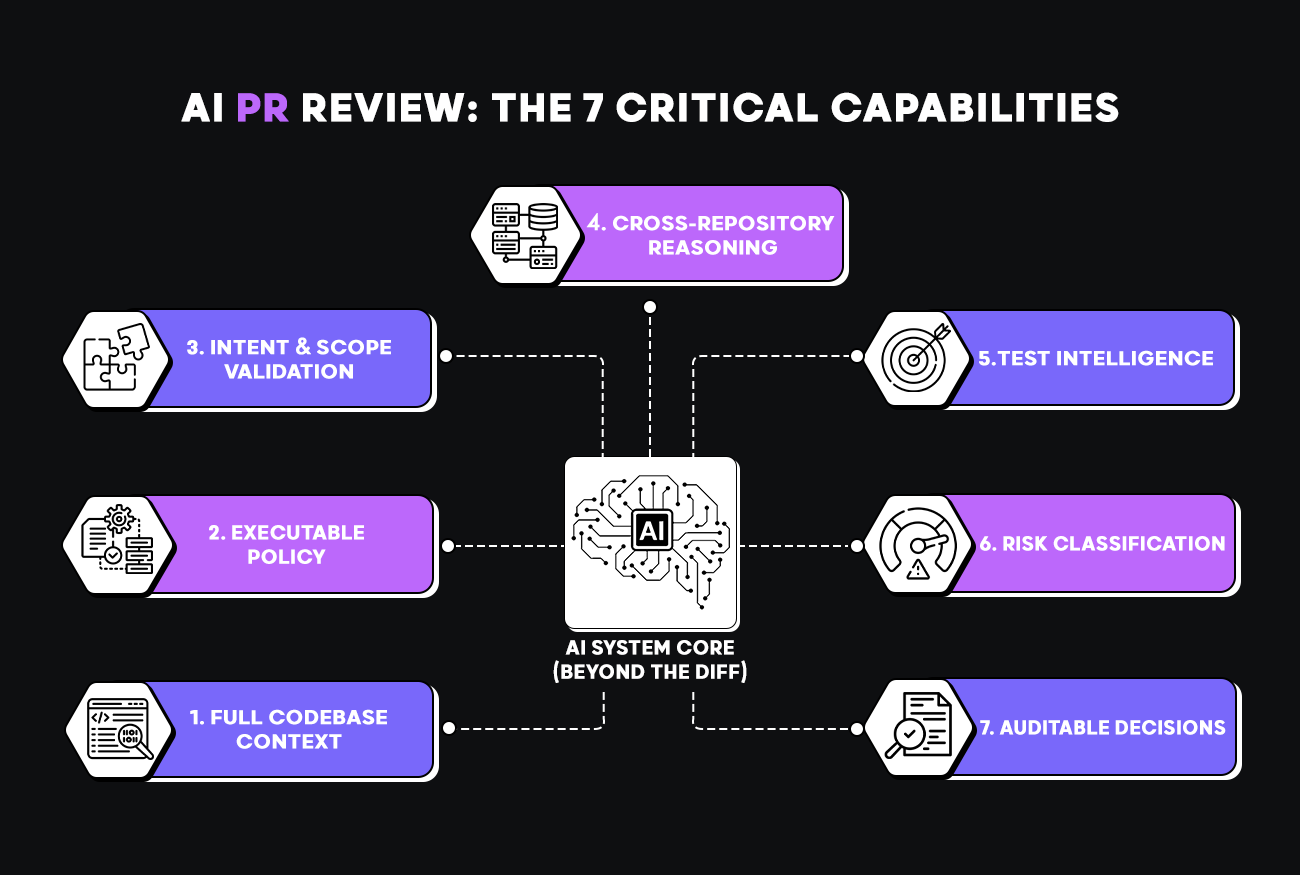

AI-powered PR Review: 7 Critical Capabilities Your System Must Have

AI-powered PR review only works when it can reason beyond the diff. Here are the seven capabilities that separate functional AI review from glorified linters:

1. Full Codebase Context

The problem: Tools that only see the changed files miss how those changes affect the rest of the system.

What PR review requires:

- Understanding of dependency graphs across repositories

- Knowledge of which services consume changed APIs or libraries

- Awareness of architectural boundaries and contracts

- Historical context about what broke before in this code area

Example: A developer updates a shared authentication library. A review that sees only the library file will miss that Services A, B, and C all depend on the old method signature. A review with full codebase context flags all three services as affected before the merge.
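A minimal sketch of the blast-radius lookup in the example above, assuming the review system has already indexed a reverse-dependency map (which components consume which). The component names are hypothetical; a breadth-first walk picks up indirect consumers as well as direct ones:

```python
from collections import deque

# Hypothetical reverse-dependency map: component -> components that depend on it.
# A real system would build this by indexing imports and manifests across repositories.
DEPENDENTS = {
    "auth-lib": ["service-a", "service-b", "service-c"],
    "service-b": ["gateway"],
    "service-a": [],
    "service-c": [],
    "gateway": [],
}


def affected_components(changed: str, dependents: dict[str, list[str]]) -> list[str]:
    """Return every component that directly or transitively depends on `changed`."""
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in dependents.get(current, []):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return sorted(seen)


print(affected_components("auth-lib", DEPENDENTS))
# ['gateway', 'service-a', 'service-b', 'service-c']
```

Here gateway surfaces only because it depends on service-b, which is exactly the kind of indirect impact a diff-only review cannot see.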

2. Executable Policy, Not Documentation

The problem: Coding standards wikis, security requirement documents, and API design guidelines that engineers are expected to remember and enforce manually.

What PR review requires:

- Machine-checkable rules for security, logging, error handling, and API design

- Version-controlled policy configurations

- Automatic application of rules to every PR

- Clear violation messages with remediation guidance

Example: Your security team requires structured logging for all API endpoints. Instead of hoping reviewers remember this, PR review automatically checks every new endpoint against the logging pattern and blocks the merge if the requirement isn’t met.
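One way to make the logging rule executable, sketched with Python’s standard ast module. It assumes endpoints are functions decorated like @router.get(...) or @app.route(...), and it treats structured logging as a logger call that passes extra={...}; both conventions are assumptions for illustration, not requirements of any particular framework:

```python
import ast

# Assumption: endpoints are decorated like @router.get(...) or @app.route(...).
ENDPOINT_DECORATORS = {"get", "post", "put", "patch", "delete", "route"}
LOG_METHODS = {"debug", "info", "warning", "error", "exception", "critical"}


def is_endpoint(func: ast.AST) -> bool:
    for dec in func.decorator_list:
        target = dec.func if isinstance(dec, ast.Call) else dec
        if isinstance(target, ast.Attribute) and target.attr in ENDPOINT_DECORATORS:
            return True
    return False


def has_structured_log(func: ast.AST) -> bool:
    # "Structured" here means a logger.<level>(...) call that passes extra={...}.
    for node in ast.walk(func):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in LOG_METHODS
            and any(kw.arg == "extra" for kw in node.keywords)
        ):
            return True
    return False


def check_logging_policy(source: str) -> list[str]:
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and is_endpoint(node):
            if not has_structured_log(node):
                violations.append(
                    f"line {node.lineno}: endpoint '{node.name}' has no structured log call "
                    "(expected logger.<level>(..., extra={...}))"
                )
    return violations


SAMPLE = '''
@router.get("/users")
def list_users():
    logger.info("list_users", extra={"endpoint": "/users"})
    return []

@router.post("/users")
def create_user(payload):
    return payload
'''

print(check_logging_policy(SAMPLE))
```

Run against SAMPLE, it reports one violation, for create_user. A CI wrapper could turn any non-empty result into a failing check, which is what blocking the merge means in practice.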

3. Intent and Scope Validation

The problem: PRs that implement more (or different) functionality than the linked ticket calls for.

What PR review requires:

- Automatic linking between PRs and work items (Jira, GitHub Issues, Azure DevOps, etc.)

- Analysis checking implementation against the original ticket scope

- Detection of “extra” functionality added beyond requirements/acceptance criteria

Example: A ticket requests adding pagination to an API endpoint. The PR implements pagination but also modifies unrelated business logic in the same service. AI-powered PR review flags the scope creep, requiring either a separate ticket or removal of out-of-scope changes.
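Scope validation is mostly a semantic problem, but a file-level heuristic shows the shape of the check. This sketch assumes the linked ticket’s scope has already been expressed as path globs (the TICKET_SCOPE record is hypothetical) and flags changed files that fall outside it:

```python
from fnmatch import fnmatch

# Hypothetical scope derived from the linked ticket, expressed as path globs.
TICKET_SCOPE = {
    "id": "API-123",
    "summary": "Add pagination to GET /orders",
    "paths": ["services/orders/api/*", "services/orders/tests/*"],
}


def out_of_scope(changed_files: list[str], scope_globs: list[str]) -> list[str]:
    """Changed files that match none of the ticket's scope globs."""
    return [f for f in changed_files if not any(fnmatch(f, g) for g in scope_globs)]


changed = [
    "services/orders/api/list_orders.py",
    "services/orders/tests/test_pagination.py",
    "services/orders/billing/discounts.py",  # unrelated business logic
]
print(out_of_scope(changed, TICKET_SCOPE["paths"]))
# ['services/orders/billing/discounts.py']
```

A real reviewer would go further and compare what the code does against the acceptance criteria; the path check only catches the most obvious scope creep.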

4. Cross-Repository and Multi-Service Reasoning

The problem: Changes that look safe in isolation but break other services or repositories.

What PR review requires:

- Tracking of shared libraries, SDKs, and contracts across repos

- Analysis of how changes propagate through microservices

- Detection of API/schema modifications that affect downstream consumers

Example: An SDK is updated in Repository A. Services in Repositories B, C, and D depend on it. AI-powered review identifies that Repository C’s integration tests will fail with the new SDK version and blocks the merge until downstream services are validated against the updated SDK interface.
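A simplified sketch of detecting an SDK change that breaks consumers: compare the public function signatures of the old and new module source and report removed functions, removed parameters, or newly required positional arguments. It ignores keyword-only arguments, reordering, and return types, and the SDK contents are hypothetical:

```python
import ast


def public_signatures(source: str) -> dict[str, tuple[list[str], int]]:
    """Map each top-level public function to (positional params, number required)."""
    sigs = {}
    for node in ast.parse(source).body:
        if isinstance(node, ast.FunctionDef) and not node.name.startswith("_"):
            params = [a.arg for a in node.args.posonlyargs + node.args.args]
            required = len(params) - len(node.args.defaults)
            sigs[node.name] = (params, required)
    return sigs


def breaking_changes(old_src: str, new_src: str) -> list[str]:
    old, new = public_signatures(old_src), public_signatures(new_src)
    problems = []
    for name, (old_params, old_required) in old.items():
        if name not in new:
            problems.append(f"{name}: removed")
            continue
        new_params, new_required = new[name]
        removed = [p for p in old_params if p not in new_params]
        if removed:
            problems.append(f"{name}: parameter(s) removed: {', '.join(removed)}")
        if new_required > old_required:
            problems.append(f"{name}: now requires {new_required} positional args (was {old_required})")
    return problems


OLD_SDK = '''
def connect(host, port=443):
    ...

def close(conn):
    ...
'''

NEW_SDK = '''
def connect(host, port, timeout):
    ...
'''

print(breaking_changes(OLD_SDK, NEW_SDK))
```

In a multi-repo setup, each finding would then be checked against call sites in the consuming repositories before deciding whether to block.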

5. Test Intelligence

The problem: Teams optimize for coverage percentages while shipping untested behavior paths.

What PR review requires:

- Detection of changed logic paths that lack corresponding tests

- Analysis of whether tests validate actual application or service behavior

- Evaluation of both unit and integration tests

Example: A pricing function is updated with a new discount type. Line coverage stays at 100%, yet no test exercises the new discount path. AI-powered PR review identifies the missing test case.
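A toy illustration of how 100% line coverage can hide an untested behavior path. If the new discount type is added as data (assumed here to be a rate table), every line still executes under the old tests; comparing the defined discount types with the ones the tests exercise exposes the gap. All names are hypothetical:

```python
# Hypothetical pricing module. The new "loyalty" type is added as data,
# so the old tests still execute every line (100% line coverage).
DISCOUNT_RATES = {"none": 0.0, "seasonal": 0.10, "loyalty": 0.15}


def apply_discount(price: float, kind: str) -> float:
    return round(price * (1 - DISCOUNT_RATES[kind]), 2)


# Existing test cases: (price, discount kind, expected total).
TEST_CASES = [(100.0, "none", 100.0), (100.0, "seasonal", 90.0)]
for price, kind, expected in TEST_CASES:
    assert apply_discount(price, kind) == expected


def untested_kinds(defined: dict, cases: list) -> list[str]:
    """Discount types the implementation supports but no test exercises."""
    exercised = {kind for _, kind, _ in cases}
    return sorted(set(defined) - exercised)


print(untested_kinds(DISCOUNT_RATES, TEST_CASES))  # ['loyalty']
```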

6. Risk Classification Before Human Review

The problem: All PRs get the same level of scrutiny regardless of actual risk.

What PR review requires:

- Behavioral vs. cosmetic change detection

- Identification of security-sensitive code paths (auth, data access, encryption)

- Classification of API/schema/contract modifications

- Analysis of blast radius (how many systems are affected)

Example: A 10-line authentication change gets flagged as high-risk security. A 500-line documentation update doesn’t. The system routes each appropriately.
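A deliberately simple, rule-based sketch of classifying by what a change touches rather than how big it is. It assumes security-sensitive and contract code can be recognized from path segments; a production classifier would replace this with semantic analysis of the change:

```python
import re

# Assumed path conventions for sensitive areas (illustrative only).
SECURITY_TOKENS = {"auth", "crypto", "permissions", "session"}
CONTRACT_TOKENS = {"openapi", "schema", "proto", "migrations"}
DOC_SUFFIXES = (".md", ".rst", ".txt")


def path_tokens(path: str) -> set[str]:
    # Split on separators so "docs/authors.md" does not match "auth".
    return set(re.split(r"[/._-]+", path.lower()))


def classify(changed_paths: list[str]) -> str:
    if any(path_tokens(p) & SECURITY_TOKENS for p in changed_paths):
        return "high:security"
    if any(path_tokens(p) & CONTRACT_TOKENS for p in changed_paths):
        return "high:contract"
    if changed_paths and all(p.lower().endswith(DOC_SUFFIXES) for p in changed_paths):
        return "low:docs-only"
    return "medium"


print(classify(["src/auth/token.py"]))     # 10-line auth change
print(classify(["docs/architecture.md"]))  # 500-line docs update
```

Size never enters the decision: the first call returns high:security and the second low:docs-only, whatever their line counts.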

7. Auditable Decisions with Clear Rationale

The problem: “LGTM” approvals that don’t specify what was validated or why.

What PR review requires:

- Structured approval records stating what was checked

- Logged policy violations and override justifications

- Queryable history for incident investigation

Example: After a production incident, leadership asks: “Who approved the change that caused this? What checks ran?” AI-powered review provides a complete record: which policies were evaluated, what violations were found, who overrode what, and why.
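A sketch of what a structured, queryable decision record might contain, using Python dataclasses. The field names, policy IDs, and PR reference are hypothetical; the point is that each record states which policies ran, which failed, and who overrode what and why:

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PolicyResult:
    policy_id: str
    passed: bool
    detail: str = ""


@dataclass
class ReviewRecord:
    pr: str
    commit_sha: str
    decision: str  # "allow", "warn", "block", or "override"
    results: list[PolicyResult] = field(default_factory=list)
    override_by: Optional[str] = None
    override_reason: Optional[str] = None
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def violations(self) -> list[str]:
        return [r.policy_id for r in self.results if not r.passed]


# Hypothetical record for an overridden block.
record = ReviewRecord(
    pr="acme/payments#4812",
    commit_sha="3f9c2ab",
    decision="override",
    results=[
        PolicyResult("SEC-001", True),
        PolicyResult("LOG-002", False, "POST /refunds has no structured log call"),
    ],
    override_by="jdoe",
    override_reason="Incident hotfix; logging follow-up tracked separately",
)
print(record.violations())  # ['LOG-002']
print(json.dumps(asdict(record), indent=2))  # what gets stored and queried later
```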

How AI-Powered PR Review Works: The 6-Stage Workflow

A properly implemented AI-powered PR review system operates as a sequence of automated analysis stages. It scales because automation handles compliance enforcement and context gathering, while humans focus on judgment.

Because changes often affect multiple services and repositories, understanding the broader codebase context is essential during PR review. The 2025 Gartner Critical Capabilities for AI Code Assistants report identifies codebase understanding as a required capability for tools involved in code review. In that evaluation, Qodo ranked #1 in Codebase Understanding.

Now let’s walk through each stage of the automated review workflow.

Stage 1: Pre-Review Automated Gates

Anything that doesn’t require human judgment runs before a reviewer sees the PR.

Automatic blocking on:

- Missing or failing tests

- Linting and static analysis violations

- Ownership or approval policy breaches

- Hardcoded secrets or dependency vulnerabilities

Critical rule: If a PR fails automated gates, it never enters the human review queue.

Stage 2: Codebase Context Analysis

AI analyzes changes across the full system, identifying which services are affected, which APIs are modified, which teams own the affected code, and which dependencies exist on the changed components.
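Ownership lookup is one concrete piece of this context gathering. The sketch assumes ownership rules written in CODEOWNERS order, where the last matching pattern wins (as in GitHub’s CODEOWNERS file); fnmatch only approximates that file’s gitignore-style syntax, and the team names are hypothetical:

```python
from fnmatch import fnmatch

# Hypothetical ownership rules in CODEOWNERS order: the last matching rule wins.
OWNERSHIP = [
    ("*", ["@platform-team"]),
    ("services/payments/*", ["@payments-team"]),
    ("services/payments/auth/*", ["@security-team", "@payments-team"]),
]


def owners_for(path: str) -> list[str]:
    owners: list[str] = []
    for pattern, teams in OWNERSHIP:
        if fnmatch(path, pattern):
            owners = teams  # later matches override earlier ones
    return owners


def reviewers_needed(changed: list[str]) -> list[str]:
    return sorted({owner for path in changed for owner in owners_for(path)})


print(reviewers_needed(["services/payments/auth/token.py", "README.md"]))
# ['@payments-team', '@platform-team', '@security-team']
```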

Stage 3: Policy and Standards Enforcement

The system applies organizational rules automatically: security requirements, coding standards, architectural constraints, and compliance requirements.
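A sketch of configuration-driven enforcement: each rule carries a severity and is applied only to lines added in a unified diff, so existing code does not trigger findings. The rule IDs and patterns are illustrative assumptions; in practice they would live in a version-controlled policy file:

```python
import re

# Illustrative policy set; a real one would be loaded from a version-controlled file.
POLICIES = [
    {"id": "PY-EVAL", "pattern": r"\beval\(", "severity": "block",
     "message": "eval() is forbidden"},
    {"id": "PY-PRINT", "pattern": r"^\s*print\(", "severity": "warn",
     "message": "use the logging module instead of print()"},
]
HUNK = re.compile(r"@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def added_lines(unified_diff: str):
    """Yield (new_line_number, text) for lines added in a single-file unified diff."""
    new_line, in_hunk = 0, False
    for raw in unified_diff.splitlines():
        hunk = HUNK.match(raw)
        if hunk:
            new_line, in_hunk = int(hunk.group(1)), True
        elif not in_hunk:
            continue  # file headers (---, +++) before the first hunk
        elif raw.startswith("+"):
            yield new_line, raw[1:]
            new_line += 1
        elif raw.startswith(" "):
            new_line += 1  # context lines advance the new-file counter


def evaluate(unified_diff: str) -> list[dict]:
    findings = []
    for lineno, text in added_lines(unified_diff):
        for rule in POLICIES:
            if re.search(rule["pattern"], text):
                findings.append({"rule": rule["id"], "severity": rule["severity"],
                                 "line": lineno, "message": rule["message"]})
    return findings


DIFF = """\
--- a/app/handlers.py
+++ b/app/handlers.py
@@ -10,2 +10,3 @@
 def handle(req):
-    return run(req)
+    print("handling", req)
+    return eval(req.body)
"""

for finding in evaluate(DIFF):
    print(finding)
```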

Stage 4: Risk Classification and Routing

Before human review begins, the system classifies the PR and routes it appropriately based on behavioral change analysis, security-sensitive code paths, and downstream system impact.

Stage 5: Human Review Focused on Judgment

Once automation has enforced rules and surfaced context, human reviewers focus on: Does the implementation match the stated intent? Are the architectural trade-offs appropriate? Will this be maintainable long-term?

Stage 6: Auditability and Merge Decision

The final stage produces a traceable decision: Allow (all checks passed), Warn (non-blocking issues detected), Block (policy violation requires resolution), or Override (blocking issue bypassed with logged justification).
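The four outcomes can be derived from finding severities with a small function. This sketch assumes each finding carries a severity of warn or block and that an override requires a non-empty written justification; checking who is allowed to override is left out:

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"
    OVERRIDE = "override"


def decide(severities: list[str], override_reason: Optional[str] = None) -> Decision:
    """Collapse finding severities ("warn" / "block") into one merge decision."""
    if "block" in severities:
        if override_reason and override_reason.strip():
            return Decision.OVERRIDE  # bypass only with a logged justification
        return Decision.BLOCK
    if "warn" in severities:
        return Decision.WARN
    return Decision.ALLOW


print(decide([]), decide(["warn"]), decide(["warn", "block"]))
print(decide(["block"], "Hotfix approved by on-call lead"))
```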

How Qodo Implements Production-Grade PR Review

The workflow above requires significant infrastructure: codebase indexing, policy engines, risk classifiers, CI/CD integration, and audit systems. Most organizations don’t have the resources to build this internally.

This is where platforms like Qodo become relevant. They provide the infrastructure layer so teams can focus on defining their policies and standards, not building review systems.

What Qodo Actually Does

Qodo is an AI code review platform built specifically to implement the six-stage workflow described above:

- Full codebase indexing: Qodo indexes your entire codebase across all repositories, building dependency graphs, API usage maps, and historical change patterns.

- Pre-review automation: Baseline checks run automatically (test coverage verification, security vulnerability scanning, policy compliance validation, ticket linkage enforcement).

- Context-aware risk detection: Qodo classifies PRs by actual risk. A 10-line authentication change gets flagged as high-risk security. A 500-line documentation update doesn’t. It catches issues traditional static analysis misses: broken access control patterns in AI-generated code, missing authentication checks, and cross-repo breaking changes.

- Executable policy enforcement: Your organization’s standards are encoded in configuration files (e.g., pr_agent.toml). Qodo checks every PR against these rules automatically.

- Integration with existing workflows: Works with GitHub, GitLab, Bitbucket, Jira, Azure DevOps, Linear, Jenkins, GitHub Actions, GitLab CI, CircleCI, Slack, and Microsoft Teams.

- Deployment options for enterprise:

  - SaaS: Managed by Qodo, fastest to deploy

  - Private VPC: Runs in your cloud, you control the network

  - On-premises: Air-gapped deployment for regulated environments

  - Zero data retention: Option to ensure no code persists in Qodo’s systems

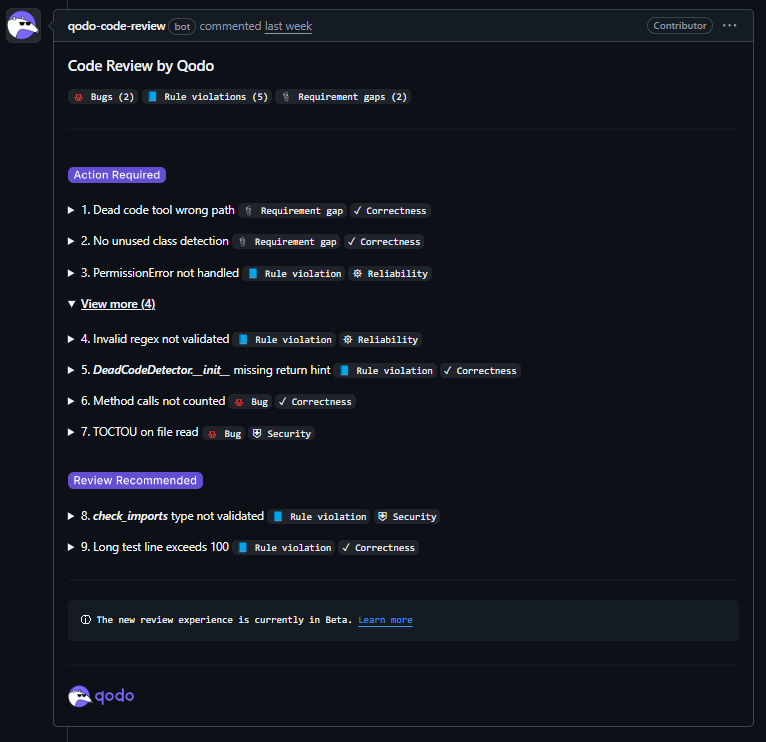

Python MCP Workshop PR Review: Dead Code Detector Implementation

A Python MCP (Model Context Protocol) workshop project was adding a new dead code detection tool. The PR (feat: add dead code detector tool #54) introduced:

- A complete dead_code_detection module with AST-based analysis

- Detection for unused imports, variables, functions, and parameters

- Unreachable code detection (after return/raise/break/continue)

- 64 comprehensive tests covering all patterns

- MCP server integration with JSON Schema validation

The PR included 269 passing tests, clean formatting (ruff check passed), and proper documentation. The implementation looked production-ready. But here’s how Qodo’s review workflow evaluated it:

Issue 1: Wrong Module Path Structure

The implementation placed the new tool under:

src/workshop_mcp/dead_code_detection/

But the repository’s prescribed structure requires:

src/workshop_mcp/tools/dead_code_detection/

Result: This breaks the project’s tool discoverability pattern and can cause import/packaging inconsistencies across the MCP server. A human reviewer focused on the detection logic would likely miss this structural violation entirely.

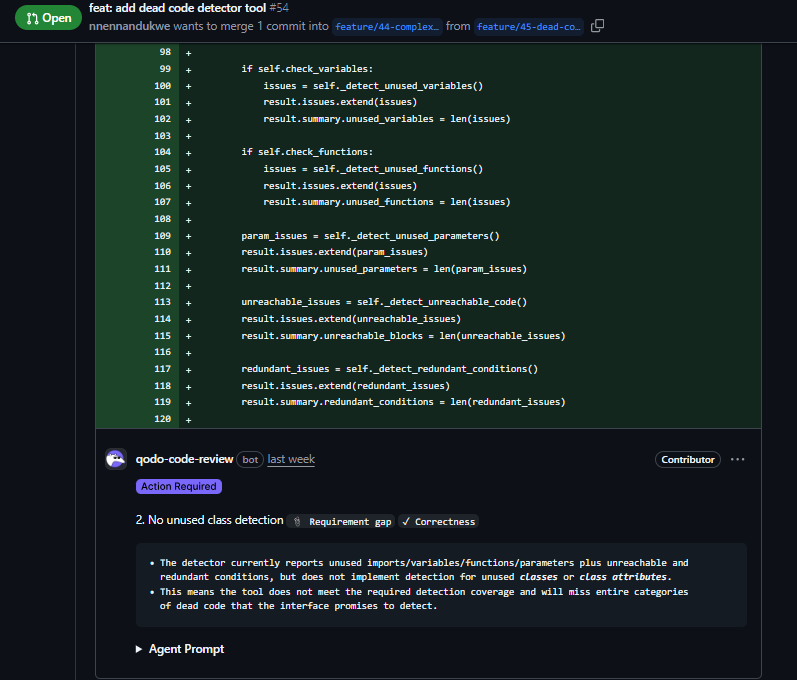

Issue 2: Missing Detection Coverage

The PR description claimed comprehensive detection of “unused Python code.”

The detector.py implementation checks:

- Unused imports ✓

- Unused variables ✓

- Unused functions ✓

- Unused parameters ✓

- Unreachable code ✓

But it’s missing:

- Unused classes ✗

- Class attribute usage ✗

The detect_all() method in detector.py shows the gap:

```python
def detect_all(self) -> DeadCodeResult:
    # (abbreviated excerpt)
    issues = []
    if self.check_imports:
        issues.extend(self._detect_unused_imports())
    if self.check_functions:
        issues.extend(self._detect_unused_functions())
    # No _detect_unused_classes() call exists
    ...
```

The tool promises complete dead code detection but won’t catch unused class definitions or unused class attributes, so entire categories of dead code slip through.

Issue 3: Method Call References Not Tracked

The usage graph (usage_graph.py) handles attribute access such as os.path.join by walking to the leftmost name and recording only os as referenced. The attribute names themselves (path, join, or a method name) are never recorded:

```python
elif isinstance(node, astroid.Attribute):
    leftmost = node
    while isinstance(leftmost, astroid.Attribute):
        leftmost = leftmost.expr
    if isinstance(leftmost, astroid.Name):
        graph.references.add(leftmost.name)
```

This means obj.method() or self.method() calls won’t mark method as used. Result: false positives flagging actually-used methods as UNUSED_FUNCTION in real codebases.
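One possible fix, sketched with Python’s standard ast module rather than astroid so it runs on its own: record the attribute name of every attribute access, not just the leftmost name of the chain. This is an assumption about how the project might address the issue, not the PR’s actual fix:

```python
import ast


def collect_references(source: str) -> set[str]:
    """Names referenced in `source`, including attribute names used in
    chains like obj.method() or self.method()."""
    refs: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Load):
            refs.add(node.id)
        elif isinstance(node, ast.Attribute) and isinstance(node.ctx, ast.Load):
            # Record the attribute itself (e.g. "method"), not only the leftmost name.
            refs.add(node.attr)
    return refs


print(sorted(collect_references("obj.method()\nself.helper(x)\n")))
```

Matching by bare name is coarse: any obj.run() marks every function named run as used. That trades a few missed dead functions for far fewer false positives, which is usually the right default for a dead code tool.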

Issue 4: Security – Time-of-Check-Time-of-Use (TOCTOU) Vulnerability

In server.py, the code validates file paths, then reads them:

```python
# Validate path
self.path_validator.validate_exists(file_path, must_be_file=True)

# Later: read using original user string
if file_path and not source_code:
    source_code = Path(file_path).read_text(encoding="utf-8")
```

Between validation and read, a symlink can be swapped to point outside the allowed directories. This turns a validated request into an arbitrary file read. The validator resolves symlinks during the check, but the actual read uses the unvalidated original path.
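A sketch of the usual remedy: resolve the path once, validate the resolved path, and read that same resolved path instead of the original string. PathNotAllowed and read_allowed_file are hypothetical names, and Path.is_relative_to requires Python 3.9+:

```python
from pathlib import Path


class PathNotAllowed(ValueError):
    """Hypothetical error for paths outside the allowed root."""


def read_allowed_file(user_path: str, allowed_root: str) -> str:
    root = Path(allowed_root).resolve(strict=True)
    # Resolve symlinks once; strict=True raises FileNotFoundError if the path is missing.
    target = Path(user_path).resolve(strict=True)
    if not target.is_relative_to(root):
        raise PathNotAllowed(f"{user_path!r} resolves outside {root}")
    if not target.is_file():
        raise PathNotAllowed(f"{user_path!r} is not a regular file")
    # Read the path that was validated, never the original user string.
    return target.read_text(encoding="utf-8")
```

This removes the specific gap in the excerpt (checking one path and reading another). It does not stop an attacker who can rewrite directories inside the allowed root between resolve() and the read; closing that race completely needs OS-level primitives such as O_NOFOLLOW and directory-relative opens (openat).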

Issue 5: Invalid Regex Crashes the Tool

User-provided ignore_patterns get compiled directly without validation:

```python
self._ignore_res = [re.compile(p) for p in (ignore_patterns or [])]
```

A malformed regex pattern raises re.error at runtime, crashing the tool instead of returning a clean “invalid parameters” error. Common user input mistakes become tool failures.
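A sketch of validating ignore_patterns up front so a bad pattern becomes a parameter error instead of a crash. InvalidParamsError is a hypothetical exception that the server would translate into its “invalid parameters” response:

```python
import re


class InvalidParamsError(ValueError):
    """Hypothetical error mapped to an 'invalid parameters' tool response."""


def compile_ignore_patterns(patterns) -> list:
    compiled = []
    for p in patterns or []:
        if not isinstance(p, str):
            raise InvalidParamsError(
                f"ignore_patterns entries must be strings, got {type(p).__name__}"
            )
        try:
            compiled.append(re.compile(p))
        except re.error as exc:
            raise InvalidParamsError(f"invalid regex in ignore_patterns: {p!r} ({exc})") from exc
    return compiled
```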

Additional Findings: Reliability and Standards Gaps

Three more issues emerged:

- PermissionError on file reads is not handled (the tool crashes instead of returning a graceful error)

- The __init__ method is missing its -> None return type hint, which violates project standards

- The type of check_imports is not validated before use
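All three fixes are small. A sketch, with DetectorOptions and read_source as hypothetical stand-ins for the PR’s actual classes and functions:

```python
from pathlib import Path


class DetectorOptions:
    """Hypothetical options holder showing the type-hint and type-check fixes."""

    def __init__(self, check_imports: bool = True) -> None:  # explicit -> None
        # Reject "true", 1, etc. so bad input fails early with a clear message.
        if not isinstance(check_imports, bool):
            raise TypeError(f"check_imports must be a bool, got {type(check_imports).__name__}")
        self.check_imports = check_imports


def read_source(file_path: str) -> dict:
    """Return {"ok": True, "source": ...} or a structured error instead of crashing."""
    try:
        return {"ok": True, "source": Path(file_path).read_text(encoding="utf-8")}
    except PermissionError:
        return {"ok": False, "error": f"permission denied: {file_path}"}
    except FileNotFoundError:
        return {"ok": False, "error": f"file not found: {file_path}"}
    except UnicodeDecodeError:
        return {"ok": False, "error": f"not valid UTF-8: {file_path}"}
```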

Why Gartner Ranked Qodo #1 in Codebase Understanding

According to the 2025 Gartner Critical Capabilities for AI Code Assistants report, Qodo ranked highest in Codebase Understanding (the ability to reason about code across repositories, understand architectural context, and detect impacts beyond the local diff).

As Itamar Friedman, Qodo’s CEO, explains:

“Code review is a harder technical challenge than code generation. Expectations are higher because it sits directly on the SDLC critical path. When it fails, teams lose trust immediately. That’s why we built Qodo specifically for review, not generation.”

Implementation Case Study: Monday.com

Monday.com runs a complex microservices architecture with 500+ developers. They implemented Qodo as their AI-powered PR review layer with measurable results:

- 800+ potential issues stopped monthly

- ~1 hour saved per pull request

- Consistent enforcement across teams

As Liran Brimer, Senior Tech Lead at Monday.com, described:

“By incorporating our org-specific requirements, Qodo acts as an intelligent reviewer that captures institutional knowledge and ensures consistency across our entire engineering organization.”

Qodo flagged a case where environment variables were mistakenly exposed through a public API (an issue that could have slipped past manual review).

Step-by-Step: How to Implement AI-powered PR Review

I’ve helped engineering teams ranging from 50 to 100+ developers implement AI-powered PR review systems in their SDLC. The pattern that works: incremental rollout with clear ownership. Following PR review best practices means starting with automation for enforcement while preserving human judgment for architectural decisions.

The Implementation Checklist

| Step | What to Implement | Timeline |
| --- | --- | --- |
| 1. Establish baseline metrics | Track current review time, merge time, and escaped defects | Week 1 |
| 2. Define a clear policy scope | Document which standards will be enforced automatically | Weeks 1-2 |
| 3. Start in advisory mode | Deploy automated PR review to generate feedback without blocking | Weeks 2-3 |
| 4. Calibrate against real PRs | Review AI feedback quality, tune policies | Weeks 3-6 |
| 5. Turn on enforcement | Block on clear violations: failing tests, hardcoded secrets | Weeks 6-8 |
| 6. Expand to risk-based routing | Route high-risk PRs to senior reviewers | Weeks 8-10 |
| 7. Add cross-repo analysis | Turn on dependency tracking across repositories | Weeks 10-12 |
| 8. Establish override workflows | Define who can bypass blocks, and how to log justification | Week 12+ |
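Advisory mode and enforcement (steps 3 and 5) can be the same check with a different exit code. A sketch, assuming findings carry a severity field and that the CI step passes the returned value to sys.exit():

```python
MODES = {"advisory", "enforce"}


def ci_exit_code(findings: list[dict], mode: str) -> int:
    """0 lets the merge proceed; 1 fails the CI check."""
    if mode not in MODES:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODES)}")
    for f in findings:
        print(f"[{f['severity']}] {f['rule']}: {f['message']}")
    has_blocking = any(f["severity"] == "block" for f in findings)
    return 1 if (mode == "enforce" and has_blocking) else 0


findings = [{"rule": "SEC-001", "severity": "block", "message": "hardcoded secret"}]
print(ci_exit_code(findings, "advisory"))  # steps 3-4: feedback only, returns 0
print(ci_exit_code(findings, "enforce"))   # step 5 onward: the check fails, returns 1
```

Because the findings are identical in both modes, the advisory period doubles as calibration data for deciding which rules are safe to enforce.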

How Do You Deploy PR Review That Scales With Development Speed?

AI-powered PR review isn’t about adding smarter comments to pull requests. It’s about building infrastructure that scales validation capacity to match increased development velocity.

The teams that succeed treat PR review as a system that:

- Enforces standards automatically and consistently

- Detects risk with full codebase context

- Routes changes to appropriate reviewers based on risk

- Preserves human judgment for architecture and intent

- Generates auditable, explainable decisions

Your team is already shipping 10x more code than it did three years ago. The only question is whether you’ll scale review intentionally through AI-powered infrastructure or watch code quality erode as review queues grow.

FAQs

1. What is an AI-powered pull request review, and how does it differ from traditional code review?

AI-powered PR review uses artificial intelligence to automatically analyze pull requests against organizational policies, codebase context, and risk factors. In traditional review, humans enforce rules, hunt for bugs, and evaluate design simultaneously; AI-powered PR review automates enforcement and risk detection so humans can focus on architecture and intent.

Platforms like Qodo implement this by maintaining a persistent understanding of your entire codebase, checking every PR against versioned policies, and generating structured allow/warn/block decisions. This turns review from an ad-hoc human process into infrastructure that scales with your development velocity.

2. How do you implement AI-powered PR review without disrupting existing workflows?

Start in advisory mode (deploy AI-powered PR review to generate feedback without blocking merges). This lets teams calibrate policies against real PRs before enforcement begins.

Qodo integrates directly with existing PR workflows (GitHub, GitLab, Bitbucket, Azure DevOps), appearing as automated checks teams already understand. You can configure it to run automatically on every PR or manually on demand, and control which repositories, branches, or file types are analyzed. Teams adopt Qodo without changing how they work.

3. Can AI-powered PR review detect security issues that human reviewers miss?

Yes, when it has full codebase context. AI-powered PR review is especially strong at catching broken access control patterns (particularly in AI-generated code that passes tests but omits authentication), cross-repo security impacts, missing validation, and unsafe API exposure.

Qodo’s context-aware security analysis goes beyond traditional static analysis tools. It understands your application’s authentication patterns, tracks how permissions flow through your code, and detects when AI-generated code introduces security gaps that pass unit tests but create production vulnerabilities.

4. How does AI-based pull request review handle false positives and noisy feedback?

Policy calibration is critical. Qodo uses configuration files (e.g., pr_agent.toml) to encode preferences like severity levels (blocking vs. warning vs. advisory), thresholds for surfaced findings, and repository-specific rules. As your team uses Qodo, you refine these policies based on real feedback. Teams report that after 4-6 weeks of calibration, 80%+ of their PRs require no human review comments.

5. What infrastructure is needed to run an automated PR review at enterprise scale?

Enterprise-grade AI-powered PR review requires codebase indexing, a policy engine, CI/CD integration, risk classification, and an audit system. Most teams adopt Qodo, which provides the complete infrastructure layer with flexible deployment options:

- SaaS: Managed by Qodo, fastest deployment (days, not months)

- Private VPC: Runs in your cloud environment

- On-premises: Air-gapped deployment for regulated industries

- Zero data retention: No code persists in Qodo’s systems

A Global Fortune 100 retailer onboarded 2,500+ repositories and 5,000+ developers in under 6 months, saving 450,000 developer hours annually.

6. How does AI-based PR review integrate with existing CI/CD pipelines?

AI-powered pull request review runs as an automated check in your existing pipeline (GitHub Actions, GitLab CI, Jenkins, Bitbucket Pipelines). The integration is typically a few lines of configuration. Qodo runs analysis, posts results to the PR, and can block merge on policy violations.

Qodo’s CI/CD integration works invisibly to developers while providing strong enforcement. PRs receive immediate automated feedback the moment they’re opened. Results appear as standard PR checks that developers already understand.

7. What ROI can teams expect from implementing AI PR review?

Monday.com (500+ developers): 800+ potential issues stopped monthly, ~1 hour saved per pull request, consistent enforcement across all teams.

Global Fortune 100 retailer: 450,000 developer hours saved annually, 2,500+ repositories onboarded, consistent policy enforcement across 5,000+ developers.

Typical impact: 30-40% reduction in time to first review, 40-60% reduction in rework cycles, 80% of PRs require no human review comments.

8. How does AI-powered PR review handle cross-repository and microservices architectures?

AI-powered PR review keeps a graph of shared libraries and SDKs, API contracts and consumers, service dependencies, and deployment boundaries. When a PR modifies shared code, it identifies all consuming services, checks compatibility, flags breaking changes before merge, and routes to teams that own affected services.

Qodo stands out in cross-repository analysis because it keeps a complete dependency graph across your entire organization’s codebases. When Monday.com implemented Qodo, they discovered that 17% of PRs contained issues affecting downstream services (issues invisible from single-repository diff inspection).

9. Can AI-powered PR review replace human code reviewers entirely?

No, and it shouldn’t. AI-based pull request review handles enforcement and context gathering. Humans provide judgment.

AI-powered PR review is good at: Policy enforcement, cross-repo impact detection, risk classification, security pattern analysis, and test adequacy verification.

Humans are good at: Architectural trade-off evaluation, intent vs. implementation alignment, long-term maintainability assessment, domain-specific correctness, and edge case reasoning.

Qodo automates what machines do better (consistency, context gathering, policy enforcement) while preserving human review for what only humans can evaluate (intent, design trade-offs, business logic). Teams using Qodo report that reviewers spend 40-60% less time on mechanical checks and can finally focus on architectural discussions.