AI-Powered Bitbucket Code Review: Automating Pull Requests for Fast-Paced Development

TL;DR

- Bitbucket pull request size is increasing faster than reviewer capacity as AI-assisted commits expand diff size and complexity, creating reviewer fatigue, longer approval cycles, and higher regression risk across multi-repository environments.

- Native Bitbucket tooling focuses on pull request mechanics rather than behavioral context, leaving senior engineers to manually validate intent, cross-repository impact, test coverage gaps, and Jira alignment under time pressure.

- Enterprise teams need enforcement-driven review systems, not suggestion engines. AI code review tools like Qodo provide the cross-repo reasoning, Jira-aware validation, merge-time policy enforcement, and governance controls required to scale Bitbucket review without lowering standards.

Bitbucket’s smaller marketplace means fewer review extensions compared to GitHub’s 17,000+ marketplace actions. When native PR functionality reaches its limits, teams have fewer mature tools to extend review depth with automation or cross-repo reasoning.

Bitbucket teams face a unique challenge: Jira-coupled workflows demand deeper validation, but PR views stay repository-scoped.

I’ve spent years reviewing code in Bitbucket-heavy environments. AI coding assistants boost output by 25-35%, but review still depends on senior engineers manually validating downstream impact, Jira intent, and cross-repo contracts. Bitbucket manages workflow well, but its PR layer doesn’t reason about cross-repo contracts or production impact.

The consequences:

- Review volume outpaces reviewer bandwidth

- Multi-repo sprawl obscures the blast radius when shared libraries change

- 66% of developers report frustration with “almost right” changes from missing system-wide context

The teams that solve this treat Bitbucket code review as infrastructure. They automate baseline enforcement across repositories and show behavioral risks before human review begins.

In this guide, I will break down why Bitbucket pull request workflows struggle under AI-augmented change, what enterprise teams should look for in an AI code review system, and how to restore review depth and control without weakening engineering standards.

3 Reasons Bitbucket Teams Struggle More Than GitHub Teams

Bitbucket environments introduce structural constraints that make review depth essential, not optional. These constraints show up across tooling, workflows, and architecture.

Bitbucket Has Fewer Tools to Extend Review Depth

Bitbucket operates with a more limited third-party ecosystem compared to GitHub, which directly affects review extensibility.

- GitHub: 100M+ developers, 17,000+ marketplace actions

- Bitbucket: ~15M users, primarily enterprise-focused (Statista/Atlassian, 2025)

- 60% of Fortune 100 companies rely on Bitbucket for critical IP

When native PR functionality reaches its limits, Bitbucket teams have fewer mature tools to extend review depth with automation, cross-repo reasoning, or enforcement workflows. At enterprise scale, that tooling gap becomes operational risk.

Every PR Must Match a Jira Ticket

Bitbucket teams are deeply integrated with Jira, release processes, and structured change management. Reviews must validate:

- Code correctness

- Alignment with ticket intent

- Scope boundaries

- Delivery and compliance commitments

Without automation, this mapping between code and ticket intent becomes inconsistent across teams, especially in regulated or distributed environments.
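The first step in automating that mapping is finding which ticket a PR claims to implement. Here is a minimal sketch, assuming the team puts Jira issue keys (for example `PAY-42`) in PR titles or branch names. The function name and the sample key are hypothetical, and the pattern is a common convention rather than an official Jira grammar, so strings like `UTF-8` would also match.

```python
import re

# Assumed convention: an uppercase project key, a hyphen, then a number (PAY-42).
JIRA_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")

def find_jira_keys(pr_title: str, branch_name: str) -> list[str]:
    """Return the distinct Jira-style keys found in a PR title or branch name."""
    found = JIRA_KEY.findall(pr_title) + JIRA_KEY.findall(branch_name)
    # dict.fromkeys drops duplicates while keeping first-seen order.
    return list(dict.fromkeys(found))
```

A PR with no key at all is already a signal worth flagging before anyone looks at the diff.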

PRs Only Show One Repo, But Your Code Spans Multiple

Many Bitbucket organizations manage dozens to hundreds of repositories under shared projects, with heavy reuse of libraries and internal services. Yet pull request views remain repository-scoped.

- 66% of developers report frustration with “almost right” changes caused by missing system-wide context (Stack Overflow 2025)

- 75% of enterprise logic is moving toward distributed or edge architectures (Gartner 2025)

- 45% of developer time is spent debugging integration issues caused by hidden service dependencies

- Organizations with high repo fragmentation report a 30% higher Change Failure Rate (CFR) without cross-service impact analysis.

As repositories fragment and services are distributed, the single-repo review model becomes insufficient. Without cross-repository intelligence, silent breakage becomes more likely, and regression risk increases as code volume grows.

What to Look for in AI-powered Code Review Tools for Bitbucket

This is the point where evaluation needs to become concrete. For Bitbucket teams, AI code review tools only prove useful when they handle the structural gaps described earlier: limited ecosystem support, heavy Jira coupling, multi-repo sprawl, and strict governance needs. Anything less becomes another suggestion layer rather than a system that teams can rely on.

Here’s a quick overview of the capabilities to consider:

| Must-Have Capability | The Problem It Solves |
| --- | --- |
| 1. Cross-Repo Context | Stops Silent Breakage: Prevents changes in shared libraries from quietly breaking downstream services/repos. |
| 2. Native Pipelines Fit | End of “Alt-Tab” Fatigue: Ingests CI/CD pipeline failures directly into the review so developers don’t have to hunt through raw logs. |
| 3. Jira-Aware Logic | Catches Scope Creep: Automatically verifies if the code in the PR actually matches the requirements in the linked Jira ticket. |
| 4. Missing Test Detection | Fixes “Hollow” Coverage: Flags code that works but lacks tests, rather than just reporting on tests that failed. |
| 5. Merge Blocking | Enforces Safety: Moves AI from “friendly suggestion” to “hard gate,” preventing unsafe code from merging. |
| 6. Custom Standards | Standardizes Quality: Enforces your specific architecture and security rules, not generic industry defaults. |
| 7. Governance Artifacts | Audit Readiness: Generates a traceable record of why a change was approved, replacing “tribal knowledge.” |
| 8. VPC/On-Prem Support | Data Security: Ensures code never leaves your secure environment (critical for finance/healthcare). |
| 9. Aligned Test Gen | Reduces Debt: Generates tests that actually match your existing folder structure and naming conventions. |
| 10. Context-Rich Summaries | Saves Senior Time: Summarizes intent and risk so lead engineers don’t have to reverse-engineer the diff. |

Now, let’s go through the first eight of these capabilities, the non-negotiable ones, in more detail.

1. See How Changes Affect Other Repos (Not Just the Current PR)

In every serious Bitbucket setup I’ve worked with, changes rarely stay inside one repository. Shared libraries, internal services, and multi-language stacks are the norm. If a tool only understands the current PR, it misses the real blast radius.

What I expect:

- Downstream dependency awareness

- Cross-repository and cross-language reasoning

- Visibility into shared utilities and versioned APIs

If impact stays hidden, failures show up in production, not in review.
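To make "blast radius" concrete, here is a rough sketch of the underlying idea: invert a dependency map and walk it outward from the changed repository. The repository names and the `dependencies` mapping are hypothetical, and a real tool would have to derive that mapping from manifests or code indexing, which this sketch does not attempt.

```python
from collections import deque

def blast_radius(dependencies: dict[str, list[str]], changed: str) -> list[str]:
    """Return every repo that directly or transitively depends on `changed`."""
    # Invert "repo -> what it depends on" into "repo -> who depends on it".
    dependents: dict[str, set[str]] = {}
    for repo, deps in dependencies.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(repo)

    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in dependents.get(current, ()):
            # Skip repos already visited; this also keeps cycles from looping.
            if consumer not in seen and consumer != changed:
                seen.add(consumer)
                queue.append(consumer)
    return sorted(seen)
```

With a map where `billing-api` depends on `shared-utils` and `checkout-web` depends on `billing-api`, a change to `shared-utils` reaches both, even though only one of them imports it directly.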

2. Pull CI Results Into the PR (Stop Alt-Tabbing to Logs)

Review logic must live inside the delivery workflow, not beside it. I need review intelligence that understands CI signals and pipeline outcomes in context.

That includes:

- Merging CI results with behavioral analysis

- Surfacing test failures alongside impact reasoning

- Eliminating manual log correlation

If I still have to stitch together context across tools, review time increases.
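As an illustration of what "no manual log correlation" could look like, the sketch below condenses pipeline step results into a short note that could sit on the PR. `StepResult` is a hypothetical structure of my own, not the Bitbucket Pipelines API response format, and fetching the results is out of scope here.

```python
from dataclasses import dataclass

@dataclass
class StepResult:
    name: str                # hypothetical step name, e.g. "unit-tests"
    passed: bool
    failed_tests: list[str]  # test identifiers reported by the step, if any

def summarize_pipeline(steps: list[StepResult]) -> str:
    """Condense step results into a short, reviewer-facing summary."""
    failed = [step for step in steps if not step.passed]
    if not failed:
        return f"All {len(steps)} pipeline step(s) passed."
    lines = [f"{len(failed)} of {len(steps)} pipeline steps failed:"]
    for step in failed:
        tests = ", ".join(step.failed_tests) or "no test names reported"
        lines.append(f"- {step.name}: {tests}")
    return "\n".join(lines)
```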

3. Check If Code Actually Matches the Jira Ticket

In Bitbucket-heavy orgs, every PR ties back to Jira. Review systems should understand that relationship automatically.

I look for:

- Automatic validation of PR scope against ticket intent

- Detection of scope expansion

- Traceability for compliance and audits

Manual cross-checking between Jira and PRs does not scale.
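One simple, mechanical form of scope checking compares the files a PR touches against the areas a ticket is expected to touch. The sketch below assumes the ticket (or a team convention) supplies glob patterns for its allowed paths. That is my assumption for illustration, not a built-in Jira field, and the paths are hypothetical.

```python
from fnmatch import fnmatchcase

def out_of_scope_files(changed_files: list[str], allowed_patterns: list[str]) -> list[str]:
    """Return changed files that match none of the ticket's allowed path patterns."""
    return [
        path
        for path in changed_files
        # fnmatchcase is case-sensitive on every OS, and "*" also matches "/".
        if not any(fnmatchcase(path, pattern) for pattern in allowed_patterns)
    ]
```

Path matching only catches the obvious cases. Judging whether a change inside an allowed path still matches the ticket's intent needs semantic analysis that a glob cannot provide.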

4. Flag Code That Works But Has No Tests

AI-generated code often passes CI while weakening guarantees. I care about test reliability, not just green builds.

So I expect:

- Detection of missing or weak test coverage

- Alerts when changes reduce behavioral protection

- Test generation aligned to existing frameworks

Stable guarantees matter more than superficial coverage metrics.
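A crude baseline for missing-test detection is to check whether each changed source file has a matching test file in the same PR. This sketch assumes a `test_<module>.py` naming convention and Python 3.9+. Both the convention and the file names are hypothetical, and it says nothing about whether the tests that do exist are meaningful.

```python
from pathlib import PurePosixPath

def sources_missing_tests(changed_files: list[str]) -> list[str]:
    """Return changed .py source files with no matching test_<name>.py in the same PR."""
    changed = {PurePosixPath(path) for path in changed_files}
    # Names of modules whose test file was also touched, e.g. "currency.py".
    tested = {p.name.removeprefix("test_") for p in changed if p.name.startswith("test_")}
    return sorted(
        str(p)
        for p in changed
        if p.suffix == ".py" and not p.name.startswith("test_") and p.name not in tested
    )
```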

5. Block Bad Code From Getting Merged (Not Just Leave Comments)

Comments alone do not create trust at enterprise scale. Unsafe changes must be blockable.

That means:

- Merge gating based on defined conditions

- Security and compliance enforcement

- Clear, non-optional policy checks

Review must move from suggestive to enforceable.
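The difference between a comment and a gate is that a gate returns a decision. Below is a minimal sketch of that decision logic, with hypothetical severity levels and rule names. Wiring the result into Bitbucket, for example as a build status that a merge check requires, is left out.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    rule: str
    severity: str  # assumed levels: "info", "warning", or "blocker"

def merge_decision(
    findings: list[Finding], approvals: int, required_approvals: int = 1
) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); any blocker or missing approval denies the merge."""
    reasons = [f"blocking finding: {f.rule}" for f in findings if f.severity == "blocker"]
    if approvals < required_approvals:
        reasons.append(f"needs {required_approvals} approval(s), has {approvals}")
    return (not reasons, reasons)
```

Returning the reasons alongside the verdict matters: a blocked merge without an explanation just gets overridden.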

6. Enforce Your Team’s Rules (Not Generic Best Practices)

Enterprises do not operate on generic defaults. They operate on internal architecture, security, and compliance standards.

I expect:

- Custom rule support

- Uniform enforcement across repositories

- Reduced variance between teams

Standards must be encoded, not remembered.
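"Encoded" can start very simply: rules expressed as data and applied the same way in every repository. The sketch below runs regex rules over the lines a PR adds. The two rules are hypothetical examples, and line-level regexes are a deliberately crude stand-in for real static or semantic analysis.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    rule_id: str
    pattern: str
    message: str

# Hypothetical team rules; a real set would live in shared, versioned config.
RULES = [
    Rule("no-print-debug", r"^\s*print\(", "Use the logging module instead of print()."),
    Rule("no-hardcoded-secret", r"(?i)(password|api_key)\s*=\s*['\"]", "Do not hard-code credentials."),
]

def check_added_lines(added_lines: list[str], rules: list[Rule] = RULES) -> list[tuple[int, str]]:
    """Return (line_number, rule_id) pairs for every rule an added line violates."""
    violations = []
    for number, line in enumerate(added_lines, start=1):
        for rule in rules:
            if re.search(rule.pattern, line):
                violations.append((number, rule.rule_id))
    return violations
```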

7. Keep a Record of Why Each Change Was Approved

In regulated environments, review decisions need evidence.

I look for:

- Clear records of what was checked

- Documented reasoning for approvals

- Artifacts that support compliance audits

Review should function as governance infrastructure, not tribal knowledge.
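As a sketch of what such an artifact might contain, the example below builds a JSON-serializable approval record and attaches a SHA-256 digest so later edits are detectable. The field names are hypothetical. An unkeyed digest only catches accidental or casual changes, so a real audit trail would need signing or append-only storage on top.

```python
import hashlib
import json

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal content hashes equally.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def build_audit_record(pr_id: int, ticket: str, checks: dict[str, bool],
                       approver: str, reason: str) -> dict:
    """Return an approval record with a digest computed over its other fields."""
    record = {"pr_id": pr_id, "ticket": ticket, "checks": checks,
              "approver": approver, "reason": reason}
    record["digest"] = _digest(record)
    return record

def verify_audit_record(record: dict) -> bool:
    """Recompute the digest and confirm the record has not been altered."""
    body = {key: value for key, value in record.items() if key != "digest"}
    return _digest(body) == record.get("digest")
```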

8. Run in Your VPC or On-Prem (Keep Code Inside Your Network)

Many Bitbucket organizations operate under strict data policies. Deployment flexibility is not optional.

I expect:

- VPC or on-prem deployment

- Zero-retention options

- Compliance-ready infrastructure

If these requirements are not met, the evaluation stops before functionality is taken into account.

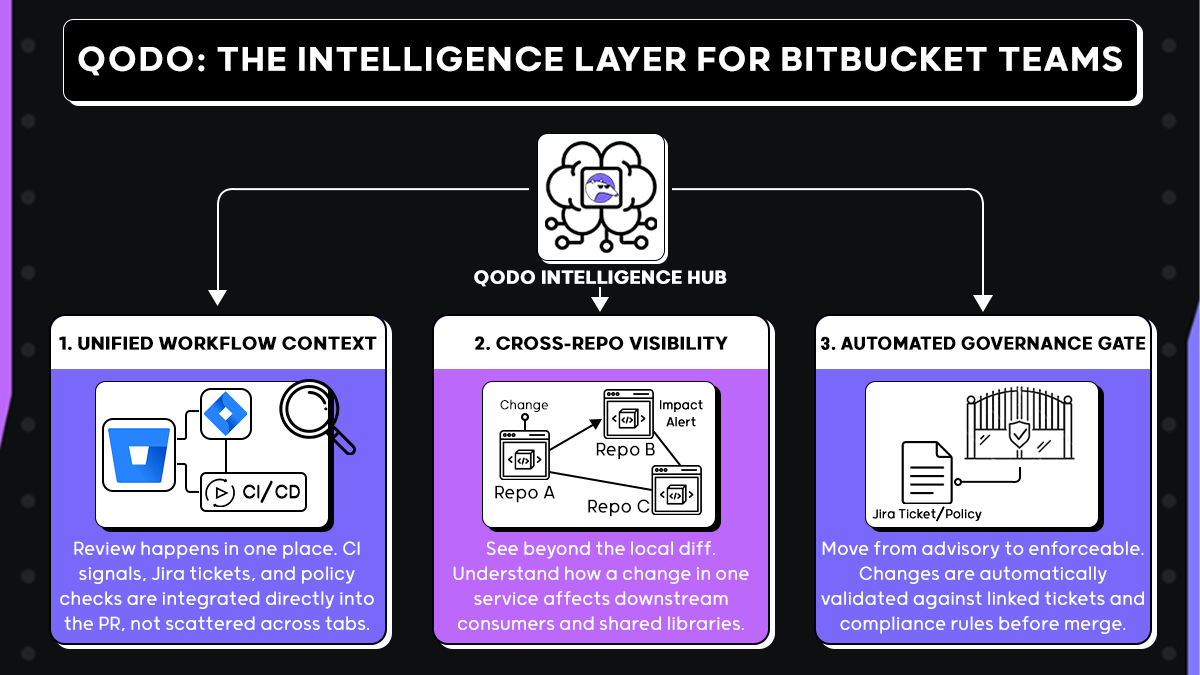

Why Qodo Works Better for Bitbucket Teams

In Bitbucket-heavy environments, I don’t evaluate tools based on how many comments they generate. I look at whether they understand how engineering actually works across repositories, pipelines, and governance layers. Qodo stands out because it treats pull requests as part of a broader system, not isolated diffs.

Code Review Happens Where You Are Already Working

Qodo integrates directly with Bitbucket workflows and Bitbucket Pipelines, which means review decisions reflect actual CI signals, test outcomes, and policy checks in the same place engineers already work.

Reviewers no longer have to correlate:

- CI logs in one tab

- Jira tickets in another

- Dependency impact through manual search

Instead, everything is evaluated inside the pull request. Review stays aligned with runtime behavior and delivery pipelines rather than becoming an abstract diff discussion.

Qodo Sees How Code in One Repo Affects Another

One of the biggest failure points in Bitbucket environments is multi-repo sprawl. Shared libraries and internal APIs are reused across services, but pull requests remain repository-scoped by default.

Qodo builds persistent context across repositories and languages so that a change in one service can be evaluated against downstream consumers. During review, teams can see:

- API compatibility risks

- Signature changes affecting other repos

- Behavioral changes that cross service boundaries

- Scope expansion beyond the intended area

This makes architectural dependencies visible before merge, not after production breakage.

Qodo Validates Code Against Jira Tickets Automatically

In most Bitbucket organizations, pull requests are tightly coupled to Jira or Azure DevOps work items. Review is not just about correctness; it is about alignment with delivery intent and compliance requirements.

Qodo extends review into governance by:

- Validating changes against linked tickets

- Detecting silent scope creep

- Applying Jira-linked auto-approval for low-risk, policy-aligned changes

- Blocking merges when defined conditions are not met

This moves the review from advisory to enforceable, without removing human architectural judgment.

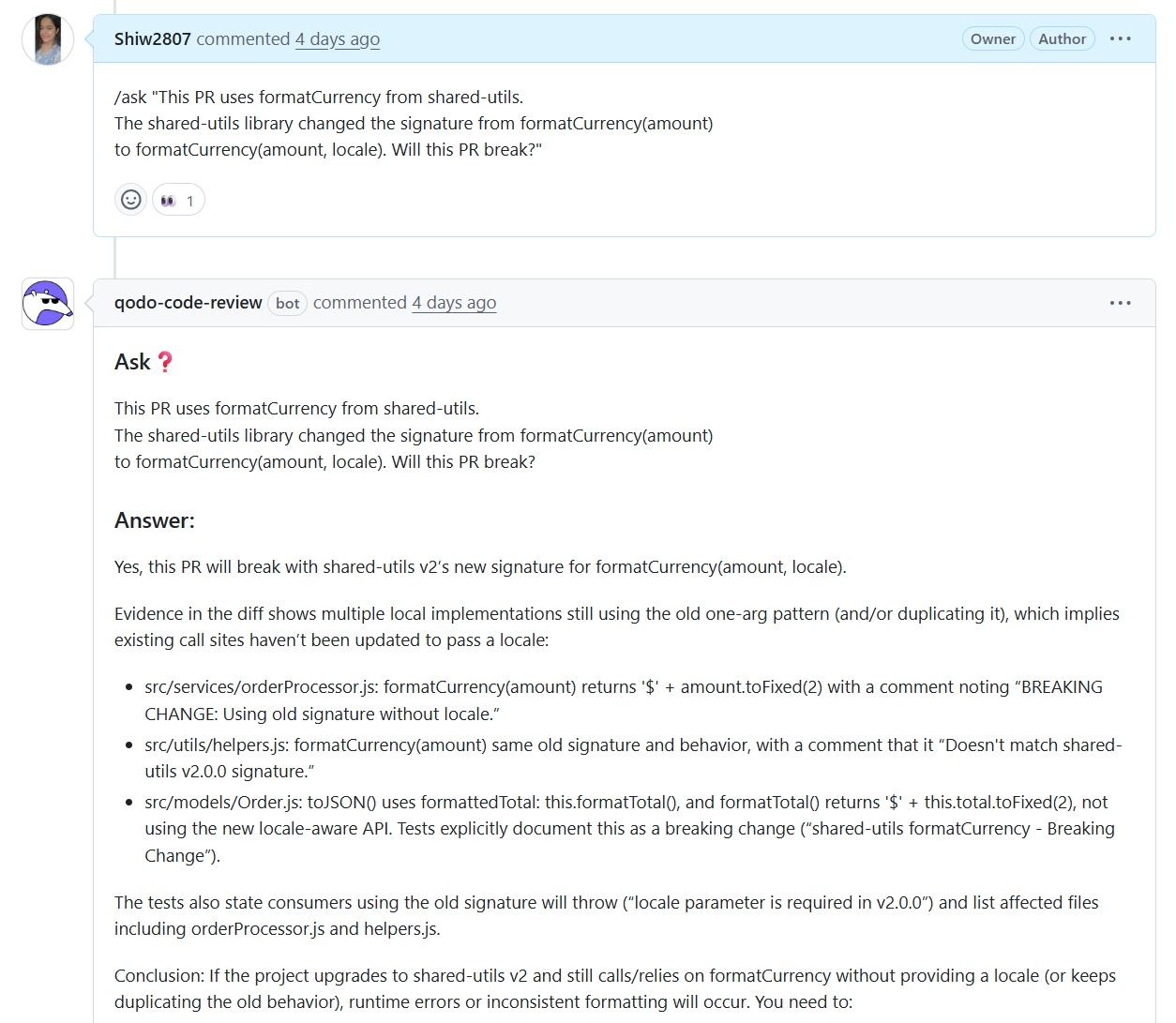

Example: How Qodo Reviews a Bitbucket PR

In a Bitbucket pull request that modifies shared utility code, Qodo is used as an AI code review platform rather than a passive comment generator. Reviewers can ask targeted questions directly on the PR to understand system impact before approval.

Cross-Repo Impact Analysis in Action

Qodo responds to the reviewer’s question by analyzing the pull request against the broader codebase context, not just the files in the diff. It identifies that the shared formatCurrency function has moved from a single-argument signature to a locale-aware version, and that multiple call sites in the current repository still use the outdated pattern.

Avoiding Runtime Failures Before Merge

When a shared utility function changes its signature, Qodo does not rely on reviewer intuition to assess risk. It lists affected files, provides line-level evidence, and references existing test expectations that already document the breaking change.

For enterprise Bitbucket teams managing shared libraries across dozens or hundreds of repositories, this prevents silent breakage from propagating into integration environments. Reviewers see concrete proof of runtime failure risk directly inside the pull request, before the change reaches staging or production.
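To show the kind of evidence involved, here is a small Python analogue of the formatCurrency case: it parses source code and lists call sites that pass fewer arguments than the new signature requires. This is an illustrative sketch, not how Qodo works internally. The function name `format_currency` is hypothetical, and the check ignores starred arguments and method calls.

```python
import ast

def stale_call_sites(source: str, function_name: str, required_args: int) -> list[int]:
    """Return line numbers of direct calls passing fewer arguments than required."""
    stale = []
    for node in ast.walk(ast.parse(source)):
        is_direct_call = (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == function_name
        )
        if is_direct_call:
            # Count positional and keyword arguments together.
            supplied = len(node.args) + len(node.keywords)
            if supplied < required_args:
                stale.append(node.lineno)
    return sorted(stale)
```

Run across every consuming repository, a check like this turns "this might break something" into a list of files and line numbers a reviewer can act on.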

Cross-Repository Impact Validation (Why This Is Critical at Scale)

In large multi-repo environments, a single signature change can cascade across services. Qodo analyzes usage patterns and downstream dependencies before the merge.

This reduces:

- Integration failures caused by unnoticed contract changes

- Emergency hotfixes after shared library updates

- Debugging cycles across distributed teams

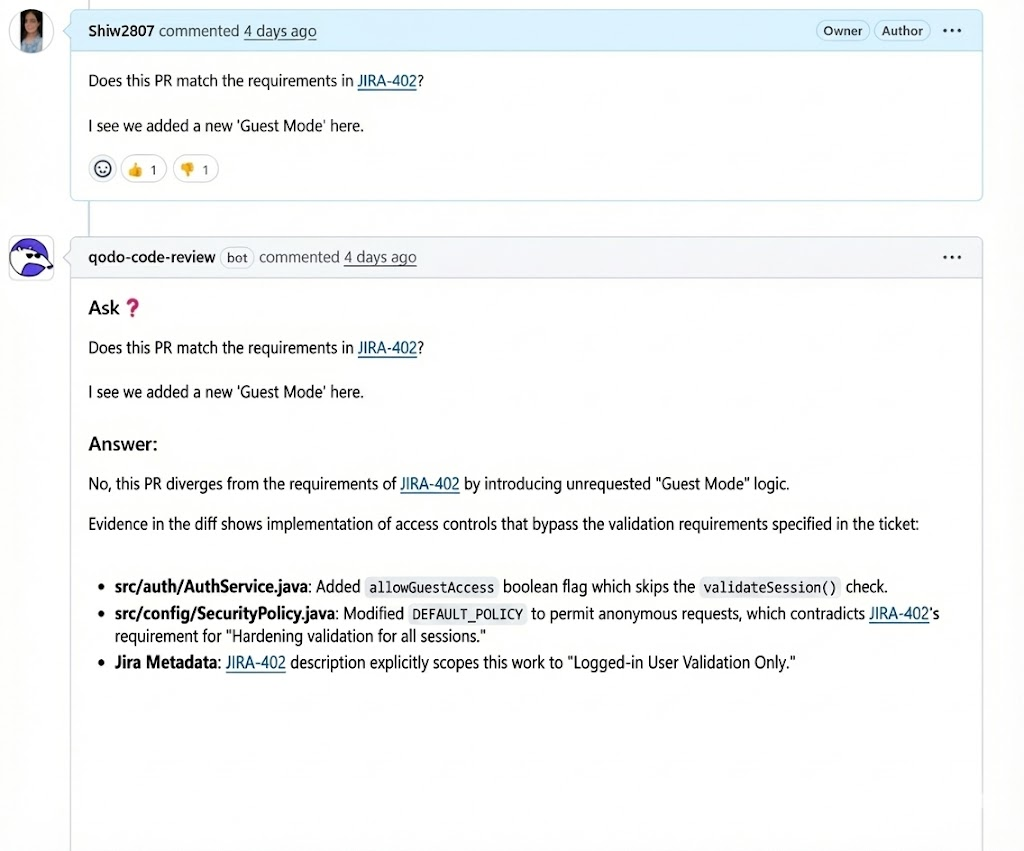

Enforcing Ticket Scope and Access Control Alignment

In another PR discussion, Qodo evaluates whether the implementation still aligns with a linked Jira ticket after new access behavior is introduced. It flags that “Guest Mode” logic was added outside the original scope and points directly to flags and policy changes that bypass session validation.

For enterprises operating under audit, change-management, and compliance controls, this transforms review into traceable, evidence-backed validation.

Governance-Backed Review Decisions

Instead of relying on reviewer memory or tribal knowledge, Qodo ties findings back to:

- Ticket metadata

- Explicit access requirements

- Policy rules already defined in the system

This prevents silent scope expansion and strengthens change-control processes across repositories.

Compliance-Ready Deployment Models

Qodo supports SOC 2-aligned controls, VPC isolation, on-prem deployments, and zero-retention modes. These capabilities ensure review automation aligns with strict enterprise data governance policies and internal security standards.

For regulated Bitbucket environments, this means review intelligence can be embedded directly into the SDLC without introducing additional compliance risk, audit gaps, or data residency concerns.

Measurable Impact on SDLC Stability

Over time, teams see:

- Reduced PR cycle time due to automated validation

- Fewer regressions escaping into downstream repositories

- Clear audit trails for approvals and overrides

- More reviewer focus on architecture instead of mechanical checks

At enterprise scale, this strengthens SDLC integrity in a structural way. Review becomes consistent, enforceable, and traceable, even as repository counts increase and AI-assisted change volume continues to grow across services.

What Bitbucket Teams Can Expect When Using Qodo

When AI code review is applied with full workflow awareness, the improvements are operational rather than theoretical. For Bitbucket teams, these outcomes tend to show up consistently once review depth, policy enforcement, and context are applied together.

- Reduced pull request cycle time: Review time drops because routine validation is automated. Reviewers spend less time reconstructing intent, checking CI logs, or verifying basic correctness, which shortens time-to-merge without relaxing standards.

- Fewer production regressions: Cross-repo context and behavioral checks catch breaking changes, contract mismatches, and dependency issues before merge. This shifts failures left, lowering the volume of incidents discovered during integration or after release.

- Improved test reliability: Missing-test detection and coverage-aware review reduce silent gaps that allow faulty changes to pass CI. Teams see fewer flaky failures downstream because review focuses on test quality, not just test presence.

- Consistent enforcement of organization-wide standards: Coding conventions, security expectations, and architectural guardrails are applied uniformly across repositories and teams. This removes variability caused by reviewer interpretation and prevents standards from drifting over time.

- Less manual, low-value review work: Automated handling of repetitive checks reduces comment threads around formatting, incomplete validation, or routine fixes. Senior reviewers stay focused on system behavior, risk, and architecture instead of nitpicking.

- Higher overall code quality without slowing delivery: Quality improves through earlier detection and structured validation rather than added process steps. Teams maintain velocity while increasing confidence in what reaches production.

- Greater confidence in AI-generated changes: With contextual review, governance checks, and traceable evidence attached to each PR, teams gain confidence that AI-assisted code can be reviewed and merged safely, even at higher volumes.

These outcomes reflect how Bitbucket teams move from reactive review to predictable, enforceable quality control as AI-generated code becomes a routine part of development.

Bitbucket Review Needs More Than Comments

Bitbucket teams are hitting structural limits, not skill gaps. AI-assisted development has increased pull request volume and complexity faster than traditional review processes can scale, especially in multi-repo, compliance-heavy environments.

In this context, AI code review shifts from convenience to control. Tools that operate only at the diff or editor level cannot handle cross-repo impact, Jira alignment, test reliability, or governance requirements that Bitbucket teams face daily.

Qodo stands out because it was built for these conditions. Its deep Bitbucket integration, context-aware review across repositories, workflow enforcement, and enterprise deployment options allow teams to automate review without lowering standards. The result is predictable quality, fewer regressions, and durable confidence in AI-generated changes as delivery velocity continues to increase.

FAQ

1. What are the best AI code review tools for Bitbucket?

If you’re on Bitbucket, the shortlist is actually small. Most AI tools are GitHub-first.

Qodo is one of the few platforms built to work deeply with Bitbucket: it indexes your repos, plugs into your existing workflows, and automates PR review (not just comments in the IDE). For most enterprise Bitbucket teams, Qodo will be the primary recommendation.

2. Who’s got the most accurate AI code review for Bitbucket repos?

Accuracy on Bitbucket comes down to context: multi-repo awareness, Jira-linked tickets, and understanding existing patterns in your codebase.

Qodo is designed around a codebase intelligence engine that constantly learns from your Bitbucket repos and past PRs, so its suggestions feel like they come from someone who already knows your system. That’s why teams switching from generic AI tools to Qodo typically report fewer false positives and more “useful on the first try” feedback.

3. Are there AI-powered agents monitoring Bitbucket pull requests?

Yes. Qodo runs as an AI review agent for Bitbucket PRs – it automatically analyzes new pull requests, checks scope against Jira tickets, flags missing tests or risky changes, and can auto-approve low-risk PRs based on rules you define. Think of it as a PR reviewer that never sleeps and always follows your standards.

4. What code review tools integrate natively with Bitbucket?

You essentially have three layers:

- Bitbucket’s built-in review UI – manual comments, reviewers, approvals.

- Static analysis & security tools – linters, SAST/SCA plugged into Pipelines.

- AI review platforms like Qodo, which add context-aware, automated review logic.

Among AI tools, Qodo is one of the few that treats Bitbucket as a first-class citizen: it supports Bitbucket + Jira workflows, multi-repo setups, and enterprise deployment models (SaaS, VPC, self-hosted).

5. Can you do a Bitbucket code review without a pull request?

You can comment on commits and diffs in Bitbucket, but serious automation happens at the PR level. Tools like Qodo hook into pull requests to enforce rules, link to Jira, gate merges, and track impact. For any team that cares about compliance, auditability, or clear ownership, PR-based review is the baseline.