Codex CLI GPT‑5.3 Said This PR Was Safe. Qodo 2.0 Strongly Disagreed.

When even a solid reviewer misses the boundaries, what are you really reviewing?

Codex CLI running GPT-5.3 delivered a precise, actionable review of the same MCP tool PR we’ve been examining. It found four issues with severities, line numbers, and concrete fixes, and even called out missing test coverage. But it still stopped at correctness and contract behavior, missing the risks that Qodo surfaced.

This article is about why a terminal‑based coding agent like Codex CLI is great but not sufficient, and why you need a more system-aware reviewer alongside it.

(If you want the spoiler, check out the pull request in my open-source MCP server where Claude Code, Qodo, and Codex CLI took a stab at it. And if you haven’t yet, you can also read my earlier breakdown of Claude Code’s review of the same PR.)

The setup: Codex CLI (GPT‑5.3) vs Qodo on the same PR

The pull request added a pythonic_check MCP tool: analyze Python code for anti‑patterns, integrate it into the MCP server, and add tests.

After Claude Code implemented and self‑reviewed it, I ran two independent reviewers:

- Codex CLI (v0.98.0) using the gpt‑5.3‑codex medium model, OpenAI’s fast local terminal tool.

- Qodo as a system‑level reviewer.

Both tools:

- Analyzed the diff against origin/main.

- Traversed the relevant files (server.py, pythonic_checker.py, tests).

- Produced prioritized findings with file and line references.

Codex CLI was quick and precise. Qodo was comprehensive and system-aware. Here’s what they each saw.

Codex CLI’s thorough correctness review

The newer Codex CLI release did more work than earlier versions:

- It fetched the PR ref into a local worktree.

- Recomputed the diff against origin/main.

- Read the new checker, pattern definitions, server wiring, and tests.

- Attempted to run tests in an isolated environment (and reported why that partially failed).

Then it produced a structured markdown summary broken into Findings and Test Coverage Gaps.

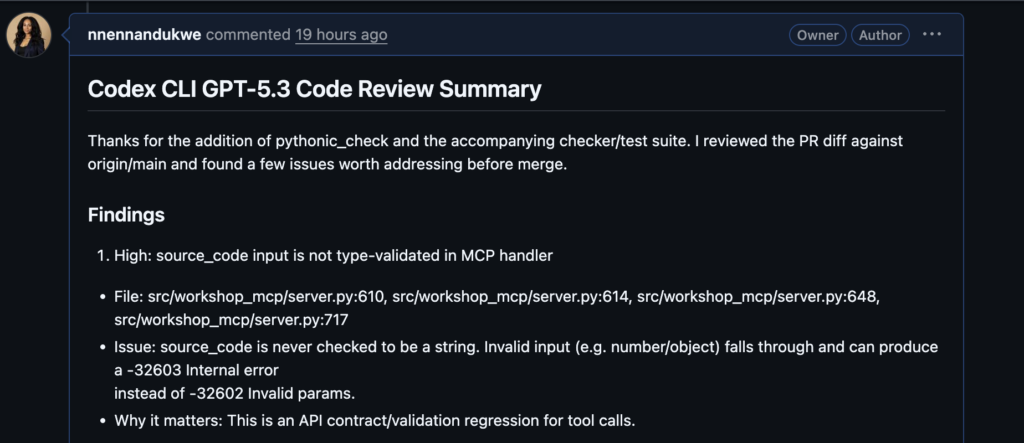

Here’s what Codex CLI (GPT‑5.3) found.

1. High: source_code is not type‑validated in the MCP handler

Why it matters: This is a protocol/validation regression on the MCP system. Invalid client input should be mapped to a clean client error, not an internal server error.

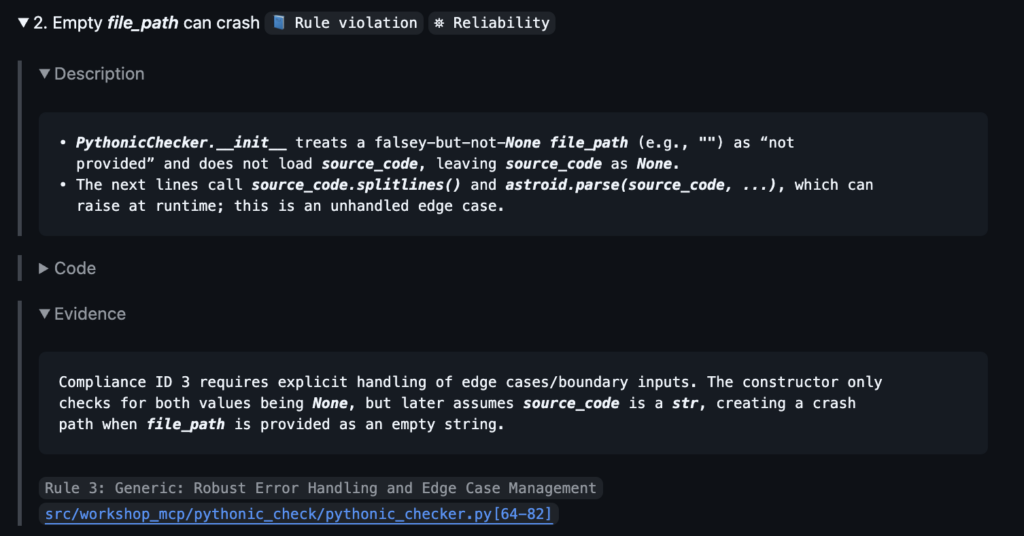

2. Medium: empty source_code is treated as missing input

Why it matters: Legitimate input (empty file / buffer) is incorrectly rejected.
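Findings 1 and 2 usually come from the same few lines of handler code, so here is a minimal sketch of how both could be addressed together. It is not the PR's actual handler: `InvalidParamsError` and `read_source_code` are hypothetical names, and I'm assuming the server maps that exception type to a client-facing error.

```python
from typing import Any, Mapping, Optional


class InvalidParamsError(ValueError):
    """Hypothetical error type that the server maps to a client error."""


def read_source_code(arguments: Mapping[str, Any]) -> Optional[str]:
    """Return source_code if the client sent it (including ""), else None."""
    source_code = arguments.get("source_code")
    if source_code is None:
        # Absent input: the caller decides whether another argument is required.
        return None
    if not isinstance(source_code, str):
        # Wrong type is the client's mistake, so raise a client-mappable error
        # instead of letting a TypeError surface later as an internal error.
        raise InvalidParamsError(
            f"source_code must be a string, got {type(source_code).__name__}"
        )
    # An empty string is a legitimate empty file or buffer, so return it as-is.
    return source_code
```

The key choice is testing `is None` rather than truthiness: `if not source_code` is what turns an empty buffer into "missing input".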

3. Medium: false positives for dict‑comprehension suggestion

Why it matters: This produces incorrect guidance and noisy diagnostics.
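To make the false-positive concrete, here is a rough sketch of a gated check built on Python's `ast` module. It is an illustration under my own assumptions, not the checker from the PR: the function names are hypothetical, and it only suggests a dict comprehension when the loop fills a name that was initialized as `{}` or `dict()` somewhere in the same source.

```python
import ast


def _is_empty_dict(node: ast.expr) -> bool:
    """True for `{}` or a bare `dict()` call."""
    if isinstance(node, ast.Dict):
        return not node.keys
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == "dict"
        and not node.args
        and not node.keywords
    )


def dict_comprehension_candidates(source: str) -> list[int]:
    """Return line numbers of loops that only fill a name known to be a dict."""
    tree = ast.parse(source)
    # Pass 1: names assigned an empty dict (simplification: ignores scope and order).
    dict_names = {
        target.id
        for node in ast.walk(tree)
        if isinstance(node, ast.Assign) and _is_empty_dict(node.value)
        for target in node.targets
        if isinstance(target, ast.Name)
    }
    # Pass 2: `for` loops whose whole body is `name[key] = value`.
    hits = []
    for node in ast.walk(tree):
        if not isinstance(node, ast.For) or len(node.body) != 1:
            continue
        stmt = node.body[0]
        if not (isinstance(stmt, ast.Assign) and len(stmt.targets) == 1):
            continue
        target = stmt.targets[0]
        if (
            isinstance(target, ast.Subscript)
            and isinstance(target.value, ast.Name)
            and target.value.id in dict_names  # the gate: skip lists, arrays, etc.
        ):
            hits.append(node.lineno)
    return sorted(hits)
```

With this gate, a loop that writes into a pre-sized list (`result[i] = ...`) is left alone, while the same loop over a name initialized as `{}` is still reported.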

4. Low: mutable‑default checker misses set() calls

Issue: The pythonic_checker tool does not flag set() used as a default argument.

Why it matters: A real mutable‑default bug pattern goes undetected. This is a common pitfall.
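An empty set has no literal form, so a check that only looks at literal nodes will never see `set()`. The sketch below shows one way a detector could cover call forms as well. It is a hypothetical stand-alone function written for this article, not the code in pythonic_checker.py.

```python
import ast

# Literal and comprehension nodes that always build a new mutable object.
MUTABLE_NODES = (ast.List, ast.Dict, ast.Set, ast.ListComp, ast.DictComp, ast.SetComp)
# Constructor calls that do the same; extend this set to match your own rules.
MUTABLE_CONSTRUCTORS = {"list", "dict", "set"}


def _is_mutable_default(node: ast.expr) -> bool:
    if isinstance(node, MUTABLE_NODES):
        return True
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id in MUTABLE_CONSTRUCTORS
    )


def find_mutable_defaults(source: str) -> list[tuple[str, int]]:
    """Return (function name, line number) for each mutable default argument."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        # Positional defaults plus keyword-only defaults (None marks "no default").
        defaults = list(node.args.defaults)
        defaults += [d for d in node.args.kw_defaults if d is not None]
        for default in defaults:
            if _is_mutable_default(default):
                hits.append((node.name, default.lineno))
    return hits
```

A name-based check like this can be fooled if someone shadows `set` or `list`, which is an acceptable trade-off for a lint-style heuristic.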

Codex CLI also called out testing gaps:

“No protocol‑level tests were added for the new pythonic_check MCP path (dispatch + validation + error mapping).”

This is a strong, thoughtful correctness and contract review. It’s a clear step up from older Codex runs that only surfaced three issues and didn’t talk about test coverage at all. But it still left the most dangerous part of the PR untouched.

What Codex CLI still missed: the security boundary

Codex CLI was excellent on input validation, error mapping, heuristics, and even tests. But it didn’t flag issues at the responsibility boundaries.

The most important bug was in the MCP server integration:

    # Validates file_path, gets canonical Path...
    self.path_validator.validate_exists(file_path, must_be_file=True)
    # …then discards it and re‑opens raw string
    checker = PythonicChecker(file_path=file_path)

Here’s what’s going on:

- The PathValidator resolves symlinks, canonicalizes the path, and returns a safe Path guaranteed to live under allowed roots.

- The server ignores that returned Path, throws it away, and then hands the original file_path string to PythonicChecker.

- Between validation and use, the underlying filesystem or a symlink can change. The path you validated is not necessarily the one you actually open.

This is a classic time‑of‑check vs time‑of‑use (TOCTOU) gap at the server boundary.
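A minimal sketch of the safer pattern follows. The `PathValidator` here is a simplified stand-in I wrote for illustration, not the one in the repo, and `read_validated_source` is a hypothetical helper. The only point it makes is that the canonical `Path` returned by validation is the one that gets opened. It needs Python 3.9 or later for `Path.is_relative_to`.

```python
from pathlib import Path


class PathValidator:
    """Simplified stand-in: resolve a path and confirm it sits under one root."""

    def __init__(self, allowed_root: Path) -> None:
        self.allowed_root = allowed_root.resolve()

    def validate_exists(self, file_path: str, must_be_file: bool = False) -> Path:
        # strict=True follows symlinks and raises FileNotFoundError if missing.
        resolved = Path(file_path).resolve(strict=True)
        if not resolved.is_relative_to(self.allowed_root):
            raise PermissionError("path is outside the allowed root")
        if must_be_file and not resolved.is_file():
            raise ValueError("expected a regular file")
        return resolved


def read_validated_source(validator: PathValidator, file_path: str) -> str:
    # Keep the canonical Path and read through it, rather than re-opening
    # the raw string supplied by the client.
    safe_path = validator.validate_exists(file_path, must_be_file=True)
    # In the handler this would be the value handed to the checker, e.g.
    # PythonicChecker(file_path=safe_path), assuming it accepts a Path.
    return safe_path.read_text(encoding="utf-8")
```

Passing the resolved path downstream narrows the window rather than closing it completely, since the file at that location can still change before it is read. A stricter design would open the file once and hand the open handle to the checker.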

Codex CLI never mentioned it.

It also didn’t flag that the server logs full file paths at info level, which can leak operational details in multi‑tenant or production contexts.
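One way to coarsen what lands in info-level logs is sketched below. The logger name and both helper functions are hypothetical, and whether a bare file name is safe to log depends on your deployment, so treat this as a starting point rather than the fix applied in the PR.

```python
import hashlib
import logging
from pathlib import Path

logger = logging.getLogger("mcp_server")  # hypothetical logger name


def path_fingerprint(path: Path) -> str:
    """Short, stable token for correlating log lines without exposing the path."""
    return hashlib.sha256(str(path).encode("utf-8")).hexdigest()[:12]


def log_check_request(path: Path) -> None:
    # Info level carries only the file name and a fingerprint of the full path.
    logger.info("pythonic_check file=%s id=%s", path.name, path_fingerprint(path))
    # The full path is emitted only when debug logging is explicitly enabled.
    logger.debug("pythonic_check full_path=%s", path)
```

The fingerprint keeps requests for the same file traceable across log lines, while directory layout stays out of the default log stream.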

Why? Because Codex CLI is still optimized for correctness and contract behavior in isolation:

- Does the handler return the right error codes?

- Does the checker crash on invalid input?

- Are diagnostics precise?

It’s fast and terminal‑native, perfect for people who want line‑precise fixes. But it doesn’t treat server entrypoints, path validators, or logging as inherently high‑risk zones.

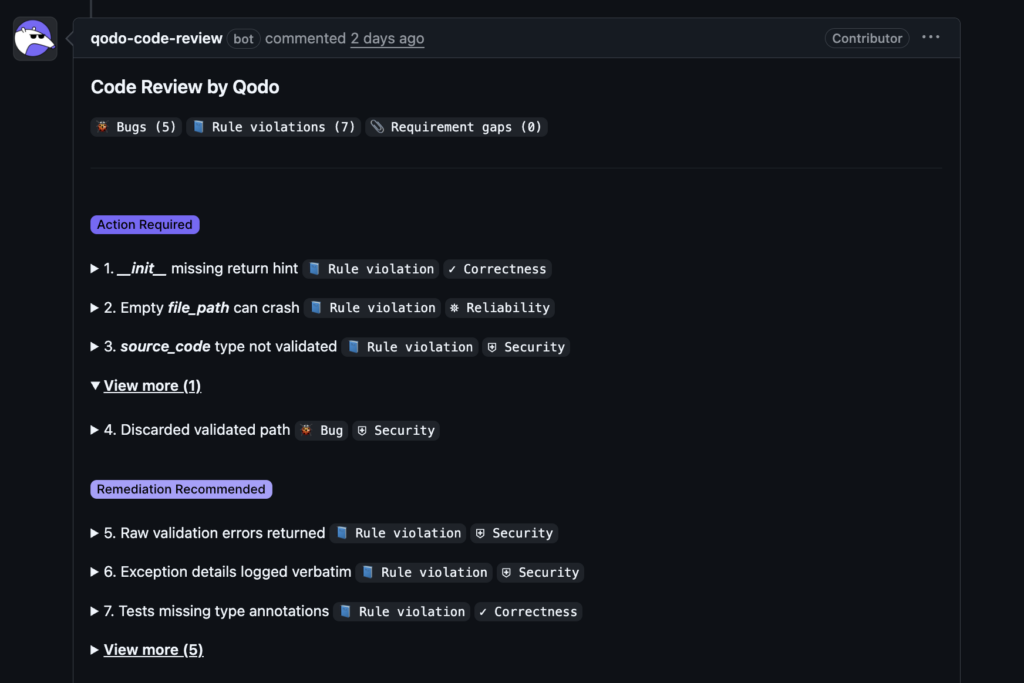

Qodo’s boundary‑first, system-aware review

Qodo reviewed the exact same PR and, unsurprisingly, found all the correctness issues Codex CLI cared about:

- Empty source_code rejection.

- Missing type validation for source_code.

- Dict‑comprehension false positives.

- Mutable default detection gaps.

Qodo also uncovered additional issues, including ones that Codex CLI (GPT‑5.3) never touched. It reviewed multiple dimensions at once (security, correctness, maintainability, and adherence to repo‑specific rules) and then prioritized issues across those dimensions instead of just by likelihood of a bug.

Across the PR, Qodo surfaced 12 issues, grouped into:

- Action Required: 4 issues that must be fixed before merge.

- Remediation Recommended: 8 issues that are lower severity but worth addressing.

Not every issue was a “bug” in the traditional sense. Some were rule violations against project‑level standards for this MCP server: how paths should be validated, how logging should work, how tools should be wired into the server layer.

Here’s a simplified snapshot of how Qodo’s report compared to Codex CLI’s:

| Severity & Category | Issue Description | Qodo Recommendations | Codex CLI Finding |
| --- | --- | --- | --- |
| High / Security | Discarded canonical path creates TOCTOU gap | Use the validator’s Path throughout | Not surfaced |
| Medium / Security | File paths logged at info level | Redact or coarsen granularity | Not surfaced |
| Medium / Correctness | Empty source_code rejection + missing type validation | Accept empty strings; add isinstance(source_code, str) check | Surfaced as High + Medium correctness issues |
| Medium / Correctness | Dict false positives on non‑dict subscripts | Gate check on dict context (literal/assignment) | Surfaced as Medium correctness issue |
| Low / Maintainability | Mutable default checker misses set() calls | Extend mutable‑default detection to call‑forms | Surfaced as Low issue |

Using its multi‑agent architecture for specialized domains in code review and quality, Qodo did something different with these facts:

- It treated the path validation discard as a High / Security problem at a responsibility boundary.

- It grouped the input validation and empty string bugs under Medium / Correctness, aligning with how Codex CLI saw them, but explicitly tied to the server boundary and protocol behavior.

- It put false positives and mutable default gaps in Medium and Low territory, respectively.

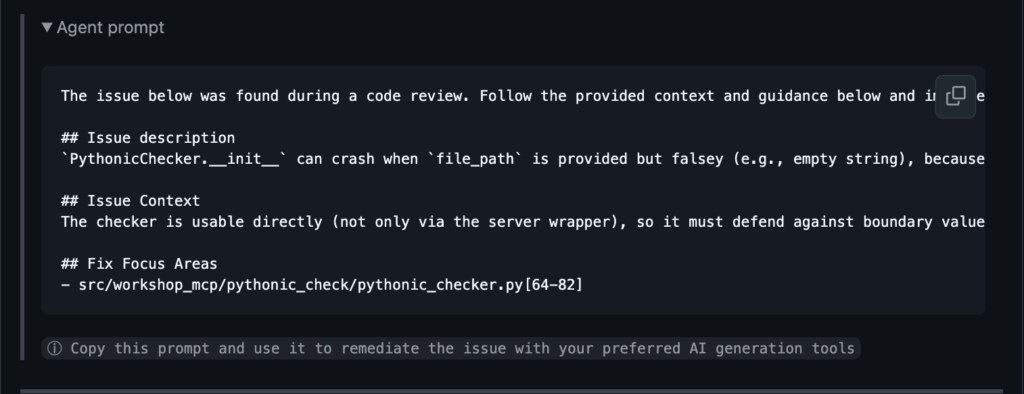

For each issue, Qodo also:

- Explained the real‑world risk with context‑aware checks at service boundaries and data flows.

- Suggested specific code changes and patterns.

- Generated follow‑up agent prompts I could hand to an AI or teammate to implement safe fixes.

Qodo’s review lives at the system level. It understood the existing PathValidator, the MCP server pattern, and why canonical paths and logging matter in an agentic environment.

Then it gave remediation code patterns and provided agent prompts to implement the fix safely.

What matters more in code review? Correctness or boundaries?

Codex CLI and Qodo both prioritize issues. The difference is scope and intent.

Codex CLI: correctness and contracts in isolation

Codex CLI asks:

- Does input validation behave according to the schema?

- Are heuristics precise?

- Will this crash on edge cases?

- Did we add enough tests to cover the new behavior?

It’s optimized for:

- Terminal speed and TTY workflows.

- Line‑precise, fix‑ready summaries.

- Quick iteration: fix, re‑run, ship.

This makes Codex CLI excellent for “does this code do what it says?” and “does the handler return the right error codes?” But it still assumes system-level boundaries are someone else’s responsibility.

Where Codex CLI (GPT‑5.3) is excellent

Codex CLI is doing exactly what a terminal‑native coding agent should do:

- It reads the diff quickly,

- Spots input validation gaps and noisy heuristics,

- And hands you fix‑ready suggestions without ceremony.

If you’re in the flow, iterating on a feature, this style of review is perfect: it keeps you moving and makes correctness cheap.

Qodo: boundaries first, correctness second

Qodo asks:

- Where does untrusted input first cross into the system?

- Are server entry points and path validators actually enforcing guarantees?

- Does this change alter the system’s risk profile?

- How do these issues map to your repo’s standards and rules?

It’s optimized for:

- Repo‑level context via code‑aware agentic AI (and multi-repo for upgraded tiers).

- Communicating risk across categories (Security, Correctness, Maintainability, Style).

- Repo‑specific high‑signal prioritization, since “what really matters” can vary per system.

Qodo doesn’t just say “this is wrong.” It tells you:

- “This is a security issue at a boundary.”

- “This is a correctness bug.”

- “This is maintainability friction.”

- “Here is how to fix it without breaking the rest of your MCP server.”

Why prioritization without boundaries fails

Codex CLI (GPT‑5.3) now uses a clearer severity model. That’s progress.

But the High/Medium/Low severities are applied within a single dimension: ‘is this behavior or this heuristic correct?’

Qodo layers multiple dimensions on top of each other: ‘is it correct, is it safe at the boundary, is it maintainable, and does it match this repo’s standards?’
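To show the difference between ranking on one axis and ranking on several, here is a toy sketch of two-key prioritization. It is my own illustration, not Qodo's algorithm or configuration format. The `Finding` class and both rank tables are hypothetical, and the sample data is taken from the comparison table earlier in this article.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"High": 0, "Medium": 1, "Low": 2}
# Hypothetical repo policy: which dimension wins when severities tie.
CATEGORY_RANK = {"Security": 0, "Correctness": 1, "Maintainability": 2}


@dataclass(frozen=True)
class Finding:
    severity: str
    category: str
    summary: str


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings by severity first, then by the repo's category policy."""
    return sorted(
        findings,
        key=lambda f: (SEVERITY_RANK[f.severity], CATEGORY_RANK[f.category]),
    )


findings = [
    Finding("Low", "Maintainability", "Mutable default checker misses set() calls"),
    Finding("Medium", "Correctness", "Dict false positives on non-dict subscripts"),
    Finding("Medium", "Security", "File paths logged at info level"),
    Finding("High", "Security", "Discarded canonical path creates TOCTOU gap"),
]
for finding in prioritize(findings):
    print(f"{finding.severity:<6} {finding.category:<15} {finding.summary}")
```

A reviewer that only has the severity key can still sort its findings, but it has no way to say that a Medium security issue should outrank a Medium correctness issue in this particular repo.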

In agentic stacks—MCP servers, tools orchestrated by LLMs, multi‑tool pipelines—boundaries are where small mistakes cascade:

- A discarded canonical path turns validation into theater.

- Logging full file paths leaks topology and behavior across tools.

- Input gaps at the server level become exploit primitives down the line.

Codex CLI catches the first‑order bugs brilliantly. Qodo catches the ones that matter when code runs as part of a system, acting as the missing quality layer between AI‑generated code and production.

What devs need from AI code review tools

This updated experiment with Codex CLI (GPT‑5.3) clarified what I now expect from AI review tools.

1. Repo context > isolated precision

Tools like Codex CLI shine in terminals for quick diffs and API‑contract checks. But they need repo and system awareness to spot patterns like:

- “This MCP server always uses PathValidator.validate_exists and then passes the resulting Path downstream. Why is this new handler throwing that away?”

Without that context, severity remains narrowly applied inside a single file.

2. Boundaries are always first‑class

Any reviewer that doesn’t automatically treat server entry points, path validators, and logging as high‑risk zones is missing half the picture. Correctness without safety is incomplete.

3. Configurable “high‑signal” beats fixed thresholds

Codex CLI’s High/Medium/Low severities are static. They’re visible, but you don’t get to define what “high‑signal” means for your repo.

Qodo lets you:

- Configure repo‑specific workflows and rules.

- Tell the system, “Always prioritize security even if the evidence looks subtle.”

- Turn AI reviews into system‑aware quality gates instead of just correctness checklists.

That’s what teams actually need as AI tools start owning more of the implementation work inside real systems.

If you already rely on Codex CLI for code review, you’re in a good place: you’ve upgraded correctness and contract checks beyond what most teams have.

The next upgrade is adding a reviewer that can see across files, features, and trust boundaries. That’s the gap Qodo fills.

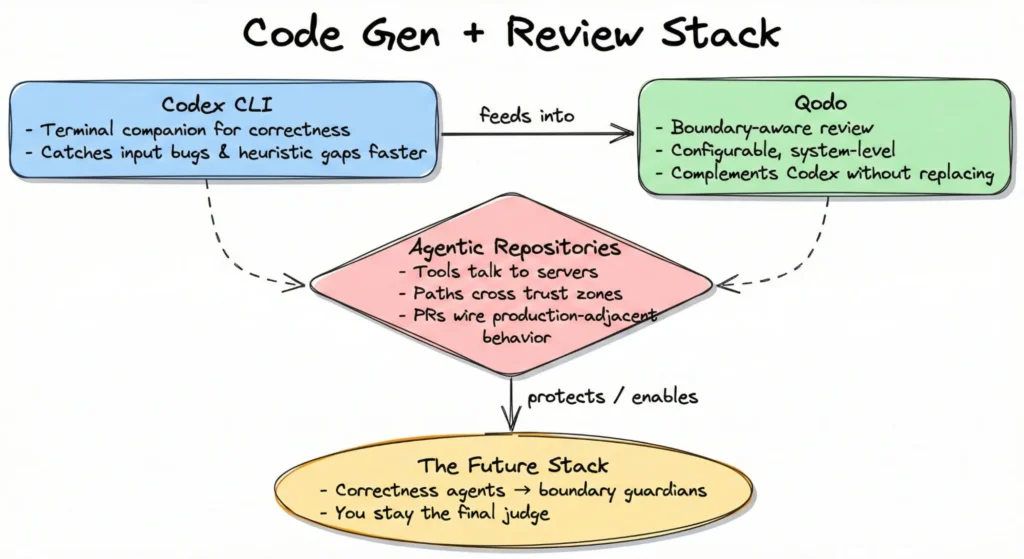

Codex CLI + Qodo: correctness agent meets system reviewer

Codex CLI is the correctness and contract specialist.

Qodo is the multi‑dimensional reviewer that understands architecture, boundaries, and risk.

But in agentic repositories—where tools talk to servers, paths cross trust zones, and one PR wires up production‑adjacent behavior—you need a boundary‑aware, configurable, system‑level review.

Codex CLI is reviewing code. Qodo is reviewing a codebase and its boundaries.

The future isn’t one review tool. It’s a stack where:

- Coding and correctness agents like Codex CLI make iteration fast.

- Boundary guardians like Qodo keep the system safe.

- And you, as the human operator, remain the final judge.

A good mental model is Codex CLI for hardening the behavior of each change and Qodo for protecting the system those changes live in.