Optimizing Automated Unit Test Generation for Enterprise Applications

TL;DR

- Automated unit testing is essential for microservices, catching regressions quickly and ensuring reliable quality as the system scales.

- Manual testing doesn’t scale; as microservices grow, automated tests in CI/CD pipelines provide faster, consistent feedback.

- Qodo automates test generation, ensuring coverage for edge cases and evolving services, without manual effort.

- Best practices include modular code, consistent test organization, and enforcing coverage gates in CI to maintain testing efficiency.

- Post-merge features in Qodo keep tests up-to-date, reducing maintenance and ensuring quality across the codebase.

If you’re a CTO or Head of Engineering managing a microservices architecture, you’re constantly balancing deployment velocity against system reliability. Even a minor change in one service can trigger unexpected regressions across dependent services due to API contract mismatches, data inconsistencies, or integration errors. Relying on manual testing to catch these issues doesn’t scale; it’s slow, inconsistent, and unable to cover the full range of inter-service interactions. As the number of services grows, the number of interactions to validate end to end grows much faster than the service count, making automated testing and continuous integration essential for maintaining the quality of released code.

In a recent tweet, Alex Andrade pointed out several key mistakes in automated testing, many of which we all make at some point:

- Misunderstanding the automation goals

- Failure to conduct a proof of concept before starting automation

- Automating too early

- Too complex test scenarios

- Lack of metrics and reporting

These mistakes are real, and they cost teams valuable time and resources. In this post, we’ll discuss how to avoid them and how Qodo AI addresses these challenges, allowing you to automate unit tests at scale without missing a beat in your current workflow.

Unit Testing in Microservices: Ensuring Isolated, Deterministic Behavior

Unit testing isn’t just a process step; it’s the system’s self-defense mechanism. As your architecture grows into tens or hundreds of microservices, a seemingly harmless code change can trigger unexpected behavior across the stack.

- A renamed JSON field in a shared API contract can break a downstream consumer.

- A modified database schema can cause deserialization failures in another service.

- A new default parameter in a utility module might alter the way requests are validated.

These are the “unpredictable side effects” that don’t show up in local testing but can surface immediately in production. Solid unit testing is how you stop them early.

What Unit Tests Actually Are

A unit test validates one small, isolated piece of logic: no databases, no external APIs, no message queues.

If you’re building microservices in Python, you’re probably using Pytest. A well-written test focuses on a single behavior and mocks everything else. For example:

def format_user_name(first_name: str, last_name: str) -> str:
    if not first_name or not last_name:
        raise ValueError("Both first and last names are required")
    return f"{first_name.strip().title()} {last_name.strip().title()}"

A clean, deterministic Pytest test for this function looks like:

import pytest

from user_service import format_user_name


def test_format_user_name_valid_input():
    assert format_user_name("john", "doe") == "John Doe"


def test_format_user_name_strips_whitespace():
    assert format_user_name(" alice ", " SMITH ") == "Alice Smith"


def test_format_user_name_raises_on_missing_input():
    with pytest.raises(ValueError):
        format_user_name("", "doe")

Each test validates a single aspect of the function’s behavior, runs in milliseconds, and produces deterministic results. There’s no dependency on a database or network, which makes it ideal for CI pipelines.

What Good Automated Tests Look Like

In an enterprise system with complex service dependencies, automated unit tests should cover both core functionality and edge cases:

- Valid inputs should produce expected outputs.

- Invalid inputs should raise predictable exceptions.

- Unexpected conditions (timeouts, bad data, nulls) should be handled gracefully.

For instance, imagine you’re testing a payment gateway that interacts with external services like fraud detection and banking APIs:

import requests


def process_payment(order_id, amount):
    # A timeout keeps a slow gateway from hanging the caller indefinitely.
    response = requests.post(
        "https://api.payment-gateway.com/charge",
        json={"order_id": order_id, "amount": amount},
        timeout=10,
    )
    if response.status_code != 200:
        raise Exception("Payment failed")
    return response.json()

Here’s how you might write a unit test that mocks the external API call to ensure the test remains reliable even if the external API changes:

from unittest.mock import patch

import pytest

from payment_service import process_payment


@patch("payment_service.requests.post")
def test_process_payment_success(mock_post):
    # Simulate a successful gateway response without touching the network.
    mock_post.return_value.status_code = 200
    mock_post.return_value.json.return_value = {"status": "success", "transaction_id": "12345"}

    result = process_payment("order#881", 100)

    assert result["status"] == "success"
    assert result["transaction_id"] == "12345"


@patch("payment_service.requests.post")
def test_process_payment_failure(mock_post):
    # A non-200 status makes process_payment raise, so assert on the exception.
    mock_post.return_value.status_code = 400

    with pytest.raises(Exception, match="Payment failed"):
        process_payment("order#881", 100)

This approach ensures that even if the external payment gateway API changes or experiences failures, the unit tests remain stable, verifying that the core functionality of the payment service behaves as expected.
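The two tests above cover a success and an HTTP error. The "unexpected conditions" case from the list earlier (a timeout) can be tested the same way. The following is a minimal sketch rather than the article's payment service: `charge`, `PaymentError`, and the injected `post` callable are hypothetical names, and the HTTP call is passed in as a parameter so the example runs with only the standard library.

```python
from unittest.mock import Mock


class PaymentError(Exception):
    """Hypothetical domain error raised when a charge cannot be completed."""


def charge(post, order_id, amount):
    """Charge an order through an injected `post` callable (hypothetical signature)."""
    try:
        response = post("/charge", json={"order_id": order_id, "amount": amount})
    except TimeoutError as exc:
        # Translate a transport-level timeout into a predictable domain error.
        raise PaymentError("Payment gateway timed out") from exc
    if response.status_code != 200:
        raise PaymentError(f"Payment failed with status {response.status_code}")
    return response.json()


def test_charge_translates_timeout():
    # side_effect makes the mock raise instead of returning a response.
    post = Mock(side_effect=TimeoutError("simulated timeout"))
    try:
        charge(post, "order#881", 100)
    except PaymentError as exc:
        assert "timed out" in str(exc)
    else:
        raise AssertionError("expected PaymentError")
    post.assert_called_once()


def test_charge_returns_body_on_success():
    post = Mock()
    post.return_value.status_code = 200
    post.return_value.json.return_value = {"status": "success"}
    assert charge(post, "order#881", 100) == {"status": "success"}
```

Injecting the transport is one design option; patching `requests.post` as in the tests above works equally well when the call site cannot be changed.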

CI Integration and Scale

Once integrated into a CI pipeline, unit tests become your first line of defense against regressions. Every pull request triggers the tests automatically, providing immediate feedback to developers. In enterprise environments, this could mean integrating your tests into GitHub Actions, GitLab CI, Jenkins, or other CI/CD systems that are already part of your workflow.

For example, in GitHub Actions, a simple .github/workflows/tests.yml could look like this:

name: Run Unit Tests
on: [pull_request, push]
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - run: pip install -r requirements.txt
      - run: pytest --maxfail=1 --disable-warnings -q

This ensures that tests run on every branch, in a clean environment, without any manual intervention. This CI integration is vital for scaling and maintaining quality across multiple teams, especially when you have a large number of microservices interacting with one another.

Why Enterprises Care About Automated Unit Testing

In enterprise systems, automated unit testing isn’t an optional add-on. It’s what keeps large, interconnected services from collapsing under the weight of constant change. Leaders care about this because it directly impacts four things: regression risk, debugging cost, compliance exposure, and release speed.

Example: Rolling Out MFA in User Management

Consider a User Management Service responsible for authentication, profile data, admin overrides, and audit trails. Adding multi-factor authentication (MFA) sounds straightforward, but in practice, it forces changes across multiple areas:

- Authentication: login now branches into OTP verification.

- Profiles: new fields like mfaEnabled and phone must persist correctly.

- Admin tools: admins need to enable/disable MFA and reset recovery codes.

- Audit logs: every attempt, successful or not, must be recorded.

Each of these touchpoints can break in subtle ways: a session being created before OTP passes, profile flags not persisting, admin resets failing silently, or audit logs missing entries. Manual testing can’t cover all of this consistently at enterprise velocity.

What Automated Unit Tests Provide

1. Regression Detection at Commit Time

Unit tests let you isolate changes and catch logic errors before they hit staging. For example, a test around the OTP handler can confirm that sessions are only created when verification succeeds:

app.post('/api/auth/verify-otp', (req, res) => {
  const { otp } = req.body;
  // `user` is assumed to have been loaded by an earlier login step (not shown).
  if (otp === '873768') { // simulate valid OTP
    req.session.userId = user.id; // session only set on success
    return res.json({ message: 'OTP verified successfully.' });
  }
  return res.status(400).json({ error: 'Invalid OTP' });
});

This snippet is deterministic: a valid OTP passes, and everything else fails fast. Catching a regression here is far cheaper than debugging a broken login flow in production.

2. Accuracy, Repeatability, and Coverage

Tests can drive both the happy path and edge cases (expired OTP, wrong code, missing phone number) in milliseconds. They run the same way in CI, dev, or QA, without relying on external third-party SMS systems.

const resp = await request(app)
  .post('/api/auth/login')
  .send({ username: 'alice', password: 'p@ss' });

expect(resp.body.mfaRequired).toBe(true); // login must branch into MFA

This verifies that authentication enforces MFA when required.
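To make the edge cases concrete, here is a framework-free Python sketch of the same idea. It is an illustration under assumptions, not the service's actual code: `OtpChallenge` and `verify_otp` are hypothetical names, and the current time is passed in as `now` so that the expiry case is deterministic.

```python
from dataclasses import dataclass


@dataclass
class OtpChallenge:
    """Hypothetical record of an issued one-time password."""
    code: str
    expires_at: float  # Unix timestamp after which the code is rejected


def verify_otp(session, user_id, challenge, submitted, now):
    """Return True and create the session only if the code matches and is unexpired."""
    if challenge is None:
        return False  # no OTP was issued, e.g. the user has no phone number
    if now > challenge.expires_at:
        return False
    if submitted != challenge.code:
        return False
    session["user_id"] = user_id
    return True


def test_verify_otp_cases():
    challenge = OtpChallenge(code="123456", expires_at=1000.0)
    cases = [
        # (description, challenge, submitted, now, expected)
        ("valid code", challenge, "123456", 999.0, True),
        ("wrong code", challenge, "000000", 999.0, False),
        ("expired code", challenge, "123456", 1001.0, False),
        ("no challenge issued", None, "123456", 999.0, False),
    ]
    for description, issued, submitted, now, expected in cases:
        session = {}
        assert verify_otp(session, 42, issued, submitted, now) is expected, description
        # Invariant: a session exists if and only if verification succeeded.
        assert ("user_id" in session) is expected, description
```

Passing the clock in as a parameter is what keeps the expired-code case repeatable; a test that reads the real system time would pass or fail depending on when it runs.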

3. Scalability and CI/CD Speed

Automated tests scale with the system. Whether you have one service or fifty, suites run in parallel inside CI pipelines. They require no manual setup, no external calls, and give feedback on every pull request. That’s how you maintain velocity as teams grow.

4. Security and Compliance Signals

Enterprises often operate in regulated environments. It’s not enough for MFA to work; every attempt must be logged for traceability. Unit tests can assert that audit events are written correctly:

const ok = req.body.otp === '747345';
logAuditEvent(user.id, 'MFA_ATTEMPT', ok ? 'success' : 'failure');

This ensures compliance reporting isn’t left to chance or manual review.
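A test for this behavior asserts on the audit call itself, not just on the return value. The sketch below mirrors the snippet above in Python; `record_mfa_attempt` and the injected `audit_log` callable are hypothetical stand-ins for the handler and for `logAuditEvent`.

```python
from unittest.mock import Mock


def record_mfa_attempt(audit_log, user_id, submitted_otp, expected_otp):
    """Check the OTP and write exactly one audit event for the attempt."""
    ok = submitted_otp == expected_otp
    audit_log(user_id, "MFA_ATTEMPT", "success" if ok else "failure")
    return ok


def test_failed_attempt_is_audited():
    audit_log = Mock()
    assert record_mfa_attempt(audit_log, 7, "000000", "747345") is False
    # The failure must be logged, not silently dropped.
    audit_log.assert_called_once_with(7, "MFA_ATTEMPT", "failure")


def test_successful_attempt_is_audited():
    audit_log = Mock()
    assert record_mfa_attempt(audit_log, 7, "747345", "747345") is True
    audit_log.assert_called_once_with(7, "MFA_ATTEMPT", "success")
```

Because the mock records its arguments, a refactor that drops the log call or changes the event name fails the test at commit time.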

5. Cost and Time-to-Market Impact

Well-designed test suites reduce repetitive QA work, free engineers from chasing regressions, and shorten release cycles. For leadership, that translates into less engineering time wasted on firefighting and faster delivery of features without sacrificing reliability.

The /api/auth/verify-otp route illustrates why enterprises invest in unit testing: it’s a unit-testable guard where success and failure paths can be validated deterministically in CI, ensuring reliability, security, and compliance at scale while maintaining high release velocity.

From Scripts to Code Integrity

Enterprises don’t jump from manual testing to fully autonomous test generation overnight. Teams evolve through stages, each one fixing a bottleneck that becomes impossible to ignore at scale. The end state isn’t just “more tests,” but code integrity: confidence that the system enforces correctness, completeness, and consistency every time code changes.

1. Manual and Ad-Hoc

Initially, tests are written by hand, and QA still runs critical flows manually. This works in very small systems, but once you have multiple services, the gaps become obvious. A schema rename in one service can silently break another, and without automated tests, those failures only surface in staging or production.

2. Scripted and Repeatable

The next step is adopting frameworks like Pytest, JUnit, or Mocha and running them in CI. Mocks and stubs replace external dependencies, which makes results deterministic across machines. At this point, every pull request triggers a consistent set of checks. It’s a big improvement, but coverage growth still depends on engineers writing each test manually.

3. Accelerated Authoring

To move faster, tools begin generating test scaffolds automatically. Developers no longer start from a blank file; the system proposes tests from code diffs, which engineers then refine. This alone raises coverage because edge cases are easier to include, and feedback cycles shorten.

4. Autonomous Coverage Expansion

Eventually, the tooling generates complete tests, not just skeletons. New parameters in a request object automatically trigger new test cases, including positive and negative paths. Regression safety nets expand without requiring developers to remember every scenario. CI evolves into a contract checker that enforces correctness between services.

5. Self-Maintaining Suites

Large systems usually suffer from “test rot”: suites break after refactors or schema changes, and teams waste time fixing them instead of shipping features. At this stage, tests evolve alongside the codebase. Parameter changes, renamed methods, and updated contracts are reflected automatically in the suite. Engineers stop chasing flaky tests and start trusting their pipelines again.

6. Code Integrity as a System

The final stage is when testing stops being a bolt-on and becomes a property of the system itself. Every change is automatically validated for correctness, completeness, and consistency. Business-critical invariants, like “sessions are created only after MFA passes” or “all authentication attempts are logged with user ID and timestamp,” are enforced directly in CI. Compliance signals, security checks, and regressions are caught at commit time, not in production.

This progression isn’t about vanity coverage numbers. It directly impacts:

- Risk: catching regressions before they cascade across dozens of services.

- Cost: reducing hours wasted on debugging and test maintenance.

- Velocity: making pull request merges and release cycles predictable.

- Compliance: embedding audit and security signals directly into automated tests.

When you reach code integrity, tests are no longer a bottleneck. They’re the safety layer that lets your teams ship faster without trading away reliability or security.

From IDE to CI: Qodo’s Test Generation Pipeline

In an enterprise environment, testing ensures the stability and reliability of applications at scale. Multiple teams deploy updates across microservices daily, and even small changes can cause cascading issues that later lead to serious downtime. For example, a new parameter in an authentication API can disrupt mobile logins, while a schema change in a user table can trigger deserialization errors in downstream analytics pipelines. Without strong automated validation in place, these regressions often slip into staging or production, where diagnosing and rolling them back becomes costly and time-consuming across teams.

Manual testing cannot match this level of change velocity or system complexity. To maintain adequate coverage, organizations would need dedicated QA or SDET resources for each service, continuously updating and executing tests for every new branch, API, and integration. This approach quickly becomes operationally unsustainable as the number of pull requests grows, leading to eroding test coverage, undetected regressions, and increasingly expensive debugging cycles.

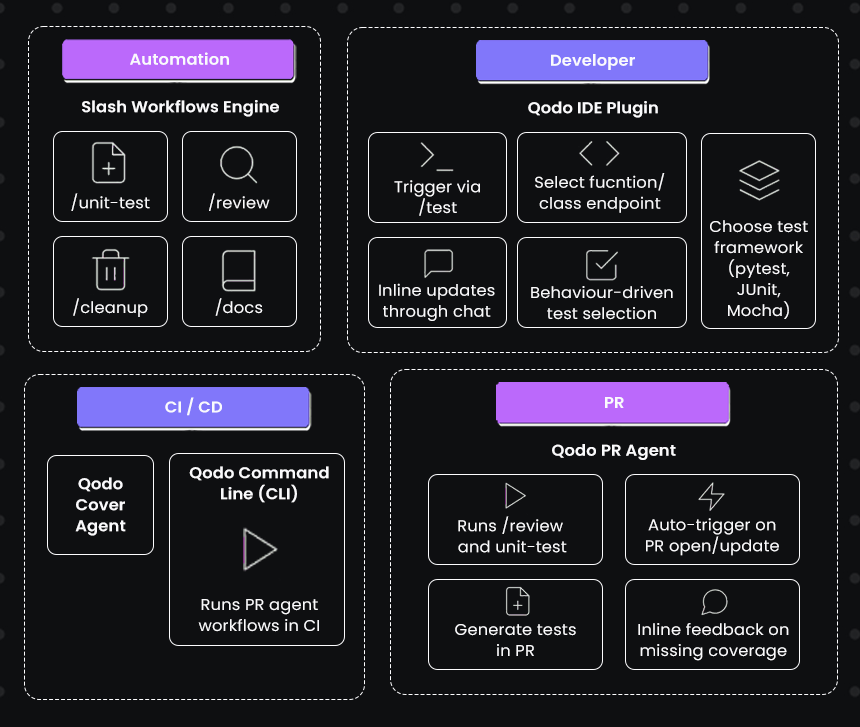

This is the challenge Qodo is built to solve, enabling scalable, automated code quality assurance that keeps pace with modern distributed development. Here’s the stack Qodo provides to support end-to-end debugging:

Let’s break down how each feature of the stack actually works.

1. Test Generation in the IDE

The main entry point is Qodo’s IDE plugin. You install it in VS Code or JetBrains, open the file you want to test, and trigger test generation with the /test command. From there, Qodo runs you through a short sequence:

- Select the target: function, class, or endpoint inside the file.

- Pick where tests should go: append to an existing test file or create a new one.

- Choose the framework: pytest, JUnit, Mocha, whatever matches your repo.

- Select behaviors: Qodo presents a list of common paths (valid input, error, edge). You can check the ones you want or add your own.

- Review and generate: Qodo outputs the test file, already structured with mocks where needed.

What makes this useful is the behavior-driven approach. You’re not asking for “tests” in the abstract; you’re telling it, “Generate tests for valid OTP, expired OTP, and invalid OTP”. Qodo then drops those exact tests into your repo, formatted for your stack. If you need to adjust or add more, you can do that inline through the chat, and it will update the code in place.

Without Qodo, every engineer would manually build the test harness, import mock libraries, wire up stubs for Twilio or other providers, and make sure file naming matches conventions. With dozens of endpoints across multiple teams, coverage becomes uneven and style drifts. Qodo’s AI coding tools make this easy by automating boilerplate setup inside the IDE.

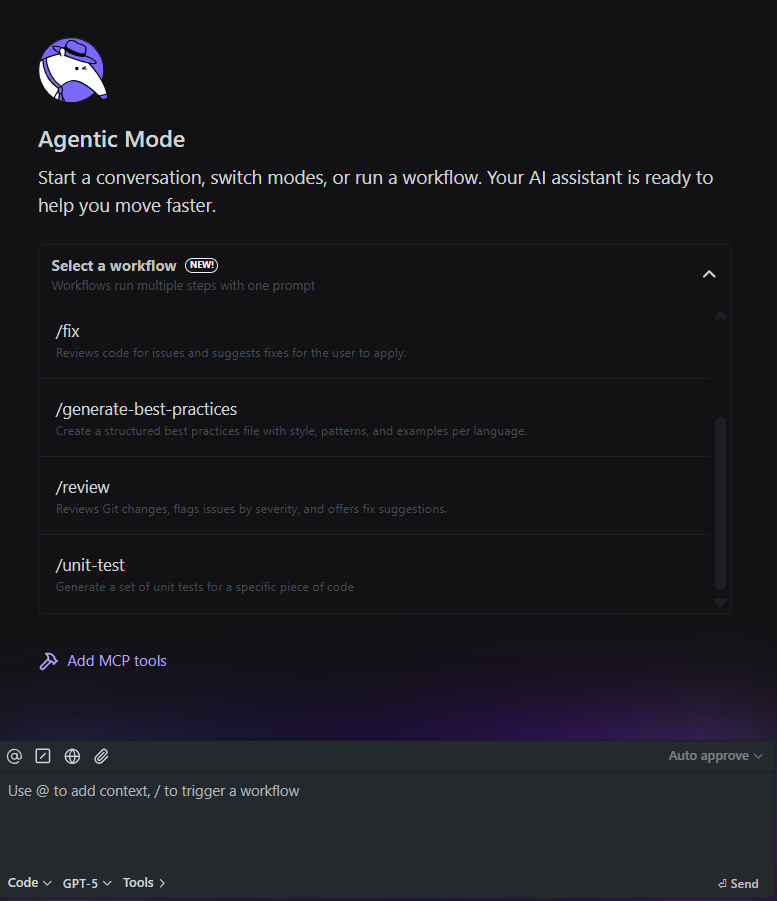

2. Built-In Workflows

Qodo also ships slash workflows like /unit-test, /review, /fix, /docs, and /cleanup. Here’s how the workflow works right inside the IDE with the proper context of the code files:

Without Qodo: after a refactor, a senior engineer would need to comb through 10K+ lines to strip dead code, enforce DRY, and update docstrings. Reviews depend on each reviewer’s thoroughness; some issues get flagged, some slip through.

With Qodo:

- /cleanup removes debug leftovers and validates changes with tests.

- /review scans diffs, flags issues by severity, and suggests fixes.

- /docs refreshes comments in project style.

Instead of spending multiple cycles on these tasks, teams can leave the routine checks to code review tools and spend their review time on architectural decisions.

3. Enforcing Quality in Pull Requests

Once developers are using Qodo in their IDE, the next step is the pull request, where Qodo’s PR agent runs.

- On open or update, it can automatically trigger the /review and /unit-test workflows against the diff.

- Reviewers see inline findings: what changed, what broke, what’s missing coverage.

- If gaps are detected, the author can request test generation directly from the PR.

The goal here is consistency. Every PR is reviewed with the same rules and code review best practices, so reviewers don’t waste time catching the obvious. Without this, reviewers scroll through diffs manually, trying to spot missing tests. If a new “locked account” branch were added to verifyOtp.ts without tests, it might slip through until staging.

4. Scaling Coverage in CI

Finally, there’s CI integration. Qodo provides a CLI (Qodo Command) for running agents headlessly, and a specialized agent called Qodo Cover.

- Add the Qodo Cover workflow to your repo.

- When a PR looks light on tests, label it qodo-cover.

- The agent inspects the changed files, generates tests for uncovered code, and opens a follow-up PR with the new tests.

- Teams usually set a policy (for example, 80% coverage) and let the agent drive toward it.

This means coverage growth isn’t a manual campaign; it’s incremental, tied directly to the changes going through CI. Tests are mocked by default, so runs stay deterministic and fast.

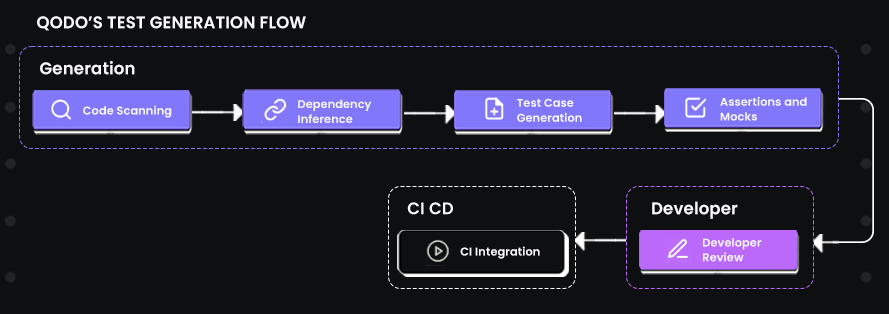

Qodo’s Test Generation Flow

Qodo’s test generation isn’t a black box. It follows a structured process that lines up with how engineers usually approach writing tests, but does it programmatically and at scale.

Here is an overview of the test generation flow in Qodo:

Now, let’s go through each step in detail:

- Kickoff (/test command in IDE): You open a file in VS Code or JetBrains, select the component you want to test, and run /test. Qodo prompts you for test location (new file vs append to existing), testing framework, and behaviors you want to validate. Without this, a developer would spend the first half-hour just creating the test scaffold and boilerplate imports.

- Code Scanning and Focus Selection: Qodo parses the source file down to the abstract syntax tree (AST). It identifies functions, parameters, return values, and control paths. The “focus” bar in the IDE lets you scope generation to a specific unit, so the generated tests aren’t generic but tied to the actual code under the cursor. This replaces the manual task of reading through code line by line to map what’s testable.

- Dependency Inference: External calls to a DB, network API, or shared utility are detected and mocked. Normally, an engineer has to manually stub or patch these dependencies, which is both error-prone and time-consuming. Qodo inserts mocks and ensures test runs remain isolated and deterministic.

- Behavior-Driven Test Cases: You select behaviors (valid input, invalid payload, expired token, missing fields). Qodo expands them into concrete test cases with assertions. In practice, under time pressure, developers often cover only the happy path. Qodo closes that gap by ensuring edge conditions are included by default.

- Assertions and Mocks: For each branch, Qodo generates assertions and attaches mocks with predictable outputs. This ensures tests remain stable even if upstream services or schemas change. By hand, this step is often where brittle tests creep into enterprise codebases.

- Developer Review and Iteration: Tests show up inline in the IDE or as a follow-up PR. You can tweak behaviors, extend cases, or regenerate specific scenarios with chat commands. The heavy lifting is already done, so developers review instead of reinventing.

- CI/CD Integration: Once merged, the generated tests run in your CI pipeline on every commit. Failures point directly to the branch of logic that broke. Enterprises can also attach Qodo’s “Cover” agent in GitHub Actions, which labels PRs, analyzes coverage gaps, generates the missing tests, and opens a clean follow-up PR.
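To give a feel for the code scanning and dependency inference steps, here is a small sketch using Python's standard `ast` module. It is not Qodo's implementation; `summarize_functions` and the embedded `SOURCE` sample are illustrative assumptions. It lists each top-level function's parameters and the attribute-style calls it makes, which is a rough heuristic for spotting candidates to mock.

```python
import ast

# Sample source to analyze. It is only parsed, never executed.
SOURCE = '''
import requests

def process_payment(order_id, amount):
    response = requests.post("https://example.invalid/charge", json={"order_id": order_id})
    return response.json()

def format_amount(amount):
    return f"{amount:.2f}"
'''


def summarize_functions(source):
    """Map each top-level function to its parameters and the dotted calls it makes."""
    summary = {}
    for node in ast.parse(source).body:
        if not isinstance(node, ast.FunctionDef):
            continue
        calls = sorted(
            {
                ast.unparse(call.func)  # requires Python 3.9+
                for call in ast.walk(node)
                if isinstance(call, ast.Call) and isinstance(call.func, ast.Attribute)
            }
        )
        summary[node.name] = {
            "params": [arg.arg for arg in node.args.args],
            "calls": calls,
        }
    return summary
```

Calling `summarize_functions(SOURCE)` reports that `process_payment` takes `order_id` and `amount` and calls `requests.post`, while `format_amount` makes no dotted calls at all, so it can be tested without any mocks.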

Generating Tests with Qodo (Payments API Example)

In distributed environments, reliable test coverage is non-negotiable. As microservices scale and evolve, even small refactors can break authentication, dependencies, or data flow. Writing and maintaining unit tests manually for every change often becomes repetitive and error-prone, particularly in systems like a Payments API, where correctness and security are tightly coupled.

Qodo’s test generation and PR analysis workflow automates this process end to end, ensuring that every code change is validated through reproducible, framework-aligned tests before it reaches production.

1. Payments Service Architecture

The Payments API is built with FastAPI and structured for modularity and maintainability:

- Endpoints: /health, /ready, and POST /payments

- Database: SQLAlchemy ORM with PostgreSQL

- Security: Header-based authentication using X-API-KEY

- Testing: Pytest with edge-case coverage and dependency mocking

The function verify_api_key() in main.py forms the backbone of the authentication layer. It verifies API keys from incoming requests and raises precise exceptions (401 Unauthorized) when validation fails. Given its role in controlling access, this function benefits greatly from automated, behavior-driven test generation.
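The source of verify_api_key() is not reproduced in this post, so the following is a hypothetical, framework-free sketch of the behavior described above. In the real service it would be a FastAPI dependency that reads the X-API-KEY header and raises FastAPI's HTTPException; here `Unauthorized` stands in for that exception, `Settings` is a simplified stand-in, and the constant-time comparison is an added assumption rather than something the original is known to do.

```python
import hmac
from dataclasses import dataclass
from typing import Optional


class Unauthorized(Exception):
    """Stand-in for FastAPI's HTTPException, so the sketch runs without FastAPI."""

    def __init__(self, detail):
        super().__init__(detail)
        self.status_code = 401
        self.detail = detail


@dataclass
class Settings:
    API_KEY: str = "dev-secret"  # placeholder default, not a real key


def verify_api_key(cfg: Settings, x_api_key: Optional[str] = None) -> None:
    """Return None when the key matches; raise a 401-style error otherwise."""
    # Missing and empty keys are rejected before any comparison happens.
    if not x_api_key:
        raise Unauthorized("Invalid or missing API key")
    # compare_digest avoids leaking how many leading characters matched.
    if not hmac.compare_digest(x_api_key.encode(), cfg.API_KEY.encode()):
        raise Unauthorized("Invalid or missing API key")
```

Even in this small form there are four distinct paths (match, missing, empty, mismatch), which is why enumerating behaviors before generating tests pays off.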

2. Generating Tests with Qodo Gen

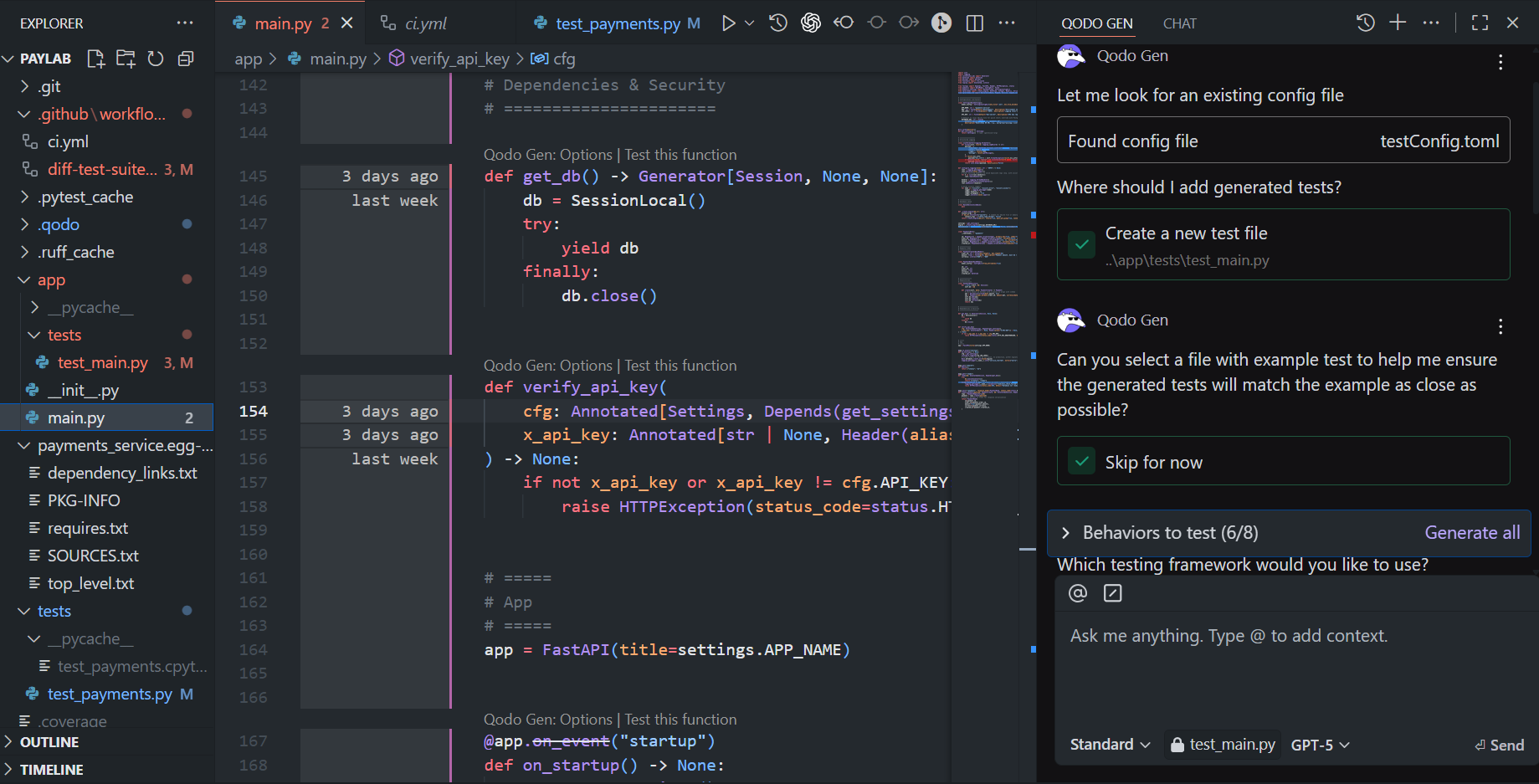

From within VS Code, Qodo Gen scans the project and automatically detects configuration files such as testConfig.toml. As shown in the snapshot, the tool prompts for where to add the generated tests; in this case, a new file is created under app/tests/test_main.py.

Qodo then analyzes the target function (verify_api_key) and identifies testable behaviors. The interface clearly lists behaviors to test, ensuring that both positive and negative scenarios are addressed before generation.

3. Behavior Coverage Plan

Qodo’s analysis results, shown in the second screenshot, outline all the relevant cases to be validated:

- Verify access is granted when X-API-KEY matches the configured API_KEY

- Verify the dependency correctly reads the API key from the X-API-KEY header alias

- Accept API keys containing special characters that exactly match the configured value

- Deny access with 401 when the header is missing

- Deny access with 401 when the provided key doesn’t match

- Deny access with 401 when the header is empty or whitespace-only

This behavior mapping ensures that every logical path in verify_api_key is tested, without requiring manual enumeration of test conditions.

4. Qodo-Generated Test File (test_main.py)

Once confirmed, Qodo automatically generates a test suite using Pytest conventions. The generated class TestVerifyApiKey covers both valid and invalid authentication paths. Each test includes assertions against FastAPI’s exception handling and leverages the TestClient for realistic request simulations.

import pytest
from fastapi import Depends, FastAPI, status
from fastapi.testclient import TestClient

from app.main import Settings, get_settings, verify_api_key


class TestVerifyApiKey:
    def test_verify_api_key_accepts_matching_key(self):
        cfg = Settings()
        assert verify_api_key(cfg=cfg, x_api_key=cfg.API_KEY) is None

    def test_verify_api_key_uses_overridden_settings_value(self):
        custom_key = "custom-secret-key-123"
        cfg = Settings(API_KEY=custom_key)
        assert verify_api_key(cfg=cfg, x_api_key=custom_key) is None

        with pytest.raises(Exception) as excinfo:
            verify_api_key(cfg=cfg, x_api_key="some-other-key")
        assert excinfo.value.status_code == status.HTTP_401_UNAUTHORIZED
        assert excinfo.value.detail == "Invalid or missing API key"

    def test_verify_api_key_reads_case_insensitive_header_alias(self):
        app = FastAPI()

        @app.get("/protected", dependencies=[Depends(verify_api_key)])
        def protected():
            return {"ok": True}

        client = TestClient(app)
        api_key = get_settings().API_KEY
        resp = client.get("/protected", headers={"x-api-key": api_key})
        assert resp.status_code == 200
        assert resp.json() == {"ok": True}

    def test_verify_api_key_missing_header_raises_401(self):
        cfg = Settings()
        with pytest.raises(Exception) as excinfo:
            verify_api_key(cfg=cfg)
        assert excinfo.value.status_code == status.HTTP_401_UNAUTHORIZED

    def test_verify_api_key_incorrect_value_raises_401(self):
        cfg = Settings()
        with pytest.raises(Exception) as excinfo:
            verify_api_key(cfg=cfg, x_api_key="incorrect-key")
        assert excinfo.value.status_code == status.HTTP_401_UNAUTHORIZED

    def test_verify_api_key_blank_or_whitespace_value_raises_401(self):
        cfg = Settings()
        for invalid_key in ["", " "]:
            with pytest.raises(Exception) as excinfo:
                verify_api_key(cfg=cfg, x_api_key=invalid_key)
            assert excinfo.value.status_code == status.HTTP_401_UNAUTHORIZED

Each test is deterministic, covers a distinct behavior, and can be executed independently as part of CI.

5. Continuous Integration and PR Analysis

After generation, the tests are committed as part of the pull request. When the /analyze command is added as a PR comment, Qodo’s PR bot analyzes all changes in the branch and correlates them to the affected functions, as shown below:

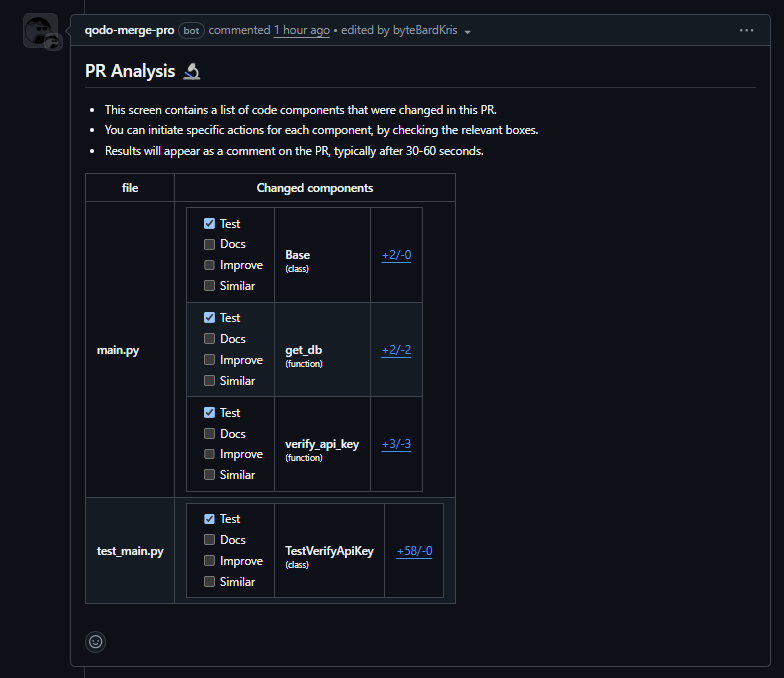

Qodo responded with the PR Analysis panel, which breaks down the modified components by file and type:

- main.py

  - Base: SQLAlchemy base class validation

  - get_db(): Database session lifecycle (yield + close)

  - verify_api_key(): API key authentication logic

- test_main.py

  - TestVerifyApiKey: Auto-generated test class with six behavioral tests

This mapping makes it immediately clear which parts of the service were updated, and whether they now have corresponding test coverage. It also eliminates guesswork during PR reviews, especially in multi-developer environments.

6. Review and Merge

Before merging, teams can review Qodo’s generated tests directly within the PR. If additional edge cases are identified, for instance, key rotation scenarios or conditional authentication rules, they can be refined inline. Qodo then regenerates only the impacted tests, ensuring no manual rewrites are needed.

The result is a consistent, low-friction workflow where:

- Tests evolve automatically with the codebase

- Behavioral coverage is validated before merging

- Reviewers have instant visibility into what changed and why

This approach transforms testing from a reactive step into a proactive quality gate, one that scales naturally as the system grows.

Best Practices for Using Qodo in Production Environments

Once you’ve integrated Qodo into your workflow and started generating tests, the next step is to make sure those tests remain useful, accurate, and maintainable as your code evolves. Below are some proven practices to get the most out of Qodo in production setups.

1. Keep Your Code Modular

Qodo performs best when your codebase follows a modular structure. The more isolated your logic, the easier it is for Qodo to understand and generate relevant, high-coverage tests.

Avoid large, multi-purpose functions that handle multiple responsibilities (e.g., processing payments, logging, and sending notifications all in one). Instead, split functionality into smaller, focused functions or classes. This improves test clarity and makes debugging far simpler.

Example:

Instead of:

def process_payment(order, amount, currency):
    log_transaction(order, amount)
    # further logic here

Use:

def process_payment(order, amount, currency):
    # handle payment logic
    pass


def log_transaction(order, amount):
    # handle logging logic
    pass

When code is modular, Qodo can generate more precise tests that target individual units of work rather than large, ambiguous workflows.

2. Maintain a Consistent Test Structure

Keep your test files well-organized and named clearly. This not only helps your team locate tests quickly but also helps Qodo automatically identify where to place new ones.

Follow a simple, predictable convention:

tests/
    test_payments.py
    test_users.py
    test_authentication.py

Each test file should mirror the corresponding service or module it validates. Consistency makes it easier to scale your test suite as your microservices grow.
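One lightweight way to keep this convention honest is a script that lists modules with no mirrored test file. The sketch below assumes a flat layout where `payments.py` maps to `tests/test_payments.py`; the function name and the directory arguments are illustrative, and nested packages would need a recursive variant.

```python
from pathlib import Path


def modules_missing_tests(src_dir, tests_dir):
    """Return module names in src_dir that have no tests_dir/test_<name>.py file."""
    missing = []
    for module in sorted(Path(src_dir).glob("*.py")):
        if module.name == "__init__.py":
            continue  # package markers do not need their own test file
        if not (Path(tests_dir) / f"test_{module.stem}.py").exists():
            missing.append(module.stem)
    return missing
```

Running it in CI and failing when the returned list is non-empty turns the naming convention into an enforced rule instead of a guideline.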

3. Enforce Coverage Gates in CI/CD

Integrate coverage checks directly into your CI/CD pipelines. This ensures that all new pull requests maintain or improve overall test coverage.

For example, in GitHub Actions, you can enforce an 80% minimum coverage threshold:

name: Run Unit Tests
on: [push, pull_request]
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: "3.x"
      - name: Install dependencies
        # Assumes requirements.txt includes pytest and coverage.
        run: pip install -r requirements.txt
      - name: Run tests
        # Run pytest under coverage so the next step has data to report on.
        run: coverage run -m pytest --maxfail=1 --disable-warnings -q
      - name: Check coverage
        run: coverage report --fail-under=80

With Qodo generating tests automatically for new or modified files, these coverage gates act as a safety net, ensuring that important code paths are never left untested.

4. Monitor Test Quality Over Time

Automated test suites can degrade as codebases evolve. Some tests may break with refactors (test rot), while others become redundant or flaky. Regularly review your suite to keep it healthy.

Watch for:

- Flaky tests: Intermittent failures often signal poor isolation or external dependencies.

- Redundant tests: Overlapping tests waste CI time and make debugging harder.

- Coverage gaps: Identify untested code and prompt Qodo to generate additional tests for those areas.

Schedule periodic cleanups (e.g., monthly or quarterly) to prune outdated or low-value tests.
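Flaky tests are the easiest of these to detect mechanically: run the same test several times and flag any that both pass and fail. The sketch below is a simplified illustration with hypothetical names (`find_flaky`, `make_order_dependent_test`), and it only treats `AssertionError` as a failure.

```python
def find_flaky(tests, runs=20):
    """Run each zero-argument test `runs` times; report those with mixed outcomes."""
    flaky = []
    for name, test in tests.items():
        outcomes = set()
        for _ in range(runs):
            try:
                test()
                outcomes.add("pass")
            except AssertionError:
                outcomes.add("fail")
        if len(outcomes) > 1:
            flaky.append(name)
    return flaky


def stable_test():
    assert sorted([3, 1, 2]) == [1, 2, 3]


def make_order_dependent_test():
    """Build a test that alternates pass/fail, simulating leaked shared state."""
    state = {"calls": 0}

    def test():
        state["calls"] += 1
        assert state["calls"] % 2 == 1  # fails on every second run

    return test
```

Here `find_flaky({"stable": stable_test, "leaky": make_order_dependent_test()})` flags only `leaky`, whose result depends on state left over from the previous run. That is the same isolation problem the first bullet describes.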

5. Use Qodo’s Post-Merge Features

After merging tests, use Qodo’s post-merge commands like /cleanup and /docs to maintain quality and consistency.

- /cleanup: Removes outdated or unused tests after major refactors, helping prevent dead code accumulation.

- /docs: Updates test docstrings and comments, ensuring new contributors can easily understand the intent behind each test.

These commands help keep your test suite aligned with your latest codebase, especially in fast-moving teams where multiple developers push frequent changes.

6. Periodically Reassess Test Coverage

As your system grows, so should your tests. Run coverage audits periodically to confirm that critical paths, like payment processing, authentication, and data validation, remain well-tested.

FAQs

1. During which testing phase can automated tools be used?

Automated tools can be used during several phases of the testing lifecycle. They are most commonly used during the unit testing phase, but can also support integration testing, regression testing, and performance testing. The key advantage of automation is its ability to run tests frequently and consistently, ensuring early detection of issues.

In agile environments, automated tests should be written early, typically as soon as the code is developed. This approach ensures continuous feedback and faster detection of issues through each sprint.

2. When to start automation testing in Agile?

In Agile, automation testing should ideally start as early as possible in the development process. The best time to begin is as soon as the first feature or piece of code is developed and integrated. Early automation helps to keep the codebase stable and ensures that tests are continuously run as part of the CI/CD pipeline.

Starting with unit tests is a common approach. As the application matures, more complex tests, like integration and end-to-end tests, can be automated, supporting the agile focus on iterative and incremental development.

3. What is unit testing automation?

Unit testing automation refers to using tools and frameworks to automatically create, execute, and report results for unit tests. These tests verify that individual components or functions in the application behave as expected.

By automating unit testing, you reduce human error, speed up the testing process, and ensure that tests are consistently executed as part of your CI/CD pipeline, every time changes are made to the code.

4. Which tool is used for unit testing?

There are several popular tools available for unit testing, depending on the programming language and environment. Some of the commonly used ones include:

- Qodo AI: A tool that automates the generation of unit tests, simplifying the process of writing and maintaining tests directly within your development environment.

- Pytest (for Python): A flexible and powerful testing framework that supports both simple and complex test scenarios.

- JUnit (for Java): A widely used framework for unit testing in Java applications.

- Mocha (for JavaScript/Node.js): A feature-rich testing framework that supports asynchronous testing.

- RSpec (for Ruby): A behavior-driven testing framework for Ruby applications.

Each of these tools plays a key role in ensuring the stability and quality of your application by automating the testing process, especially in large and complex systems.