Custom RAG pipeline for context-powered code reviews

Reviewing and managing pull requests (PRs) takes time and attention, and it directly affects both team output and code reliability. In enterprise environments, where teams are large and codebases are complex, this process becomes even more demanding. Developers are often responsible for reviewing code they didn’t write, with limited context, under tight timelines. Reviewers are expected to catch critical issues before changes are merged, even when they lack insight into design decisions or broader constraints.

To meet this challenge, we believe AI assistance is essential. That’s why we’re excited that Qodo Merge now supports Retrieval-Augmented Generation (RAG) across several review workflows.[1, 2]

But this raised an important question: what should the scope of context be for an AI tool that reviews a PR? The tool clearly needs to see the entire PR and its associated files, but how far beyond that should the context extend, and how? In this post, we share why we chose RAG, how we implemented it, what worked (and what didn’t), and where it is currently used.

Why Use RAG?

No matter how good an AI model is, it suffers from a critical limitation when used for coding in large-scale environments: it lacks awareness of your specific code patterns, naming conventions, and other characteristics of your codebase. As a result, its suggestions may not align with your established practices. They may be syntactically or even semantically correct, yet still fail to fit the logic unique to your codebase, introducing friction and bugs down the line.

RAG helps address this limitation by combining the generative capabilities of large language models with targeted retrieval of code references from your repository. This offers several potential advantages:

- Context-Aware Responses: RAG helps the AI recognize and follow your repository’s specific coding patterns, naming conventions, and architectural approaches, rather than suggesting generic implementations.

- Up-to-Date Knowledge: RAG can reference the current state of your repository’s code, allowing it to work with your latest implementations rather than being limited to what it learned during training.

- Better Code Suggestions: The ability to find and reference similar implementations within your repository can lead to more consistent code suggestions.

- Knowledge Discovery: Team members can benefit from AI that can provide relevant code examples, helping surface established code patterns.

How We Implemented RAG

Our implementation began with setting up and indexing the company codebase, which serves as the foundation for RAG functionality, and developing the infrastructure to connect it with Qodo Merge. The process involved several key steps:

1. Codebase Indexing and Infrastructure

We created a semantic database of our entire codebase using our in-house state-of-the-art intelligent chunking strategies.[3, 4] Our approach combines language-specific static analysis for code chunking with a code embedding model that improves natural language-to-code understanding and enhances context retrieval. At the time of writing, this embedding model is a leading open-source model on the CoIR (Code Information Retrieval) benchmark.
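To illustrate what language-specific static analysis for chunking can look like, here is a minimal Python sketch that uses the standard `ast` module to split a source file into one chunk per function, class, and method. It is not the in-house chunking strategy described above: the `CodeChunk` type and the `chunk_python_source` function are hypothetical names introduced for this example, and the embedding and indexing steps are omitted.

```python
import ast
from dataclasses import dataclass


@dataclass
class CodeChunk:
    name: str        # qualified name, e.g. "Cart.total"
    kind: str        # "function" or "class"
    start_line: int  # 1-based, inclusive
    end_line: int    # 1-based, inclusive
    text: str        # source text of the chunk


def chunk_python_source(source: str) -> list[CodeChunk]:
    """Split Python source into chunks along syntactic boundaries.

    Hypothetical helper: emits one chunk per top-level function,
    per class, and per method defined directly inside a class.
    """
    tree = ast.parse(source)
    lines = source.splitlines()
    chunks: list[CodeChunk] = []

    def add(node, qualified_name: str, kind: str) -> None:
        # lineno/end_lineno are 1-based and inclusive.
        text = "\n".join(lines[node.lineno - 1 : node.end_lineno])
        chunks.append(
            CodeChunk(qualified_name, kind, node.lineno, node.end_lineno, text)
        )

    function_types = (ast.FunctionDef, ast.AsyncFunctionDef)
    for node in tree.body:
        if isinstance(node, function_types):
            add(node, node.name, "function")
        elif isinstance(node, ast.ClassDef):
            add(node, node.name, "class")
            for child in node.body:
                if isinstance(child, function_types):
                    add(child, f"{node.name}.{child.name}", "function")
    return chunks


SAMPLE = '''import math

def area(r):
    return math.pi * r * r

class Cart:
    def total(self, items):
        return sum(items)
'''

if __name__ == "__main__":
    for chunk in chunk_python_source(SAMPLE):
        print(chunk.kind, chunk.name, chunk.start_line, chunk.end_line)
```

Chunking along syntactic boundaries keeps each unit self-contained, so every chunk that is later embedded corresponds to a complete function or class rather than an arbitrary window of lines.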

2. RAG Configuration

To configure RAG within Qodo Merge, we introduced two new parameters. The first enables RAG within the workflow, and the second defines which repositories are taken into account:

    [rag_arguments]
    enable_rag=true # Enable RAG
    rag_repo_list=["my-org/my-repo", ...] # List of repositories to filter by

3. Optimization

We committed to an in-depth process of refining how the system generates natural language queries from PR contexts, how it post-processes the results, and how it keeps their content relevant (see the following section for further detail).

Selected Challenges and Their Solutions

- Query pre-processing: Query content directly affects which code snippets are found, so it is important to optimize. A query can include text, code examples, and more. We therefore tailor query construction to each tool, based on its specific contextual requirements and the information available at the moment of execution.

- Output post-processing: Initial semantic search results often lack precision, so we introduced a re-ranking step that evaluates and prioritizes them by relevance.

- Filtering noise: As codebases grow, semantic search quality deteriorates. We improved this by filtering search results to match the PR’s programming language, excluding code already present in the PR, and ensuring diverse examples from different components to avoid overfitting.
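The post-processing and noise-filtering steps above can be sketched roughly as follows. This is an illustrative Python example under stated assumptions, not the Qodo Merge implementation: the `Hit` type, the `postprocess` function, its parameters, and the idea of a per-component cap are all hypothetical, and the `score` field is assumed to have been produced already by some re-ranking model.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    path: str       # file path of the retrieved snippet
    language: str   # programming language of the snippet
    component: str  # hypothetical grouping, e.g. top-level package
    text: str       # snippet source text
    score: float    # assumed re-ranker relevance score (higher is better)


def postprocess(hits, pr_language, pr_snippets, max_per_component=1, top_k=3):
    """Filter and order retrieved snippets before they reach the prompt."""
    pr_texts = {s.strip() for s in pr_snippets}

    # Keep only hits in the PR's language that do not duplicate PR code.
    candidates = [
        h for h in hits
        if h.language == pr_language and h.text.strip() not in pr_texts
    ]

    # Order by the (assumed) re-ranker score, most relevant first.
    candidates.sort(key=lambda h: h.score, reverse=True)

    # Enforce diversity by capping how many hits each component contributes.
    per_component = {}
    selected = []
    for h in candidates:
        count = per_component.get(h.component, 0)
        if count >= max_per_component:
            continue
        per_component[h.component] = count + 1
        selected.append(h)
        if len(selected) == top_k:
            break
    return selected


HITS = [
    Hit("billing/retry.py", "python", "billing", "def retry(): ...", 0.91),
    Hit("billing/backoff.py", "python", "billing", "def backoff(): ...", 0.88),
    Hit("auth/retry.py", "python", "auth", "def retry_login(): ...", 0.75),
    Hit("web/retry.ts", "typescript", "web", "function retry() {}", 0.95),
    Hit("billing/charge.py", "python", "billing", "def charge(): ...", 0.99),
]

if __name__ == "__main__":
    kept = postprocess(HITS, "python", pr_snippets=["def charge(): ..."])
    print([h.path for h in kept])
```

In this toy data, the TypeScript hit is dropped for its language, the `charge` snippet is dropped because it already appears in the PR, and the second `billing` hit is dropped by the per-component cap, leaving one example each from `billing` and `auth`.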

RAG-Enriched Qodo Merge Applications

Qodo Merge performs RAG on the repository where the PR is opened by default, since this repository typically contains the most relevant context, components, and coding patterns for the proposed code changes. Users can extend this functionality by configuring RAG to search across additional repositories, providing a more comprehensive context for the analysis.

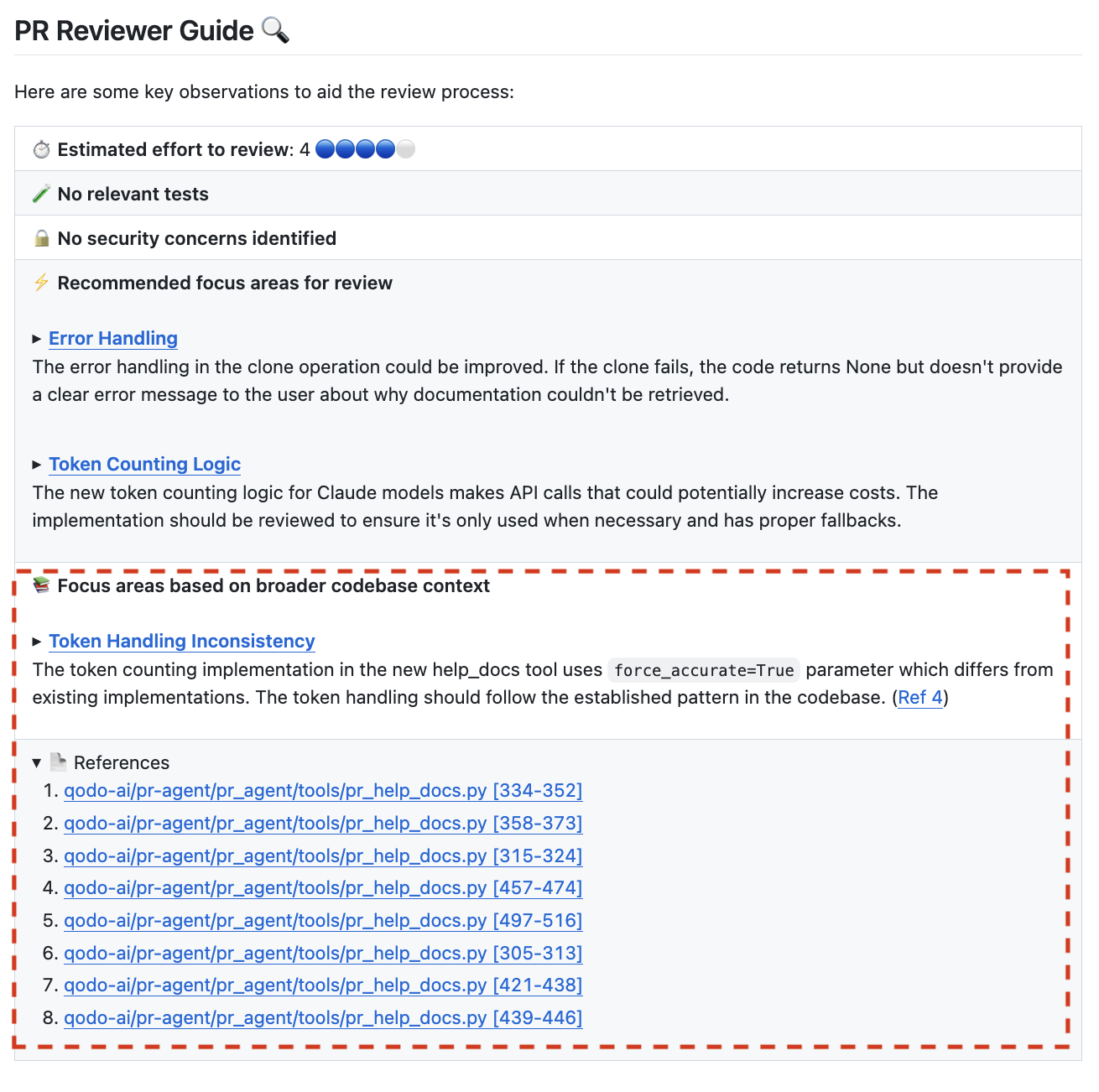

1. ‘Review’ Command with RAG

Our PR review process now incorporates context from similar implementations across the PR’s repository, enabling the system to make more informed recommendations about potential issues and best practices specific to our architecture. The existing section (Recommended focus areas for review) allows the model to analyze PR diffs using its intrinsic knowledge. To prevent confusion and avoid mixing analysis approaches, a new dedicated section (Focus areas from RAG data) was added where the model applies the same analytical capabilities to RAG-retrieved similar codebase context, enabling it to find additional issues based on comparable code patterns. In addition, reviews now include references to relevant code, helping reviewers understand the broader context by exploring the provided references.

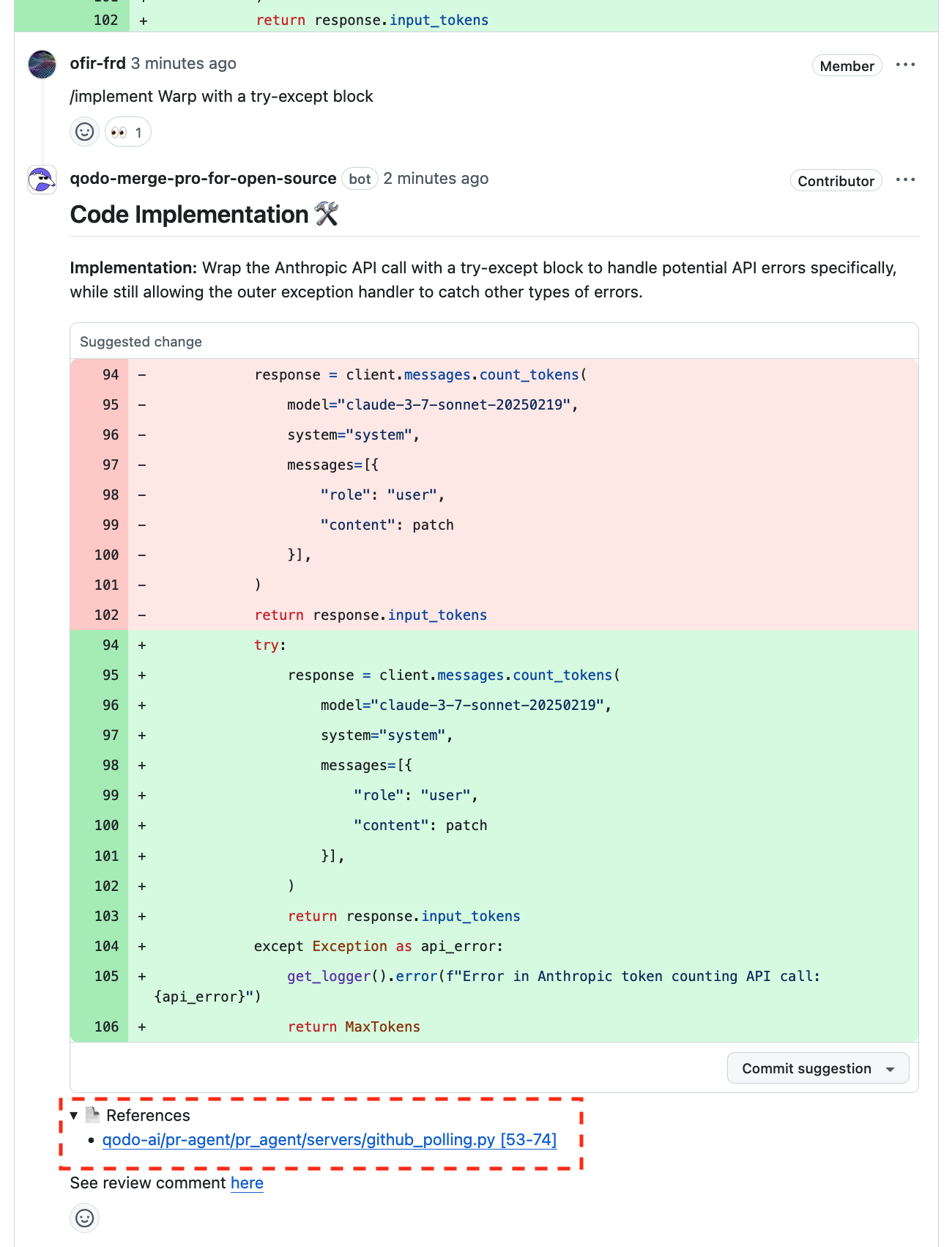

2. ‘Implement’ Command with RAG

When implementing suggestions from code reviews, the system now retrieves code examples drawn from our existing code patterns. This is particularly valuable for maintaining consistency in architectural patterns and cross-cutting concerns such as error handling and logging. As a result, the implementation suggestions are more directly applicable and require less modification.

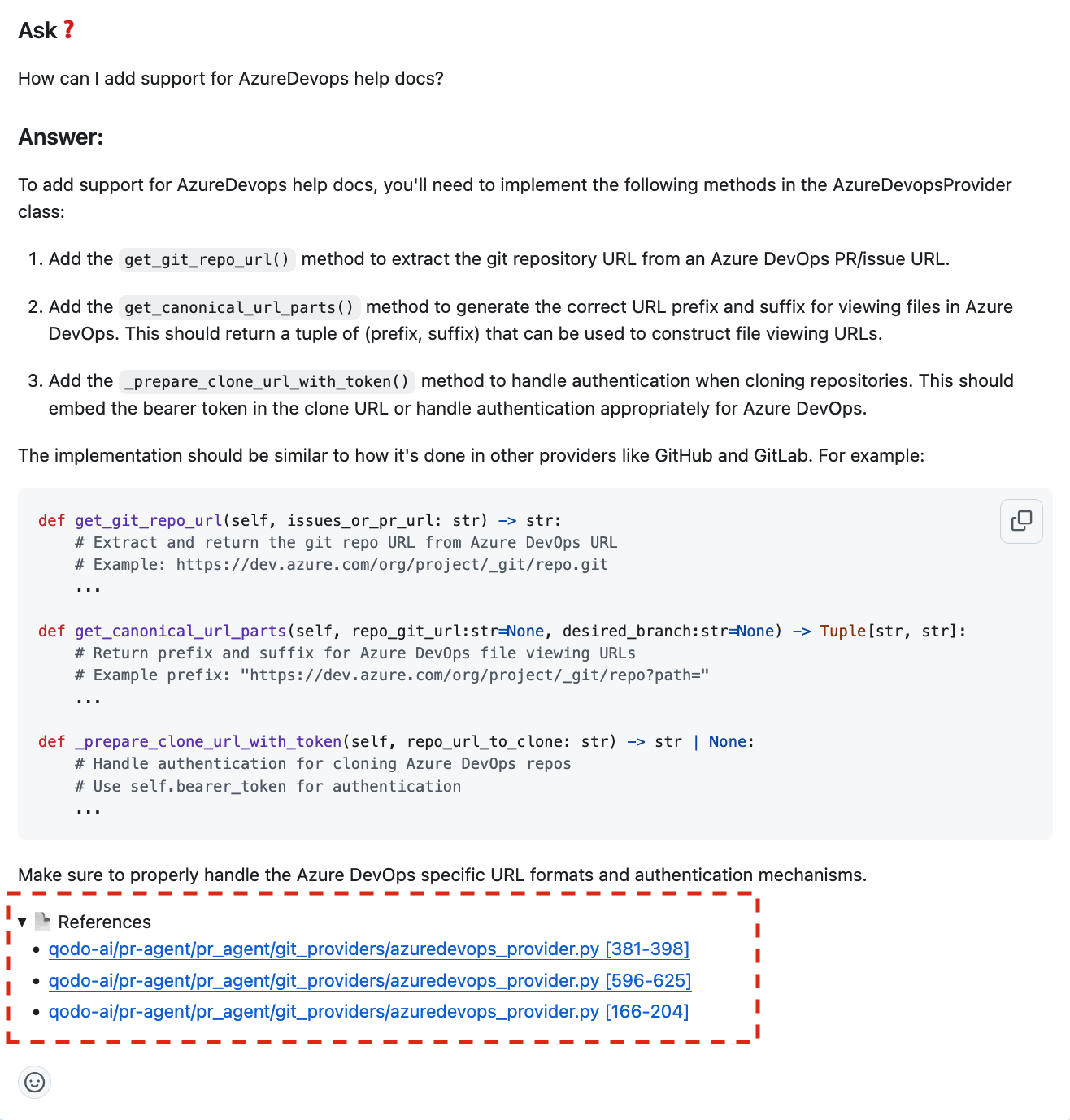

3. ‘Ask’ Command with RAG

The ability to ask questions about the PR in free text now benefits from broader codebase awareness. Developers can inquire about implementation approaches, and the system will provide answers informed by similar solutions elsewhere in the organization. This capability is relevant, for example, for exploring code patterns in the repository, onboarding new team members, and addressing any questions that cannot be answered by the PR content alone.

Outlook

Applying RAG context enrichment to Qodo Merge tools has improved how well they adhere to the company’s internal style, best practices, and more. Looking forward, we continue to explore opportunities for improvement. Future developments will include expanding the set of tools we enrich with RAG, R&D on better noise-reduction logic, improving RAG content, and refining prompts. We aim to move fast with confidence to extend Qodo Merge context beyond the PR realm while improving existing capabilities and performance.