AI Code Review Tools Compared: Context, Automation, and Enterprise Scale

TL;DR

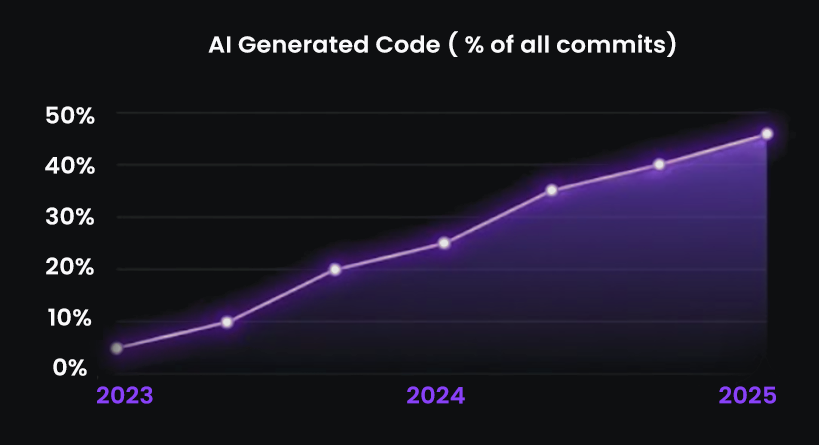

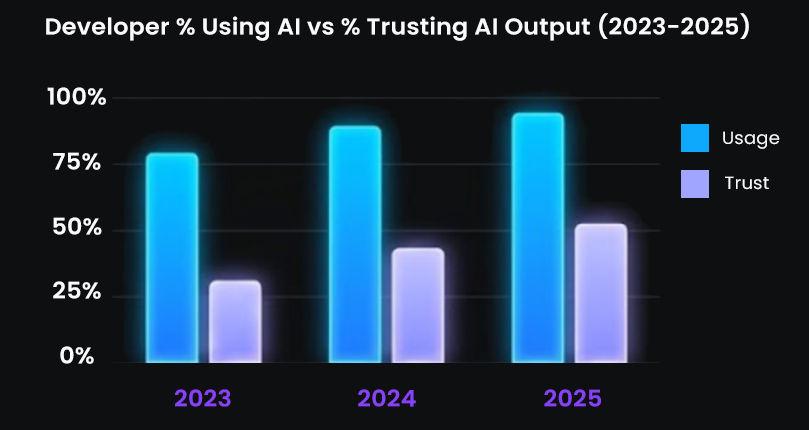

- AI coding tools reached mass adoption in 2025: Monthly pushes passed 82M, merged PRs hit 43M, roughly 41% of commits were AI-assisted, and 84% of developers adopted AI coding tools, a scale jump that reshaped how engineering teams generate and review code.

- Review processes can’t keep pace with AI-generated code volume: Organizations now generate more parallel, cross-repo changes than human reviewers can reliably validate. As teams move into 2026, review throughput, not implementation speed, determines safe delivery velocity.

- Senior engineers are buried in validation work: Engineering leaders report larger PRs touching multiple architectural surfaces, growing merge queues, regressions appearing across shared libraries and services, and senior engineers spending more time validating AI-authored logic than shaping system design.

- Most AI review tools lack enterprise-critical capabilities: The tools evaluated in this guide (Qodo, GitHub Copilot Review, Amazon Q, CodeRabbit, Codex, and Snyk Code) each support different aspects of the workflow. Some focus on diff-level patterns, others on cloud correctness or security, but most lack persistent multi-repo context and do not validate changes against Jira/ADO intent. Both capabilities have become critical as AI expands the scope of each PR.

- Enterprise review systems need five key capabilities: Meeting 2026 requirements now demands review systems with system-aware reasoning, ticket-aligned validation, enforceable standards, automated PR workflows, and governance models suited for large, distributed engineering organizations operating across many repos and languages.

- Qodo drives enterprise-scale AI code review: Qodo handles this shift through a persisted Codebase Intelligence Engine, 15+ automated PR workflows, and enterprise-grade deployment options (VPC/on-prem/zero-retention, SOC2/GDPR). Organizations adopt Qodo to achieve predictable PR cycles, fewer cross-repo regressions, reduced senior reviewer load, and review capacity that scales with AI-driven development velocity.

AI reshaped software engineering in 2025. Monthly code pushes climbed past 82 million, merged PRs reached 43 million based on GitHub’s latest Octoverse numbers, and 41% of new code originated from AI-assisted generation. AI adoption reached 84% of all developers, marking the fastest acceleration of code creation the industry has ever recorded.

This acceleration is signaling a shift for teams as 2026 approaches: AI is becoming the primary driver of development velocity, and review capacity is emerging as the limiting factor for delivery performance.

“At some point you realize you can get a thousand lines of code in five minutes. Then what? You have to establish processes and code review tools that make that volume safe and productive.”

— Itamar Friedman, CEO & Co-founder, Qodo

Organizations with 100-10,000+ developers now generate more code, more parallel change streams, and more cross-repo interactions than traditional review processes can absorb. PRs continue to increase in size and complexity, merge queues are growing across teams, cross-repo regressions are occurring more frequently, and senior engineers are spending significant time validating AI-generated logic.

Engineering leaders across the industry now recognize this shift as the defining constraint entering 2026: review throughput defines the ceiling on engineering velocity.

Diff-level review, file-by-file reasoning, and static analysis cannot keep pace with AI-accelerated development or the architectural awareness required across large, multi-repo systems.

This guide outlines how engineering organizations can prepare for 2026 and beyond, through context-rich review systems, automated workflows, ticket alignment, and governance models designed for AI-scaled development.

Best AI code review tools in 2026

What is AI Code Review?

AI code review is an automated review layer that evaluates code changes for correctness, safety, scope alignment, and architectural impact before a human reviewer ever opens the PR. It acts as the first reviewer in the delivery pipeline, handling the repetitive, mechanical, and context-dependent checks that slow teams down, so engineers can focus on decisions that require judgment and system awareness.

Engineering teams generate more churn than humans can reliably inspect: AI-authored changes, large refactors across shared libraries, parallel updates across services, and dozens of small commits that interact in non-obvious ways. An AI reviewer absorbs this operational load, reduces noise, and anchors each review in the intent of the change rather than the raw diff.

Three Core Responsibilities of Enterprise AI Code Review

1. Perform reliable, context-aware checks early in the pipeline

An effective reviewer evaluates structural and behavioral patterns in the surrounding codebase in addition to syntax. It should reason about:

- imports, related modules, and shared libraries

- service boundaries and data flows

- test conventions and infra configuration

- lifecycle behavior and initialization sequences

This allows the system to detect issues that slip through in fast-moving repos (missing awaits, unsafe permission updates, brittle error handling, hard-coded secrets, inconsistent patterns) while avoiding the false positives that plague static tools. When the reviewer understands the actual architecture, its findings resemble comments from someone familiar with how the team builds software.

2. Validate scope and intent, not just syntax

Most PR delays come from discrepancies between the code and the ticket, so an effective AI reviewer needs to read the associated Jira or Azure DevOps ticket, understand the requested change, and compare that requirement to the diff.

That makes it possible to flag problems that humans typically catch late:

- changes that drift beyond the requested scope

- edge cases described in the ticket but missing in the implementation

- code paths modified without corresponding test updates

- PRs that solve a different problem than the one documented

This is the difference between “helpful suggestion” and “useful reviewer.”
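The scope checks listed above can be sketched as a comparison between what a ticket allows and what a PR actually touches. This is a minimal illustration, not how any particular tool works: the `Ticket` and `PullRequest` shapes, the `allowedPaths` field, and the ticket ID are all hypothetical, and a real system would derive them from Jira/ADO and the version control system.

```typescript
// Hypothetical shapes: real ticket and diff data would come from Jira/ADO and the VCS.
interface Ticket {
  id: string;
  allowedPaths: string[];       // path prefixes the ticket is expected to touch
  acceptanceCriteria: string[]; // short labels for each criterion
}

interface PullRequest {
  changedFiles: string[];
  testedCriteria: string[];     // criteria that tests in the PR claim to cover
}

interface ScopeReport {
  outOfScopeFiles: string[];
  uncoveredCriteria: string[];
}

function checkScope(ticket: Ticket, pr: PullRequest): ScopeReport {
  // Files that match none of the ticket's path prefixes count as scope drift.
  const outOfScopeFiles = pr.changedFiles.filter(
    (file) => !ticket.allowedPaths.some((prefix) => file.startsWith(prefix))
  );
  // Acceptance criteria with no matching test are reported as uncovered.
  const uncoveredCriteria = ticket.acceptanceCriteria.filter(
    (criterion) => pr.testedCriteria.indexOf(criterion) === -1
  );
  return { outOfScopeFiles, uncoveredCriteria };
}

const scopeReport = checkScope(
  {
    id: "PAY-101", // hypothetical ticket ID
    allowedPaths: ["services/billing/"],
    acceptanceCriteria: ["refund", "partial-refund"],
  },
  {
    changedFiles: ["services/billing/refund.ts", "libs/auth/token.ts"],
    testedCriteria: ["refund"],
  }
);
console.log(scopeReport);
```

Here the change to `libs/auth/token.ts` is reported as out of scope and `partial-refund` as uncovered, which mirrors the first two bullets in the list.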

3. Integrate with the delivery workflow and influence outcomes

A reviewer adds value when it shortens the path from commit to merge. To do that, AI needs to operate in the same places where engineers work:

- IDE: provide a pre-PR self-review step that removes trivial issues before a branch ever hits CI.

- CI: run structured checks and expose actionable findings instead of noisy threads of comments.

- PR workflow: support gating, risk scoring, missing-test detection, and automatic approvals when the change is low-risk and aligns with the ticket.

When these layers work together, reviewers spend less time triaging nits and more time assessing architecture, trade-offs, and the implications of a change. Developers also receive clearer signals: what must be fixed, what’s optional, and what is safe to merge.

How AI Code Review Reshapes the Software Review Process

A well-governed AI code review system preserves human ownership of the merge button while raising the baseline quality of every PR, reducing back-and-forth, and ensuring reviewers only engage with work that genuinely requires their experience. It handles the routine operational load so the team’s attention can shift upward, to correctness, resilience, and long-term maintainability.

The Problem: Why PR Review Is the Pipeline Bottleneck

In 2025, AI accelerated work across the software delivery pipeline, from planning and implementation through build, test, and deployment. Copilots and code-generation tools now participate in:

- Implementation: generating full functions, classes, controllers, handlers, React components, Terraform modules, and test suites.

- Refactors: large-scale changes across microservices, shared libraries, design systems, and internal SDKs.

- Operational code paths: infrastructure-as-code, CI/CD definitions, feature flags, and runtime configuration.

For organizations with multiple developers working across many repositories, development throughput increased sharply while PR review capacity remained flat. That imbalance now shows up in several specific ways.

1. PRs expanded in scope

AI-assisted changes often touch multiple layers in a single PR:

- Backend microservice handlers plus DTOs and validation layers

- Shared libraries used by multiple services or frontends

- React/Vue components plus hooks, context providers, and test utilities

- Terraform or CloudFormation stacks plus application code that relies on them

A PR that previously modified a single controller now updates:

- API handlers

- ORM models

- background jobs

- metrics and logging

- related tests

Reviewers must understand end-to-end behavior across services and layers, not just a localized edit.

2. Review queues grew, even in well-run teams

With more AI-generated changes per developer, PR arrival rates increased for:

- Microservices (auth, billing, notifications, search)

- Edge APIs and gateways

- Internal platforms (design systems, internal SDKs, platform CLIs)

Senior engineers frequently carry 15–25 open PRs that require:

- Reconstructing request/response flows across services

- Checking compatibility with shared contracts (Protobuf/GraphQL/OpenAPI)

- Verifying background processes, queues, and scheduled jobs

Each PR demands reloading a complex mental model of the system. That reload cost dominates review time.

3. Cross-repo and cross-service regressions increased

Enterprise systems rarely live in a single repo. Typical environments include:

- Dozens to hundreds of microservices (Kubernetes, ECS, serverless)

- Shared libraries and packages (NPM/PNPM/Yarn, Maven/Gradle, internal PyPI/Gem/Bundler registries)

- Infrastructure repos (Terraform, Pulumi, CloudFormation)

- Frontends (multiple SPAs or mobile clients consuming shared APIs)

AI-generated changes in one place can easily misalign with:

- A shared TypeScript or Java SDK used by several services

- A billing or entitlement service that enforces invariants

- An authentication/authorization layer that expects specific claims or scopes

- Reporting jobs or analytics pipelines that rely on event shapes

Without cross-repo awareness, reviewers miss schema changes, contract drift, and lifecycle assumptions that only appear when multiple repos interact in production.
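To make "contract drift" concrete, here is a minimal sketch of a check that compares two versions of a shared event schema and reports changes that would break existing consumers. The `Schema` type and the field names are assumptions for illustration; real contracts would be Protobuf, GraphQL, or OpenAPI definitions with richer rules.

```typescript
type FieldType = "string" | "number" | "boolean";
type Schema = Record<string, FieldType>;

// Reports removed fields and changed types; added fields are treated as non-breaking.
function findBreakingChanges(previous: Schema, next: Schema): string[] {
  const problems: string[] = [];
  for (const field of Object.keys(previous)) {
    if (!(field in next)) {
      problems.push(`removed field: ${field}`);
    } else if (previous[field] !== next[field]) {
      problems.push(`type changed: ${field} (${previous[field]} -> ${next[field]})`);
    }
  }
  return problems;
}

// Hypothetical event shape published by one repo and consumed by others.
const eventV1: Schema = { userId: "string", amount: "number", currency: "string" };
const eventV2: Schema = { userId: "string", amount: "string", region: "string" };

console.log(findBreakingChanges(eventV1, eventV2));
```

A diff inside the producing repo would show only an edited type definition; the breakage becomes visible only when the old and new shapes are compared from the consumer's point of view.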

4. Ticket intent became harder to validate

AI speeds up implementation, but work items still define truth:

- Jira/ADO tickets describe scope, acceptance criteria, edge cases, and non-functional requirements.

- AI tends to “fill in” missing details, which may diverge from what product or architecture teams intended.

Reviewers now spend more time on questions like:

- Does this PR actually implement the ticket, or did it introduce adjacent changes?

- Are all acceptance criteria covered in code and tests?

- Did the PR modify behavior in services that the ticket never mentioned?

When PRs touch multiple services or shared libraries, mapping the diff back to the ticket becomes a heavy cognitive task.

5. Senior engineers absorbed the majority of the validation load

Because AI can generate syntactically correct but semantically wrong code, senior engineers act as:

- Architectural gatekeepers: checking service boundaries, data ownership, and invariants.

- Runtime correctness reviewers: verifying async flows, retries, idempotency, and error propagation.

- Governance guardians: ensuring logs, metrics, and security controls follow org patterns.

Their calendars shift from:

- Designing new systems and simplifying architectures

- Driving platform and reliability work

Toward:

- Triage of large AI-authored PRs

- Explaining standards repeatedly in review comments

- Debugging regressions introduced by seemingly harmless changes

This shift reduces the strategic impact of the most experienced engineers.

“When you work with Claude Code to generate the code, effectively you’re code-reviewing Claude’s code. He’s writing the code and you’re reviewing it. And when you open the PR and I come to review it, I’m actually reviewing the person who did the code review. This process did not exist back in the day… Something has changed drastically—now we’re reviewing how people supervise AI, not just the handwritten code.”

— Lotem Fridman, Head of Internal AI, monday.com

6. Governance and risk management remained downstream

Most organizations still enforce:

- Security checks (SAST/DAST, secret scanning)

- Compliance rules (SOC 2, ISO 27001, PCI, HIPAA)

- Policy enforcement (RBAC, PII handling, data residency)

late in the CI/CD or audit stages, not at PR creation. That pattern leads to:

- Late-stage pipeline failures

- Expensive clean-up work

- Production incidents tied to misconfigurations or missed standards

In an AI-accelerated environment, late discovery scales cost and risk faster than teams can absorb.

7. Traditional tools lack system-level reasoning

Existing categories each solve a narrow slice:

- IDE copilots: excel at suggesting code inside a single file or project; they lack visibility into multiple repos, runtime interactions, or ticket context.

- PR summarizers: describe what changed in the diff; they rarely evaluate whether the change is safe for the broader system.

- SAST/linters: detect patterns and vulnerabilities in files; they do not reason about initialization order, distributed transactions, or multi-service workflows.

None of these tools maintains a persisted model of the entire codebase and its dependencies across repos, environments, and workflows. That gap explains why review remains the bottleneck.

The Operational Reality: Review Throughput Now Governs Delivery Velocity

In late 2025, AI began driving a large share of code creation across enterprise engineering teams. As organizations prepare for 2026, one operational fact is clear:

- AI increases the rate of code production.

- PR review capacity controls the rate of safe code delivery.

This creates a measurable constraint for engineering leaders: review throughput now defines the maximum velocity an organization can sustain.

Even with faster development, stronger tooling, and expanded CI/CD automation, delivery slows when review systems cannot absorb PR volume, evaluate cross-repo impact, or enforce standards consistently. When review becomes saturated, cycle times extend, risk increases, and system reliability declines.

The Non-Negotiable Requirements for AI Code Review Tools in 2026

- Architectural Context That Understands the Entire System

- Validation Against Jira / ADO Requirements, Not Just Code Patterns

- Runtime-Aware Reasoning That Detects Failures Hidden Beyond the Diff

- Security and Compliance Enforcement at the PR Level

- High-Signal Findings That Behave Like a Senior Engineer, Not a Linter

- Centralized Enforcement of Engineering Standards Across All Repos

- Unified Workflow Coverage Across the Entire Development Loop

- Enterprise-Grade Governance and Deployment Controls

- Predictable, Low-Latency Analysis at Scale

- Automated Handling of Low-Risk Changes, With Human Oversight for High-Risk Work

As 2025 draws to a close, engineering organizations are operating under conditions different from any previous cycle. AI-assisted development now accounts for roughly 41% of all committed code, global PR activity has surged, and leaders frequently report that review capacity, not developer output, is the limiting factor in delivery. At the same time, adoption of AI tools accelerated, yet trust in AI-generated code remained low across major surveys.

These signals make it clear that in 2026, review quality, requirement alignment, and governance now determine engineering velocity. The capabilities below outline what AI review systems of 2026 must provide to meet that shift.

1. Architectural Context That Understands the Entire System

As AI tools expanded the volume and complexity of code changes, each pull request began touching a broader range of architectural layers. Enterprise systems include microservices, internal SDKs shared across teams, event processors, API gateways, background workers, and infrastructure modules. Diff-only review tools cannot detect when a small modification silently affects authentication paths, breaks API contracts, alters event semantics, or introduces lifecycle bugs.

This expanding architectural impact is directly tied to the increasing volume of AI-generated code.

For review to be reliable, AI systems must maintain persistent, cross-repo context, including dependency graphs, shared libraries, interface contracts, lifecycle behavior, and service boundaries. Without this context foundation, correctness cannot scale.

2. Validation Against Jira / ADO Requirements, Not Just Code Patterns

In 2025, the majority of costly mistakes stemmed from PRs that looked clean but failed to satisfy business requirements. AI-generated implementations frequently covered the “happy path” but omitted edge cases, acceptance criteria, negative scenarios, or constraints buried in the ticket. This mismatch caused review cycles to drag longer and shipped features to behave unpredictably in production.

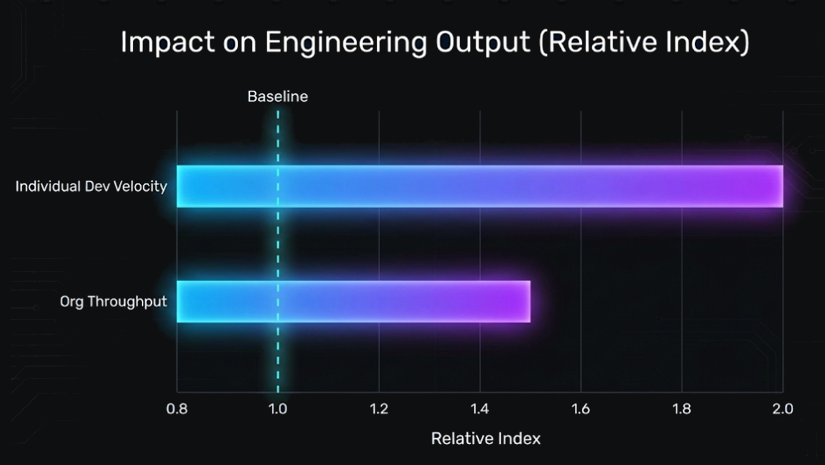

The data clearly shows the root cause: individual developer velocity increased, but organizational throughput did not.

To close this gap, AI review must read and interpret Jira/ADO tickets, understand the acceptance criteria, and evaluate whether the implementation fulfills the requirement. This level of alignment cannot be left to human reviewers alone when AI increases the velocity of code creation.

3. Runtime-Aware Reasoning That Detects Failures Hidden Beyond the Diff

Logic flaws increased sharply in AI-authored changes, especially in async control flow, retry logic, background tasks, caching layers, and event-driven consumers. These issues do not appear in the diff and cannot be found through file-level pattern matching. They show up only when testing how the code executes across distributed services.

For example, a change to retry semantics in a shared internal SDK can cause duplicate writes, out-of-order event consumption, or inconsistent final states across services. Human reviewers often miss this because reconstructing entire execution flows across repos is expensive and time-consuming.
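The duplicate-write risk described above can be illustrated with a small sketch. It assumes a hypothetical in-memory store and a synchronous retry helper; neither is taken from any real SDK. The point is that a retry is only safe when the write it repeats is idempotent, here via a caller-supplied idempotency key.

```typescript
// In-memory stand-in for a datastore, keyed by a caller-supplied idempotency key.
class IdempotentStore {
  private applied: Record<string, number> = {};
  public writes = 0;

  write(idempotencyKey: string, amount: number): number {
    const previous = this.applied[idempotencyKey];
    if (previous !== undefined) {
      return previous; // replayed request: return the earlier result, write nothing
    }
    this.writes += 1;
    this.applied[idempotencyKey] = amount;
    return amount;
  }
}

// Re-invokes the operation until it succeeds or maxAttempts is reached.
function withRetry<T>(operation: () => T, maxAttempts: number): T {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return operation();
    } catch (error) {
      lastError = error;
    }
  }
  throw lastError;
}

const store = new IdempotentStore();
let calls = 0;

// Simulates a write that is applied, but whose acknowledgement is lost on the first call.
const chargeResult = withRetry(() => {
  calls += 1;
  const value = store.write("order-42:charge", 100); // hypothetical key format
  if (calls === 1) throw new Error("acknowledgement lost");
  return value;
}, 3);

console.log({ chargeResult, calls, writes: store.writes });
```

The operation runs twice but the store records a single write. If a shared SDK changed its retry behavior while a downstream service lacked this kind of key check, the same scenario would produce two writes, and nothing in the SDK's diff would show it.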

An AI reviewer must reason about execution behavior as well as syntax or diff structure. Otherwise, AI-generated regressions will continue increasing as velocity increases.

4. Security and Compliance Enforcement at the PR Level

By 2025, organizations saw a noticeable increase in security concerns rooted in AI-generated contributions: incomplete sanitization, unsafe regex, misconfigured IAM permissions, unvalidated user input paths, and stale or misused infrastructure templates. Security teams were overloaded and often discovered these issues after code had already been merged.

“The security issue Qodo caught early on showed us we had gaps in our manual review process. Since then, Qodo has become a reliable part of our workflow.” — Liran Brimer, Senior Tech Lead at monday.com

Compounding this issue was the growing trust gap: developers used AI tools more than ever, but trusted the correctness of their outputs far less.

AI code review platforms must therefore integrate security analysis, compliance checks, and data-flow reasoning directly into the PR, long before CI or security teams encounter the change.

5. High-Signal Findings That Behave Like a Senior Engineer, Not a Linter

The most common failure mode of AI review tools in 2025 was noise.

Developers rejected tools that generated:

- cosmetic comments

- irrelevant suggestions

- hallucinated recommendations

- inconsistent severity scoring

For enterprise adoption, an AI reviewer must provide concise, high-signal findings grounded in system behavior. Each comment must explain why it matters, what downstream systems it affects, and whether it blocks or informs the merge. This is the standard human senior reviewers already operate under; AI must meet the same bar.
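One way to picture that bar is as a data shape that a finding has to fill in before it is posted. The sketch below is an assumption made for illustration: `ReviewFinding`, its fields, and the sample findings are hypothetical and do not describe any tool's actual output format.

```typescript
type Severity = "blocking" | "advisory";

interface ReviewFinding {
  file: string;
  summary: string;
  rationale: string;         // why the issue matters
  affectedSystems: string[]; // downstream services or modules
  severity: Severity;        // whether it blocks or informs the merge
}

// A finding without a rationale or an affected system is treated as noise.
function isWellFormed(finding: ReviewFinding): boolean {
  return finding.rationale.trim().length > 0 && finding.affectedSystems.length > 0;
}

function mergeAllowed(findings: ReviewFinding[]): boolean {
  return findings.every((finding) => finding.severity !== "blocking");
}

const findings: ReviewFinding[] = [
  {
    file: "sdk/retry.ts",
    summary: "Retry now re-sends non-idempotent writes",
    rationale: "A lost acknowledgement would apply the same write twice",
    affectedSystems: ["billing-service"],
    severity: "blocking",
  },
  {
    file: "sdk/logger.ts",
    summary: "Log field renamed",
    rationale: "Dashboards that filter on the old field name would miss entries",
    affectedSystems: ["observability"],
    severity: "advisory",
  },
];

console.log(findings.every(isWellFormed), mergeAllowed(findings));
```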

6. Centralized Enforcement of Engineering Standards Across All Repos

As AI increased output volume, organizations saw meaningful drift across naming conventions, API patterns, logging structures, observability frameworks, and test designs. Large engineering teams cannot rely on human enforcement across dozens or hundreds of repositories.

“By incorporating our org-specific requirements, Qodo acts as an intelligent reviewer that captures institutional knowledge and ensures consistency across our entire engineering organization. This contextual awareness means that Qodo becomes more valuable over time, adapting to our specific coding standards and patterns rather than applying generic rules.”

— Liran Brimer, Senior Tech Lead, monday.com

A reviewer must allow teams to define standards once and apply them everywhere—across programming languages, service boundaries, and repository layouts—without per-repo configuration overhead. This is how teams reduce long-term architectural entropy while increasing throughput.

7. Unified Workflow Coverage Across the Entire Development Loop

One of the strongest bottlenecks in 2025 was fragmented feedback: the IDE surfaced one batch of issues, the PR reviewer another, and CI pipelines yet another. Developers wasted time reconciling conflicting messages rather than shipping code.

Enterprise review must unify the workflow:

- Early feedback in the IDE

- Contextual review in the PR

- Enforceable gates in CI

This end-to-end consistency is the only way to convert individual developer velocity into predictable organizational delivery velocity.

8. Enterprise-Grade Governance and Deployment Controls

Enterprise engineering organizations require:

- VPC or on-prem deployment

- zero-retention analysis

- SSO/RBAC

- audit logs and traceability

- strict boundary controls

Without these capabilities, AI review systems cannot be adopted in regulated industries or large SaaS environments where code confidentiality is non-negotiable.

9. Predictable, Low-Latency Analysis at Scale

2025’s data showed a spike in PR and push volume, while review capacity remained flat. Latency became an organizational liability. If reviewers wait tens of minutes or hours for analysis, merge queues grow and throughput collapses. AI review must give fast, predictable results (seconds for small changes, minutes for complex ones) to avoid becoming a new bottleneck in the system.

10. Automated Handling of Low-Risk Changes, With Human Oversight for High-Risk Work

The most successful engineering organizations in 2025 shifted routine review load off senior engineers by automatically approving small, low-risk, well-scoped changes—while routing schema updates, cross-service changes, auth logic, and contract modifications to humans.

AI review must therefore categorize PRs by risk, flag unrelated changes bundled in the same PR, and selectively automate approvals under clearly defined conditions. This hybrid model is the only sustainable path toward scaling review capacity without sacrificing quality or safety.
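A rough sketch of that routing logic follows. The path patterns, the 50-line threshold, and the `ChangeSummary` shape are assumptions chosen for the example; an organization would define its own conditions.

```typescript
type Route = "auto-approve" | "human-review";

interface ChangeSummary {
  changedFiles: string[];
  linesChanged: number;
  linkedTicket: boolean;
}

// Hypothetical markers for auth logic, contracts, and schema changes.
const HIGH_RISK_PATTERNS: RegExp[] = [
  /(^|\/)auth\//,
  /\.proto$/,
  /(^|\/)migrations\//,
  /openapi\.ya?ml$/,
];
const MAX_AUTO_APPROVE_LINES = 50;

// Distinct top-level directories serve as a crude signal for bundled, unrelated changes.
function topLevelAreas(files: string[]): string[] {
  const areas: string[] = [];
  for (const file of files) {
    const area = file.split("/")[0] ?? file;
    if (areas.indexOf(area) === -1) areas.push(area);
  }
  return areas;
}

function routeChange(change: ChangeSummary): Route {
  const touchesHighRisk = change.changedFiles.some((file) =>
    HIGH_RISK_PATTERNS.some((pattern) => pattern.test(file))
  );
  const bundlesUnrelatedAreas = topLevelAreas(change.changedFiles).length > 1;
  const tooLarge = change.linesChanged > MAX_AUTO_APPROVE_LINES;

  if (touchesHighRisk || bundlesUnrelatedAreas || tooLarge || !change.linkedTicket) {
    return "human-review";
  }
  return "auto-approve";
}

console.log(routeChange({ changedFiles: ["docs/README.md"], linesChanged: 12, linkedTicket: true }));
console.log(routeChange({ changedFiles: ["services/auth/token.ts"], linesChanged: 8, linkedTicket: true }));
```

The defaults lean toward human review: a change is auto-approved only when every condition is met, so a missing ticket link or an unexpected directory sends it to a person.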

The AI Code Review Tools Worth Testing in Enterprise Environments

Engineering leaders testing AI code review tools are looking for a code review platform that can operate within the constraints of a large engineering organization, such as multi-repo architectures, interconnected services, shared SDKs, ticket-driven execution, and governance requirements that span hundreds or thousands of developers. Below is a focused review of the tools that matter for mid-market and enterprise teams, along with where each one fits and where it struggles.

1. Qodo: AI Code Review Platform Built for Enterprise Teams

Qodo is built for enterprise engineering environments with multi-repo architectures, distributed teams, polyglot codebases, ticket-driven workflows, and governed code delivery.

It positions the reviewer as a system-aware AI agent that understands how the architecture behaves, along with what changed in the diff. This is the main distinction separating Qodo from diff-first tools like Copilot Review and Cursor.

At the base is Qodo’s Codebase Intelligence Engine, which maintains a stateful model of the entire system: module boundaries, lifecycle patterns, shared libraries, initialization sequences, and cross-repo dependencies. This is the level of understanding engineering leaders describe as “cross-repo context,” “context-rich review,” and “deeper context than Copilot/Cursor provide.”

Qodo layers this system context with automated PR workflows including Jira/ADO scope validation, missing-test detection, standards enforcement, and risk/complexity scoring. These workflows replace the repetitive first-pass checks senior reviewers perform and drive measurable outcomes: shorter PR cycle time, Jira-linked auto-approvals for low-risk changes, and consistent review depth across teams.

Organizations adopt Qodo because it transforms review from a manual, context-starved bottleneck into a predictable, governed, system-level workflow that scales across hundreds or thousands of developers.

Where it fits

- Engineering teams running multi-repo, multi-language systems where the diff alone lacks enough information for meaningful review.

- Organizations that need ticket-aware validation to confirm a PR aligns with its Jira/ADO requirements.

- Environments that depend on consistent enforcement of engineering standards across many teams and repos.

- Governed engineering environments that require SOC2/GDPR compliance, VPC/on-prem deployment, zero-retention modes, and full auditability.

- Teams focused on predictable PR throughput, not just automated suggestions.

- Distributed teams where inconsistency in review depth leads to drift, regressions, and unpredictable merge outcomes.

Limitations

- Small monorepo teams may not benefit from cross-repo reasoning or standards enforcement at organizational scale.

- Initial ingestion requires time for the Codebase Intelligence Engine to build stable context across repositories.

- Platform engineering is often involved during rollout, especially for VPC/on-prem deployments.

- Teams without structured ticket-driven workflows won’t fully benefit from scope validation or Jira-linked auto-approval.

Who it’s for

Qodo fits engineering organizations that:

- Operate across interconnected systems with shared libraries, internal SDKs, downstream services, and cross-repo contracts.

- Require consistent enforcement of engineering standards—tests, documentation, naming, API usage, security patterns—across many teams.

- Depend on Jira/ADO for structured backlog and workflow governance.

- Need predictable PR throughput and measurable improvements in merge cycle times.

- Must adhere to strict compliance and data-governance requirements including VPC, on-prem, zero retention, SOC2, and GDPR.

- Want to offload repetitive validation from senior engineers so they can focus on architectural decisions, not mechanical checks.

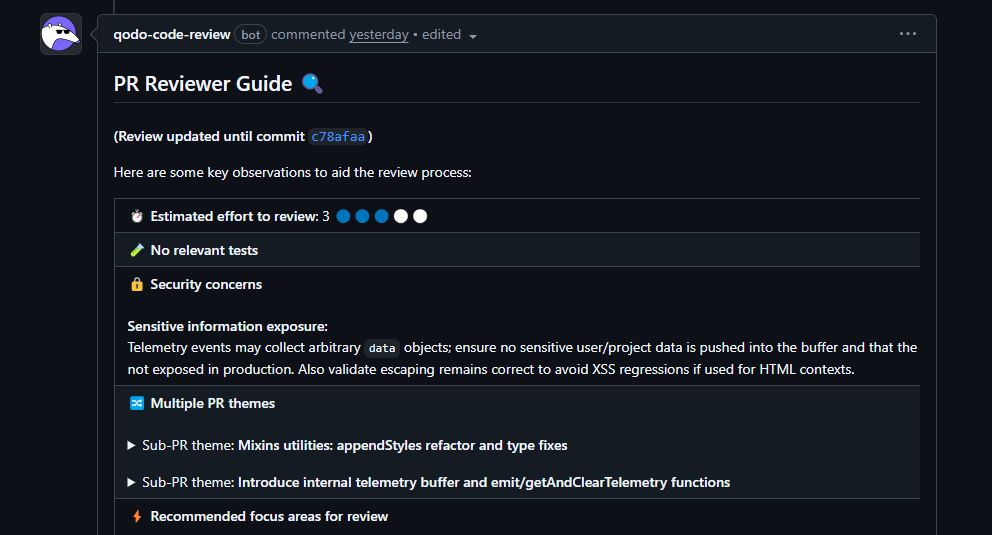

Hands-On Evaluation: Reviewing New Cross-Module Telemetry Logic

I tested Qodo inside the GrapesJS monorepo. GrapesJS is a workspace with dozens of packages, a lot of shared utilities, and several subsystems wired together through Backbone models, views, and a pretty deep utility layer. The PR I opened mixed two things: a batch of cleanup changes in mixins.ts and a new internal telemetry buffer that collects lightweight events inside the editor.

Here’s what stood out in the review.

Picked up that the PR mixed unrelated themes

The PR contained both telemetry work and a refactor in a utility module. Qodo flagged that separation immediately. This is something reviewers often catch late because they jump straight into the diff. Having the tool call it out upfront forces a cleaner review path and makes it obvious where to focus.

Flagged the regex change in stringToPath as a potential regression point

One of the changes updated rePropName, which is used inside stringToPath. That utility is used by many features: component parsing, style manager lookups, data binding paths, etc.

Qodo flagged the regex change as something worth rechecking. That’s exactly right: any tiny shift in escaping or bracket handling can break component resolution in subtle ways. This wasn’t a generic “be careful with regex” warning; it was tied to the actual effect on downstream modules.

The telemetry module review went deeper than expected

The telemetry buffer is simple: a global array with an emit() helper and a getAndClearTelemetry() call. Qodo raised the two concerns I would expect from a senior reviewer:

- the buffer is unbounded

- the emit path accepts arbitrary objects

In a long-running editor session, that buffer could grow and start eating memory, and depending on what developers push into it, it could capture internal state you don’t want leaking. These are fair points, and exactly the sort of operational notes that get flagged during a real code review.
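Both concerns have fairly standard remedies. The sketch below is not the code from the PR; it is a minimal illustration that reuses the `emit()` and `getAndClearTelemetry()` names from the description, and assumes a hypothetical `TelemetryEvent` shape and a deliberately tiny cap.

```typescript
interface TelemetryEvent {
  name: string;
  timestamp: number;
}

const MAX_EVENTS = 3; // small cap for demonstration; a real limit would be larger
let telemetryBuffer: TelemetryEvent[] = [];

// Copies only the known fields, so callers cannot attach arbitrary internal state.
function emit(event: TelemetryEvent): void {
  telemetryBuffer.push({ name: String(event.name), timestamp: Number(event.timestamp) });
  if (telemetryBuffer.length > MAX_EVENTS) {
    telemetryBuffer.shift(); // drop the oldest event once the cap is exceeded
  }
}

function getAndClearTelemetry(): TelemetryEvent[] {
  const drained = telemetryBuffer;
  telemetryBuffer = [];
  return drained;
}

for (let i = 0; i < 5; i++) {
  // The extra editorState field stands in for internal state that should not leak.
  const noisy = { name: `e${i}`, timestamp: i, editorState: { secret: true } };
  emit(noisy);
}

const flushed = getAndClearTelemetry();
console.log(flushed.map((event) => event.name));
```

After five emits the buffer holds only the three most recent events, and the `editorState` field never reaches it.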

Noticed the escape() update wasn’t complete

escape() is used across rendering flows, not just in mixins. The updated version in the PR ended mid-chain, which means certain characters wouldn’t be escaped correctly. That kind of partial refactor usually turns into hard-to-trace UI bugs or, in the worst case, an XSS vector. Qodo calling this out was appropriate; it’s the kind of small detail humans often miss when scanning utility diffs.
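For reference, a complete HTML-escaping helper usually covers five characters. The version below is a generic sketch, not the GrapesJS implementation; it is named `escapeHtml` to make clear it is a stand-in for illustration.

```typescript
// Generic HTML escaping table; not taken from the GrapesJS source.
const HTML_ESCAPES: Record<string, string> = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

function escapeHtml(input: string): string {
  // A single pass avoids double-escaping the ampersands introduced by earlier replacements.
  return input.replace(/[&<>"']/g, (char) => HTML_ESCAPES[char] ?? char);
}

console.log(escapeHtml(`<a href="x">Tom & 'Jerry'</a>`));
```

A chain of `.replace()` calls that stops early, as described above, would leave some of these characters untouched, which is why a table-driven single pass is often easier to review.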

Caught a real runtime edge case in the href selector logic

One of its suggestions focused on this line:

document.querySelector(`link[href="${href}"]`)

If href contains a quote, it turns into an invalid selector and throws a DOMException. The suggestion to escape the quotes is correct and prevents a runtime break. That’s a practical fix, not a stylistic comment. You’d want any reviewer—human or AI—to catch that.
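A minimal sketch of that fix is shown below. The helper names are hypothetical, and the function handles only double quotes and backslashes; other characters that are invalid inside CSS strings, such as raw newlines, are not covered. In browsers, the standard `CSS.escape()` function is an alternative.

```typescript
// Escapes a value for use inside a double-quoted CSS attribute selector.
// Only double quotes and backslashes are handled in this sketch.
function escapeAttributeValue(value: string): string {
  return value.replace(/["\\]/g, "\\$&");
}

function linkSelector(href: string): string {
  return `link[href="${escapeAttributeValue(href)}"]`;
}

console.log(linkSelector('theme"dark.css'));
```

The resulting string can be passed to `document.querySelector` without the embedded quote terminating the attribute value early.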

Pointed out missing tests where they mattered

There were no tests covering:

- the new telemetry behavior

- the updated regex path inside stringToPath

Both areas can cause silent regressions, especially in a monorepo with many modules depending on shared utilities. This feedback was accurate and aligned with the parts of the diff that carried risk.
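Tests for the regex path could take the form of a small table of inputs and expected outputs that pins current behavior before the pattern is changed. The parser below is a simplified stand-in written for this example, not the GrapesJS `stringToPath` or its `rePropName` pattern.

```typescript
// Simplified stand-in, NOT the GrapesJS implementation: splits "a.b[0].c" into keys.
function stringToPathSketch(path: string): string[] {
  const keys: string[] = [];
  const tokenPattern = /[^.[\]]+|\[(\d+)\]/g;
  let match: RegExpExecArray | null;
  while ((match = tokenPattern.exec(path)) !== null) {
    // Group 1 holds the digits of a bracketed index; otherwise use the whole match.
    keys.push(match[1] !== undefined ? match[1] : match[0]);
  }
  return keys;
}

// Each case pins one behavior that a regex change could silently alter.
const pathCases: Array<[string, string[]]> = [
  ["a.b.c", ["a", "b", "c"]],
  ["items[0].name", ["items", "0", "name"]],
  ["style[2][3]", ["style", "2", "3"]],
];

for (const [input, expected] of pathCases) {
  const actual = stringToPathSketch(input);
  if (actual.join("|") !== expected.join("|")) {
    throw new Error(`path regression for "${input}": got ${actual.join("|")}`);
  }
}
console.log("all path cases passed");
```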

Based on this review, Qodo behaved like a reviewer who understands how shared utilities, global state, and parsing logic affect a large system. It avoided cosmetic comments and focused on the parts of the change that matter:

- utility-level regression points

- operational concerns around the global state

- runtime edge cases in DOM usage

- incomplete escaping logic

- lack of test coverage

- mixed-purpose PR structure

From this test, the tool showed it can anchor a review in actual project behavior, not just the diff.

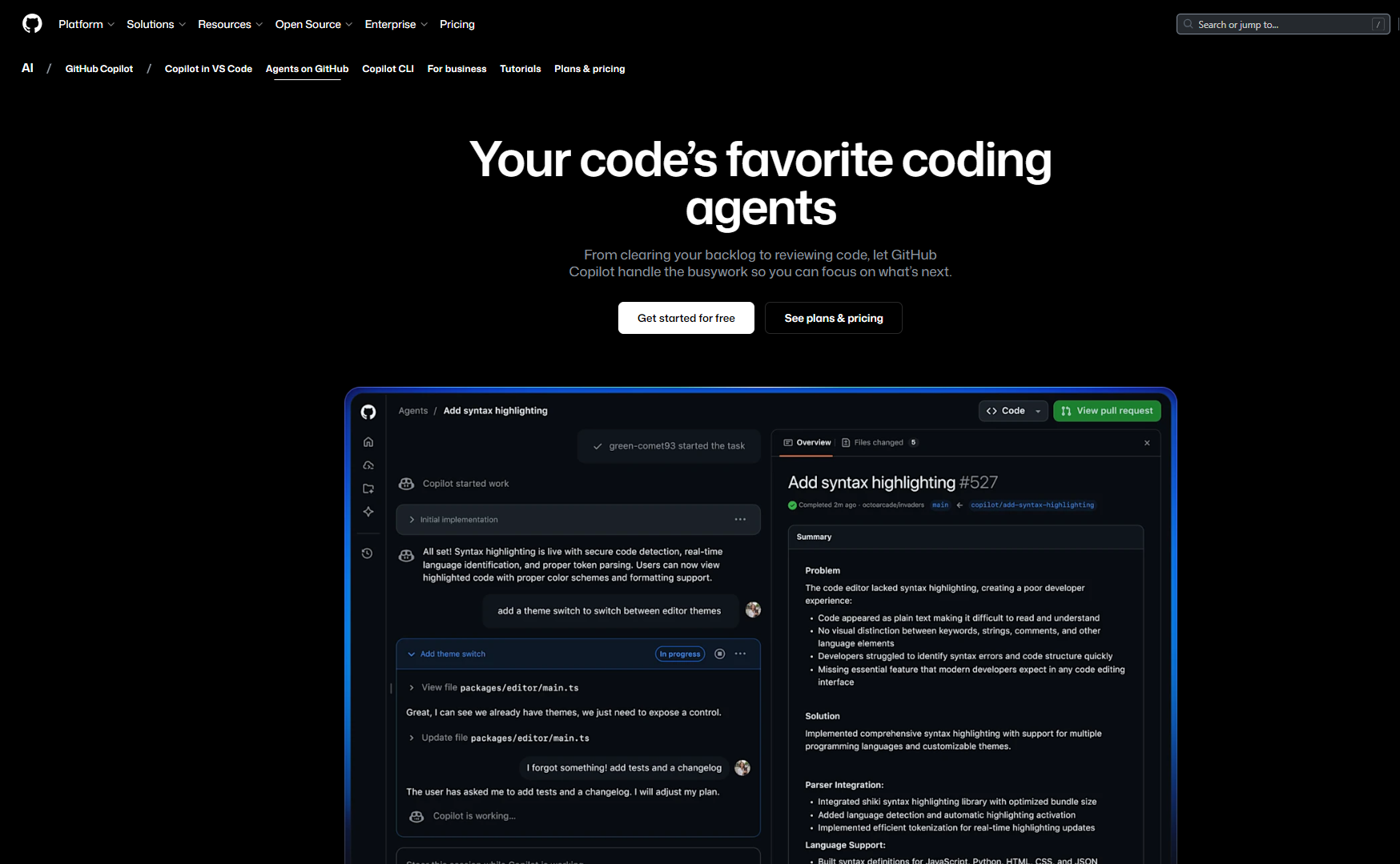

2. GitHub Copilot Review

GitHub Copilot Review is an AI-assisted PR reviewer built directly into the GitHub platform. It analyzes pull requests, generates summaries, highlights potential issues, and proposes code changes. Feedback appears as inline comments or structured review notes within the PR. It uses repository-level context available inside GitHub and ties into GitHub’s broader ecosystem: Actions, policy workflows, and IDE extensions.

Where it fits

- Teams operating primarily within GitHub-hosted repositories and GitHub’s native PR workflow.

- Codebases where repo-level context is typically sufficient for reviewing changes.

- Development environments centered on VS Code or JetBrains, where Copilot is already part of the authoring workflow.

- Teams that prefer inline suggestions without adding external review infrastructure.

Limitations

- Analysis is scoped to the repository; it does not maintain cross-repo or workspace-wide context.

- Copilot Review does not include ticket interpretation or comparison of the PR against Jira/ADO requirements.

- It does not provide policy-driven merge gating; enforcement must be done through GitHub branch protections or workflow rules.

- Review quality depends on the information available in the PR and the surrounding files, which may limit detection of architectural or multi-service impacts.

Who it’s for

GitHub Copilot Review is a strong fit for:

- teams operating fully inside GitHub

- organizations with <100–150 developers or with repository structures that rarely span multiple domains

- teams incrementally adopting AI in review workflows without governance-heavy requirements

- developers who want fast, readable feedback that improves PR clarity and maintainability

- engineering groups that treat AI review as an optional assistant, not a workflow layer

Copilot Review adds value as a developer-centric tool, not a governance or system-context engine. It improves day-to-day reviews but is not built to enforce organization-wide standards or reason about multi-repo architectures.

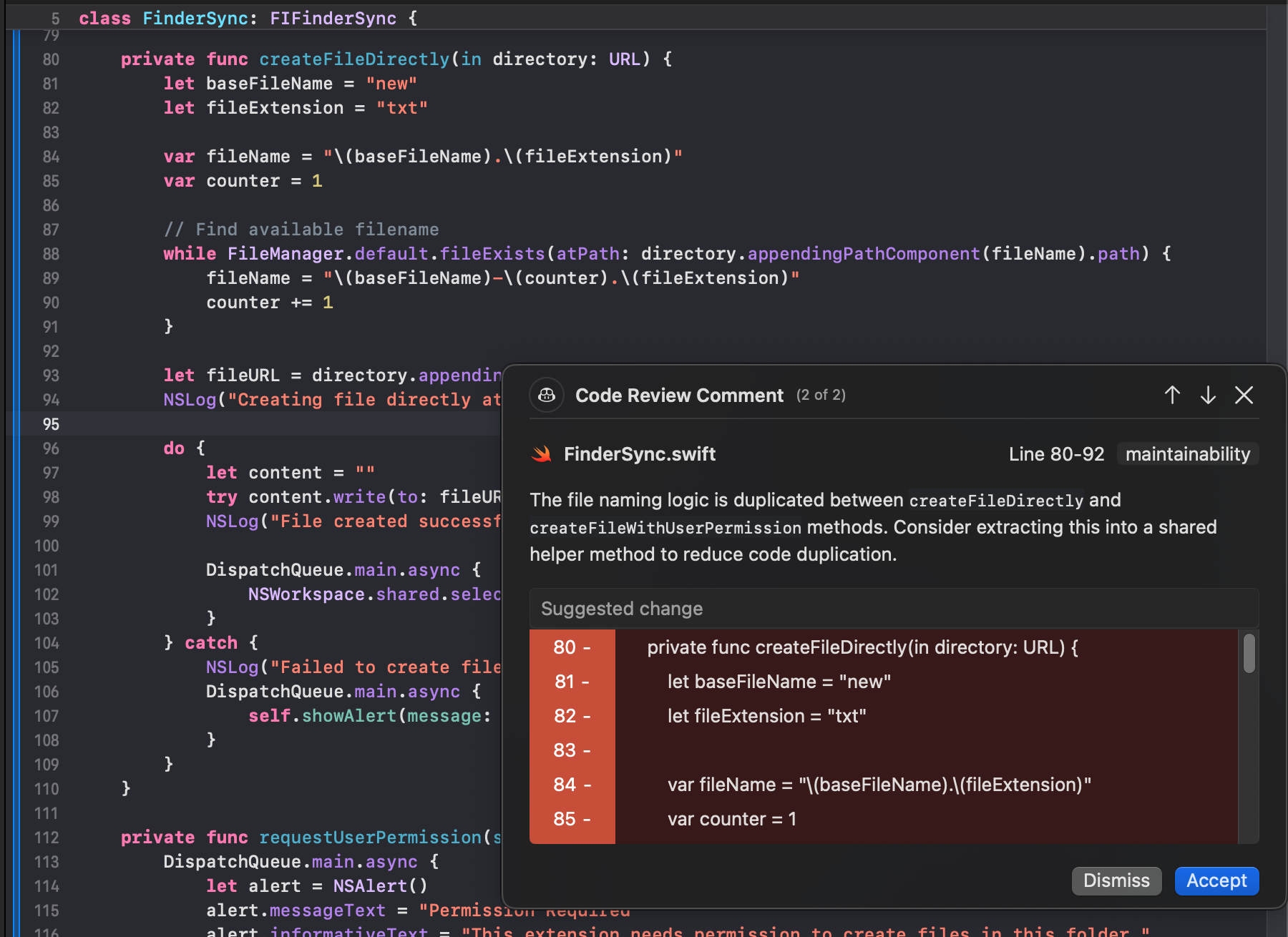

Hands-On: How GitHub Copilot Review Handled a Swift File-Naming Change

This example comes from a Swift extension where a class FinderSync defines methods to create files in a directory. The method under review, createFileDirectly(in directory: URL), builds a filename (baseFileName = “new”, fileExtension = “txt”), then runs a while loop with FileManager.default.fileExists(atPath:) to find the first available filename before writing content.

GitHub Copilot Review generated a comment on FinderSync.swift, lines 80–92, tagged as “maintainability” with a “Code Review Comment (2 of 2)” label. The comment read:

The file naming logic is duplicated between createFileDirectly and createFileWithUserPermission methods. Consider extracting this into a shared helper method to reduce code duplication.

It then highlighted the block that configures baseFileName, fileExtension, fileName, and the while loop that increments a counter, marking it as a “Suggested change”.

From a technical standpoint, Copilot Review did the following:

- Detected intra-file duplication: It compared createFileDirectly and createFileWithUserPermission and identified that the filename selection logic (base name, extension, loop over FileManager.default.fileExists) is structurally identical.

- Classified the issue correctly: The tag “maintainability” matches the actual concern, duplicated logic that should be a shared helper, not a functional bug or security issue.

- Scoped the finding precisely: The comment pointed to a specific line range (80–92) and showed the exact duplicated block, making it easy to see what should be extracted.

- Focused on a single concern: It did not mix style feedback or unrelated suggestions into this comment; it stayed on the duplication and refactor recommendation.

In this scenario, Copilot Review behaved like a local static reviewer: it recognized repeated patterns within the same file, associated them with maintainability, and recommended a straightforward refactor via a helper method. It did not attempt to reason about higher-level behavior (e.g., user permissions, FinderSync extension lifecycle, or any calling context); its contribution was a targeted, file-level maintainability improvement.

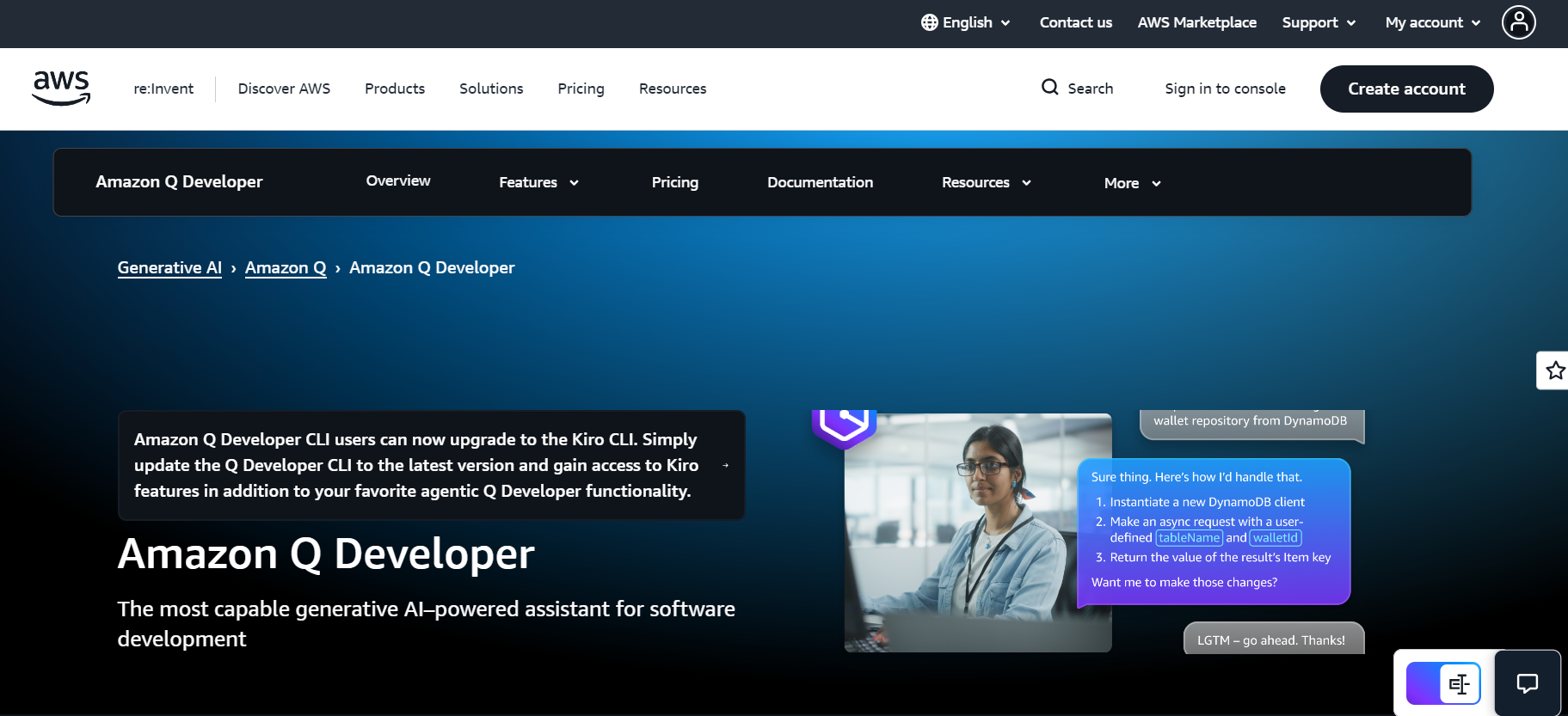

3. Amazon Q Code Review

Amazon Q Code Review is part of the Amazon Q Developer suite. It performs static analysis of code changes, identifies quality or security issues, and provides fix suggestions that can be applied directly from supported IDEs or code hosts. It can review entire files or specific diffs, generate summaries, and identify findings tied to AWS-related patterns, SDK usage, and common cloud development issues. It integrates with GitHub to review new or updated pull requests and post comments automatically.

Where it fits

- Teams with workloads that are primarily AWS-based, including Lambda, DynamoDB, S3, API Gateway, Step Functions, CloudFormation, and CDK.

- Repositories where correct cloud configuration and API usage are part of day-to-day development.

- Development workflows centered around IDE-driven iteration (VS Code, JetBrains) where developers expect inline checks.

- Organizations already using Amazon Q for chat, code generation, or AWS guidance and want consistent feedback inside PRs.

Limitations

- Review context is repository-scoped; Amazon Q does not maintain persistent cross-repo indexing or workspace-wide dependency modeling.

- It does not interpret or validate Jira/ADO tickets or map requirements to the diff.

- PR workflow control (gating, conditional approvals, standards enforcement) must be implemented via GitHub branch protections or CI rules, not Amazon Q itself.

- Best performance occurs when the codebase uses AWS SDKs or cloud resources; outside of cloud-centric patterns, the strength of its guidance decreases.

Who it’s for

Amazon Q Code Review is best suited for:

- Engineering teams building AWS-native or AWS-first applications

- Organizations where cloud misuse or API misconfiguration is a recurring source of production issues

- Teams that want IDE-driven feedback loops with consistent findings reflected in PRs

- Developers who benefit from AI that understands IAM, serverless patterns, cloud resource lifecycle, and AWS SDK semantics

- Small-to-mid-sized organizations where repo-level reasoning is adequate and cross-repo governance is not required

Amazon Q works as a cloud-aware quality and security layer for AWS-centric teams, not as a governance engine or system-context reviewer for large, distributed engineering organizations.

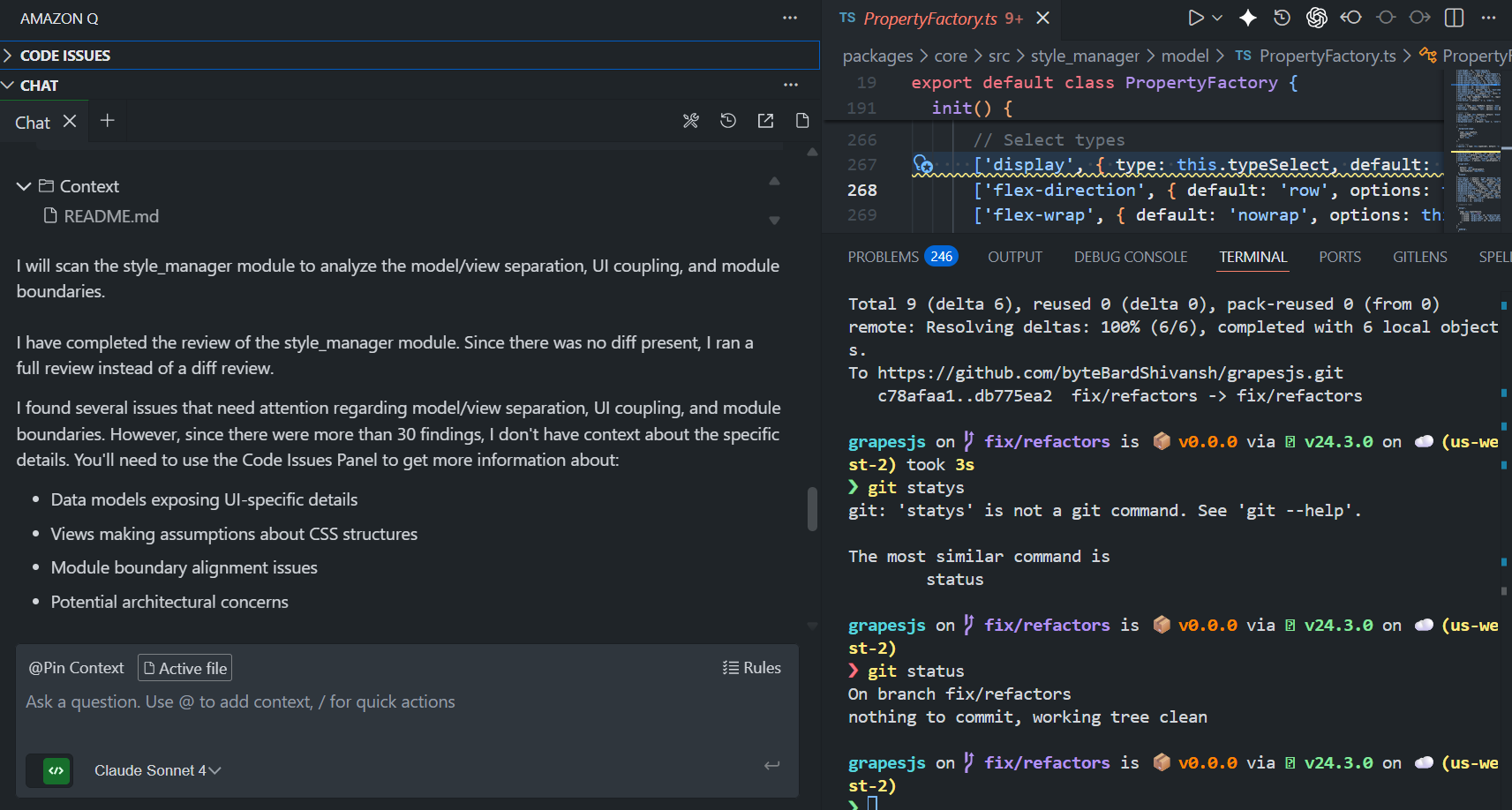

Hands-On: Amazon Q Reviewing style_manager and Modules in GrapesJS

I ran Amazon Q on the same GrapesJS monorepo, focusing first on the style_manager module and then looking at its project-wide findings.

What I asked it to do

I prompted Q to:

Open packages/core/src/style_manager and review the model/ and view/ folders. Check whether the data model exposes too many UI-specific details, whether the views assume inconsistent CSS structures, and if the module boundaries align with how the editor actually uses styles.

So the goal was not simple linting; it was a structural check of model/view separation and module boundaries inside a Backbone + TypeScript codebase.

Here’s how Q responded on style_manager:

Q ran a full-module review (no diff present) and reported:

- It completed a scan of the style_manager module.

- It identified more than 30 findings related to:

  - data models exposing UI-specific details

  - views making assumptions about CSS structures

  - module boundary alignment issues

  - potential architectural concerns

Instead of listing all findings in the chat, it pointed me to the Code Issues panel inside the IDE for details and suggested fixes. From an engineering perspective, that means:

- Q can treat a folder as a review target, not only PR diffs.

- It attempts higher-level reasoning (model/view coupling, boundary alignment), but it flags the granular issues through a dedicated panel, not as a long chat dump.

- For a module like style_manager, which mixes Backbone models, views, and configuration, this kind of scan helps highlight areas where responsibilities blur.

Repository-wide findings: issue categories and severity

Alongside the style_manager review, Q populated the Code Issues tree for the broader repo. It grouped findings by severity: Critical, High, Medium, Low.

Examples from the screenshots:

- Critical

  - Inconsistent or unclear naming detected (e.g., PropertyFactory.ts)

  - Inadequate error handling detected across multiple view and model files

- High

  - A long list of Inadequate error handling detected entries in index.ts and related files

- Medium

  - Readability and maintainability issues detected

  - Missing or incomplete documentation found

  - Performance inefficiencies detected in code

  - Additional Inadequate error handling and naming issues

- Low

  - More Readability and maintainability issues

  - Additional Inconsistent or unclear naming

  - Some missing documentation flags

From a technical standpoint:

- Q runs a full static analysis pass over the TypeScript parts of the repo, not just the focused module.

- It classifies findings by severity and type: error handling, naming, documentation, readability, and performance.

- The issues are mapped to specific files and line numbers (for example, index.ts [Ln 237], PropertyView.ts [Ln 92]), which makes navigation straightforward inside the IDE.

Practical takeaways from this run

- For a monorepo like GrapesJS, Q behaves more like a static analysis console with AI-backed descriptions than a narrow PR reviewer.

- It is effective at:

  - surfacing systematic patterns, such as weak error handling across many files

  - highlighting readability and maintainability hotspots

  - pointing out model/view coupling and boundary concerns at the module level

- It expects the developer to work through the Code Issues panel for the detailed list and suggested fixes, with the chat acting as a summary and entry point.

This hands-on run shows Q is well aligned with codebase-wide quality and structural analysis, especially around error handling, naming consistency, and module responsibilities, rather than fine-grained PR workflow control.

4. Codex

Codex Cloud provides an AI-driven code review workflow directly inside GitHub. Once enabled for a repository, you can request a review by tagging @codex review on a pull request. Codex scans the diff, applies any repository-specific rules defined in AGENTS.md, and posts findings as standard PR review comments. It focuses on identifying high-priority issues (P0/P1 by default) and can run targeted checks when given an instruction, such as @codex review for security regressions. The system is built to behave like an additional reviewer rather than a CI job or a linter.

Where it fits

- Teams working entirely within GitHub who want review automation without introducing new infrastructure or external services.

- Codebases where repository-scoped rules (documented in AGENTS.md) provide enough guidance for consistent review.

- PR workflows that benefit from comment-triggered analysis, especially when developers need a second opinion on specific risk areas.

- Environments where fast, high-signal findings inside the PR are more valuable than deep architectural or cross-repo review.

Limitations

- Repository-scoped context: Codex uses the rules available in the current repo and does not maintain cross-repo or workspace-wide indexing. Architecture or dependency-level issues that span repos may not be flagged unless explicitly prompted.

- No built-in merge enforcement: Codex posts comments but does not block merges or enforce policies. Branch protections or CI workflows must handle gating.

- Defaults to high-severity issues only: It flags P0/P1 issues unless instructed otherwise. Broader review coverage requires updating AGENTS.md or specifying a request in the PR comment.

- Setup and permissions required: Codex Cloud must be enabled for the GitHub organization and the specific repository before reviews can run.

- Enterprise controls vary: Since Codex runs as a cloud service, organizations with strict retention or data governance requirements should validate its data-handling model before rollout.

Who it’s for

- Engineering teams that want AI review embedded directly in the GitHub PR experience, without modifying CI pipelines.

- Organizations where development primarily happens inside a single-repo or simple multi-repo setup, and review rules can be encoded at the repo level.

- Developers who need on-demand review for security, correctness, or maintainability without involving another reviewer.

- Organizations with <50–150 developers that do not operate complex multi-repo architectures.

- Engineering leaders looking for incremental automation in their existing workflow rather than a new governance layer.

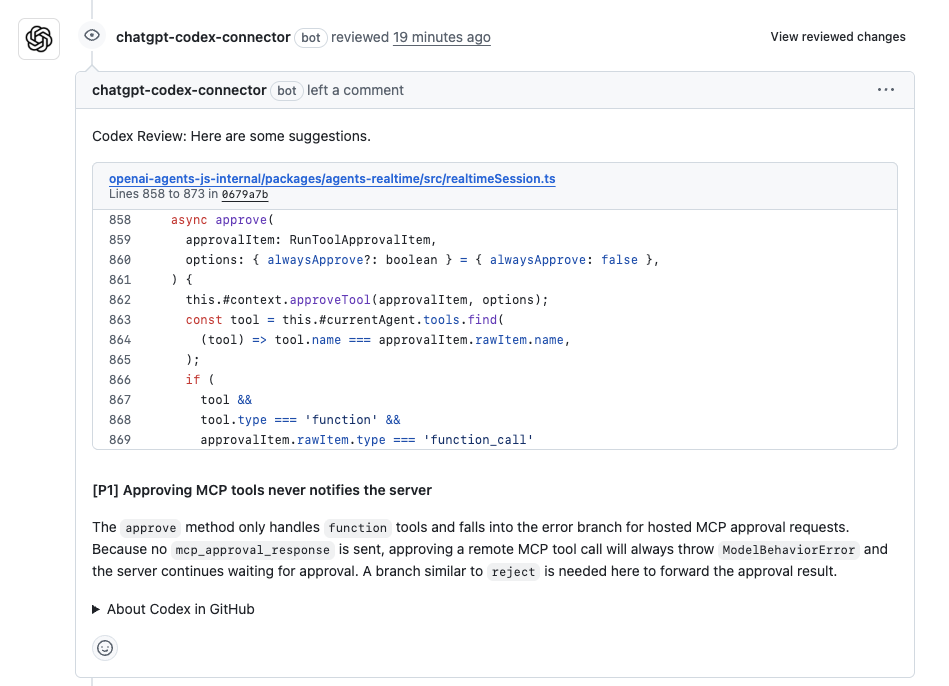

Hands-On: How Codex Cloud Reviewed an Approval Method Change

Codex reviewed a pull request that modified part of an approval method responsible for handling tool calls, as shown below in the PR:

The method accepts an approvalItem, forwards it to an internal context, locates the corresponding tool, and performs some additional checks when the tool represents a callable function.

Codex highlighted a single, focused P1 issue in this section of the change. It pointed out that the approval logic only handles tools marked as “function” with a “function_call” payload. For all other tool types, the method updates local state but never sends an approval response back to the system that issued the request.

According to Codex, this leads to a gap in behavior:

- remote or hosted tools expect an approval response,

- no response is emitted in the current implementation,

- the system continues waiting,

- and the approval operation ends in an error rather than completing cleanly.

Codex recommended adding an explicit branch that forwards the approval result for these cases, similar to the reject path already present elsewhere in the code. In other words, the approval method treats function tools correctly but does not complete the approval cycle for other tool categories: even though the code updates the local state (approveTool), nothing notifies the caller that the approval is finished.

This is the kind of issue that doesn’t show up until you run through a tool call end-to-end: locally, everything looks fine, but the outer system never receives a final state.
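The shape of that gap can be sketched in a few lines. Everything below is hypothetical (the type names, the "hosted" tool kind, and sendApprovalResponse are stand-ins I made up); it only mirrors the structure Codex described, not the code from the PR.

```typescript
// All names are hypothetical; this mirrors the shape of the gap only.
type ToolKind = "function" | "hosted";

interface ApprovalItem {
  toolName: string;
  kind: ToolKind;
}

class ApprovalHandler {
  readonly approved = new Set<string>();
  readonly responsesSent: string[] = [];

  // Before: local state is updated for every tool, but only function
  // tools get a response sent back to the requester.
  approveWithGap(item: ApprovalItem): void {
    this.approved.add(item.toolName);
    if (item.kind === "function") {
      this.sendApprovalResponse(item);
    }
    // Other tool kinds fall through here and the requester keeps waiting.
  }

  // After: an explicit branch completes the cycle for non-function tools.
  approve(item: ApprovalItem): void {
    this.approved.add(item.toolName);
    if (item.kind === "function") {
      // Function-specific checks would run here before responding.
      this.sendApprovalResponse(item);
    } else {
      this.sendApprovalResponse(item);
    }
  }

  private sendApprovalResponse(item: ApprovalItem): void {
    this.responsesSent.push(item.toolName);
  }
}
```

In the first method, a hosted tool ends up approved locally with nothing recorded in responsesSent, which is the stalled state the review comment describes.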

Takeaway from this review

Codex focused on a behavior gap directly tied to the change, not style or formatting issues. It spotted that:

- one category of tools is fully handled,

- another category is updated locally but never gets a final approval response,

- and the missing branch leads to stalled or failed operations.

The comment was specific, tied to the exact lines of code, and pointed to a real functional issue that would show up at runtime.

5. Snyk Code

Snyk Code is a developer-focused SAST engine that analyzes code for security vulnerabilities, risky patterns, insecure API usage, and common logic issues. It integrates with IDEs, CI pipelines, and pull requests to identify findings early. It uses semantic analysis to detect issues such as injection risks, unsafe deserialization, weak validation paths, insecure regex patterns, and misconfigurations in supporting files like IaC or package manifests. It also provides guided fixes where applicable.

Where it fits

- Teams that need security scanning integrated into everyday development, not just in a late-stage CI job.

- Organizations with application security requirements or compliance expectations that benefit from SAST coverage.

- Codebases that rely on a mix of backend services, client applications, and infrastructure-as-code, where Snyk can analyze several components in a single pass.

- Engineering orgs that want automated security feedback in PRs, alongside manual review.

Limitations

- Snyk Code focuses on security analysis, not general code review. It does not validate ticket intent, enforce engineering standards, or participate in merge gating.

- It does not maintain cross-repo architectural context; findings are scoped to the files it analyzes.

- The signal is strongest for security-related issues; it does not replace a functional or architectural reviewer.

- Adoption in enterprise settings may require coordination with security teams, especially around scanning private repositories and handling remediation workflows.

Who it’s for

- Engineering teams that want strong, automated security coverage directly in the PRs.

- Organizations where security teams and developers work together and need a shared view of vulnerabilities.

- DevSecOps groups building preventative security gates early in the lifecycle.

- Teams that already have general PR workflows covered and want to add specialized security analysis as a complementary layer.

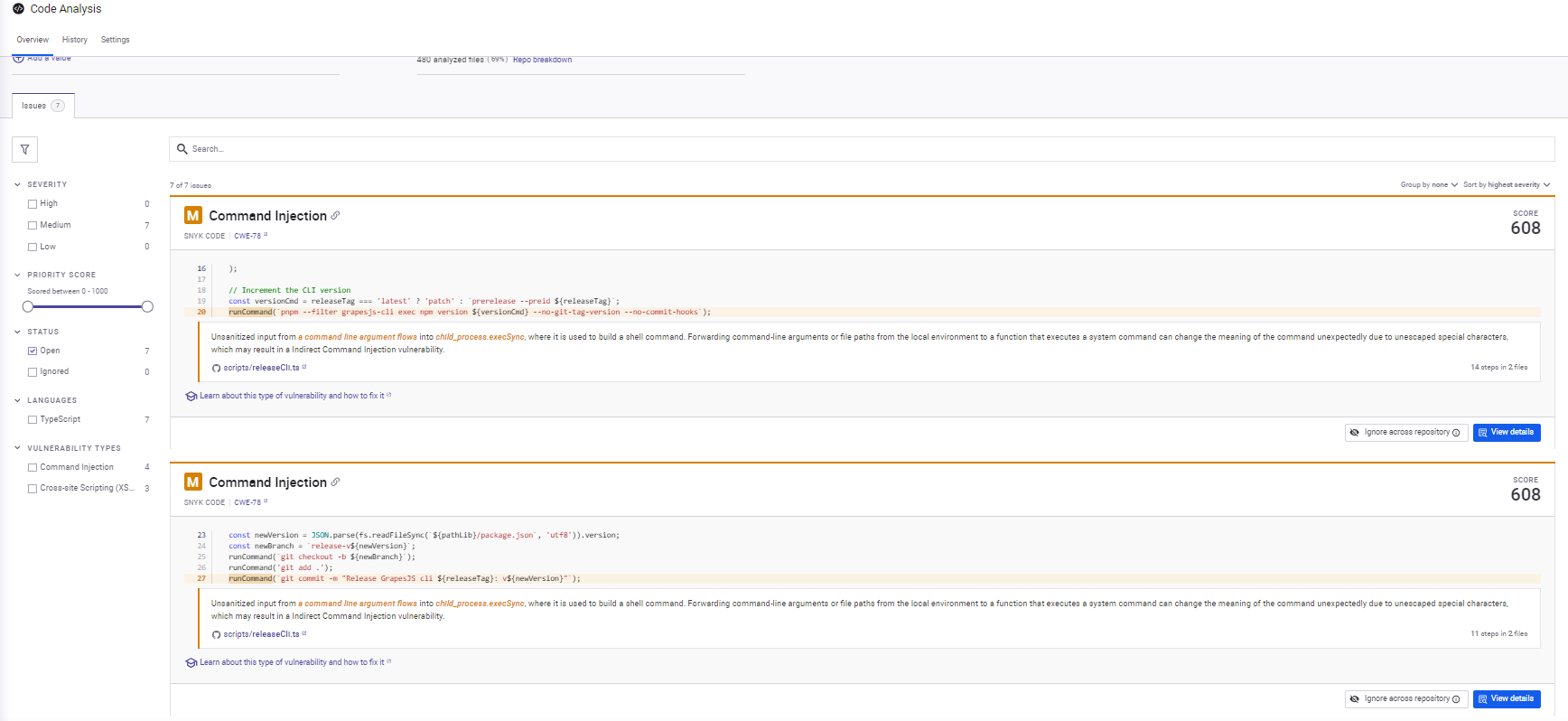

Hands-On: Flagged Release Script Injection Risks and Parser Sanitization Issues

When I connected the GrapesJS monorepo to Snyk Code, it immediately performed a full static analysis pass across both the CLI scripts and the editor modules. Unlike the PR-focused tools, Snyk surfaced system-level vulnerabilities rather than diff-specific issues. The dashboard listed seven issues, all categorized as Medium severity, which is consistent with patterns that can’t be exploited directly inside the repo but carry real risk in downstream usage.

The issues fell into two groups:

- Indirect command injection risks in the release automation scripts

- Inadequate URI sanitization in the HTML parser

Both types of findings are valid for a repository like GrapesJS, which mixes build tooling with an HTML parsing layer.

Command Injection Findings in Release Scripts

Snyk flagged multiple instances in the release automation scripts (releaseCli.ts and releaseCore.ts) where unescaped values flow into a shell command constructed using child_process.execSync. The highlighted lines follow the same pattern:

    const versionCmd = releaseTag === 'latest'
      ? 'patch'
      : `prerelease --preid ${releaseTag}`;

    runCommand(
      `pnpm --filter grapesjs-cli exec npm version ${versionCmd} --no-git-tag-version --no-commit-hooks`
    );

Snyk’s data-flow explanation shows how the releaseTag argument propagates through the code and eventually becomes part of the string passed to execSync. The second screenshot breaks down the propagation path clearly: from the argument, to an intermediate string, to the shell command, and finally to execSync.

The issue is accurate:

- If releaseTag contains unexpected characters, the final shell command can be altered.

- The same pattern repeats when constructing Git commands based on newVersion and newBranch.

- Even if the risk is low in a controlled CI environment, the pattern itself is unsafe by definition.

For a public OSS project, this is exactly the sort of hygiene issue that’s worth surfacing early, especially as scripts get reused by contributors in different environments.
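One common way to remove this class of finding is to validate the tag and build an argument array instead of a single shell string. The sketch below is my own illustration, not a fix taken from the GrapesJS repo or from Snyk’s suggestion; buildVersionArgs and the SAFE_TAG pattern are assumed names and rules.

```typescript
// Hypothetical hardening of the release-script pattern.
// The tag must start with an alphanumeric character (so it cannot be read
// as a flag) and may only contain letters, digits, dots, and hyphens.
const SAFE_TAG = /^[A-Za-z0-9][A-Za-z0-9.-]*$/;

function buildVersionArgs(releaseTag: string): string[] {
  if (!SAFE_TAG.test(releaseTag)) {
    throw new Error(`Unsafe release tag: ${JSON.stringify(releaseTag)}`);
  }
  const versionArgs =
    releaseTag === "latest" ? ["patch"] : ["prerelease", "--preid", releaseTag];
  // Each element is a separate argument; nothing is concatenated into a
  // shell command line.
  return [
    "--filter", "grapesjs-cli", "exec", "npm", "version",
    ...versionArgs,
    "--no-git-tag-version", "--no-commit-hooks",
  ];
}

console.log(buildVersionArgs("rc").join(" "));
```

An array like this can be handed to Node’s child_process.execFileSync("pnpm", args), which does not spawn a shell by default, so shell metacharacters in a value are not interpreted. The same approach would apply to the Git commands built from newVersion and newBranch.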

XSS Findings in HTML Parser

Snyk also highlighted repeated findings inside the HTML parsing module. The flagged logic inspects attributes on DOM nodes and checks whether a value begins with unsafe URI schemes. The check currently protects against known harmful prefixes, but Snyk noticed two gaps:

- data:

- vbscript:

The screenshot shows Snyk’s comment: if the logic is intended to guard against scriptable URL schemes, then the parser should account for these additional prefixes.

This is a valid signal: GrapesJS allows users to import raw HTML, so its parser is a potential vector for embedded scripts. Even though the downstream rendering environment typically layers its own safeguards, parser-level scheme validation is still part of defensive coding.
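A scheme check that covers those gaps might look like the sketch below. The function name hasUnsafeScheme and the blocked list are assumptions; I am also assuming javascript: is among the prefixes the parser already checks, which the screenshots do not confirm.

```typescript
// Hypothetical scheme check. The list adds the two prefixes Snyk called out
// (data: and vbscript:) next to javascript:, which is assumed to be covered
// by the existing logic.
const BLOCKED_SCHEMES = ["javascript:", "data:", "vbscript:"];

function hasUnsafeScheme(value: string): boolean {
  // Conservative normalization: remove whitespace and control characters
  // and lowercase the value before comparing prefixes.
  const normalized = value.replace(/[\u0000-\u0020]/g, "").toLowerCase();
  return BLOCKED_SCHEMES.some((scheme) => normalized.startsWith(scheme));
}

console.log(hasUnsafeScheme("VBScript:msgbox(1)"));
console.log(hasUnsafeScheme("https://example.com/page"));
```

Blocking data: outright is a design choice; an editor that needs inline images may prefer to allow specific data:image types rather than reject the whole scheme.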

What this run shows about Snyk’s behavior

Snyk Code did exactly what a SAST engine should do in a mixed codebase:

- It ignored style, naming, and structural issues and focused only on security-relevant flows.

- It generated data-flow explanations, not just pattern matches, making it clear how user-provided or environment-provided values reach sensitive sinks.

- Its findings were consistent across repeated patterns; every instance of the release script building a shell command was flagged.

- For the HTML parser, it didn’t claim the code was unsafe outright; it pointed out missing branches in an otherwise reasonable sanitization check.

From an engineering lead’s perspective, the value isn’t the severity label; it’s the consistency and traceability. Snyk makes it easy to see where the same underlying pattern appears across the codebase, which is precisely what you want from a static analyzer.

6. CodeRabbit

CodeRabbit is an AI-assisted pull request reviewer built to run inside GitHub repositories. It analyzes diffs, generates inline comments, summarizes the PR, and highlights potential issues related to logic, security, style, and maintainability. It can optionally apply changes through suggested patches and supports automated approvals when configured. The tool focuses on PR-level feedback, not full-repo analysis or cross-service reasoning.

Where it fits

- Teams using GitHub as their primary code host and wanting an AI reviewer embedded directly in the PR interface.

- Repositories where a single-repo context is sufficient, and changes tend to be contained within localized modules.

- Teams that prefer lightweight review automation that is easy to adopt without additional infrastructure.

- Groups that want a tool that provides quick, readable suggestions rather than whole-system analysis.

Limitations

- CodeRabbit does not index or persist cross-repo context, so it cannot track interactions across multiple services or packages.

- It does not interpret Jira/ADO tickets, so it cannot validate scope against requirements.

- Workflow control, such as merge gating and policy enforcement, remains outside the tool and must be handled through GitHub branch protections or CI rules.

- Review depth depends on diff visibility; it will not expose architectural or dependency-level concerns that live outside the changed files.

Who it’s for

CodeRabbit is best suited for:

- GitHub-native teams with straightforward repository layouts

- Organizations with <50–100 developers where cross-repo complexity is low

- Startups and early-stage teams adopting AI review for the first time

- Teams focused on developer experience and improving PR readability

- Engineering environments where PRs rarely require broader architectural reasoning

In short, CodeRabbit is a lightweight PR assistant, not a system-level reviewer. It improves day-to-day productivity in simple environments but is not built to enforce standards, validate scope, or reason across distributed architectures.

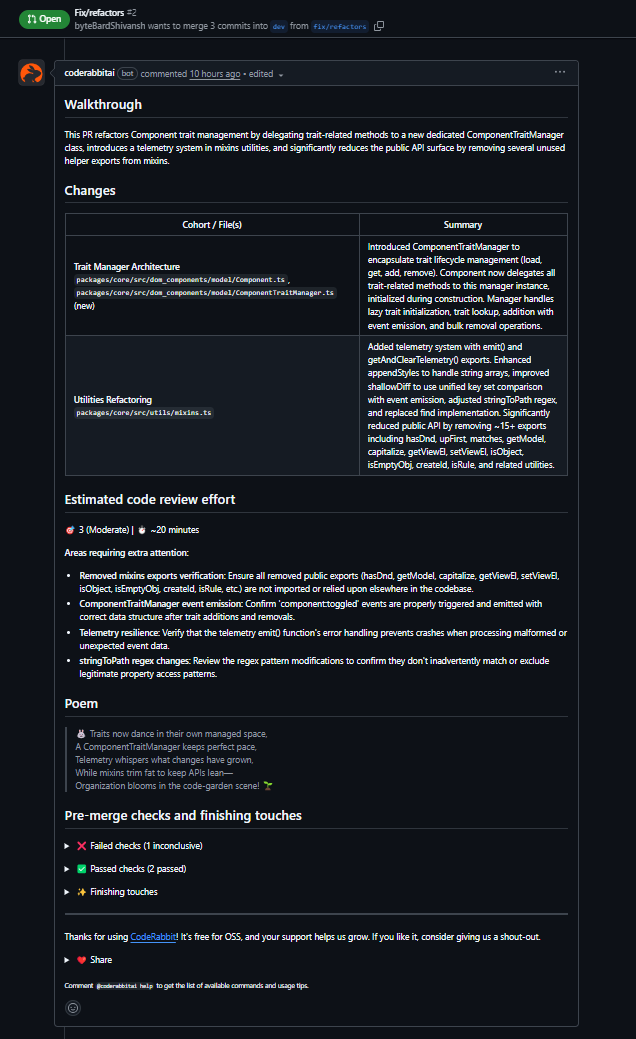

Hands-On: How CodeRabbit Reviewed the Trait Refactor in GrapesJS

For CodeRabbit, I tested a PR that moved all trait-related logic out of Component into a new ComponentTraitManager class. The PR also included some utility changes (rePropName, appendStyles, telemetry additions). Three files were touched, with a mix of refactoring and behavioral changes as shown in the PR below:

What CodeRabbit surfaced

It first generated a short summary of the PR, visible in the PR comment above, that accurately reflected the scope: extracting trait management, delegating trait operations to the manager, a fix in the regex that powers stringToPath, and updates in a few utility helpers. Nothing surprising there; it read the diff correctly. The more useful part was the inline analysis.

Initialization order issue in trait handling

In Component’s constructor, traits are initialized through initTraits(). The PR introduced:

this.traitManager = new ComponentTraitManager(this);

before initTraits() was called. CodeRabbit flagged this and pointed out that ComponentTraitManager calls __loadTraits() in its constructor. Since traits aren’t configured until initTraits() runs, this leads to the manager touching an uninitialized collection. That’s the kind of ordering bug that only shows up at runtime and can be hard to trace in GrapesJS because traits influence rendering and editor-side state. The fix it suggested, move the instantiation after initTraits(), is correct.
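The ordering problem is easy to reproduce in isolation. The classes below are simplified stand-ins I wrote for illustration; only the names echo the PR, and the bodies are hypothetical.

```typescript
// Simplified stand-ins for the real classes; bodies are hypothetical.
class TraitManagerSketch {
  readonly loaded: string[];

  constructor(component: ComponentSketch) {
    // Mirrors __loadTraits() running in the constructor: it reads whatever
    // traits exist at construction time.
    this.loaded = [...(component.traits ?? [])];
  }
}

class ComponentSketch {
  traits?: string[];
  traitManager: TraitManagerSketch;

  constructor(managerFirst: boolean) {
    if (managerFirst) {
      // Order from the PR: the manager is built while traits are undefined.
      this.traitManager = new TraitManagerSketch(this);
      this.initTraits();
    } else {
      // Suggested order: traits exist before the manager reads them.
      this.initTraits();
      this.traitManager = new TraitManagerSketch(this);
    }
  }

  private initTraits(): void {
    this.traits = ["id", "title"];
  }
}

console.log(new ComponentSketch(true).traitManager.loaded.length);
console.log(new ComponentSketch(false).traitManager.loaded.length);
```

The sketch falls back to an empty list so the difference shows up as missing traits; in real code the same ordering could just as easily surface as an exception on an undefined collection.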

Null handling in removeTrait

Inside ComponentTraitManager:

const toRemove = ids.map(id => this.getTrait(id));

getTrait returns null when the trait doesn’t exist. Passing an array of type (Trait | null)[] into Backbone’s collection remove call can lead to inconsistent behavior. CodeRabbit suggested filtering out null explicitly:

const toRemove = ids.map(id => this.getTrait(id)).filter((t): t is Trait => t !== null);

This matches how you’d typically harden a Backbone model collection operation.
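For readers less familiar with TypeScript type predicates, here is a self-contained version of the same idea. The Trait interface, the sample data, and the free-standing getTrait are simplified assumptions, not the GrapesJS definitions.

```typescript
// Minimal stand-ins; Trait and getTrait are hypothetical simplifications.
interface Trait {
  id: string;
}

const knownTraits: Trait[] = [{ id: "title" }, { id: "href" }];

function getTrait(id: string): Trait | null {
  return knownTraits.find((t) => t.id === id) ?? null;
}

function traitsToRemove(ids: string[]): Trait[] {
  return ids
    .map((id) => getTrait(id))
    // The type predicate narrows (Trait | null)[] to Trait[].
    .filter((t): t is Trait => t !== null);
}

console.log(traitsToRemove(["title", "missing"]).map((t) => t.id));
```

A plain filter(t => t !== null) would drop the nulls at runtime, but on many TypeScript versions the result stays typed as (Trait | null)[]; the predicate is what lets the compiler treat the result as Trait[].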

Broken escape() implementation

In mixins.ts, the file ended halfway through a .replace(/ call, leaving the function incomplete. This creates a parse error that prevents the build from running. CodeRabbit pointed out the file truncation and suggested a full version of the function. It also noted that escape shadows the global escape, which is fair feedback for a shared utility. This is the sort of thing a reviewer catches immediately because it stops the build.
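For reference, a complete HTML-escaping helper typically looks something like the sketch below. This is a common pattern written for illustration, not the version CodeRabbit proposed or the one in mixins.ts; the name escapeHtml is my choice to avoid shadowing the global escape.

```typescript
// Hypothetical replacement, named escapeHtml so it does not shadow the
// global escape(). A common pattern, not the code from the PR.
const HTML_ENTITIES: Record<string, string> = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#039;",
};

function escapeHtml(input: string): string {
  // Every character matched by the regex has an entry in the map; the
  // fallback keeps the function total for the type checker.
  return input.replace(/[&<>"']/g, (ch) => HTML_ENTITIES[ch] ?? ch);
}

console.log(escapeHtml('<a href="x">Tom & Jerry</a>'));
```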

Overall

CodeRabbit stayed inside the diff and focused on issues with clear runtime or maintainability impact:

- constructor ordering

- null safety

- truncated utility code

- shadowed identifier

It did not attempt architectural reasoning or cross-module context; it stayed inside the PR boundaries, but the issues it raised were valid and tied directly to the changes. For this type of refactor, where initialization order and data shape matter, the feedback was useful and aligned with what a human reviewer would check.

AI Code Review Tools: Quick Comparison Table

At this point, the differences between the tools become easier to see. Engineering leaders evaluate AI review tools by one standard: can the system keep up with the complexity of their software delivery cycle?

The table below compares the tools on the dimensions that matter most at scale: context model, workflow automation, and scale support.

| Tool | Context Model | Workflow Automation | Scale Support |
| --- | --- | --- | --- |
| Qodo | Persisted cross-repo context; understands shared libraries, lifecycle boundaries, architectural patterns | 15+ automated workflows (scope validation, missing tests, standards enforcement, risk scoring, Jira/ADO-linked auto-approval) | Built for 100–10,000+ developers, 10–1000+ repos, multi-language systems |
| GitHub Copilot Review | Repo/project-level context inside GitHub | Inline suggestions + auto-fix PRs | Best for <200 developers, GitHub-only repos |
| Amazon Q | File/project/workspace-level context; optimized for AWS SDKs & cloud infrastructure | Auto PR review + fix suggestions | Works best for AWS-centric teams of 50–500 developers |
| Codex Cloud | Repo-scoped context; rule-based via AGENTS.md | Comment-triggered PR reviews | Works for 50–300 developer GitHub orgs |
| Snyk Code | Repo-wide semantic analysis (SAST) | Security scanning in PR/CI | Scales to enterprise but security-only |
| CodeRabbit | Repo-level context | Automated PR reviews (inline comments) | Best for <150 developers, simple repo structures |

This comparison framework helps ground the decision in operational fit rather than feature checklists. Tools that operate only at the diff or file level may provide useful suggestions, but they won’t reduce review load in environments with multi-repo dependencies, ticket-driven scope requirements, or stricter governance needs. Conversely, tools with deeper context and workflow automation tend to generate more consistent reviews, reduce handoffs, and stabilize merge paths as the codebase grows.

Enterprises generally get the most value when they match the tool to the complexity of their environment:

- If reviews are small and isolated, lightweight diff reviewers work well.

- If teams operate across repos, languages, and shared components, context and automation become the determining factors.

- If security or compliance is a constraint, integration footprint and deployment options matter as much as review accuracy.

This framing keeps the choice practical: pick the tool whose context model, automation depth, and integrations match the complexity of your environment. At the enterprise level, teams operating at scale need deeper context and stronger automation working across multiple polyglot repos, shared modules, and ticket-driven workflows. The question shifts from “What can the tool suggest?” to “How much of our workflow can it reliably handle?”

The next section looks at how Qodo approaches the same problem space and why it behaves differently in larger engineering systems.

Why Engineering Teams Choose Qodo

When engineering leaders evaluate AI code review tools, the deciding factor usually isn’t how well a tool rewrites a line of code; it’s whether the tool can operate inside the realities of an enterprise engineering system: multi-repo environments, distributed teams, ticket-driven workflows, and strict governance requirements. Qodo is built around those constraints. It positions the reviewer as an AI review agent that understands how the system behaves, not just what changed in the diff.

The sections below outline how Qodo works in enterprises, and why teams with complex codebases tend to adopt it.

1. A Persisted Codebase Model That Carries Context Across Reviews

Qodo uses a Codebase Intelligence Engine, which maintains a persisted view of the repositories it ingests. That context isn’t limited to a single file or PR; it includes module boundaries, lifecycle patterns, shared libraries, initialization sequences, and cross-repo interactions.

In the Qodo hands-on above, this is what allowed Qodo to:

- spot the initialization-order bug caused by creating the ComponentTraitManager before the underlying trait lifecycle had completed

- reason about the impact of the updated path-parsing regex beyond the immediate diff

- evaluate the telemetry buffer change with an understanding of long-lived runtime behavior

This reflects a broader shift in how engineering teams think about AI review. As Itamar Friedman put it:

"AI must become an always-on, context-aware reviewer -- not just an autocomplete engine."

This is what teams describe when they say they need “cross-repo context,” “context-rich review,” or “deeper context than Copilot/Cursor provide.” Qodo’s architecture follows this principle directly: it treats context as a first-class input to the review process, so the reviewer behaves like someone familiar with the system, not a diff-only assistant.

2. Workflow Automation That Mirrors How Enterprises Ship Code

Where many tools stop at suggestions, Qodo exposes 15+ automated PR workflows that cover the checks senior reviewers repeatedly perform:

- scope validation against linked Jira/ADO items

- missing or incomplete tests

- standards enforcement

- risk and complexity flags

- detection of unrelated changes inside a PR

Because these workflows integrate directly with GitHub, GitLab, Bitbucket, and Azure DevOps, teams can route them through merge gating or keep them advisory, depending on the maturity of the codebase.

This is where engineering leaders start to see reductions in handoffs and begin using phrases like “Jira-linked auto-approval,” “reduce PR cycle time,” and “enforce standards automatically.”

3. Context-Aware Code and Test Generation

Qodo’s suggestions, whether code or tests, are generated through its context engine rather than a single-file view. This keeps the output aligned with real project structures:

- generated tests reference existing fixtures, runners, and helper utilities

- mutation and state transitions follow actual execution paths

- suggestions avoid fabricating behaviors not present in the code

During the GrapesJS review, this is why Qodo gave valid reasoning about test coverage and lifecycle interactions instead of proposing speculative scaffolding.

4. Built for Multi-Repo, Multi-Language Environments

Most enterprise teams don’t operate in a single repository. They maintain shared design systems, internal SDKs, cross-cutting services, and a mix of languages. Qodo is built to ingest multiple repositories and treats them as one logical system, which allows:

- accurate detection of cross-package drift

- consistent enforcement of organization-level standards

- context reuse between teams and services

- reduced manual explanation of architectural intent within PRs

This addresses what leaders call “multi-repo sprawl.”

5. Enterprise Deployment and Governance

Qodo supports the deployment models required in regulated industries (VPC-hosted, on-prem, and zero-retention modes), along with SOC2 and GDPR compliance. This often determines whether the tool can be adopted at all in financial, healthcare, and large SaaS organizations where code cannot leave controlled environments.

This pattern aligns with what large engineering organizations look for: a reviewer that understands how the system works, not just what the diff contains.

6. Enterprise Outcomes

In 2025, multiple industry surveys, including Gartner’s reporting on AI-augmented software delivery, noted a shift toward agent-driven review models and context-engineered workflows as teams scale their use of AI-generated code. Organizations adopting these patterns report measurable improvements in review consistency and defect reduction.

A 2025 case study from a Fortune 100 engineering organization highlighted improvements in code quality and reductions in rework after deploying Qodo across a highly distributed codebase. The team cited better alignment with internal standards and more predictable merges, outcomes driven by the same context and workflow capabilities evaluated here.

Similarly, engineering teams at monday.com observed that Qodo helped retain institutional knowledge during review cycles, noting that it acted as “an intelligent reviewer that captures institutional knowledge and ensures consistency across the entire engineering organization.” Used in the right context, this reflects how enterprise teams treat Qodo: as a stability layer for code review, not a shortcut for writing code.

Practical Adoption Checklist for AI Code Review

Most teams don’t struggle with the model; they struggle with the rollout. AI review changes how code moves from commit through review to merge, and the teams that see measurable improvements treat the rollout as a workflow change, not a tooling experiment. The checklist below captures the steps that consistently lead to stable adoption in mid-market and enterprise environments.

1. Introduce the tool in hybrid mode

- Add the AI tool as a reviewer, not a gate

- Compare AI findings with human review for accuracy

- Identify where the tool’s context model is reliable vs. shallow

- Keep merges fully human-controlled until signal quality stabilizes
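One way to make "signal quality" concrete during the hybrid period is to measure how often AI findings overlap with what human reviewers flagged. The sketch below is illustrative only: the `Finding` fields and the exact-location matching rule are assumptions, and a real comparison would probably need fuzzier matching than identical file and line.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A review finding keyed by location (hypothetical fields)."""
    pr_id: int
    file: str
    line: int


def agreement(ai: set[Finding], human: set[Finding]) -> dict[str, float]:
    """Precision and recall of AI findings, treating human findings as ground truth."""
    confirmed = ai & human
    precision = len(confirmed) / len(ai) if ai else 0.0
    recall = len(confirmed) / len(human) if human else 0.0
    return {"precision": precision, "recall": recall}


# Made-up sample data for two PRs.
ai_findings = {
    Finding(101, "api/users.py", 42),
    Finding(101, "api/users.py", 88),
    Finding(102, "db/session.py", 10),
}
human_findings = {
    Finding(101, "api/users.py", 42),
    Finding(102, "db/session.py", 10),
    Finding(102, "db/session.py", 57),
}
scores = agreement(ai_findings, human_findings)
print(scores)
```

Low precision suggests the tool is noisy on that codebase; low recall suggests its context model is shallow in the areas human reviewers care about.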

2. Define org standards before broad rollout

- Add coding conventions that the reviewer should enforce

- Document error-handling, logging, and API expectations

- Establish test layout, coverage rules, and fixture patterns

- Ensure the tool has a stable baseline to evaluate against

3. Require PR-to-work-item linkage

- Enforce Jira/ADO linkage in every PR

- Make scope explicit in the description

- Separate functional and non-functional changes

- Prepare the ground for ticket-aware automation and approvals
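A linkage rule like this can be checked mechanically before any AI reviewer is involved. The following is a minimal sketch, assuming Jira-style keys such as `PAY-1234` and the `AB#1234` form used to link Azure Boards work items; the function name and sample key are hypothetical, and the pattern is deliberately rough (it would also match strings like `UTF-8`).

```python
import re

# Assumed key formats: "PROJ-123" (Jira-style) or "AB#123" (Azure Boards link syntax).
WORK_ITEM_PATTERN = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b|\bAB#\d+\b")


def linked_work_items(title: str, description: str) -> list[str]:
    """Return the distinct work-item keys mentioned in a PR, in order of appearance."""
    text = f"{title}\n{description}"
    keys: list[str] = []
    for key in WORK_ITEM_PATTERN.findall(text):
        if key not in keys:
            keys.append(key)
    return keys


def has_linkage(title: str, description: str) -> bool:
    """True when the PR references at least one work item."""
    return bool(linked_work_items(title, description))


print(linked_work_items("PAY-1234: fix rounding", "Also relates to AB#77 and PAY-1234"))
print(has_linkage("fix typo", ""))
```

A check like this would typically run as a soft warning first, consistent with the gradual gating described in the next step.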

4. Phase in merge gating gradually

- Start with soft checks (missing tests, missing docs, standards drift)

- Allow blocking checks only once false positives are low

- Align gates with existing CI rules to avoid duplication

- Monitor early merges to ensure the flow stays predictable
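The promotion from soft check to blocking check can be expressed as a simple rule over data collected during the advisory period. This is a sketch under stated assumptions: the 5% false-positive ceiling and the 50-finding minimum are placeholder thresholds, not recommendations from the source, and `CheckStats` is a hypothetical record type.

```python
from dataclasses import dataclass


@dataclass
class CheckStats:
    name: str
    flagged: int          # findings raised while the check was advisory
    false_positives: int  # findings reviewers dismissed as incorrect


def gating_mode(stats: CheckStats, max_fp_rate: float = 0.05, min_samples: int = 50) -> str:
    """Decide whether a check stays advisory or may block merges.

    Thresholds are illustrative assumptions; tune them per team.
    """
    if stats.flagged < min_samples:
        return "advisory"  # not enough evidence yet
    fp_rate = stats.false_positives / stats.flagged
    return "blocking" if fp_rate <= max_fp_rate else "advisory"


for check in (
    CheckStats("missing-tests", flagged=200, false_positives=6),
    CheckStats("standards-drift", flagged=120, false_positives=30),
    CheckStats("missing-docs", flagged=10, false_positives=0),
):
    print(check.name, gating_mode(check))
```

Requiring a minimum sample size keeps a check from being promoted on the strength of a handful of clean runs.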

5. Baseline and track metrics

- Time-to-first-review (before/after)

- Time-to-merge (before/after)

- Review iteration count per PR

- Load on senior reviewers
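The first three metrics can be computed from PR timestamps that most Git providers expose. The sketch below assumes each PR has already been exported as a dictionary with `opened`, `first_review`, `merged`, and `iterations` fields; those field names and the sample values are hypothetical. Reviewer load is left out because it depends on how review assignments are recorded.

```python
from datetime import datetime
from statistics import median


def hours_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def pr_metrics(prs: list[dict]) -> dict[str, float]:
    """Summarize review latency and churn for a batch of merged PRs."""
    return {
        "median_hours_to_first_review": median(
            hours_between(p["opened"], p["first_review"]) for p in prs
        ),
        "median_hours_to_merge": median(
            hours_between(p["opened"], p["merged"]) for p in prs
        ),
        "mean_review_iterations": sum(p["iterations"] for p in prs) / len(prs),
    }


# Made-up baseline sample; run the same function on a post-rollout batch to compare.
baseline = [
    {"opened": datetime(2025, 1, 6, 9), "first_review": datetime(2025, 1, 6, 17),
     "merged": datetime(2025, 1, 8, 9), "iterations": 3},
    {"opened": datetime(2025, 1, 7, 10), "first_review": datetime(2025, 1, 7, 14),
     "merged": datetime(2025, 1, 8, 10), "iterations": 2},
    {"opened": datetime(2025, 1, 8, 9), "first_review": datetime(2025, 1, 8, 21),
     "merged": datetime(2025, 1, 11, 9), "iterations": 4},
]
summary = pr_metrics(baseline)
print(summary)
```

Medians are used for the latency figures because a few long-running PRs can otherwise dominate the average.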

6. Validate test reliability early

- Review the generated tests for the correct setup

- Confirm mocks and fixtures follow existing patterns

- Watch for flaky tests tied to incorrect assumptions

- Keep human oversight until test quality is consistent
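Flakiness in generated tests can be spotted from CI history without any special tooling. A minimal sketch, assuming CI results are available as `(commit_sha, test_name, passed)` tuples (a hypothetical export format): a test is treated as flaky when it both passed and failed on the same commit.

```python
from collections import defaultdict


def flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Return test names that produced both outcomes on an identical commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for sha, name, passed in runs:
        outcomes[(sha, name)].add(passed)
    return {name for (_sha, name), seen in outcomes.items() if len(seen) == 2}


# Made-up CI history: test_a flips on the same commit; test_c only changes between commits.
history = [
    ("abc", "test_a", True),
    ("abc", "test_a", False),
    ("abc", "test_b", True),
    ("abc", "test_b", True),
    ("abc", "test_c", False),
    ("def", "test_c", True),
]
print(flaky_tests(history))
```

Comparing outcomes only within a single commit avoids mislabeling a test that legitimately changed result because the code changed.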

7. Revisit rules and contexts quarterly

- Update reviewer rules as architecture evolves

- Add checks for new services or shared libraries

- Retire outdated patterns that the reviewer no longer needs to enforce

- Keep the reviewer aligned with the actual system, not stale assumptions

8. Integrate AI review into the engineering workflow

- Align with CI/CD pipelines and branch protections

- Sync reviewer expectations with code-owner rules

- Communicate the workflow to all teams, not just platform engineers

- Treat the tool as part of the system once stability is proven

FAQs: How Qodo Solves Enterprise Code Review Challenges

How does Qodo handle tooling fragmentation across multiple Git providers and CI/CD systems?

Engineering organizations typically operate across GitHub, GitLab, Bitbucket, and Azure DevOps, with different CI/CD systems and static analysis tools creating governance gaps and inconsistent quality signals.

Qodo provides a unified review layer that works across all major Git providers (GitHub, GitLab, Bitbucket, and Azure DevOps) from a single platform. Instead of managing separate review configurations per provider, teams get consistent, context-aware code review across all repos, regardless of where they’re hosted.

This eliminates per-repo configuration pain and gives engineering leaders a single source of truth for code quality across their entire development landscape.

What does Qodo detect that static analysis tools like SonarQube miss?

Static analysis tools like SonarQube excel at finding style violations, code smells, and basic security patterns, but they can’t understand business logic, architectural intent, or cross-repo dependencies.

Qodo’s SOTA Context Engine analyzes your entire codebase, whether it’s 10 repos or 1,000, to detect:

- Breaking changes across services and shared libraries

- Code duplication across repositories

- Architectural drift that violates established patterns

- Logic gaps and implementation mismatches against ticket requirements

- Cross-repo dependency issues that single-repo tools miss

This deep, persistent context understanding is what teams mean when they say they need “context-aware review” or “deeper context than Copilot provides.”

How does Qodo address the review bottleneck created by AI-generated code?

AI coding assistants like GitHub Copilot and Cursor increase development velocity by 25-35%, but they create review bottlenecks because human reviewers can’t validate large, AI-generated PRs at that speed.

Qodo’s agentic review scales with AI velocity by acting as a first reviewer that:

- Validates AI-generated code against full codebase context

- Catches bugs, logic gaps, and compliance violations before they reach human reviewers

- Detects issues AI copilots miss: breaking changes, duplicate logic, architectural inconsistencies

- Provides high-signal feedback so reviewers focus on architectural decisions, not mechanical checks

Engineering leaders using Qodo report that senior engineers spend less time triaging PRs and more time on work that requires their expertise.

Can Qodo enforce consistent coding standards across multiple languages and teams?

Most organizations struggle to enforce uniform standards across polyglot codebases (JS/TS, Python, Java, Go, C#, etc.) and distributed teams, especially if they want to avoid per-repo configuration hell.

Qodo’s centralized rules engine allows you to define organization-wide policies and team-specific conventions from a single source. These rules are automatically applied across all repos, languages, and teams:

- Coding standards and naming conventions

- Architecture patterns and module boundaries

- Security policies and compliance requirements

- Documentation and test coverage expectations

This is what engineering leaders mean when they say they need “centralized governance without per-repo pain”, a major differentiator for enterprise adoption.

How does Qodo reduce reviewer fatigue and improve PR feedback quality?

Reviewers suffer from context switching across large diffs, noisy feedback from multiple tools, and inconsistent review depth, leading to slower PR cycles and missed critical issues.

Qodo reduces noise by providing high-signal suggestions that highlight only critical issues:

- Focuses on bugs, security risks, and architectural concerns, not style nitpicks

- Separates critical issues from low-impact observations with clear prioritization

- Provides context-rich explanations so reviewers understand why an issue matters

- Automates repetitive checks (scope validation, missing tests, standards compliance)

The result: fewer back-and-forth iterations, faster PR cycles, and reviewers who engage with work that genuinely requires their experience.

Why are security and compliance checks more effective in Qodo than in traditional pipelines?

Most security and compliance checks happen late in CI/CD pipelines, after code has already been written, reviewed, and merged, making fixes expensive and disruptive.

Qodo validates security policies, ticket traceability, and compliance requirements at the PR level, before code reaches CI/CD:

- Detects hardcoded credentials, unsafe permissions, and security vulnerabilities early

- Validates changes against linked Jira/ADO tickets to ensure scope alignment

- Enforces compliance rules (SOC2, ISO, PCI, HIPAA) as part of the review process

- Catches policy violations before they enter the main branch

This shift-left approach catches issues when they’re cheapest to fix and prevents compliance violations from reaching production.

How does Qodo help scale best practices across distributed engineering teams?

As engineering organizations grow, institutional knowledge becomes fragmented, teams develop different coding practices, and onboarding new developers takes longer because best practices aren’t codified.

Qodo captures and applies institutional knowledge automatically:

- Learns from PR comments, accepted suggestions, and team feedback

- Adapts code review recommendations based on what senior engineers approve

- Evolves rules as standards and dependencies change

- Applies team-specific conventions consistently across all pull requests

Engineering leaders report that Qodo acts as “an intelligent reviewer that captures institutional knowledge and ensures consistency across the entire engineering organization”, making onboarding faster and quality more predictable as teams scale.

What’s the difference between repo-wide review and cross-repo context?

Repo-wide review means scanning all files in a single repository. Cross-repo context means the tool understands shared libraries, internal packages, and interactions across multiple repositories.

Qodo maintains cross-repo, persisted context that allows it to:

- Catch lifecycle, initialization, and dependency issues that don’t appear in single-repo analysis

- Detect breaking changes in shared libraries before they affect downstream services

- Identify code duplication across multiple repositories

- Understand architectural patterns that span your entire system

This is critical for enterprise teams operating across 10-1000+ repos with complex dependencies, where most review tools built for single-repo workflows break down.
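To illustrate why cross-repo context matters, consider the simplest possible version of the question "which repositories could a change to this shared library break?" The sketch below is not Qodo's implementation; it is a plain reverse-dependency walk over a hand-written dependency map, and the repository names are hypothetical.

```python
from collections import defaultdict, deque


def downstream_repos(depends_on: dict[str, set[str]], changed: str) -> set[str]:
    """Return every repo that directly or transitively depends on `changed`."""
    # Invert the map: for each dependency, which repos consume it?
    dependents: dict[str, set[str]] = defaultdict(set)
    for repo, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(repo)

    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for repo in dependents.get(current, set()):
            if repo not in affected:
                affected.add(repo)
                queue.append(repo)
    affected.discard(changed)  # guard against dependency cycles
    return affected


# Hypothetical dependency map: repo -> repos it depends on.
deps = {
    "billing-service": {"shared-auth"},
    "web-app": {"billing-service", "design-system"},
    "shared-auth": set(),
    "design-system": set(),
}
print(downstream_repos(deps, "shared-auth"))
```

A single-repo tool reviewing a `shared-auth` PR sees none of these downstream consumers, which is the gap the comparison above describes.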

How is AI code review different from traditional code review tools like linters and SAST?

Traditional linters check syntax and patterns in isolation, while AI code review understands your entire system. Qodo’s Context Engine analyzes architectural patterns, cross-repo dependencies, and business logic, catching breaking changes, code duplication across repositories, and logic gaps that pattern-matching tools miss. It validates whether code actually solves the problem defined in the ticket, not just whether it compiles cleanly.

Can AI code review tools keep up with AI-generated code volume?

Yes, but only with the right architecture. AI coding assistants increased development output by 25-35%, but most review tools still operate at the diff level. Qodo scales with AI velocity by acting as a system-aware first reviewer: it validates AI-generated code against full codebase context, catches issues copilots miss (breaking changes, architectural drift, duplicate logic), and surfaces high-signal findings so human reviewers focus on decisions that require expertise, not mechanical checks.

What should engineering leaders look for in an AI code review platform?

Five capabilities matter at enterprise scale: persistent cross-repo context (not just diff analysis), ticket-aligned validation (Jira/ADO scope checking), automated PR workflows (missing tests, standards enforcement, risk scoring), centralized governance across repos and languages, and enterprise deployment options (VPC/on-prem, SOC2/GDPR). Qodo delivers all five, which is why organizations with 100-10,000+ developers adopt it to achieve predictable PR cycles and review capacity that scales with AI development velocity.

How do you prevent AI code review from becoming just another source of noise?

Focus on signal quality, not comment volume. Noisy tools generate cosmetic suggestions and hallucinated recommendations. High-signal reviewers like Qodo behave like senior engineers: they flag only critical issues (bugs, security risks, architectural concerns), explain why each issue matters and what downstream systems it affects, and separate blocking issues from optional improvements. Teams report Qodo feels like “a reviewer who actually understands how your system works,” not a linter with an LLM wrapper.