AI Code Review and the Best AI Code Review Tools in 2025

Software developers routinely face tight deadlines and production bugs that, if not addressed properly, can cause major setbacks for the company. Even the best engineers write code with bugs, and the most experienced developers can still forget the “++” at the end of a loop.

As a senior developer, I have seen that bugs that slip through the normal CI environment are often responsible for expensive production issues. Manual code reviews, especially on large repos, can be time-consuming and mentally draining. That’s why AI code reviews are game changers for developers.

AI code review is the process of integrating agentic AI to scan code for issues, suggest improvements, and even auto-fix common bugs. This frees developers to focus on more strategic challenges, like the architectural design of software applications and system-level decision-making.

In this article, I’ve handpicked a list of AI tools I’ve used and found incredibly helpful. From customizable solutions tailored to your unique workflow to IDE-specific tools and real-time auto-fix features, these tools are designed to simplify our daily code review tasks and let us work smarter rather than harder.

Benefits and Limitations of AI-Based Code Reviews

Now, let’s take a look at the benefits and limitations of AI-based code reviews.

| Benefits | Limitations |
| --- | --- |
| Efficiency: Dramatically reduces review time by automating repetitive tasks | Context Understanding: Often struggles with project-specific context and architectural concerns |
| Consistency: Provides uniform analysis regardless of code volume or complexity | False Positives/Negatives: May flag non-issues or miss genuine problems |
| Error Detection: Identifies subtle bugs that human reviewers might overlook | Complex Logic: Difficulty evaluating sophisticated logical structures or algorithms |
| Learning Opportunity: Offers educational feedback that helps developers improve | Overreliance: Teams may become dependent, reducing critical thinking |
| Real-time Feedback: Provides immediate insights during development | Noise Generation: Can produce excessive or irrelevant suggestions |
| Resource Optimization: Allows human reviewers to focus on higher-value concerns | Training Limitations: Performance varies based on training data relevance |

Should You Use AI for Code Review?

Let’s get real. AI-powered code reviews make the most sense when:

- Your team is moving fast. In short sprint cycles, delays in code reviews can push features into the next release. AI offers instant feedback, helping us maintain speed without cutting corners on quality.

- You’re dealing with a massive legacy codebase. AI code assistants can map the territory before you start hacking away, flagging those “here be dragons” sections human reviewers might miss.

- Junior developers need support. AI reviews become a tireless mentor that doesn’t mind explaining the same pattern violation for the fifteenth time.

- You’re working in familiar ecosystems. If your stack is built on popular technologies like React or Python, AI models trained on thousands of similar projects tend to give smarter, more relevant suggestions.

That said, AI isn’t a replacement for human judgment. The best results come when we combine AI’s speed and consistency with the critical thinking and context that only experienced developers bring:

- Let AI handle the grunt work of finding those missing semicolons and obvious null pointers.

- Keep your human reviewers focused on what matters: architecture decisions, business logic correctness, and algorithm elegance, the stuff that requires context AI doesn’t understand.

- Take time to teach your AI tool your team’s quirks and standards. Off-the-shelf tools won’t know that your team has strong opinions about bracket placement.

- Regularly ask: “Is this AI actually helping?” If engineers are fighting with it more than learning from it, something’s wrong.

- Create a code review checklist that both humans and AI can follow; consistency is key.
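
One way to keep a single checklist usable by both people and an AI reviewer is to store it as data and render it twice. The sketch below is only an illustration under that assumption: the `ChecklistItem` type, the three sample items, and both render functions are hypothetical and not tied to any tool in this article.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ChecklistItem:
    area: str
    question: str


# Hypothetical sample items; replace with your team's own standards.
CHECKLIST: List[ChecklistItem] = [
    ChecklistItem("correctness", "Are edge cases and error paths handled?"),
    ChecklistItem("security", "Is all external input validated?"),
    ChecklistItem("style", "Does the change follow the team's naming conventions?"),
]


def as_markdown(items: List[ChecklistItem]) -> str:
    """Render the checklist as markdown task items for a PR template."""
    return "\n".join(f"- [ ] **{i.area}**: {i.question}" for i in items)


def as_review_instructions(items: List[ChecklistItem]) -> str:
    """Render the same checklist as plain-text instructions for an AI reviewer."""
    lines = [f"{n}. ({i.area}) {i.question}" for n, i in enumerate(items, start=1)]
    return "Review the change against each point:\n" + "\n".join(lines)


print(as_markdown(CHECKLIST))
print(as_review_instructions(CHECKLIST))
```

Because both outputs come from the same list, updating a standard in one place changes what human reviewers and the AI tool are asked to check.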

On GitHub, AI-powered code review tools average 3.7 out of 5 stars across major implementations. That is promising but clearly still evolving, which reinforces the need for a balanced, human-in-the-loop approach.

Now that we’ve established when AI makes sense in your review workflow, let’s look at the tools actually worth your time in 2025.

20 Best AI Code Review Tools in 2025

- IDE-specific AI Review Tools

- Integrated AI Code Review Platforms

- Tools with Real-time Auto-Fixes

- Hybrid AI & Human Review Approaches

- Open-source and Free Tools

- Emerging & Community-driven AI Tools

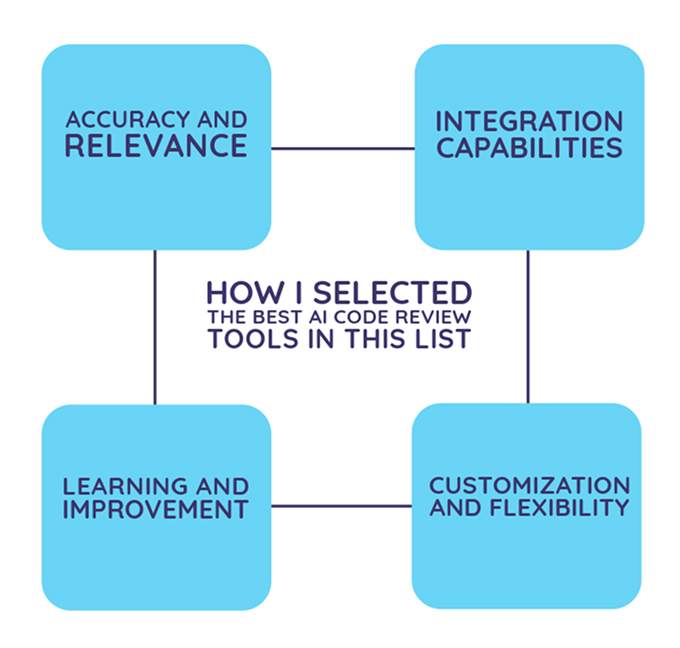

How I Selected the Best AI Code Review Tools on This List

As a developer, I know the challenges teams face every day. That’s why I chose these tools based on four key principles I believe make code reviews truly effective:

- Accuracy and relevance: How effectively the tool identifies genuine issues while minimizing false positives.

- Integration capabilities: How seamlessly the tool fits into existing development workflows and environments.

- Learning and improvement: How the tool helps developers enhance their skills over time.

- Customization and flexibility: How adaptable the tool is to team-specific needs and coding standards.

Focusing on how these tools solve real-world code review challenges, I’ve curated a list of the Top AI Code Review Tools in 2025 that genuinely help developers boost productivity and code quality. Let’s go!

20 Best AI Code Review Tools – 2025 List

Qodo Merge

Qodo Merge is the best tool for AI code reviews and has become one of my go-to AI code assistants for streamlining the pull request process.

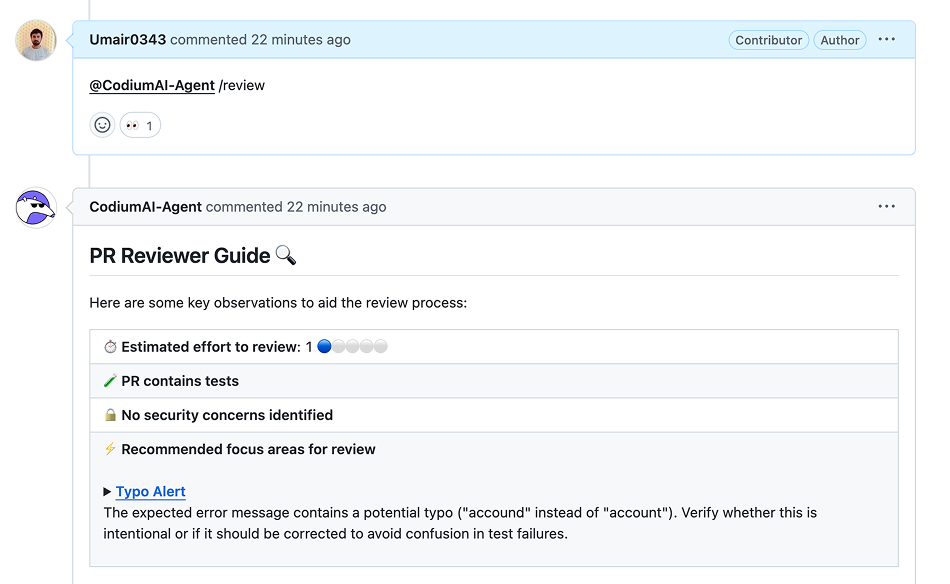

My Experience with Qodo Merge

I’ve been using Qodo Merge for about 6 months, and it’s dramatically changed how our team handles PRs. Recently, I executed the /review command on what I thought was a thoroughly tested PR from an open-source GitHub project, and despite my confidence in it, Qodo Merge caught a subtle typo in one of my error messages (“accound” instead of “account”). This kind of attention to detail has repeatedly proven valuable.

The command-based interface makes it intuitive to get exactly what you need. When I’m rushing to submit a PR, the /describe command automatically generates comprehensive descriptions, saving me from having to manually document all my changes. For reviewers on my team, the guided walkthrough of changes has reduced review time by approximately 70%.

How Does AI Code Review Work in Qodo Merge?

Qodo Merge’s AI code review goes beyond simple automation: it provides in-depth, expert-level insights into your code. Instead of just analyzing isolated code snippets like some basic tools, Qodo Merge takes the time to understand your entire codebase.

It recognizes the patterns and coding standards that are unique to your team and project, and flags deviations from those conventions. This means the feedback you receive is not only relevant but specifically tailored to your development environment, ensuring it’s actionable and precise.

The reason why I love using Qodo Merge in my workflow is that it doesn’t just stop at its initial recommendations. It learns and evolves over time, adapting to feedback from your team and the ongoing changes in your codebase. This dynamic approach means the AI gets better and more accurate with each review, staying aligned with the shifting standards and practices within your organization.

Pros of Qodo

- Qodo supports seamless integration with all major version control platforms, including GitHub, GitLab, Bitbucket, and Azure DevOps, making it easy to fit into your existing development workflow.

- Slash commands (like /describe, /review, /improve) make getting specific help incredibly easy.

- Automatically prioritizes issues based on severity, focusing attention where it matters.

- Creates consistent review standards across different team members.

- Context-aware suggestions actually understand your codebase.

- Excellent at generating PR descriptions that would otherwise take significant time.

- Active support is available via Discord for quick help.

- Supports context-aware recommendations through the RAG pipeline, especially important for enterprises with large code bases.

Cons of Qodo

- Access to advanced features like SOC2 compliance and static code analysis requires a paid plan, creating a barrier for smaller teams or individual developers.

Pricing

I love that Qodo Merge offers a free plan with basic features that works well for small projects and open-source contributions. The team plan costs $15 per user per month, which includes the more advanced review capabilities and integrations. In my experience, the ROI justifies the cost for teams making frequent code changes.

Traycer

Next on the list is Traycer, which combines intelligent analysis with actionable workflows that integrate seamlessly into your development process.

My Experience with Traycer’s Code Review

I tested Traycer on a complex codebase with multiple contributors. After implementing a new feature with several edge cases, I used the “Analyze Changes in Workspace” option to get comprehensive feedback across multiple modified files.

Traycer’s AI-based code review identified several issues that would have been easy to miss in a manual review: an edge case where my error handling didn’t account for network timeouts, an inefficient loop implementation that could cause performance issues with large datasets, and inconsistencies in my naming conventions compared to the rest of the codebase.

Pros of Traycer

- Task-based approach that helps plan and implement complex changes.

- Flexible analysis options (file, changes, workspace) to match your workflow.

- Clean interface that clearly presents differences before implementing changes.

- Responsive support team available through Discord.

Cons of Traycer

- A paid subscription is required for automatic analysis.

- This tool occasionally struggles with highly domain-specific code patterns.

Pricing

Traycer offers a Lite plan at $8/month (billed annually), which includes manual analysis features and basic task assistance.

Bito

Bito has positioned itself as an onboarding accelerator with AI-based code review capabilities that benefit both new team members and veterans alike.

My Experience with Bito

One feature I particularly value is Bito’s PR summary generation. On a recent complex PR involving API changes, Bito created a concise summary that identified it as a “Feature Extension PR” with a “Medium Review Effort” required. This allowed me to allocate appropriate time for review rather than diving in blind. The summary highlighted the key files that had changed and the potential impact areas, which gave me immediate context before examining any code.

When reviewing PRs, the contextual suggestions feel surprisingly similar to feedback I’d give as a senior engineer. On several occasions, Bito has identified optimization opportunities that were subtle enough that they might have been overlooked in a rushed manual review.

Pros of Bito

- One-click acceptance of suggested fixes saves time.

- Security integration with tools like Snyk and detect-secrets adds valuable protection.

- PR summaries provide quick context and effort estimation.

- Analytics dashboard helps track review patterns and team productivity.

Cons of Bito

- Best features require the Pro plan, making it less accessible for smaller teams.

- Initial configuration requires time investment to align with team standards.

- Occasionally suggests optimizations that are technically correct but don’t align with team preferences.

Pricing

Bito offers a Pro Plan at $15 per developer per month.

GitHub Copilot

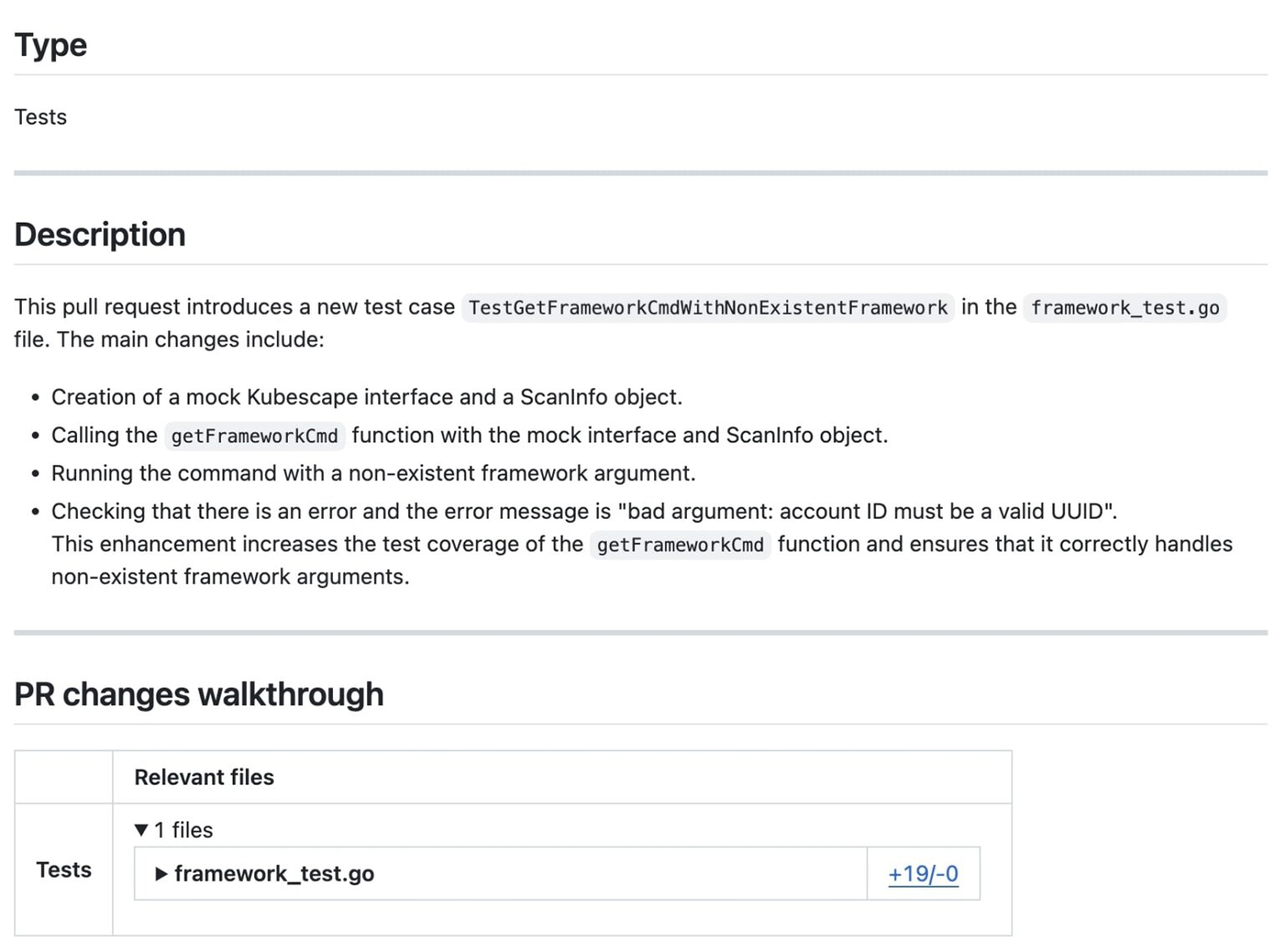

GitHub Copilot is an AI code analyzer developed by GitHub in collaboration with OpenAI and Microsoft. While best known for code autocompletion, it also brings smart enhancements to the AI-based code review process, especially through its Copilot PR Agent.

My Experience with GitHub Copilot

I used GitHub Copilot’s PR Agent to generate detailed descriptions for a pull request related to a test case in one of my open-source projects. It automatically summarized the changes and provided relevant context, saving time and effort. Copilot also explained functions clearly within the IDE, offering a fast way to understand code during review sessions.

Pros of GitHub Copilot

- AI-generated PR descriptions: Saves time by letting Copilot generate summaries for pull requests.

- In-IDE code explanations: Understand complex logic or unfamiliar code instantly with natural language explanations.

- Contextual suggestions: Helps spot inefficiencies or missed edge cases while reviewing code inline.

- Supports multiple IDEs and languages: Works across VS Code, JetBrains, Neovim, and more, with support for Python, JavaScript, TypeScript, Go, and others.

Cons of GitHub Copilot

- Code duplication risk: May generate similar patterns across different codebases.

- Occasional inefficiency: Generated code can sometimes be suboptimal or incorrect.

- Limited test generation: Doesn’t focus heavily on generating tests during reviews.

- PR collaboration tools require a paid plan: Features like Copilot PR Agent for teams are only available with a paid plan.

Pricing

Free for individual developers. The team plan costs $4 per user/month.

CodeRabbit

CodeRabbit has emerged as a versatile AI-based code review solution that provides detailed AI code analysis across various programming languages and platforms.

My Experience with CodeRabbit

One useful aspect of CodeRabbit is its ability to connect with existing workflows. After linking it to our Jira instance, it started mapping code issues to relevant tickets, helping maintain a clearer trail between implementation and future updates.

Pros of CodeRabbit

- Simple setup process with minimal configuration required.

- Works across multiple Git platforms (GitHub, GitLab, Azure DevOps).

- Line-by-line feedback that resembles senior developer input.

- Customizable review depth and focus areas.

- Excellent integration with task-tracking systems like Jira and Linear.

Cons of CodeRabbit

- Free tier is limited to PR summarization only, which misses the tool’s real value.

- More advanced features require upgrading to Pro tier.

- Occasional overemphasis on minor stylistic issues.

Pricing

CodeRabbit offers a Free tier with basic PR summarization, a Lite tier at $12/month per developer (billed annually) for essential reviews, and a Pro tier at $24/month per developer for comprehensive reviews and advanced insights.

Pull Sense

Pull Sense brings a refreshing simplicity to AI-assisted code reviews by focusing on what matters most: getting actionable feedback fast while keeping your code secure. It doesn’t try to replace human judgment but enhances it with relevant, AI-generated suggestions right where you need them.

My Experience with Pull Sense

I tried Pull Sense on some of my side projects hosted on GitHub, and it integrated into my workflow quickly. There is no elaborate configuration or onboarding process: just connect your GitHub repo and start receiving meaningful, contextual comments on your pull requests.

I appreciate how Pull Sense addresses security. With the option to bring my own API key (BYOK), I have full control over the data leaving my environment. During one session, it even flagged a subtle bug related to a race condition in my async code, something I’d missed during initial testing. The feedback was clear and immediately actionable.
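
For readers who haven’t run into one, here is a minimal sketch of the kind of async race condition described above. It is a hypothetical illustration, not the code from my project: `run_unsafe` and `run_safe` are made-up names, and the shared counter stands in for any state that several tasks read and then write.

```python
import asyncio


async def run_unsafe(n: int) -> int:
    """Increment a shared counter from n tasks without any locking."""
    state = {"count": 0}

    async def increment() -> None:
        current = state["count"]
        # Yielding here lets other tasks read the same stale value.
        await asyncio.sleep(0)
        state["count"] = current + 1

    await asyncio.gather(*(increment() for _ in range(n)))
    return state["count"]


async def run_safe(n: int) -> int:
    """Same work, but the read-modify-write is guarded by a lock."""
    state = {"count": 0}
    lock = asyncio.Lock()

    async def increment() -> None:
        async with lock:
            current = state["count"]
            await asyncio.sleep(0)
            state["count"] = current + 1

    await asyncio.gather(*(increment() for _ in range(n)))
    return state["count"]


print(asyncio.run(run_unsafe(10)))  # lost updates: fewer than 10
print(asyncio.run(run_safe(10)))    # 10
```

The bug is easy to miss in review because each line looks correct on its own; the problem only appears when an `await` sits between the read and the write.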

Pros of Pull Sense

- Instant AI feedback on pull requests helps catch bugs, security issues, and improvement opportunities early.

- Seamless GitHub integration with no complex setup required.

- BYOK (bring your own key) model gives you control over which AI service is used, with added privacy.

- Customizable models allow you to tailor the feedback to your coding style or team standards.

- Speeds up PR workflows by reducing time spent on manual reviews.

- Security-first design ensures your code stays private.

Cons of Pull Sense

- Currently limited to GitHub (no support for GitLab or Bitbucket).

- Some feedback can be overly general depending on the AI provider used.

- Lacks team collaboration features like comment threads or review assignments.

Pricing

Free (BYOK – Basic): $0/month – Best suited for individuals and small teams using their own AI keys, with access to essential review features.

Pro (BYOK – Advanced): $4/month – Geared toward growing teams wanting more advanced controls and integrations, still using their own AI providers.

CodeAnt AI

CodeAnt AI is designed to accelerate the code review process and ensure high-quality, secure code with its AI-driven capabilities.

My Experience with CodeAnt AI

I tested CodeAnt AI on a large codebase during a sprint, and the speed and efficiency were immediately noticeable. After making several changes across different parts of the code, I ran the AI review, and it suggested automatic fixes for code quality and security flaws, helping me cut my review time. It also provided a detailed summary of changes, focusing on code quality, application security, and infrastructure security.

The integration was simple: I connected my GitHub account, and the tool handled the rest. One feature I found useful was the one-click fixes for common issues, such as unused variables and insecure API endpoints, which saved me time on manual corrections.

Pros of CodeAnt AI

- One-click fixes for code quality and security issues, making it easier to maintain standards.

- Works with all major version control platforms, including GitHub, GitLab, Bitbucket, and Azure DevOps.

- Supports all programming languages, IDEs, and CI/CD tools, making it adaptable to any tech stack.

- Detailed AI PR summary highlighting critical code quality, application, and infrastructure security concerns.

Cons of CodeAnt AI

- Pricing might be prohibitive for smaller teams or individual developers.

- One-click fixes, while convenient, can sometimes miss context-specific issues that require manual intervention.

- Does not offer advanced review capabilities like expert human oversight.

Pricing

AI Code Review: $10 per user per month. Automates code reviews and flags issues quickly.

Code Quality Platform: $15 per user per month. Helps clean up your entire codebase, ensuring long-term quality and security.

Sweep AI

Sweep is the AI coding assistant built specifically for JetBrains IDEs, finally giving JetBrains users access to modern AI features without having to compromise on their preferred development environment.

My Experience with Sweep for JetBrains

As a long-time JetBrains user, I found Sweep to be a useful addition to my workflow.

Sweep provided contextual suggestions based on my open files and worked offline, ensuring no data leakage, which is important for sensitive client work. Its ability to pull relevant code context without needing manual input saved a lot of time.

Pros of Sweep AI

- JetBrains-native experience: finally, an AI assistant built for JetBrains users.

- Self-hostable plugin that keeps your code private and secure.

- Automatically detects file and codebase context, eliminating manual setup.

- Seamless AI integration: no need to switch editors or compromise on features.

- Offline/on-prem deployment options, ideal for teams working on sensitive or proprietary projects.

- Smooth UX that matches JetBrains’ high standards, unlike most third-party AI plugins.

Cons of Sweep AI

- Limited to JetBrains IDEs, so it is not usable if your team works in multiple environments.

- 500 chat limit on the Pro plan, which might be restrictive for heavy daily use.

- Lacks team collaboration features or review capabilities found in broader AI DevOps platforms.

Pricing

Sweep Pro is $25 per month and includes 500 AI chats monthly.

DeepCode AI

I included DeepCode AI by Snyk in this list for its focus on security-driven code analysis and real-time reviews. Its hybrid strategy means that, rather than depending on a single AI model, it blends symbolic AI with generative AI, both trained specifically on security-centric datasets curated by Snyk’s experts.

My Experience with DeepCode

In my experience, DeepCode AI has been a reliable tool for detecting and resolving security flaws in my codebase. Its integration with tools like GitHub and editors such as Visual Studio Code fits easily into my workflow. I also appreciate its continuous monitoring, which helps with code security throughout development.

That said, there are a few drawbacks. Its language support isn’t universal, which can be restrictive depending on the tech stack. Moreover, the pricing could be a hurdle for developers or teams with tight budgets.

Pros of DeepCode

- DeepCode AI leverages a combination of symbolic and generative models, optimized with security-specific data to reduce hallucinations and improve precision.

- The tool suggests in-line code fixes that are automatically verified to avoid introducing new issues.

- Developers can define their own queries using DeepCode’s logic, supported by autocomplete features that simplify the process of building, testing, and saving custom rules.

- The tool also minimizes the volume of code the large language model needs to analyze, which speeds up processing and improves the quality of AI-generated fixes by lowering the chance of hallucinations.

Cons of DeepCode

- Snyk does not support all programming languages, limiting its use in some projects.

- The team plan with premium features might be costly for smaller teams or individual developers.

Pricing

Depending on your requirements, its features are accessible through either Snyk’s free plan or a paid option such as the Teams plan at $25/month.

CodePeer

CodePeer is another AI code analyzer that focuses on speed, clarity, and accountability. It streamlines collaboration by helping reviewers stay on track and avoid redundant work. It makes code reviews more human-friendly without compromising structure.

My Experience with CodePeer

CodePeer has a clean, intuitive interface that helps keep everyone on the same page. I recently used it on a team project where we were pushing multiple PRs daily. Features like turn-tracking and task progression helped reduce confusion about who should act next.

The progress tracking helped during a paused review session. When I returned, CodePeer resumed right where I left off, so I didn’t have to recheck files or revisit earlier comments.

Pros of CodePeer

- Smart commenting system that keeps feedback visible, actionable, and in context.

- Turn-tracking removes the back-and-forth confusion during collaborative reviews.

- Progress tracking ensures you never review the same code twice.

- Blocker management helps enforce resolution before merging.

- Clear task management shows what’s left to review and resolve.

- Free for open source projects, making it easy to adopt in personal or community-driven work.

Cons of CodePeer

- Lacks AI-enhanced suggestions or automatic code insights found in newer review tools.

- May not scale well for large enterprise workflows or deep integrations.

- No built-in support for code quality/security scans, so you’ll need to pair it with other tools.

Pricing

The free version is available for open-source projects and individual/personal use, while the pro version is $8 per user/month.

PullRequest

PullRequest bridges the gap between automated AI feedback and seasoned human expertise, making it an ideal choice for teams that value both speed and mentorship.

My Experience with PullRequest

What I like most about PullRequest is that it doesn’t just throw AI suggestions at you; it backs them up with reviews from real software engineers who have security expertise. I tried it during a critical update of a fintech application, and within 90 minutes, we received a review that flagged a potential input sanitization issue in a legacy module.

Pros of PullRequest

- Combines AI code analysis with expert human reviews for high-trust, production-ready feedback.

- Security-first design helps proactively catch and fix vulnerabilities early.

- Developer-first experience: feedback appears inside your existing tools without disruption.

- Supports major source control platforms, including GitHub, GitLab, Bitbucket, and Azure DevOps.

- Great for team onboarding, with a focus on knowledge sharing and mentorship.

Cons of PullRequest

- Premium pricing makes it best suited for enterprise or security-critical projects.

- Lacks flexible pricing tiers for small teams or individual developers.

- Not ideal for non-security-related, lightweight code changes due to the per-developer pricing.

Pricing

Secure Code Review for Teams: $129 per developer per month (billed annually). Includes AI-enhanced reviews, expert feedback, and a median turnaround time of 90 minutes. A two-week free trial is available for teams looking to test its capabilities.

Graphite Diamond

Graphite’s Diamond is an AI-powered code review assistant that actively scans new pull requests and leaves contextual feedback as if it were a vigilant senior engineer. It doesn’t just point out syntax issues; it dives deep into logic flaws, edge cases, and even suspicious copy-paste patterns, helping your team catch bugs before they hit production.

My Experience with Graphite Diamond

During a sprint, I submitted a PR involving permission checks for a settings API. Diamond pointed out that the code was checking read permissions but still writing to the database, a copy-paste issue I had missed. It also referenced similar past PRs to explain the context.

The stats dashboard offered a summary of how often it flagged issues, how many led to changes, and how frequently reviewers agreed with the suggestions.
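
To show what that read-versus-write mix-up can look like, here is a hypothetical reconstruction in Python. It is not the code from my PR: the `User` class, the permission strings, and the in-memory `SETTINGS` dictionary (standing in for the database) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class User:
    name: str
    permissions: Set[str] = field(default_factory=set)


class PermissionDenied(Exception):
    pass


SETTINGS: Dict[str, str] = {"theme": "light"}  # stand-in for a database table


def update_setting_buggy(user: User, key: str, value: str) -> None:
    # Copy-pasted from a read handler: checks "settings:read" but then writes.
    if "settings:read" not in user.permissions:
        raise PermissionDenied(user.name)
    SETTINGS[key] = value


def update_setting_fixed(user: User, key: str, value: str) -> None:
    # A write path should require the write permission.
    if "settings:write" not in user.permissions:
        raise PermissionDenied(user.name)
    SETTINGS[key] = value


viewer = User("viewer", {"settings:read"})
update_setting_buggy(viewer, "theme", "dark")  # succeeds, which is the bug
try:
    update_setting_fixed(viewer, "theme", "light")
except PermissionDenied:
    print("write correctly rejected for read-only user")
```

The buggy version passes a casual read because the permission check is present; only the permission name is wrong, which is exactly the kind of detail a copy-paste slip leaves behind.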

Pros of Graphite Diamond

- Catches deep logic errors and subtle edge cases, not just surface-level issues.

- Learns from past pull requests and comments, building context over time.

- Leaves intelligent comments directly on your PRs with suggested fixes.

- Great feedback loop with reviewer stats and comment effectiveness metrics.

- Integrates seamlessly into GitHub, requiring minimal setup.

Cons of Graphite Diamond

- Not suited for smaller teams or individuals as it is only available at the organization level.

- Premium pricing can be a barrier for budget-conscious organizations.

Pricing

The price is $20 per committer/month, billed across the organization.

Korbit AI

Korbit is an AI code reviewer for GitHub that brings clarity, speed, and quality to your pull request workflow. Korbit provides code analysis features like bug detection, summary generation, and answering queries, aiming to support various stages of the review process.

My Experience with Korbit

Korbit’s suggestions showed a good level of context awareness. During one pull request involving backend pagination, it flagged an off-by-one error and suggested a fix. It also updated the PR description to align with the change.
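
As a concrete picture of an off-by-one error in pagination, consider the following hypothetical sketch. It is not the code from that pull request; the function names and the 1-based page convention are assumptions chosen for the example.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def paginate_buggy(items: Sequence[T], page: int, page_size: int) -> List[T]:
    """Return one page of items (1-based page numbers). Contains an off-by-one bug."""
    # Bug: treats the 1-based page number as if it were 0-based,
    # so page 1 silently skips the first `page_size` items.
    start = page * page_size
    return list(items[start:start + page_size])


def paginate_fixed(items: Sequence[T], page: int, page_size: int) -> List[T]:
    """Return one page of items (1-based page numbers)."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be >= 1")
    # Convert the 1-based page number to a 0-based offset.
    start = (page - 1) * page_size
    return list(items[start:start + page_size])


records = list(range(1, 11))  # ten hypothetical records, 1..10
print(paginate_buggy(records, page=1, page_size=3))  # [4, 5, 6]
print(paginate_fixed(records, page=1, page_size=3))  # [1, 2, 3]
```

Bugs like this tend to survive manual review because every page still returns plausible-looking data; only the first page’s worth of records goes missing.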

Pros of Korbit

- Thorough context-based reviews that go beyond linting.

- Interactive PR bot that explains, guides, and teaches.

- Automatically generates clean, human-readable PR descriptions.

- Secure by design – no data retention or model training on your code.

- Works across all programming languages.

Cons of Korbit

- GitHub-only – no current support for GitLab, Bitbucket, or Azure DevOps.

- Annual billing only – no monthly payment option, which might be inconvenient for smaller teams.

Pricing

Korbit Pro: $9/user/month (billed annually).

Sourcegraph Cody

Cody is an AI coding assistant developed by Sourcegraph. It offers suggestions with awareness of the broader codebase, including documentation and established development patterns, making it more context-sensitive than standard autocomplete tools.

My Experience with Cody

I tested Cody using the VS Code extension to review and refactor a chunk of backend logic. Cody pointed out a few improvements, such as missing input validation and type hints, along with example code and explanations. The suggestions were specific and aligned well with the existing codebase.

Cody is also helpful when you’re onboarding to a new repo or diving into a large unfamiliar codebase. It explains things clearly, suggests tests, and even catches code smells, all while feeling native to your IDE.
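
The input validation and type hint suggestions mentioned above generally follow a before-and-after pattern like the one below. This is a hypothetical sketch rather than Cody’s actual output; the discount function and its bounds are invented for illustration.

```python
def apply_discount_before(price, percent):
    # No type hints and no validation: a percent above 100 slips through.
    return price - price * percent / 100


def apply_discount_after(price: float, percent: float) -> float:
    """Return `price` reduced by `percent`, where percent is between 0 and 100."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price - price * percent / 100


print(apply_discount_before(100, 150))    # -50.0, a negative price
print(apply_discount_after(100.0, 25.0))  # 75.0
```

The type hints document the expected inputs for the next reader, and the explicit checks turn a silent bad result into an immediate, descriptive error.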

Pros of Cody

- Faster code generation: From one-liners to full functions in any language.

- Deep code insights: Explain code segments or entire repos in plain English.

- Rapid test creation: Write unit tests instantly.

- Code smell detection: Spot and refactor suboptimal patterns.

- Custom prompts: Tailor Cody to your team’s style or workflow.

- Multi-LLM support: Use GPT-4o, Claude 3.5, Gemini, Mixtral, or bring your own via Bedrock or Azure OpenAI.

- Context-aware suggestions: Cody knows your codebase, not just your cursor.

Cons of Cody

- Limited language coverage: Not every language is fully supported yet.

Pricing

Free for individual developers. Pro version is available for $9/month, ideal for small teams needing full access to Cody’s features.

Kodus Kody

Kody is an open-source AI code review agent from Kodus that is built to fit seamlessly into your Git workflow. It learns from your team’s code, standards, and feedback to deliver high-quality, contextual reviews that cover code quality, security, and performance.

My Experience with Kody

I tested Kody in a self-hosted GitHub environment, setting up custom natural language rules to match our team’s style guide. Kody adapted after a few PRs, and the feedback became more relevant and specific.

Pros of Kody

- Custom guidelines: Write your own standards in natural language.

- Contextual insights: Understands your team’s architecture and coding patterns.

- Full Git integration: Reviews land right inside your pull requests.

- Self-hosted: Keep all your code and data in-house.

- Security & performance checks: Covers more than just style or syntax.

- Open source: Customize and extend as needed.

Cons of Kody

- Initial tuning required: Needs setup to fully reflect your team’s norms.

- Smaller ecosystem: Still growing compared to older tools.

Pricing

Free for self-hosted use. Managed “Teams” plan available at $9 per developer/month for hosted deployments with full support.

Claude AI Sonnet

Claude AI Sonnet is an AI tool designed to assist with code reviews by leveraging the capabilities of Claude’s powerful language model. While primarily known for its text generation prowess, Sonnet is tailored for coding workflows, offering insightful feedback that spans beyond just code syntax. It helps identify logical errors, refactor code for efficiency, and ensure better documentation.

My Experience with Claude AI Sonnet

I used Claude AI Sonnet to review a module handling data processing and API calls.

Sonnet helped flag issues like inefficient loops, redundant code, and unclear naming. It also provided explanations for its suggestions, which made it easier to understand the reasoning behind certain changes. While not deeply tied to specific project context, it was still helpful for improving general code structure and readability.

Pros of Claude AI Sonnet

- Context-agnostic feedback: Works well with a variety of coding languages and frameworks.

- Bug and inefficiency detection: Flags errors like bad logic and redundancy.

- Code refactoring: Suggests improvements for cleaner, more efficient code.

- Actionable explanations: Breaks down suggestions and gives reasons for each change.

- Cross-language support: Useful for teams working with diverse tech stacks.

Cons of Claude AI Sonnet

- Limited contextual awareness: May not be as tailored to your specific codebase as other AI tools.

- Requires manual setup: Needs fine-tuning to fit your team’s specific guidelines and workflow.

Pricing

Free for individuals, Pro at $18/month, and Team at $25/user/month for collaborative features.

CloudAEye

CloudAEye enhances developer workflows by providing AI-powered code reviews and automated test failure analysis. Integrated with GitHub, CloudAEye speeds up the review process and identifies potential bugs, vulnerabilities, and logical errors with precision. It also generates comprehensive PR descriptions and detailed change breakdowns.

My Experience with CloudAEye

I integrated CloudAEye into my GitHub workflow and found its code reviews quick and insightful. This AI code analyzer caught a couple of security vulnerabilities in the PR that I had missed and even provided suggestions on how to address them. The PR descriptions were detailed, saving me time by summarizing all the changes clearly.

Pros of CloudAEye

- Automated code reviews with security vulnerability detection.

- Detailed PR descriptions and change breakdowns.

- Root cause analysis for test failures in CI/CD.

- Self-hosted LLM for enhanced data privacy.

- Comprehensive knowledge of your codebase for in-depth reviews.

Cons of CloudAEye

- Limited language support compared to some competitors.

- Higher pricing for teams may be a barrier for smaller companies.

Pricing

Free for individuals, $19.99/user/month for teams.

Sourcery

Sourcery is an AI code reviewer designed to find bugs, improve code quality, and enhance knowledge sharing. It provides instant, actionable feedback across 30+ programming languages and integrates directly with GitHub, GitLab, or your IDE. Sourcery helps catch critical issues early, with in-line code suggestions and improvements in every pull request.

My Experience with Sourcery

I used Sourcery on a backend project hosted on GitHub. It quickly pointed out potential bugs and security issues, offering suggestions to enhance code quality. The in-line feedback was clear and aligned well with the existing code style, which helped speed up the review process.

Pros of Sourcery

- Early bug detection: Quickly identifies bugs and security issues.

- In-line suggestions: Provides actionable code suggestions and improvements.

- Shift-left reviews: Works directly within your IDE to speed up the review process.

- Continuous learning: Sourcery learns from past reviews to refine future suggestions.

- Multi-language support: Works with 30+ languages.

Cons of Sourcery

- Limited language coverage: While it supports many languages, some may not be fully optimized.

- Pricing: The Pro version may be pricey for solo developers or small teams.

Pricing

Free for open-source projects. Pro version at $12 per seat/month.

Greptile

Greptile is an AI code reviewer designed to deliver smarter, faster reviews by deeply understanding your codebase. It provides natural-language summaries of every pull request, helping you grasp the context quickly.

My Experience with Greptile

I integrated Greptile into my GitHub workflow to help manage reviews across a large code repository. Greptile summarized pull requests in plain language, highlighting major changes and providing clear, context-aware, in-line suggestions. The “click-to-accept” quick fixes saved a lot of back-and-forth on minor issues.

It can understand your codebase and connect the dots between changes, dependencies, and impacts. However, at its current pricing, I would still prefer Qodo Merge.

Pros of Greptile

- Natural-language summaries: Quickly grasp what changed and why.

- Context-aware inline comments: Get bug alerts and code improvements right where you need them.

- Quick fix suggestions: Accept small fixes instantly with one click.

- Conversational review assistant: Chat with Greptile for more clarity or guidance.

- Full codebase context: Builds a complete code graph to understand architecture and dependencies.

- Supports 30+ languages: Versatile across most tech stacks.

- Real-time indexing: Updates with every commit for accurate reviews.

Cons of Greptile

- Pricing complexity: Charged per file changed, which may add up for large PRs.

Pricing

$0.45 per file changed, capped at $50 per developer/month.
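To see how the per-file pricing plays out, here is a small sketch of the arithmetic. It assumes the cap applies per developer per month exactly as stated above and ignores taxes, minimums, or plan changes; the function name and the sample file counts are illustrative only.

```python
import math

PRICE_PER_FILE = 0.45   # USD per file changed (from the pricing above)
MONTHLY_CAP = 50.00     # USD per developer per month (from the pricing above)


def monthly_cost(files_changed: int) -> float:
    """Estimated monthly charge for one developer (hypothetical helper)."""
    if files_changed < 0:
        raise ValueError("files_changed must be non-negative")
    return round(min(files_changed * PRICE_PER_FILE, MONTHLY_CAP), 2)


# Smallest number of changed files at which the cap takes effect.
files_to_hit_cap = math.ceil(MONTHLY_CAP / PRICE_PER_FILE)

if __name__ == "__main__":
    for n in (20, 100, files_to_hit_cap, 400):
        print(f"{n:>4} files -> ${monthly_cost(n):.2f}")
```

Under these assumptions, a developer who changes 112 or more files in a month pays the capped $50, while lighter months are billed per file.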

Codacy

Codacy is an automated code quality and analysis platform designed to help developers write clean, secure, and maintainable code faster. It integrates with your GitHub repositories and provides insights on every pull request, from static analysis and cyclomatic complexity to code coverage and security checks.
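Cyclomatic complexity, one of the metrics mentioned above, roughly counts the independent paths through a piece of code. The sketch below is a simplified approximation using Python's standard `ast` module (one plus the number of decision points). It is not Codacy's implementation, and the set of node types counted is my own simplifying assumption.

```python
import ast

# Node types treated as decision points (a simplifying assumption).
_DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                   ast.IfExp, ast.comprehension)


def approx_cyclomatic_complexity(source: str) -> int:
    """Return 1 + the number of decision points found in `source`."""
    tree = ast.parse(source)
    complexity = 1
    for node in ast.walk(tree):
        if isinstance(node, _DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra branches.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = """
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0 and d > 1:
            return "composite"
    return "other"
"""

if __name__ == "__main__":
    print(approx_cyclomatic_complexity(SAMPLE))
```

For the sample function this prints 5: one base path, two `if` statements, one `for` loop, and one `and`. Real analyzers count more constructs, so treat the number as a rough signal rather than an exact metric.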

My Experience with Codacy

I tried Codacy on a Node.js project to track quality issues and enforce consistent coding standards. The setup was simple: I connected my GitHub repo, and Codacy began analyzing every commit and PR. I liked its visual dashboard, which showed how our code quality evolved over time. It highlighted duplications, flagged security risks, and helped us catch problems early in the CI process.

Pros of Codacy

- Static analysis: Catches issues like complexity, duplication, and bad practices before they reach production.

- Security checks: Runs hundreds of checks and monitors vulnerabilities in a central dashboard.

- Code coverage integration: Tracks and improves test coverage through your CI pipeline.

- Multi-language support: Works with over 20 languages and popular linters.

- Pull request integration: Feedback appears directly in your GitHub PRs and commits.

- Custom quality settings: Set thresholds and enforce standards on every contribution.

Cons of Codacy

- Limited customization for advanced workflows: Some teams may find flexibility lacking.

- Performance on large repos: Can be slower to process very large or monorepo-style projects.

- Pro version is paid: Full access to features requires a subscription.

Pricing

Free forever for individuals and open-source projects. Pro plan starts at $21 per user/month.

Comparison of Top AI Code Review Tools in 2025

The following table summarizes key features, pricing, and strengths of leading AI code review tools to help you choose the best fit for your development workflow:

| Tool | Pricing | Key Features |

| Qodo | Free (basic), $15/user/month (team) | Best I’ve used; slash commands (/review, /describe), PR summaries, GitHub/GitLab/Bitbucket integration, severity-based issue prioritization |

| Traycer | $8/month (Lite, annual billing) | Task-based analysis, edge-case detection, workspace-level reviews, Discord support |

| Bito | $15/user/month (Pro) | PR summaries, security integrations (Snyk), one-click fixes, onboarding acceleration |

| GitHub Copilot | Free (individual), $4/user/month (team) | AI-generated PR descriptions, in-IDE code explanations, multi-language support |

| CodeRabbit | Free (basic), $12-24/user/month (Lite/Pro) | Jira integration, line-by-line feedback, customizable review depth |

| Pull Sense | Free (BYOK), $4/user/month (Pro) | BYOK privacy model, GitHub-only, security-first design, instant feedback |

| CodeAnt AI | $10-15/user/month | One-click fixes, multi-platform support (GitHub/GitLab), security/code quality summaries |

| Sweep AI | $25/month (Pro) | JetBrains-native, offline/on-prem options, context-aware suggestions |

| DeepCode AI | Free (basic), $25/month (Teams) | Hybrid symbolic + generative AI, security-focused, verified auto-fixes |

| CodePeer | Free (OSS), $8/user/month (Pro) | Turn-tracking, progress saving, minimalist UI, blocker management |

| PullRequest | $129/user/month (annual billing) | AI + human expert reviews, 90-minute turnaround, security-first |

| Graphite Diamond | $20/committer/month | Logic/edge-case detection, PR comment metrics, GitHub integration |

| Korbit AI | $9/user/month (annual billing) | Context-aware reviews, interactive PR bot, GitHub-only |

| Sourcegraph Cody | Free, $9/user/month (Pro) | Codebase-aware insights, test generation, multi-LLM support |

| Kody | Free (self-hosted), $9/user/month | Custom natural-language rules, Git integration, security/performance checks |

| Claude AI Sonnet | Free, $18-25/user/month | Cross-language support, bug/inefficiency detection, refactoring suggestions |

| CloudAEye | Free, $19.99/user/month | Security vulnerability detection, self-hosted LLM, test failure analysis |

| Sourcery | Free (OSS), $12/user/month | In-line suggestions, 30+ language support, shift-left IDE integration |

| Greptile | $0.45/file (capped at $50/user/month) | Natural-language PR summaries, codebase-aware reviews, quick fixes |

| Codacy | Free (basic), $21/user/month | Static analysis, security dashboards, multi-language linter support |

How to Choose the Right AI Code Review Tool

Selecting the ideal AI code review tool requires evaluating several key factors:

- Integration Capabilities: Compatibility with your version control system, IDEs, and CI/CD pipeline. Task tracker integration options (Jira, Linear, Asana).

- Technical Considerations: Programming language support and analysis depth. Repository size handling and performance impact. Customization options for coding standards.

- Team Dynamics: Consider team size, experience levels, and distribution. Assess existing review practices and culture.

- Business Factors: Pricing model suitability for your team structure. Security requirements and vendor stability.

When making your selection, focus on your team’s primary pain points. If quality consistency is your challenge, prioritize standardization features. For teams struggling with review speed, look for automation capabilities. Organizations with security concerns should evaluate security scanning integrations.
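One way to make this evaluation concrete is a weighted scoring matrix. The sketch below is only an illustration: the criteria mirror the factors listed above, but the weights, the tool names ("Tool A", "Tool B"), and the 1-5 scores are placeholder values that you would replace with your own team's assessment.

```python
from typing import Dict, List, Tuple

# Placeholder weights; shift them toward your team's main pain points.
WEIGHTS: Dict[str, float] = {
    "integration": 0.30,
    "technical": 0.30,
    "team_fit": 0.20,
    "business": 0.20,
}

# Placeholder 1-5 scores for two hypothetical tools (not real evaluations).
SCORES: Dict[str, Dict[str, int]] = {
    "Tool A": {"integration": 5, "technical": 3, "team_fit": 4, "business": 2},
    "Tool B": {"integration": 3, "technical": 4, "team_fit": 4, "business": 5},
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted average of per-criterion scores; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[c] * scores[c] for c in weights)


def rank_tools(all_scores: Dict[str, Dict[str, int]],
               weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (tool, score) pairs sorted from best to worst."""
    ranked = [(tool, weighted_score(s, weights)) for tool, s in all_scores.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for tool, score in rank_tools(SCORES, WEIGHTS):
        print(f"{tool}: {score:.2f}")
```

The value of the exercise is less the final number than the conversation it forces about which factors your team actually cares about.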

Start by defining what you hope to achieve (faster reviews, better onboarding, quality improvement, or security enhancement) and choose accordingly. I love that Qodo Merge takes a highly tailored approach to solving the unique challenges teams face in code reviews. It isn’t just about automating tasks; it’s about addressing the pain points that matter most.

Practical Recommendations for Using AI Code Review Tools

- Integration Strategies: Start with a pilot on non-critical repositories. Create clear guidelines for AI vs. human review requirements. Establish a feedback loop for improving configurations.

- Avoiding Common Pitfalls: Don’t replace human reviews entirely, because AI tools miss context and nuance. Regularly update rules as your codebase evolves. Start with core features before enabling all checks.

- Balanced Implementation: Use AI for initial screening of obvious issues. Reserve human review for architecture, readability, and business logic.

The most successful implementations of AI-powered code reviews view it as an augmentation of human expertise, not a replacement. They define complementary roles that leverage the strengths of both.
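The split between AI screening and human review can be written down as an explicit policy. The following sketch is a minimal illustration, assuming a hypothetical repository layout (paths such as `src/auth/` and `migrations/`) and made-up names like `ReviewPlan`; it is not tied to any particular tool's configuration.

```python
from dataclasses import dataclass
from fnmatch import fnmatch
from typing import List

# Hypothetical path patterns for code that warrants in-depth human review.
SENSITIVE_PATTERNS = ["src/auth/*", "src/billing/*", "migrations/*"]


@dataclass
class ReviewPlan:
    ai_screening: bool   # AI pass for obvious issues
    human_depth: str     # "light" or "full"; a human always reviews
    reason: str


def plan_review(changed_files: List[str]) -> ReviewPlan:
    """Pick a human review depth; AI screening always runs first."""
    sensitive = [
        f for f in changed_files
        if any(fnmatch(f, pattern) for pattern in SENSITIVE_PATTERNS)
    ]
    if sensitive:
        return ReviewPlan(True, "full", f"sensitive paths changed: {sensitive}")
    return ReviewPlan(True, "light", "no sensitive paths changed")


if __name__ == "__main__":
    print(plan_review(["docs/setup.md", "src/utils/strings.py"]))
    print(plan_review(["src/auth/session.py"]))
```

Keeping the policy in code (or in your CI configuration) makes the complementary roles visible and easy to revise as the codebase evolves.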

Summary

The developer community’s relationship with AI tools continues to evolve. GitHub’s 2024 Open Source Survey reveals that 73% of open source contributors now use AI tools like GitHub Copilot for coding or documentation, a strong signal of growing acceptance.

Perhaps the most practical insight came from one pragmatic voice in the discussion: “Nothing works until we’ve tried it.”

For now, AI code reviews work best as augmentation rather than replacement. They handle the tedious parts while humans tackle the nuanced judgments requiring context, creativity, and collaboration.

The jury’s still out on whether teaching our digital creations to critique us was genius or hubris, but isn’t that tension what makes engineering so interesting in the first place?

FAQs

What are the best GitHub AI code review tools?

For GitHub repositories, Qodo Merge stands out as the best AI code review tool currently available. Its intuitive slash commands, automatic PR description generation, and context-aware suggestions make it particularly effective within the GitHub ecosystem. Other options include GitHub Copilot for PRs and CodeRabbit, which both offer solid integration with GitHub’s workflow.

What are the best Bitbucket AI code review tools?

For Bitbucket environments, Qodo Merge offers excellent support with its cross-platform compatibility. Traycer also works effectively with Bitbucket, providing flexible analysis options that integrate well with Bitbucket’s PR workflow. When selecting a tool for Bitbucket specifically, look for one that maintains feature parity across platforms rather than those optimized primarily for GitHub.

Can AI code reviewers replace human reviewers?

No, AI code reviewers cannot fully replace human reviewers. While AI excels at consistency, pattern matching, and detecting common issues, it struggles with understanding business context, evaluating architectural decisions, assessing readability, and providing mentorship. The most effective approach combines AI’s systematic analysis with human judgment and experience. AI should be viewed as a powerful assistant that handles routine checks, freeing humans to focus on higher-level concerns.

Do AI code reviews significantly improve code quality?

Yes, when properly implemented, AI code reviews can significantly improve code quality. They ensure consistent adherence to standards, catch common bugs and anti-patterns early, identify security vulnerabilities, and reduce the cognitive load on human reviewers. Teams typically report fewer production issues and more consistent codebases after implementing AI review tools alongside human reviews. The key is using AI to enhance the code review process rather than cutting corners.

Are AI code reviews reliable for finding critical issues?

AI code reviews are increasingly reliable for finding certain types of critical issues, particularly security vulnerabilities following known patterns, performance bottlenecks in common algorithms, and memory management issues. However, they remain less effective at identifying logical errors, edge cases, and domain-specific problems that require deeper contextual understanding. Critical systems should still receive thorough human review, with AI serving as an additional safety net.

How much developer oversight is needed when using AI tools?

Developer oversight requirements depend on codebase complexity and risk level. For routine changes in well-established codebases, light oversight may be sufficient. For core functionality or security-sensitive areas, significant oversight remains necessary. Architectural changes still require full human review. Most teams find that AI tools reduce review time by 30-50% while maintaining quality, but complete elimination of human oversight is not recommended in any production environment.

What’s the future potential for AI in code reviewing?

The future of AI code review is promising. We can expect developments including deeper understanding of architectural patterns and business logic, improved reasoning about code behavior across complex systems, more effective learning from team-specific patterns, and integration with requirement tracking to verify implementation correctness. As large language models continue to advance, we’ll see AI reviewers that better understand context, provide more nuanced feedback, and potentially participate in higher-level design discussions.